HDFS High Availability

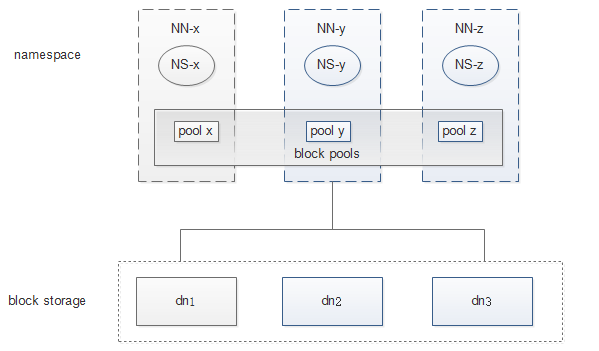

Federated HDFS

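Because the namenode keeps the mapping between files and data blocks in memory, memory becomes the bottleneck for scaling out a cluster that stores a very large number of files. Hadoop 2.x introduced HDFS Federation to solve this. It adds more namenodes to the cluster, and each one manages a portion of the filesystem namespace.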

Federation architecture:

How it works

1. Each namenode is independent and maintains its own namespace volume (NS-x in the figure). A namespace volume consists of the namespace metadata plus a block pool.

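2. A block pool contains all the blocks of the files in its namespace. Namespace volumes are independent and never communicate with each other, so the failure of one namenode does not affect the others.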

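3. Datanodes act as common storage for all namenodes. Each datanode:
- registers with every namenode in the cluster;
- periodically sends heartbeats and block reports to all of them;
- executes commands from all of them.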

4. When a namespace is deleted, its block pool is deleted on every datanode as well. When the cluster is upgraded, each namespace volume is upgraded as a unit.
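The four points above can be modeled in a few lines of Python. This is a toy sketch, not HDFS code. The `Cluster` and `DataNode` classes, the namespace names and the integer block IDs are all hypothetical. Blocks are placed round-robin and replication is ignored. The sketch shows two things: every datanode holds one block pool per namespace, and deleting a namespace removes only its own block pool.

```python
from __future__ import annotations
from dataclasses import dataclass, field


@dataclass
class DataNode:
    """Common storage: keeps blocks separately for each block pool."""
    name: str
    pools: dict[str, set[int]] = field(default_factory=dict)


@dataclass
class Cluster:
    datanodes: list[DataNode]
    # One entry per namespace volume: namespace -> {path: [block IDs]}
    namespaces: dict[str, dict[str, list[int]]] = field(default_factory=dict)

    def add_namespace(self, ns: str) -> None:
        # A new namenode brings its own block pool; every datanode registers with it.
        self.namespaces[ns] = {}
        for dn in self.datanodes:
            dn.pools.setdefault(ns, set())

    def write(self, ns: str, path: str, block_ids: list[int]) -> None:
        # Blocks belong only to the block pool of the file's namespace.
        # Round-robin placement, no replication (simplification).
        self.namespaces[ns][path] = block_ids
        for i, block in enumerate(block_ids):
            self.datanodes[i % len(self.datanodes)].pools[ns].add(block)

    def delete_namespace(self, ns: str) -> None:
        # Deleting a namespace drops its block pool on every datanode;
        # the other namespace volumes are untouched.
        del self.namespaces[ns]
        for dn in self.datanodes:
            dn.pools.pop(ns, None)


dns = [DataNode("node1"), DataNode("node2"), DataNode("node3")]
cluster = Cluster(dns)
cluster.add_namespace("NS-1")
cluster.add_namespace("NS-2")
cluster.write("NS-1", "/logs/a", [101, 102])
cluster.write("NS-2", "/user/b", [201])
cluster.delete_namespace("NS-1")
print(dns[0].pools)  # {'NS-2': {201}}
```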

Drawbacks of federated HDFS

Multiple namenodes now manage separate namespaces, but each individual namenode is still a single point of failure (SPOF). If a namenode fails, clients can no longer access any file in its namespace.

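Federated HDFS therefore still relies on a secondary namenode. However, the secondary namenode only creates checkpoints and cannot take over. To recover from a failed namenode, an administrator has to start a new namenode from a copy of the metadata. The new namenode cannot serve requests until it has done all of the following: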

1. Loaded the namespace image into memory

2. Replayed the edit log

3. Received enough block reports from the datanodes to leave safe mode

HA with NAS

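To keep the cluster available, a NAS can be used as shared storage, with the active and standby namenodes synchronizing metadata through it. This approach has the following drawbacks: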

1. The hardware must support NAS.

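2. Deployment is complex and error-prone. After deploying the namenodes you still have to mount NFS and deal with NFS's own single point of failure and split-brain.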

3. It cannot guarantee on its own that only one namenode writes data at any given time.

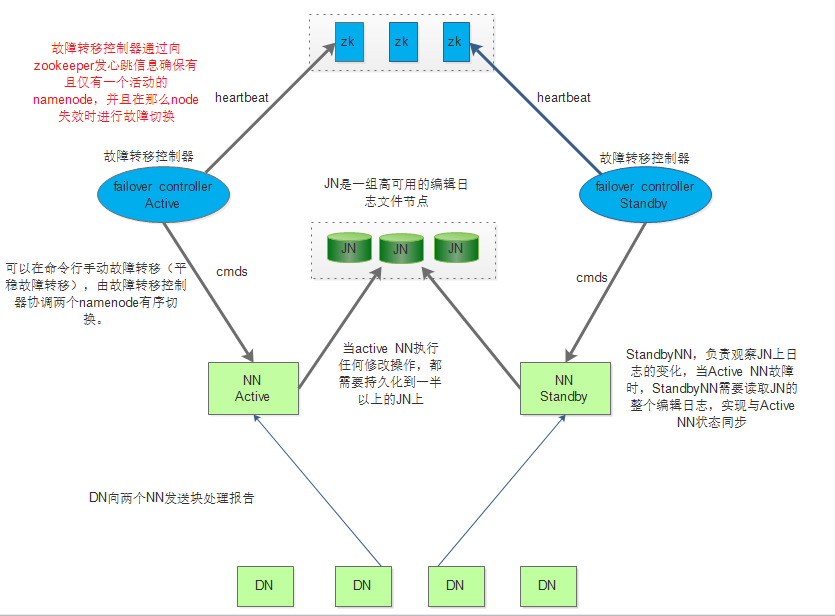

HA with QJM

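To address these problems, Hadoop 2 added QJM (Quorum Journal Manager). A QJM consists of several JournalNodes (JNs), usually an odd number of them. The HA cluster runs a pair of namenodes in an active-standby configuration. When the active namenode fails, the standby namenode takes over and continues serving requests.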
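Why an odd number of JournalNodes? An edit is committed only when a majority (quorum) of JNs has written it. With N JournalNodes the system therefore tolerates at most (N - 1) / 2 failures, and the Hadoop documentation asks for at least three. The short Python sketch below does that arithmetic; the function names are illustrative. It shows that a fourth JN raises the quorum size without tolerating any extra failure.

```python
def quorum_size(n: int) -> int:
    """Smallest strict majority of n JournalNodes."""
    return n // 2 + 1


def tolerated_failures(n: int) -> int:
    """JournalNodes that may fail while a majority can still be reached."""
    return (n - 1) // 2


for n in (3, 4, 5):
    print(f"{n} JNs: quorum={quorum_size(n)}, tolerated failures={tolerated_failures(n)}")
# 3 JNs: quorum=2, tolerated failures=1
# 4 JNs: quorum=3, tolerated failures=1
# 5 JNs: quorum=3, tolerated failures=2
```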

How it works:

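1. The namenodes share the edit log through a group of JournalNodes. The standby namenode reads the shared edit log up to its end to synchronize its state with the active namenode. It then keeps reading new operations as the active namenode writes them.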

2. Datanodes must send block reports to both the active and the standby namenode. This is necessary because the block mappings are kept in namenode memory, not on disk.

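3. Namenode failure is handled by ZKFC (ZooKeeper Failover Controller). A ZKFC process runs on each namenode host and monitors its local namenode with heartbeats. It triggers a failover if the active namenode fails. Clients handle failover transparently through the client-side failover proxy provider.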

4. The standby namenode replaces the secondary namenode and takes periodic checkpoints of the active namenode's namespace.

5. QJM itself does not use ZooKeeper. ZooKeeper is used only to elect the active namenode.

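6. In some unusual cases, such as a slow network, a failover can be triggered while the previously active namenode is still running. Both namenodes may then consider themselves active, a condition called split-brain. QJM prevents this with fencing.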
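Point 6 says QJM fences the old writer. Below is a simplified Python model of how a quorum of JournalNodes can do this. It is loosely based on the epoch numbers that QJM persists; the `last-promised-epoch` and `last-writer-epoch` files appear in the JournalNode directory copied during installation.

The idea:
- A namenode that wants to write first obtains a new, larger epoch from a majority of JNs.
- Each JN then rejects writes that carry an older epoch.

The class and function names are hypothetical, and segment recovery is omitted.

```python
from __future__ import annotations


class JournalNode:
    """Toy JournalNode that only tracks epochs and accepted edits."""

    def __init__(self) -> None:
        self.last_promised_epoch = 0
        self.edits: list[tuple[int, str]] = []

    def new_epoch(self, epoch: int) -> bool:
        # Promise to ignore every writer with a smaller epoch from now on.
        if epoch <= self.last_promised_epoch:
            return False
        self.last_promised_epoch = epoch
        return True

    def journal(self, epoch: int, op: str) -> bool:
        # A writer whose epoch has been superseded is fenced out.
        if epoch < self.last_promised_epoch:
            return False
        self.edits.append((epoch, op))
        return True


def majority(jns: list[JournalNode]) -> int:
    return len(jns) // 2 + 1


def become_writer(jns: list[JournalNode], epoch: int) -> bool:
    """A namenode may write only after a majority has promised its epoch."""
    return sum(jn.new_epoch(epoch) for jn in jns) >= majority(jns)


def write(jns: list[JournalNode], epoch: int, op: str) -> bool:
    """An edit is committed only if a majority of JournalNodes accepts it."""
    return sum(jn.journal(epoch, op) for jn in jns) >= majority(jns)


jns = [JournalNode() for _ in range(3)]
print(become_writer(jns, 1), write(jns, 1, "mkdir /a"))  # True True   (nn1, epoch 1)
print(become_writer(jns, 2))                             # True        (nn2 takes over)
print(write(jns, 1, "mkdir /b"))                         # False       (old active fenced)
print(write(jns, 2, "mkdir /c"))                         # True
```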

NameNode active/standby election

Active/standby election for the NameNode (and the YARN ResourceManager) is performed by ActiveStandbyElector. It relies mainly on three ZooKeeper features: consistent writes, ephemeral nodes, and the watcher mechanism.

The election works as follows:

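1. Create the lock znode: if ZKFC finds its NameNode healthy, that NameNode is eligible to take part in the election.
   - If no election has taken place yet, ZKFC starts one by trying to create an ephemeral znode at /hadoop-ha/${dfs.nameservices}/ActiveStandbyElectorLock.
   - ZooKeeper's write consistency guarantees that only one creation succeeds.
   - The NameNode whose creation succeeds becomes the primary and switches to the Active state. The NameNode whose creation fails switches to Standby.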

2. Register a watcher: whether or not it created /hadoop-ha/${dfs.nameservices}/ActiveStandbyElectorLock, ZKFC then registers a Watcher with ZooKeeper on this znode. ActiveStandbyElector is mainly interested in the znode's NodeDeleted event.

3. Automatic re-election: if the Active NameNode becomes unhealthy, its ZKFailoverController deletes the ephemeral znode /hadoop-ha/${dfs.nameservices}/ActiveStandbyElectorLock.
   - The Standby NameNode receives the NodeDeleted event.
   - It immediately tries again to create /hadoop-ha/${dfs.nameservices}/ActiveStandbyElectorLock.
   - If the creation succeeds, the former Standby NameNode is elected primary and then switches to the Active state.

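4. If the whole machine running the Active NameNode goes down, its ZooKeeper session ends. Because the znode /hadoop-ha/${dfs.nameservices}/ActiveStandbyElectorLock is ephemeral, ZooKeeper then deletes it automatically, which also triggers an automatic failover.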
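The four steps above can be simulated without a real ZooKeeper ensemble. The sketch below is a toy in-memory model. `ToyZooKeeper` and `Elector` are hypothetical classes, not Hadoop or ZooKeeper APIs, and a session is identified simply by the NameNode's name. The model shows three behaviors:
- the first create of the lock wins;
- every elector watches the lock;
- re-election happens when the active node's session expires (step 4).

```python
from __future__ import annotations
from typing import Callable

LOCK = "/hadoop-ha/mycluster/ActiveStandbyElectorLock"


class ToyZooKeeper:
    """In-memory stand-in for ZooKeeper: ephemeral znodes plus one-shot watches."""

    def __init__(self) -> None:
        self.nodes: dict[str, str] = {}  # path -> owning session
        self.watches: dict[str, list[tuple[str, Callable[[], None]]]] = {}

    def create_ephemeral(self, path: str, session: str) -> bool:
        # Only one create can succeed, like ZooKeeper's consistent writes.
        if path in self.nodes:
            return False
        self.nodes[path] = session
        return True

    def watch(self, path: str, session: str, callback: Callable[[], None]) -> None:
        self.watches.setdefault(path, []).append((session, callback))

    def expire_session(self, session: str) -> None:
        # A dead session loses its watches, and its ephemeral znodes are deleted.
        for path in self.watches:
            self.watches[path] = [w for w in self.watches[path] if w[0] != session]
        for path, owner in list(self.nodes.items()):
            if owner == session:
                del self.nodes[path]
                for _, callback in self.watches.pop(path, []):  # NodeDeleted fires once
                    callback()


class Elector:
    """Hypothetical per-NameNode elector, loosely modeled on ActiveStandbyElector."""

    def __init__(self, name: str, zk: ToyZooKeeper) -> None:
        self.name, self.zk, self.state = name, zk, "STANDBY"

    def join_election(self) -> None:
        won = self.zk.create_ephemeral(LOCK, self.name)
        self.state = "ACTIVE" if won else "STANDBY"
        # Win or lose, watch the lock so that its deletion triggers a new election.
        self.zk.watch(LOCK, self.name, self.join_election)


zk = ToyZooKeeper()
nn1, nn2 = Elector("nn1", zk), Elector("nn2", zk)
nn1.join_election()
nn2.join_election()
print(nn1.state, nn2.state)       # ACTIVE STANDBY
zk.expire_session("nn1")          # nn1's machine goes down
print(zk.nodes[LOCK], nn2.state)  # nn2 ACTIVE
```

In this toy, nothing tells nn1 that it lost the lock, so its local state still reads ACTIVE. That stale view is exactly the risk described in the split-brain section that follows.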

NameNode split-brain

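Cause: a ZooKeeper client machine may be heavily loaded or in the middle of a JVM full GC. Its heartbeats to the ZooKeeper server may then not be sent in time. If this lasts longer than the configured ZooKeeper session timeout, the server considers the client's session expired and closes it. Such a "false death" can lead to what distributed systems call dual-master or split-brain.

For NameNodes, suppose NameNode1 is Active and NameNode2 is Standby. If the ZKFC process of NameNode1 falls into this false-death state, the ZooKeeper server concludes that NameNode1 is dead. Following the failover logic above, NameNode2 replaces NameNode1 and becomes Active. NameNode1, however, may still be running normally in the Active state.

NameNode1's ZKFailoverController may later recover, because the load drops or the full GC finishes, and notice that its ZooKeeper session has been closed. Even so, network delays and unpredictable CPU thread scheduling mean NameNode1 may still believe it is Active for some time. Both NameNode1 and NameNode2 are then Active and both serve clients. For a system like the NameNode, which demands strict data consistency, this is catastrophic: data becomes corrupted and cannot be recovered.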

Avoiding split-brain

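Hadoop prevents split-brain with fencing, which isolates the old Active NameNode so that it can no longer serve clients. It works through a breadcrumb znode:

1. After ZKFC creates the ephemeral znode /hadoop-ha/${dfs.nameservices}/ActiveStandbyElectorLock and becomes Active, it also creates a persistent znode at /hadoop-ha/${dfs.nameservices}/ActiveBreadCrumb. This znode also stores the Active NameNode's address.
2. When the Active NameNode shuts down normally, the ephemeral ActiveStandbyElectorLock znode is removed automatically, and ZKFC deletes the ActiveBreadCrumb znode.
3. If the ZooKeeper session closes abnormally instead, for example in the false-death case above, ActiveBreadCrumb survives because it is persistent.
4. When the other NameNode later wins the election, it notices the znode left behind by the previous Active NameNode and fences that old Active NameNode.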

Hadoop provides two fencing methods:

1) sshfence: log in to the target machine over SSH and kill the corresponding process;

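2) shell: run a user-defined shell script to isolate the corresponding process. It is configured as shell(...), e.g. shell(/bin/true) in the configuration below.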

Only after fencing has completed successfully does the winning ActiveStandbyElector call ZKFailoverController's becomeActive method. That call switches the NameNode to the Active state, and it starts serving clients.

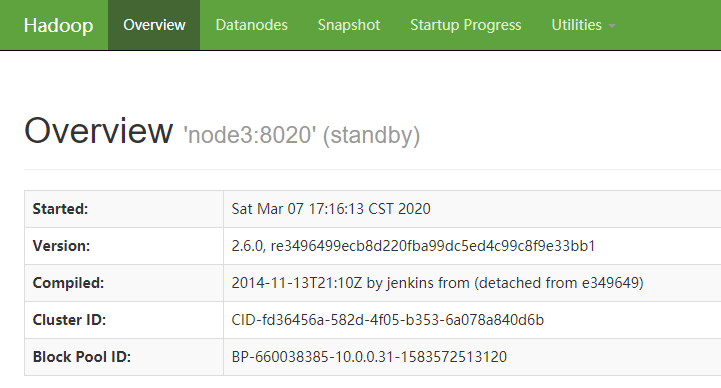

Installation

Install ZooKeeper (and start it)

https://www.cnblogs.com/zh-dream/p/12434514.html

Prepare the Hadoop cluster (do not start it yet)

https://www.cnblogs.com/zh-dream/articles/12416899.html

1. Edit hdfs-site.xml

    <!-- Put site-specific property overrides in this file. -->
    <configuration>
      <!-- Number of HDFS block replicas -->
      <property>
        <name>dfs.replication</name>
        <value>3</value>
      </property>
      <!--property>
        <name>dfs.namenode.secondary.http-address</name>
        <value>node3:50090</value>
      </property -->
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>/opt/module/ha/dfs/name1,/opt/module/ha/dfs/name2</value>
      </property>
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>/opt/module/ha/dfs/data1,/opt/module/ha/dfs/data2</value>
      </property>
      <property>
        <name>dfs.nameservices</name>
        <value>mycluster</value>
      </property>
      <property>
        <name>dfs.ha.namenodes.mycluster</name>
        <value>nn1,nn2</value>
      </property>
      <property>
        <name>dfs.namenode.rpc-address.mycluster.nn1</name>
        <value>node1:8020</value>
      </property>
      <property>
        <name>dfs.namenode.rpc-address.mycluster.nn2</name>
        <value>node3:8020</value>
      </property>
      <property>
        <name>dfs.namenode.http-address.mycluster.nn1</name>
        <value>node1:50070</value>
      </property>
      <property>
        <name>dfs.namenode.http-address.mycluster.nn2</name>
        <value>node3:50070</value>
      </property>
      <property>
        <name>dfs.namenode.shared.edits.dir</name>
        <value>qjournal://node1:8485;node2:8485;node3:8485/mycluster</value>
      </property>
      <property>
        <name>dfs.client.failover.proxy.provider.mycluster</name>
        <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
      </property>
      <!-- Fencing methods, one per line, tried in order until one succeeds -->
      <property>
        <name>dfs.ha.fencing.methods</name>
        <value>
          sshfence
          shell(/bin/true)
        </value>
      </property>
      <property>
        <name>dfs.ha.fencing.ssh.private-key-files</name>
        <value>/home/hdfs/.ssh/id_rsa</value>
      </property>
      <property>
        <name>dfs.ha.automatic-failover.enabled</name>
        <value>true</value>
      </property>
      <property>
        <name>ha.zookeeper.quorum</name>
        <value>node1:2181,node2:2181,node3:2181</value>
      </property>
    </configuration>
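Before distributing the file, it can help to check that the HA keys are consistent. The following Python sketch uses only the standard-library `xml.etree.ElementTree`; its helper functions are hypothetical and not part of Hadoop. It reads a subset of the hdfs-site.xml above and does two things:
- resolves the RPC address of every NameNode listed in `dfs.ha.namenodes.<nameservice>`;
- extracts the JournalNode hosts from the `qjournal://` URI.

A missing key raises `KeyError`, which is the point of the check.

```python
from __future__ import annotations
import xml.etree.ElementTree as ET

# Subset of the hdfs-site.xml shown above, embedded for a self-contained check.
HDFS_SITE = """<configuration>
  <property><name>dfs.nameservices</name><value>mycluster</value></property>
  <property><name>dfs.ha.namenodes.mycluster</name><value>nn1,nn2</value></property>
  <property><name>dfs.namenode.rpc-address.mycluster.nn1</name><value>node1:8020</value></property>
  <property><name>dfs.namenode.rpc-address.mycluster.nn2</name><value>node3:8020</value></property>
  <property><name>dfs.namenode.shared.edits.dir</name>
    <value>qjournal://node1:8485;node2:8485;node3:8485/mycluster</value></property>
  <property><name>dfs.ha.automatic-failover.enabled</name><value>true</value></property>
</configuration>"""


def load_properties(xml_text: str) -> dict[str, str]:
    """Flatten Hadoop-style <property><name/><value/></property> entries into a dict."""
    root = ET.fromstring(xml_text)
    return {
        p.findtext("name").strip(): (p.findtext("value") or "").strip()
        for p in root.iter("property")
    }


def namenode_rpc_addresses(props: dict[str, str]) -> dict[str, str]:
    """Map '<nameservice>.<nn-id>' to host:port; raises KeyError on a missing key."""
    result = {}
    for ns in props["dfs.nameservices"].split(","):
        for nn in props[f"dfs.ha.namenodes.{ns}"].split(","):
            result[f"{ns}.{nn}"] = props[f"dfs.namenode.rpc-address.{ns}.{nn}"]
    return result


def journal_nodes(props: dict[str, str]) -> list[str]:
    """Extract the JournalNode host:port list from the qjournal:// URI."""
    uri = props["dfs.namenode.shared.edits.dir"]
    prefix = "qjournal://"
    if not uri.startswith(prefix):
        raise ValueError(f"not a QJM URI: {uri}")
    return uri[len(prefix):].split("/", 1)[0].split(";")


props = load_properties(HDFS_SITE)
print(namenode_rpc_addresses(props))
# {'mycluster.nn1': 'node1:8020', 'mycluster.nn2': 'node3:8020'}
print(journal_nodes(props))
# ['node1:8485', 'node2:8485', 'node3:8485']
```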

2. Edit core-site.xml

    <!-- Put site-specific property overrides in this file. -->
    <configuration>
      <!-- Default file system: the logical HA nameservice, not a single NameNode host -->
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://mycluster</value>
      </property>
      <!-- Base directory for files Hadoop creates at runtime, including metadata and block data -->
      <property>
        <name>hadoop.tmp.dir</name>
        <value>/opt/module/ha</value>
      </property>
      <property>
        <name>hadoop.http.staticuser.user</name>
        <value>hdfs</value>
      </property>
      <!-- HDFS trash retention in minutes (1440 = 24 hours) -->
      <property>
        <name>fs.trash.interval</name>
        <value>1440</value>
      </property>
    </configuration>

3. Specify the DataNode hosts

    # cat slaves
    node1
    node2
    node3

4. Specify the NameNode hosts

    # vim masters
    node1
    node3

5. Sync the configuration files to the other nodes

    # for i in node{2,3};do scp /opt/module/hadoop-2.6.0/etc/hadoop/* $i:/opt/module/hadoop-2.6.0/etc/hadoop/;done

6. Sync the metadata

    # scp -r /opt/module/ha node3:/opt/module/
    VERSION                                        100%  200   356.4KB/s   00:00
    seen_txid                                      100%    2     1.7KB/s   00:00
    fsimage_0000000000000000000.md5                100%   62   106.4KB/s   00:00
    fsimage_0000000000000000000                    100%  351   605.5KB/s   00:00
    VERSION                                        100%  200   317.7KB/s   00:00
    seen_txid                                      100%    2     3.0KB/s   00:00
    fsimage_0000000000000000000.md5                100%   62    82.1KB/s   00:00
    fsimage_0000000000000000000                    100%  351   737.6KB/s   00:00
    VERSION                                        100%  229   454.0KB/s   00:00
    VERSION                                        100%  128   159.2KB/s   00:00
    dfsUsed                                        100%   18    45.9KB/s   00:00
    dncp_block_verification.log.curr               100%    0     0.0KB/s   00:00
    VERSION                                        100%  229   651.3KB/s   00:00
    VERSION                                        100%  128   374.4KB/s   00:00
    dfsUsed                                        100%   18    18.3KB/s   00:00
    VERSION                                        100%  155   468.9KB/s   00:00
    last-promised-epoch                            100%    2     3.2KB/s   00:00
    committed-txid                                 100%    8    17.3KB/s   00:00
    last-writer-epoch                              100%    2     4.9KB/s   00:00
    edits_0000000000000000024-0000000000000000025  100%   42    97.0KB/s   00:00
    edits_inprogress_0000000000000000026           100% 1024KB  26.5MB/s   00:00

7. Initialize the HA state in ZooKeeper

    # hdfs zkfc -formatZK
    20/03/07 15:59:09 INFO tools.DFSZKFailoverController: Failover controller configured for NameNode NameNode at node1/10.0.0.31:8020
    ... (ZooKeeper client environment and classpath output omitted) ...
    20/03/07 15:59:09 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=node1:2181,node2:2181,node3:2181 sessionTimeout=5000 watcher=org.apache.hadoop.ha.ActiveStandbyElector$WatcherWithClientRef@72f926e6
    20/03/07 15:59:09 INFO zookeeper.ClientCnxn: Opening socket connection to server node2/10.0.0.32:2181. Will not attempt to authenticate using SASL (unknown error)
    20/03/07 15:59:09 INFO zookeeper.ClientCnxn: Socket connection established to node2/10.0.0.32:2181, initiating session
    20/03/07 15:59:09 INFO zookeeper.ClientCnxn: Session establishment complete on server node2/10.0.0.32:2181, sessionid = 0x20000f15f9d0000, negotiated timeout = 5000
    20/03/07 15:59:09 INFO ha.ActiveStandbyElector: Successfully created /hadoop-ha/mycluster in ZK.
    20/03/07 15:59:09 INFO zookeeper.ZooKeeper: Session: 0x20000f15f9d0000 closed
    20/03/07 15:59:09 WARN ha.ActiveStandbyElector: Ignoring stale result from old client with sessionId 0x20000f15f9d0000
    20/03/07 15:59:09 INFO zookeeper.ClientCnxn: EventThread shut down

8. Verify that the znode was created

    # zkCli.sh -server node1:2181
    Connecting to node1:2181
    ... (client environment output omitted) ...
    Welcome to ZooKeeper!
    JLine support is enabled
    2020-03-07 16:01:17,420 [myid:node1:2181] - INFO [main-SendThread(node1:2181):ClientCnxn$SendThread@1394] - Session establishment complete on server node1/10.0.0.31:2181, sessionid = 0x10000f16a1c0001, negotiated timeout = 30000
    WATCHER::
    WatchedEvent state:SyncConnected type:None path:null
    [zk: node1:2181(CONNECTED) 0] ls /
    [hadoop-ha, zookeeper]

9. Start the JournalNodes

    # hadoop-daemons.sh start journalnode

10. Initialize the JournalNode (shared edits) metadata

# hdfs namenode -initializeSharedEdits 20/03/07 16:13:16 INFO namenode.NameNode: STARTUP_MSG: /************************************************************ STARTUP_MSG: Starting NameNode STARTUP_MSG: host = node1/10.0.0.31 STARTUP_MSG: args = [-initializeSharedEdits] STARTUP_MSG: version = 2.6.0 STARTUP_MSG: classpath = /opt/module/hadoop-2.6.0/etc/hadoop:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jackson-xc-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/netty-3.6.2.Final.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-collections-3.2.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jersey-json-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/apacheds-i18n-2.0.0-M15.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/hadoop-auth-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/hadoop-annotations-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-codec-1.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/httpcore-4.2.5.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-digester-1.8.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/asm-3.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jersey-core-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jettison-1.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jersey-server-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jetty-util-6.1.26.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/java-xmlbuilder-0.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/curator-recipes-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/api-asn1-a
pi-1.0.0-M20.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/gson-2.2.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/curator-client-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-lang-2.6.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/curator-framework-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/slf4j-api-1.7.5.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/guava-11.0.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/junit-4.11.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/api-util-1.0.0-M20.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/httpclient-4.2.5.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jetty-6.1.26.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jasper-runtime-5.5.23.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-httpclient-3.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/stax-api-1.0-2.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/htrace-core-3.0.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-compress-1.4.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-logging-1.1.3.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/hamcrest-core-1.3.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-net-3.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-io-2.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/servlet-api-2.5.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/xmlenc-0.52.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-cli-1.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jsr305-1.3.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-math3-3.1.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/paranamer-2
.3.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-el-1.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jasper-compiler-5.5.23.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jsch-0.1.42.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/xz-1.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jsp-api-2.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/mockito-all-1.8.5.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-configuration-1.6.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/zookeeper-3.4.6.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/avro-1.7.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jets3t-0.9.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/log4j-1.2.17.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/activation-1.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/hadoop-common-2.6.0-tests.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/hadoop-common-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/hadoop-nfs-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/asm-3.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/guava-11.0.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/xml-apis-1.3.04.jar:/opt/module/hadoop-2.
6.0/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/jasper-runtime-5.5.23.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/htrace-core-3.0.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/xercesImpl-2.9.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/commons-io-2.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/jsr305-1.3.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/commons-el-1.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/jsp-api-2.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/hadoop-hdfs-nfs-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/hadoop-hdfs-2.6.0-tests.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/hadoop-hdfs-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jackson-xc-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/netty-3.6.2.Final.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jackson-core-asl-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/commons-collections-3.2.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jersey-json-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/commons-codec-1.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jline-0.9.94.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/asm-3.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jersey-core-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jettison-1.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/j
ersey-server-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jetty-util-6.1.26.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jaxb-api-2.2.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/aopalliance-1.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/commons-lang-2.6.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/guava-11.0.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/javax.inject-1.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jackson-jaxrs-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jetty-6.1.26.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/commons-httpclient-3.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/stax-api-1.0-2.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/leveldbjni-all-1.8.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/commons-logging-1.1.3.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/guice-3.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jersey-client-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/commons-io-2.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/servlet-api-2.5.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/commons-cli-1.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jsr305-1.3.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jackson-mapper-asl-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jaxb-impl-2.2.3-1.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/xz-1.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/zookeeper-3.4.6.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/log4j-1.2.17.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/activation-1.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-api-2.6.0.jar:/opt/module/ha
doop-2.6.0/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-client-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-server-common-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-registry-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-server-tests-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-common-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/jackson-core-asl-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/hadoop-annotations-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/asm-3.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/javax.inject-1.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/junit-4.11.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/leveldbjni-all-1.8.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/guice-3.
0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/commons-io-2.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/xz-1.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.6.0-tests.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.6.0.jar:/opt/module/hadoop-2.6.0/contrib/capacity-scheduler/*.jar STARTUP_MSG: build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r e3496499ecb8d220fba99dc5ed4c99c8f9e33bb1; compiled by 'jenkins' on 2014-11-13T21:10Z STARTUP_MSG: java = 1.8.0_191 ************************************************************/ 20/03/07 16:13:16 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT] 20/03/07 16:13:16 INFO namenode.NameNode: createNameNode [-initializeSharedEdits] 20/03/07 16:13:17 WARN common.Util: Path /opt/module/ha/dfs/name1 should be specified as a URI in configuration files. 
Please update hdfs configuration.
20/03/07 16:13:17 WARN common.Util: Path /opt/module/ha/dfs/name2 should be specified as a URI in configuration files. Please update hdfs configuration.
20/03/07 16:13:17 WARN common.Util: Path /opt/module/ha/dfs/name1 should be specified as a URI in configuration files. Please update hdfs configuration.
20/03/07 16:13:17 WARN common.Util: Path /opt/module/ha/dfs/name2 should be specified as a URI in configuration files. Please update hdfs configuration.
20/03/07 16:13:17 WARN common.Util: Path /opt/module/ha/dfs/name1 should be specified as a URI in configuration files. Please update hdfs configuration.
20/03/07 16:13:17 WARN common.Util: Path /opt/module/ha/dfs/name2 should be specified as a URI in configuration files. Please update hdfs configuration.
20/03/07 16:13:17 INFO namenode.FSNamesystem: No KeyProvider found.
20/03/07 16:13:17 INFO namenode.FSNamesystem: fsLock is fair:true
20/03/07 16:13:17 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000
20/03/07 16:13:17 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true
20/03/07 16:13:17 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000
20/03/07 16:13:17 INFO blockmanagement.BlockManager: The block deletion will start around 2020 Mar 07 16:13:17
20/03/07 16:13:17 INFO util.GSet: Computing capacity for map BlocksMap
20/03/07 16:13:17 INFO util.GSet: VM type = 64-bit
20/03/07 16:13:17 INFO util.GSet: 2.0% max memory 966.7 MB = 19.3 MB
20/03/07 16:13:17 INFO util.GSet: capacity = 2^21 = 2097152 entries
20/03/07 16:13:17 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false
20/03/07 16:13:17 INFO blockmanagement.BlockManager: defaultReplication = 3
20/03/07 16:13:17 INFO blockmanagement.BlockManager: maxReplication = 512
20/03/07 16:13:17 INFO blockmanagement.BlockManager: minReplication = 1
20/03/07 16:13:17 INFO blockmanagement.BlockManager: maxReplicationStreams = 2
20/03/07 16:13:17 INFO blockmanagement.BlockManager: shouldCheckForEnoughRacks = false
20/03/07 16:13:17 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000
20/03/07 16:13:17 INFO blockmanagement.BlockManager: encryptDataTransfer = false
20/03/07 16:13:17 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000
20/03/07 16:13:17 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)
20/03/07 16:13:17 INFO namenode.FSNamesystem: supergroup = supergroup
20/03/07 16:13:17 INFO namenode.FSNamesystem: isPermissionEnabled = true
20/03/07 16:13:17 INFO namenode.FSNamesystem: Determined nameservice ID: mycluster
20/03/07 16:13:17 INFO namenode.FSNamesystem: HA Enabled: true
20/03/07 16:13:17 INFO namenode.FSNamesystem: Append Enabled: true
20/03/07 16:13:18 INFO util.GSet: Computing capacity for map INodeMap
20/03/07 16:13:18 INFO util.GSet: VM type = 64-bit
20/03/07 16:13:18 INFO util.GSet: 1.0% max memory 966.7 MB = 9.7 MB
20/03/07 16:13:18 INFO util.GSet: capacity = 2^20 = 1048576 entries
20/03/07 16:13:18 INFO namenode.NameNode: Caching file names occuring more than 10 times
20/03/07 16:13:18 INFO util.GSet: Computing capacity for map cachedBlocks
20/03/07 16:13:18 INFO util.GSet: VM type = 64-bit
20/03/07 16:13:18 INFO util.GSet: 0.25% max memory 966.7 MB = 2.4 MB
20/03/07 16:13:18 INFO util.GSet: capacity = 2^18 = 262144 entries
20/03/07 16:13:18 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033
20/03/07 16:13:18 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0
20/03/07 16:13:18 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000
20/03/07 16:13:18 INFO namenode.FSNamesystem: Retry cache on namenode is enabled
20/03/07 16:13:18 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis
20/03/07 16:13:18 INFO util.GSet: Computing capacity for map NameNodeRetryCache
20/03/07 16:13:18 INFO util.GSet: VM type = 64-bit
20/03/07 16:13:18 INFO util.GSet: 0.029999999329447746% max memory 966.7 MB = 297.0 KB
20/03/07 16:13:18 INFO util.GSet: capacity = 2^15 = 32768 entries
20/03/07 16:13:18 INFO namenode.NNConf: ACLs enabled? false
20/03/07 16:13:18 INFO namenode.NNConf: XAttrs enabled? true
20/03/07 16:13:18 INFO namenode.NNConf: Maximum size of an xattr: 16384
20/03/07 16:13:18 INFO common.Storage: Lock on /opt/module/ha/dfs/name1/in_use.lock acquired by nodename 10466@node1
20/03/07 16:13:18 INFO common.Storage: Lock on /opt/module/ha/dfs/name2/in_use.lock acquired by nodename 10466@node1
20/03/07 16:13:18 INFO namenode.FSImage: No edit log streams selected.
20/03/07 16:13:18 INFO namenode.FSImageFormatPBINode: Loading 1 INodes.
20/03/07 16:13:18 INFO namenode.FSImageFormatProtobuf: Loaded FSImage in 0 seconds.
20/03/07 16:13:18 INFO namenode.FSImage: Loaded image for txid 16 from /opt/module/ha/dfs/name1/current/fsimage_0000000000000000016
20/03/07 16:13:18 INFO namenode.FSNamesystem: Need to save fs image? false (staleImage=true, haEnabled=true, isRollingUpgrade=false)
20/03/07 16:13:18 INFO namenode.NameCache: initialized with 0 entries 0 lookups
20/03/07 16:13:18 INFO namenode.FSNamesystem: Finished loading FSImage in 211 msecs
20/03/07 16:13:19 INFO namenode.FileJournalManager: Recovering unfinalized segments in /opt/module/ha/dfs/name1/current
20/03/07 16:13:19 INFO namenode.FileJournalManager: Recovering unfinalized segments in /opt/module/ha/dfs/name2/current
20/03/07 16:13:19 INFO client.QuorumJournalManager: Starting recovery process for unclosed journal segments...
20/03/07 16:13:19 INFO client.QuorumJournalManager: Successfully started new epoch 1
20/03/07 16:13:19 INFO util.ExitUtil: Exiting with status 0
20/03/07 16:13:19 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at node1/10.0.0.31
************************************************************/
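Two lines in this output matter most. The first is `client.QuorumJournalManager: Successfully started new epoch 1`, which indicates that a quorum of JournalNodes accepted a new writer epoch. The second is `util.ExitUtil: Exiting with status 0`. The repeated WARN lines only ask for `/opt/module/ha/dfs/name1` and `/opt/module/ha/dfs/name2` to be written as URIs in the configuration (for example `file:///opt/module/ha/dfs/name1`).

The sketch below checks for both success markers. It assumes the command output was saved to a file, and the function name `check_init_shared_edits` is hypothetical:

```shell
# Sketch: scan the saved output of `hdfs namenode -initializeSharedEdits`
# for the two success markers that appear in the log above.
# The function name is hypothetical; pass whatever file you redirected to.
check_init_shared_edits() {
  local log_file="$1"
  # A quorum of JournalNodes must have accepted a new writer epoch.
  if ! grep -q 'QuorumJournalManager: Successfully started new epoch' "$log_file"; then
    echo "FAIL: no new journal epoch found in $log_file"
    return 1
  fi
  # The NameNode process must have exited cleanly.
  if ! grep -q 'ExitUtil: Exiting with status 0' "$log_file"; then
    echo "FAIL: NameNode did not exit with status 0"
    return 1
  fi
  echo "OK: shared edits initialized"
}
```

For example: `hdfs namenode -initializeSharedEdits > init.log 2>&1; check_init_shared_edits init.log`. The file name `init.log` is arbitrary. Grepping the saved output also works on a pasted copy of the log, like the one in this article.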

11. Start HDFS

```
# start-dfs.sh
Starting namenodes on [node1 node3]
node1: starting namenode, logging to /opt/module/hadoop-2.6.0/logs/hadoop-root-namenode-node1.out
node3: starting namenode, logging to /opt/module/hadoop-2.6.0/logs/hadoop-root-namenode-node3.out
node2: starting datanode, logging to /opt/module/hadoop-2.6.0/logs/hadoop-root-datanode-node2.out
node1: starting datanode, logging to /opt/module/hadoop-2.6.0/logs/hadoop-root-datanode-node1.out
node3: starting datanode, logging to /opt/module/hadoop-2.6.0/logs/hadoop-root-datanode-node3.out
Starting journal nodes [node1 node2 node3]
node1: starting journalnode, logging to /opt/module/hadoop-2.6.0/logs/hadoop-root-journalnode-node1.out
node2: starting journalnode, logging to /opt/module/hadoop-2.6.0/logs/hadoop-root-journalnode-node2.out
node3: starting journalnode, logging to /opt/module/hadoop-2.6.0/logs/hadoop-root-journalnode-node3.out
Starting ZK Failover Controllers on NN hosts [node1 node3]
node1: starting zkfc, logging to /opt/module/hadoop-2.6.0/logs/hadoop-root-zkfc-node1.out
node3: starting zkfc, logging to /opt/module/hadoop-2.6.0/logs/hadoop-root-zkfc-node3.out
```
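`start-dfs.sh` started three kinds of daemon:

- NameNodes on node1 and node3
- a DataNode and a JournalNode on each of the three nodes
- a ZKFC next to each NameNode

After the ZKFCs finish their ZooKeeper election, exactly one NameNode should report `active`. Two `active` answers would mean split brain.

The sketch below performs that check using `hdfs haadmin -getServiceState`. It assumes the NameNode IDs of the `mycluster` nameservice are `nn1` and `nn2`. Those IDs are hypothetical; the real ones are listed in `dfs.ha.namenodes.mycluster`.

```shell
# Sketch: after start-dfs.sh, ask every NameNode for its HA state and
# succeed only when exactly one reports "active" (two would mean split brain).
# The NameNode IDs passed as arguments (e.g. nn1 nn2) are hypothetical;
# take the real ones from dfs.ha.namenodes.mycluster in hdfs-site.xml.
check_single_active() {
  local active=0 id state
  for id in "$@"; do
    # haadmin prints "active" or "standby"; treat any failure as unreachable.
    state=$(hdfs haadmin -getServiceState "$id" 2>/dev/null) || state=""
    echo "$id: ${state:-unreachable}"
    if [ "$state" = "active" ]; then
      active=$((active + 1))
    fi
  done
  # Exit status 0 only for exactly one active NameNode.
  [ "$active" -eq 1 ]
}
```

Run it as `check_single_active nn1 nn2 && echo 'HA OK'`. It prints one `id: state` line per NameNode. A NameNode that cannot be reached shows up as `unreachable` instead of aborting the loop.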

12. Verify

```
# ansible kafka -m shell -a "jps"
node2 | SUCCESS | rc=0 >>
22817 QuorumPeerMain
27622 Jps
27356 JournalNode

node3 | SUCCESS | rc=0 >>
31872 NameNode
24468 QuorumPeerMain
31782 JournalNode
32086 DFSZKFailoverController
32330 Jps

node1 | SUCCESS | rc=0 >>
23505 NameNode
23302 JournalNode
9352 QuorumPeerMain
23898 DFSZKFailoverController
24126 Jps
```
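In this output, node1 and node3 each run NameNode, JournalNode and DFSZKFailoverController. node2 runs a JournalNode, and QuorumPeerMain (ZooKeeper) runs on all three.

No host lists a DataNode, although `start-dfs.sh` reported starting one on node1, node2 and node3. This suggests the DataNodes exited shortly after launch; their logs under `/opt/module/hadoop-2.6.0/logs/` are the place to look.

The sketch below makes this comparison mechanical. It reads `jps` output on stdin and lists the expected daemons that are absent. The function name `missing_daemons` is hypothetical.

```shell
# Sketch: read `jps` output on stdin and report which expected daemons
# (main class names such as NameNode or DataNode) are not running.
# The function name missing_daemons is hypothetical.
missing_daemons() {
  local jps_out name missing=""
  jps_out=$(cat)
  for name in "$@"; do
    # jps prints "<pid> <MainClass>", so compare the second field exactly.
    if ! printf '%s\n' "$jps_out" |
        awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
      missing="$missing $name"
    fi
  done
  if [ -n "$missing" ]; then
    echo "missing:$missing"
    return 1
  fi
  echo "all present"
}
```

For example, run `jps | missing_daemons NameNode DataNode JournalNode DFSZKFailoverController` on node1 with the process list shown above. It prints `missing: DataNode` and returns a non-zero status.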