Gradient Descent For Multiple Variables

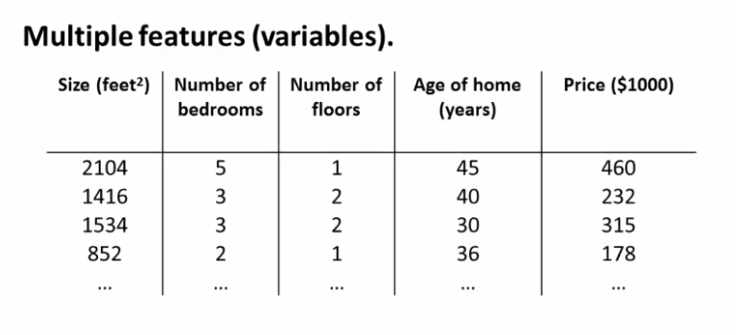

问题提出:Week2的梯度下降问题由单一变量转变成了多变量:

相应的公式如下:

梯度下降算法

\[ \begin{array}{l}{\text { repeat until convergence: }\{} \\ {\theta_{j}:=\theta_{j}-\alpha \frac{1}{m} \sum_{i=1}^{m}\left(h \theta\left(x^{(i)}\right)-y^{(i)}\right) \cdot x_{j}^{(i)} \quad \text { for } j:=0 \ldots n} \\ {\}}\end{array} \]

也就是:

\[ \begin{array}{l}{\text { repeat until convergence: }\{} \\ {\theta_{0}:=\theta_{0}-\alpha \frac{1}{m} \sum_{i=1}^{m}\left(h_{\theta}\left(x^{(i)}\right)-y^{(i)}\right) \cdot x_{0}^{(i)}} \\ {\theta_{1}:=\theta_{1}-\alpha \frac{1}{m} \sum_{i=1}^{m}\left(h_{\theta}\left(x^{(i)}\right)-y^{(i)}\right) \cdot x_{1}^{(i)}} \\ {\theta_{2}:=\theta_{2}-\alpha \frac{1}{m} \sum_{i=1}^{m}\left(h_{\theta}\left(x^{(i)}\right)-y^{(i)}\right) \cdot x_{2}^{(i)}} \\ {\cdots} \\ {\}^{\cdots}}\end{array} \]

\(\theta_{0}\)、\(\theta_{1}\)、\(\theta_{2}\)...这些参数要同时更新