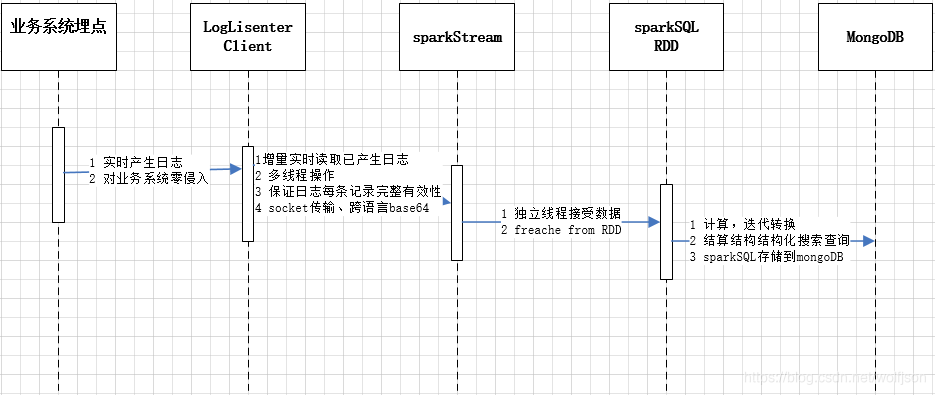

2.6 spark实战案例:实时日志分析

- 2.6.1 交互流程图

- 2.6.2 客户端监听器(java)

1 对his系统零侵入,医疗his log4j把日志切入到文件,为了保证中文完整性(socket传输、python解码)中文字符采用base64 encode

2 spark stream原生支持监听文件夹的变化,但与业务系统同网段跨机器,考虑到amout挂载共享、linux samba文件服务,但共享涉及对业务系统机器的更新以及共享文件文件控制

3 spark stream 支持kaffka日志监听,业务系统消息采用rabbitMq,综上2、3采用socket客户端监听

4 socket数据采集:保证业务系统日志完整性-需要熟悉医疗his业务日志,多线程获取日志-保证一条完整的日志不能被切分

5 java io包括aio、nio、bio,经过测试spark stream receiver线程目前版本仅支持bio

@SuppressWarnings("static-access") private void handleSocket() { lock.lock(); Writer writer = null; RandomAccessFile raf = null; try { File file = new File(filepath); raf = new RandomAccessFile(file, "r"); raf.seek(pointer); writer = new OutputStreamWriter(socket.getOutputStream(), "UTF-8"); String line = null; while ((line = raf.readLine()) != null) { if (Strings.isBlank(line)) { continue; } line = new String(line.getBytes("ISO-8859-1"), "UTF-8"); writer.write(line.concat("\n")); writer.flush(); logger.info("线程:{}----起始位置:{}----读取文件\n{} :",Thread.currentThread().getName(), pointer, line); pointer = raf.getFilePointer(); } Thread.currentThread().sleep(2000); } catch (Exception e) { logger.error(e.getMessage()); e.printStackTrace(); } finally { lock.unlock(); fclose(writer, raf); } }

- 2.6.3 sparkStream实时数据接收(python)

spark conf全局配置:

conf = SparkConf() conf.setAppName("HIS实时日志分析") conf.setMaster('yarn') # spark standalone conf.set('spark.executor.instances', 8) # cluster on yarn conf.set('spark.executor.memory', '1g') conf.set('spark.executor.cores', '1') # conf.set('spark.cores.max', '2') # conf.set('spark.logConf', True) conf.set('spark.streaming.blockInterval', 1000*4) # restart receiver interval

初始化spark stream:

sc = SparkContext(conf = conf) sc.setLogLevel('ERROR') ssc = StreamingContext(sc, 30) # time interval at which splits streaming data into block lines = ssc.socketTextStream(str(ip), int(port)) # lines.pprint() lines.foreachRDD(requestLog) lines.foreachRDD(errorLog) ssc.start() ssc.awaitTermination()

设置RDD checkpoint,hdfs数据备份恢复

sc.setCheckpointDir(‘hdfs://hadoop01:9000/hadoop/upload/checkpoint/’)

- 2.6.4 单例spark session

def getSparkSessionInstance(sparkConf): ''' :@desc 多个RDD全局共享sparksession .config("spark.mongodb.input.uri", "mongodb://127.0.0.1/test.coll") \ .config("spark.mongodb.output.uri", "mongodb://adxkj:[email protected]:27017/") \ :param sparkConf: :return: ''' if ('sparkSessionSingletonInstance' not in globals()): globals()['sparkSessionSingletonInstance'] = SparkSession \ .builder \ .config(conf=sparkConf) \ .getOrCreate() return globals()['sparkSessionSingletonInstance']

- 2.6.5 python 处理工具

def timeFomate(x): ''' :@desc 处理时间 :param x: :return: ''' if not isinstance(x, list): return None # filter microsenconds x.insert(0, ' '.join(x[0:2])) x.pop(1) x.pop(1) # filter '[]' rx = re.compile('([\[\]\',])') # text = rx.sub(r'\\\1', text) x = [rx.sub(r'', x[i]) for i in range(len(x))] # string to time x[0] = x[0][: x[0].find('.')] x[0] = ''.join(x[0]) x[0] = datetime.strptime(x[0], '%Y-%m-%d %H:%M:%S') return x def analyMod(x) : ''' :@desc 通过uri匹配模块 :param x: :return: ''' if x[6].strip() == ' ': return None hasMatch = False for k, v in URI_MODULES.items() : if x[6].strip().startswith('/' + k) : hasMatch = True x.append(v) if not hasMatch: x.append('公共模块') return x #python解码java编码中文字符 def decodeStr(x) : ''' :@desc base64解码 :param x: :return: ''' try: if x[9].strip() != '' : x[9] = base64.b64decode(x[9].encode("utf-8")).decode("utf-8") # x[9] = x[9][:5000] #mysql if x[11].strip() != '': x[11] = base64.b64decode(x[11].encode("utf-8")).decode("utf-8") # x[11] = x[11][:5000] #mysql if len(x) > 12 and x[12].strip() != '': x[12] = base64.b64decode(x[12].encode("utf-8")).decode("utf-8") except Exception as e: print("不能解码:", x, e) return x

- 2.6.6 sparkSQL储存mysql

def sqlMysql(sqlResult, table, url="jdbc:mysql://192.168.0.252:3306/hisLog", user='root', password=""): ''' :@desc sql结果保存 :param sqlResult: :param table: :param url: :param user: :param password: :return: ''' try: sqlResult.write \ .mode('append') \ .format("jdbc") \ .option("url", url) \ .option("dbtable", table) \ .option("user", user) \ .option("password", password) \ .save() except: excType, excValue, excTraceback = sys.exc_info() traceback.print_exception(excType, excValue, excTraceback, limit=3) # print(excValue) # traceback.print_tb(excTraceback)

- 2.6.7 sparkSQL存储mongoDB

备注:请把java-connector-mongodb、spark-mongodb jar放到spark classpath

def sqlMongodb(sqlResult, table): ''' :@desc sql结果保存 :param sqlResult: :param table: :param url: :param user: :param password: :return: ''' try: sqlResult.\ write.\ format("com.mongodb.spark.sql.DefaultSource"). \ options(uri="mongodb://adxkj:[email protected]:27017/hislog", database="hislog", collection=table, user="adxkj", password="123456").\ mode("append").\ save() except: excType, excValue, excTraceback = sys.exc_info() traceback.print_exception(excType, excValue, excTraceback, limit=3) # print(excValue) # traceback.print_tb(excTraceback)

- 2.6.8 RDD transform->action

def fromRDD(): reqrdd = rdd.map(lambda x: x.split(' ')).\ filter(lambda x: len(x) > 12 and x[4].find('http-nio-') > 0 and x[2].strip() == 'INFO').\ filter(lambda x: x[8].strip().upper().startswith('POST') or x[8].strip().upper().startswith('GET')).\ map(timeFomate).\ map(decodeStr).\ map(analyMod)

- 2.6.9 RDD转为dataframe、sparkSQL结构化查询

def constructSQL(reqrdd): sqlRdd = reqrdd.map(lambda x: Row(time=x[0], level=x[1], clz=x[2], thread=x[3], user=x[4], depart=x[5], uri=x[6], method=x[7], ip=x[8], request=x[9], oplen=x[10], respone=x[11], mod=x[12])) if reqrdd.isEmpty(): return None spark = getSparkSessionInstance(rdd.context.getConf()) df = spark.createDataFrame(sqlRdd) df.createOrReplaceTempView(REQUEST_TABLE) # 结构化后再分析 sqlresult = spark.sql("SELECT * FROM " + REQUEST_TABLE) sqlresult.show() return sqlresult

- 2.6.10 RDD持久化

#缓存到内存 reqrdd.cache() # rdd持久化到硬盘,降低内存消耗, cache onliy for StorageLevel.MEMORY_ONLY # reqrdd.persist(storageLevel=StorageLevel.MEMORY_AND_DISK_SER) #设置检查点,数据可从hdfs恢复 reqrdd.checkpoint() # checkpoint先cache避免计算两次,以前的rdd销毁

- 2.6.11 日志分析流程

def requestLog(time, rdd): ''' :@desc 请求日志分析 :param time: :param rdd: :return: ''' logging.info("+++++handle request log:length:%d,获取内容:++++++++++" % (rdd.count())) if rdd.isEmpty(): return None logging.info("++++++++++++++++++++++处理requestLog+++++++++++++++++++++++++++++++") fromRDD(rdd) sqlresult = constructSQL(reqrdd) # 保存 sqlMongodb(sqlresult, REQUEST_TABLE)

备注:需要完整代码请联系作者@狼