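1. I have been planning to build an API for a recommender system, and it is still in preparation. As a test bed I built a news website (see my earlier posts). Today I am sharing the crawler code that supplies data to that news site.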

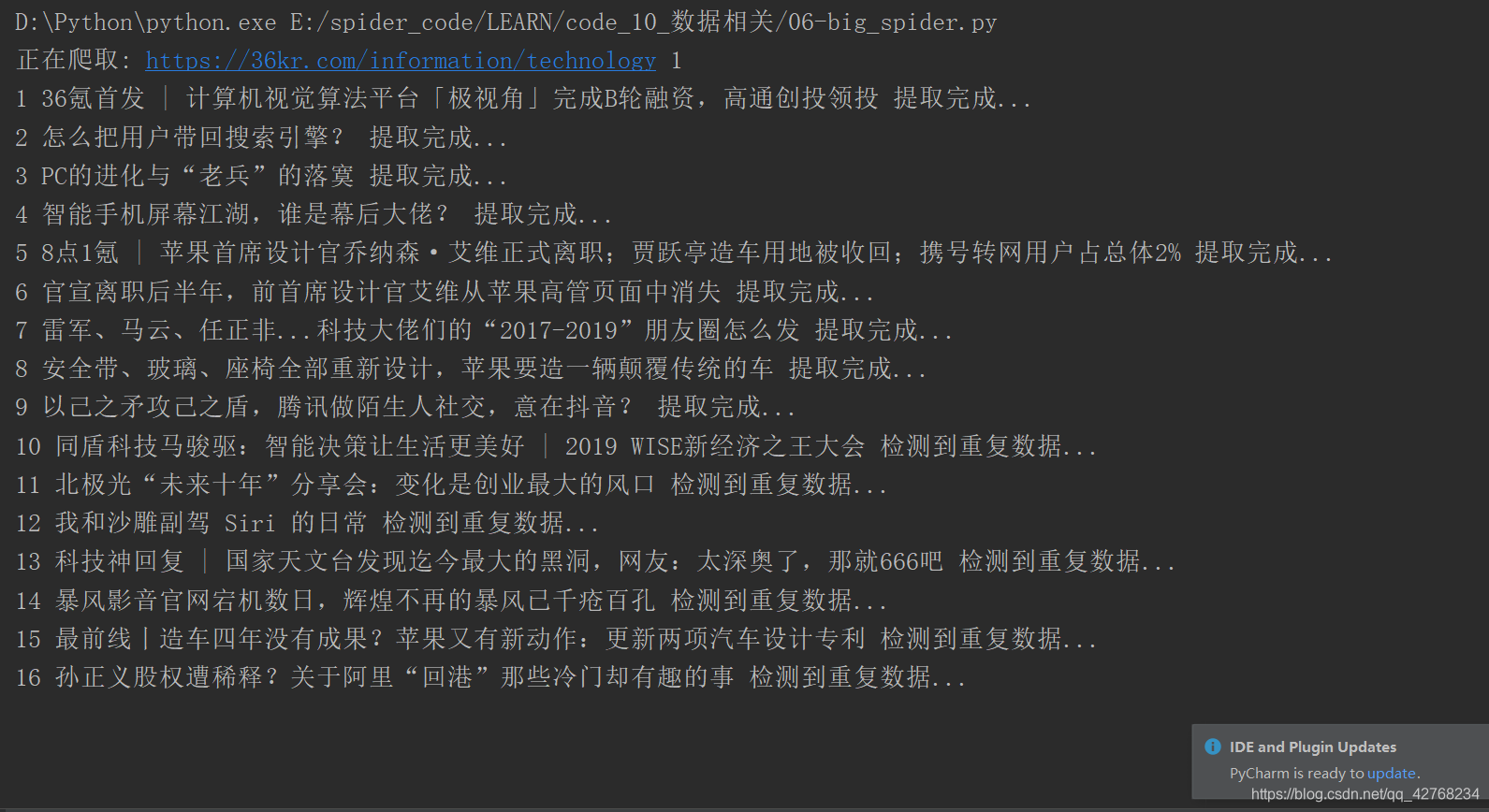

- First, a look at the result

When the program detects a duplicate item, it does not insert it into the database.

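2. Approach

- Read the data already stored in the database and keep only the most recent entries (the post says 30; the code in section 3 takes the last 40 titles)

- On each run, crawl only the first 30 items on each page

- While crawling, do a simple comparison on the title to detect duplicates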
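The three steps above can be sketched on their own, without a database or network access. This is a minimal illustration under stated assumptions, not the crawler's actual code: `filter_new_items`, `scraped_items`, `known_titles`, and `page_limit` are hypothetical names, and each item is assumed to be a dict with a `title` key.

```python
def filter_new_items(scraped_items, known_titles, page_limit=30):
    """Return the scraped items whose title is not already stored."""
    seen = set(known_titles)  # set membership checks are fast
    fresh = []
    for item in scraped_items[:page_limit]:  # only look at the first page_limit items
        title = item["title"]
        if title in seen:
            continue  # duplicate title: skip it instead of inserting
        seen.add(title)  # also guards against repeats within the same page
        fresh.append(item)
    return fresh


known = ["A", "B"]  # titles assumed to be in the database already
scraped = [{"title": "B"}, {"title": "C"}, {"title": "C"}, {"title": "D"}]
print([item["title"] for item in filter_new_items(scraped, known)])  # ['C', 'D']
```

Comparing on the title alone is cheap but coarse: two different articles with the same title would be treated as duplicates.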

3. Source code

import datetime
import json
import time

import pandas as pd
import pymysql
import requests
from bs4 import BeautifulSoup
from lxml import etree

class Spider36Kr(object):
    def __init__(self):
        self.conn = pymysql.connect(  # connect to MySQL
            host='localhost',
            user='root',
            passwd='963369',
            db='news_data',
            port=3306,
            charset='utf8'
        )
        self.index = self.get_index()  # last id currently in the database
        self.add_index = list()  # ids added during this run
        self.url_list = ["https://36kr.com/information/technology", "https://36kr.com/information/travel",
                         "https://36kr.com/information/happy_life", "https://36kr.com/information/real_estate",
                         "https://36kr.com/information/web_zhichang"]
        # headers for the category (list) pages
        self.headers = {
            "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36",
            "Cookie": "acw_tc=2760823515711283155342601ebecc825e95a1d72e78ea3a1a457be0ef9d9d; kr_stat_uuid=kw8ZH26185472; krnewsfrontss=32b5a2ca9ace80d37d4885b144118ef8; M-XSRF-TOKEN=f204eeea5347017f38009858d2ee0eafb2894283c8ba69c228e3837114675d0d; M-XSRF-TOKEN.sig=GQU3yBNWi1oqskE4i2J0jyRpH8BpH13GLSsJ0sqFrDI; Hm_lvt_713123c60a0e86982326bae1a51083e1=1572744686,1572749871,1572825196,1572829918; Hm_lvt_1684191ccae0314c6254306a8333d090=1572744686,1572749871,1572825196,1572829918; sensorsdata2015jssdkcross=%7B%22distinct_id%22%3A%22kw8ZH26185472%22%2C%22%24device_id%22%3A%2216dce8bab54a83-0d3eedac9e4ffb-e343166-1327104-16dce8bab55a79%22%2C%22props%22%3A%7B%22%24latest_referrer%22%3A%22https%3A%2F%2F36kr.com%2Finformation%2Ftechnology%22%2C%22%24latest_referrer_host%22%3A%2236kr.com%22%2C%22%24latest_traffic_source_type%22%3A%22%E5%BC%95%E8%8D%90%E6%B5%81%E9%87%8F%22%2C%22%24latest_search_keyword%22%3A%22%E6%9C%AA%E5%8F%96%E5%88%B0%E5%80%BC%22%7D%2C%22first_id%22%3A%2216dce8bab54a83-0d3eedac9e4ffb-e343166-1327104-16dce8bab55a79%22%7D; Hm_lpvt_1684191ccae0314c6254306a8333d090=1572830180; Hm_lpvt_713123c60a0e86982326bae1a51083e1=1572830180; SERVERID=6754aaff36cb16c614a357bbc08228ea|1572830181|1572829919",
        }
        # headers for the individual article pages
        self.deep_headers = {
            "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36",
            "Cookie": "acw_tc=2760823515711283155342601ebecc825e95a1d72e78ea3a1a457be0ef9d9d; kr_stat_uuid=kw8ZH26185472; krnewsfrontss=32b5a2ca9ace80d37d4885b144118ef8; M-XSRF-TOKEN=f204eeea5347017f38009858d2ee0eafb2894283c8ba69c228e3837114675d0d; M-XSRF-TOKEN.sig=GQU3yBNWi1oqskE4i2J0jyRpH8BpH13GLSsJ0sqFrDI; Hm_lvt_713123c60a0e86982326bae1a51083e1=1572744686,1572749871,1572825196,1572829918; Hm_lvt_1684191ccae0314c6254306a8333d090=1572744686,1572749871,1572825196,1572829918; sensorsdata2015jssdkcross=%7B%22distinct_id%22%3A%22kw8ZH26185472%22%2C%22%24device_id%22%3A%2216dce8bab54a83-0d3eedac9e4ffb-e343166-1327104-16dce8bab55a79%22%2C%22props%22%3A%7B%22%24latest_referrer%22%3A%22%22%2C%22%24latest_referrer_host%22%3A%22%22%2C%22%24latest_traffic_source_type%22%3A%22%E7%9B%B4%E6%8E%A5%E6%B5%81%E9%87%8F%22%2C%22%24latest_search_keyword%22%3A%22%E6%9C%AA%E5%8F%96%E5%88%B0%E5%80%BC_%E7%9B%B4%E6%8E%A5%E6%89%93%E5%BC%80%22%7D%2C%22first_id%22%3A%2216dce8bab54a83-0d3eedac9e4ffb-e343166-1327104-16dce8bab55a79%22%7D; Hm_lpvt_713123c60a0e86982326bae1a51083e1=1572830477; Hm_lpvt_1684191ccae0314c6254306a8333d090=1572830477; SERVERID=6754aaff36cb16c614a357bbc08228ea|1572830478|1572829919",
            "authority": "36kr.com"
        }

    def __del__(self):
        print("Closing database connection...")
        self.conn.close()

    def run(self):
        for x, url in enumerate(self.url_list):
            print("Crawling:", url, x + 1)
            data = self.request_page(url, x + 1)  # fetch the items not yet stored
            try:
                self.insert_data(data)  # store them in the database
            except pymysql.err.InternalError:
                print("An error occurred while inserting data")
            print("Sleeping for 20 seconds...")
            time.sleep(20)

    def get_index(self):
        # read every id and return the last one
        sql = 'select id from new'
        new = pd.read_sql(sql, self.conn).tail(1)["id"].tolist()[0]
        return new

    def spider_one(self, num):
        """
        Crawl a single category page.
        :param num: category number, 1 to 5 (position in self.url_list)
        :return: None
        """
        data = self.request_page(self.url_list[num - 1], num)
        self.insert_data(data)

    def request_page(self, temp_url, cate_id):
        """
        Request a category page and extract its news items.
        :param temp_url: URL of the category page
        :param cate_id: category id stored with each item
        :return: DataFrame of the de-duplicated items
        """
        # pass headers by keyword; the second positional argument of requests.get is params
        response = requests.get(temp_url, headers=self.headers)  # send the request
        content = response.content.decode()  # decode the response body
        html = etree.HTML(content)  # parse into an element tree
        data_list = html.xpath("//script")  # collect the <script> tags
        temp_data = None  # JSON embedded in the page's JavaScript
        for data in data_list:
            try:
                data = str(data.text).split("window.initialState=")[1]
                temp_data = json.loads(data)
            except IndexError:
                pass
        known_titles = self.sql_title_list(cate_id)  # titles already stored, read once per page
        data_all = list()  # extracted news rows
        for x in range(10000):
            try:
                item = temp_data["information"]["informationList"][x]
                new_source = "36kr"
                new_title = item["title"]
                index_image_url = item["images"][0]
                new_time = datetime.datetime.now().strftime('%Y-%m-%d')
                digest = item["summary"]
                url = "https://36kr.com/p/" + str(item["entity_id"])
                if str(new_title) not in known_titles:
                    new_content = self.deep_spider(url)  # fetch the article body only for new items
                    data_all.append(
                        [new_title, new_source, new_time, digest, index_image_url, new_content, 0, 0, cate_id])
                    print(x + 1, new_title, "extracted...")
                else:
                    print(x + 1, new_title, "duplicate detected...")
            except IndexError:
                print("Data extraction finished...")
                break
        data_all.sort()
        data_all = pd.DataFrame(data_all, columns=["new_title", "new_source", "new_time", "digest", "index_image_url",
                                                   "new_content", "new_seenum", "new_disnum", "new_cate_id"])
        return data_all

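    def deep_spider(self, url):
        """
        Extract the article body from a news URL.
        :param url: URL of the article page
        :return: the article's <p> tags joined into one HTML string
        """
        # pass headers by keyword; the second positional argument of requests.get is params
        response = requests.get(url, headers=self.deep_headers)
        content = response.content.decode()
        soup = BeautifulSoup(content, "lxml")
        data = soup.find_all('p')[0: -11]  # every <p> tag except the last 11
        data_str = ""
        for x in data:
            data_str = data_str + str(x)
        return data_str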

    def sql_title_list(self, num):
        """
        Read the stored 36kr news titles for one category from MySQL.
        :param num: category id (1 tech, 2 cars, 3 life, 4 real estate, 5 workplace)
        :return: list of the last 40 titles in that category
        """
        if num not in (1, 2, 3, 4, 5):
            num = 3  # unknown ids fall back to category 3 (life)
        df = pd.read_sql("select * from new where new_cate_id = %s and new_source = '36kr';",
                         self.conn, params=(num,))
        df = df.tail(40)["new_title"].to_list()
        return df

    def insert_data(self, data):
        cursor = self.conn.cursor()  # create a cursor
        sql = "insert into new(new_time, index_image_url, new_title, new_source," \
              " new_seenum, new_disnum, digest, new_content, new_cate_id) values(%s, %s, %s, %s, %s, %s, %s, %s, %s)"
        print(data.shape, "inserting data into the database...")
        for x, y, z, e, f, j, h, i, g in zip(data["new_time"], data["index_image_url"], data["new_title"],
                                             data["new_source"], data["new_seenum"],
                                             data["new_disnum"],
                                             data["digest"], data["new_content"], data["new_cate_id"]):
            cursor.execute(sql, (x, y, z, e, f, j, h, i, g))
            self.conn.commit()  # commit after every row
            print(z, "inserted...")
            self.index += 1
            self.add_index.append(self.index)
        cursor.close()

if __name__ == '__main__':
    spider = Spider36Kr()
    spider.run()
    # spider.spider_one(5)  # crawl a single category instead

Note: Feel free to adapt the code (database settings and so on) to your own needs. It is shared for learning and discussion only.
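One possible way to make the database settings easier to change is to read them from environment variables instead of editing the source. The sketch below is only an illustration: `load_db_config` and the `NEWS_DB_*` variable names are hypothetical, and the defaults simply mirror the values used in the listing above (apart from the password, which defaults to empty here).

```python
import os


def load_db_config(env=None):
    """Build keyword arguments for pymysql.connect from environment variables."""
    env = os.environ if env is None else env
    return {
        "host": env.get("NEWS_DB_HOST", "localhost"),
        "user": env.get("NEWS_DB_USER", "root"),
        "passwd": env.get("NEWS_DB_PASSWORD", ""),  # no password is hard-coded
        "db": env.get("NEWS_DB_NAME", "news_data"),
        "port": int(env.get("NEWS_DB_PORT", "3306")),  # env values are strings
        "charset": "utf8",
    }


# Example with an explicit mapping instead of the real environment
config = load_db_config({"NEWS_DB_HOST": "db.example.com", "NEWS_DB_PORT": "3307"})
print(config["host"], config["port"])  # db.example.com 3307
```

With this in place, the connection in `__init__` could be opened as `pymysql.connect(**load_db_config())`.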

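Note: Once news items are stored in the database, the updates show up on the site right away. When the project is finished I will publish the GitHub repositories for the news website and the recommender system.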