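This post walks through a simple but complete competition workflow: reading a custom dataset, building a neural network model, training it, and running it on the test set to produce the competition submission.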

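1. Pneumonia lesion detection competition overview

Source: link

Dataset size: 20013 training images and 6671 test images

Image size: each image is 1024x1024x3

Labels: integers in {0, 1, 2, 3, 4}, the number of lesions in each image

Submission: a csv file containing the index of each test image and its predicted label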

2. Reading the dataset

Dataset directory structure:

data
    train
        0.jpg
        …
        20012.jpg
    test
        0.jpg
        …
        6670.jpg
    train.csv

dataset.py defines the custom dataset:

# dataset.py
import os

import numpy as np
import pandas as pd
from PIL import Image
from torch.utils.data import Dataset


def default_loader(path):
    # Force 3 channels so every image matches the network input
    return Image.open(path).convert('RGB')


class Mydataset(Dataset):
    def __init__(self, label_path='./data/train.csv', img_path='./data/train',
                 transform=None, train=True):
        super(Mydataset, self).__init__()
        self.train = train
        # load label
        if train:
            # Column 0 of train.csv is the image index, column 1 is the label
            csv_label = pd.read_csv(label_path)
            self.labels = np.zeros(csv_label.shape[0])
            # Fill by image index so the row order in the csv does not matter
            for row in range(csv_label.shape[0]):
                idx = csv_label.iloc[row, 0]
                label = csv_label.iloc[row, 1]
                self.labels[idx] = label
        # load img (path)
        self.path = img_path
        self.transform = transform

    def __getitem__(self, index):
        # Images are stored as <index>.jpg inside img_path
        img_data = default_loader(os.path.join(self.path, str(index) + '.jpg'))
        if self.transform is not None:
            img_data = self.transform(img_data)
        if self.train:
            return img_data, self.labels[index]
        # The test set has no labels, so only the image is returned
        return img_data

    def __len__(self):
        if self.train:
            return self.labels.shape[0]
        return 6671  # size of test set
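The label-loading step in Mydataset can be tried on its own without pandas or image files. The sketch below uses only the standard library and assumes a hypothetical train.csv with a header row and two columns named `id` and `label`; the real column names are not given in this post. It shows that every data row is visited and that labels are stored by image index rather than by row order.

```python
import csv
import io

# Hypothetical train.csv content; rows are deliberately out of order.
SAMPLE_CSV = """id,label
2,4
0,0
1,3
"""


def load_labels(csv_text):
    """Return a list where position i holds the label of image i.jpg."""
    rows = list(csv.reader(io.StringIO(csv_text)))[1:]  # skip the header row
    labels = [0] * len(rows)
    for idx, label in rows:  # visit every data row, including the last one
        labels[int(idx)] = int(label)
    return labels


labels = load_labels(SAMPLE_CSV)
print(labels)  # [0, 3, 4]
```

Indexing by the first column is what lets `__getitem__` look up `self.labels[index]` directly from the image number.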

3. Building the neural network model

The model is AlexNet, defined in model.py:

# model.py
import torch.nn as nn
import torch.nn.functional as F


class AlexNet(nn.Module):
    def __init__(self, num_class):
        super(AlexNet, self).__init__()
        # Convolutional feature extractor
        self.features = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=11, stride=4, padding=2),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),
            nn.Conv2d(64, 192, kernel_size=5, padding=2),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),
            nn.Conv2d(192, 384, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(384, 256, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(256, 256, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2)
        )
        # Always produce a 6x6 feature map, whatever the input resolution
        self.avgpool = nn.AdaptiveAvgPool2d((6, 6))
        # Fully connected classifier head
        self.classifier = nn.Sequential(
            nn.Dropout(0.5),
            nn.Linear(256 * 6 * 6, 4096),
            nn.ReLU(inplace=True),
            nn.Dropout(0.5),
            nn.Linear(4096, 4096),
            nn.ReLU(inplace=True),
            nn.Linear(4096, num_class)
        )

    def forward(self, x):
        x = self.features(x)
        x = self.avgpool(x)
        x = x.view(x.size(0), 256 * 6 * 6)
        logits = self.classifier(x)
        # Return raw logits (for the loss) and probabilities (for prediction)
        probas = F.softmax(logits, dim=1)
        return logits, probas
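To see where the `256*6*6` input size of the first linear layer comes from, the spatial size can be traced through the layers in `self.features` with the usual output-size formula, floor((n + 2p - k) / s) + 1. The sketch below is plain arithmetic and does not need PyTorch; the helper names are made up for illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a Conv2d or MaxPool2d layer."""
    return (size + 2 * padding - kernel) // stride + 1


# (kernel, stride, padding) of the conv and pooling layers in self.features
FEATURE_LAYERS = [
    (11, 4, 2),  # conv1
    (3, 2, 0),   # maxpool
    (5, 1, 2),   # conv2
    (3, 2, 0),   # maxpool
    (3, 1, 1),   # conv3
    (3, 1, 1),   # conv4
    (3, 1, 1),   # conv5
    (3, 2, 0),   # maxpool
]


def feature_map_side(input_side):
    """Side length of the feature map after self.features."""
    side = input_side
    for kernel, stride, padding in FEATURE_LAYERS:
        side = conv_out(side, kernel, stride, padding)
    return side


print(feature_map_side(224))   # 6
print(feature_map_side(1024))  # 31
print(256 * 6 * 6)             # 9216
```

With 224x224 inputs the features already come out as 6x6, so the adaptive pooling layer leaves the size unchanged. With the original 1024x1024 images it would average a 31x31 map down to 6x6.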

4. Training

train.py runs the training. The provided training set is split 4:1 into a training part and a validation part, and validation accuracy is measured after every full pass (epoch) over the training part.

# train.py
import os
import time

import numpy as np
import torch
import torch.nn.functional as F
from torch.utils.data import DataLoader, SubsetRandomSampler
from torchvision import transforms

from dataset import Mydataset
from model import AlexNet

if torch.cuda.is_available():
    torch.backends.cudnn.deterministic = True

# Hyperparameters
RANDOM_SEED = 1
LEARNING_RATE = 0.0001
BATCH_SIZE = 256
NUM_EPOCHS = 20

# Architecture
NUM_CLASSES = 5

# Other
# Use the first GPU when available, otherwise fall back to the CPU
DEVICE = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
LOAD_PARAMS = False

data_transform = transforms.Compose([
    # transforms.Scale is the old name of Resize; bilinear interpolation is the default
    transforms.Resize((224, 224)),
    transforms.RandomHorizontalFlip(),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406],
                         std=[0.229, 0.224, 0.225])
])

dataset = Mydataset(transform=data_transform)

# split train and val dataset (1. set parameters)
validation_split = 0.2
shuffle_dataset = True

# 2. get indices of train and val dataset
dataset_size = len(dataset)
indices = list(range(dataset_size))
split = int(np.floor(validation_split * dataset_size))
if shuffle_dataset:
    np.random.seed(RANDOM_SEED)
    np.random.shuffle(indices)
train_indices, val_indices = indices[split:], indices[:split]

# 3. use SubsetRandomSampler to split train and val dataset
train_sampler = SubsetRandomSampler(train_indices)
val_sampler = SubsetRandomSampler(val_indices)

train_loader = DataLoader(dataset, batch_size=BATCH_SIZE, sampler=train_sampler)
val_loader = DataLoader(dataset, batch_size=BATCH_SIZE, sampler=val_sampler)
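The split sizes that result from the code above can be checked with a few lines of arithmetic. The sketch below reuses the dataset size, split ratio and batch size from this post and assumes the DataLoader default of keeping the final partial batch. It also shows which batches the training loop logs when it checks `batch_idx % 31`.

```python
import math

DATASET_SIZE = 20013      # training images, from the competition description
VALIDATION_SPLIT = 0.2
BATCH_SIZE = 256

val_size = int(math.floor(VALIDATION_SPLIT * DATASET_SIZE))
train_size = DATASET_SIZE - val_size

# The last, smaller batch is kept, hence the ceiling
train_batches = math.ceil(train_size / BATCH_SIZE)
val_batches = math.ceil(val_size / BATCH_SIZE)

# Batches on which the training loop prints the running cost
logged = [b for b in range(train_batches) if b % 31 == 0]

print(val_size, train_size)        # 4002 16011
print(train_batches, val_batches)  # 63 16
print(logged)                      # [0, 31, 62]
```

With 63 training batches per epoch, a logging step of 31 prints at the first, middle and last batch.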

# check dataset
for images, labels in train_loader:
    print('Train set')
    print('Image batch dimensions:', images.size())
    print('Image label dimensions:', labels.size())
    break

for images, labels in val_loader:
    print('\nVal set')
    print('Image batch dimensions:', images.size())
    print('Image label dimensions:', labels.size())
    break

#get model

torch.manual_seed(RANDOM_SEED)

model = AlexNet(NUM_CLASSES)

model.to(DEVICE)

optimizer = torch.optim.Adam(model.parameters(),lr=LEARNING_RATE)

# training
def compute_acc(model, data_loader, device):
    """Return the classification accuracy (in percent) over data_loader."""
    correct_pred, num_examples = 0, 0
    model.eval()
    for i, (features, targets) in enumerate(data_loader):
        features = features.to(device)
        targets = targets.to(device)
        targets = targets.long()
        logits, probas = model(features)
        # The predicted class is the one with the highest probability
        _, predicted_labels = torch.max(probas, 1)
        num_examples += targets.size(0)
        assert predicted_labels.size() == targets.size()
        correct_pred += (predicted_labels == targets).sum()
    return float(correct_pred) / num_examples * 100

save_path = './model/'
# Create the checkpoint directory if it does not exist yet
os.makedirs(save_path, exist_ok=True)

start_time = time.time()
start_epoch = 0
cost_list = []
train_acc_list, val_acc_list = [], []

# load params: resume from the last saved checkpoint
if LOAD_PARAMS:
    checkpoint = torch.load(save_path + 'modelparams.pth', map_location=DEVICE)
    model.load_state_dict(checkpoint['net'])
    optimizer.load_state_dict(checkpoint['optimizer'])
    start_epoch = checkpoint['epoch'] + 1
    train_acc_list = checkpoint['train_acc_list']
    val_acc_list = checkpoint['val_acc_list']
    cost_list = checkpoint['cost_list']

for epoch in range(start_epoch, NUM_EPOCHS):
    model.train()
    cost_epoch = 0
    for batch_idx, (features, targets) in enumerate(train_loader):
        features = features.to(DEVICE)
        targets = targets.to(DEVICE)
        targets = targets.long()

        # Forward and backward prop
        logits, probas = model(features)
        cost = F.cross_entropy(logits, targets)
        optimizer.zero_grad()
        cost.backward()

        # update parameters
        optimizer.step()

        # cost_epoch accumulates the batch losses of the current epoch
        cost_epoch += cost.item()
        if not batch_idx % 31:
            print(f'Epoch: {epoch + 1:03d}/{NUM_EPOCHS:03d} | '
                  f'Batch {batch_idx:03d}/{len(train_loader):03d} |'
                  f' Cost: {cost_epoch:.4f}')
    cost_list.append(cost_epoch)

    model.eval()
    with torch.set_grad_enabled(False):
        train_acc = compute_acc(model, train_loader, device=DEVICE)
        val_acc = compute_acc(model, val_loader, device=DEVICE)
        print(f'Epoch: {epoch + 1:03d}/{NUM_EPOCHS:03d}\n'
              f'Train ACC: {train_acc:.2f} | Validation ACC: {val_acc:.2f}')
    train_acc_list.append(train_acc)
    val_acc_list.append(val_acc)

    elapsed = (time.time() - start_time) / 60
    print(f'Time elapsed: {elapsed:.2f} min')

    # save model dict after every epoch so training can be resumed
    state = {'net': model.state_dict(), 'optimizer': optimizer.state_dict(), 'epoch': epoch,
             'train_acc_list': train_acc_list, 'val_acc_list': val_acc_list, 'cost_list': cost_list}
    torch.save(state, save_path + 'modelparams.pth')

elapsed = (time.time() - start_time) / 60
print(f'Total Training Time: {elapsed:.2f} min')

5. Testing

After training, test.py runs the saved model on the competition test set and writes test.csv for submission.

# test.py
import pandas as pd
import torch
from torch.utils.data import DataLoader
from torchvision import transforms

from dataset import Mydataset
from model import AlexNet

DEVICE = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
NUM_CLASSES = 5
save_path = './model/'

# No random flip at test time, so predictions are deterministic
data_transform = transforms.Compose([
    transforms.Resize((224, 224)),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406],
                         std=[0.229, 0.224, 0.225])
])

dataset = Mydataset(img_path='./data/test', transform=data_transform, train=False)
# shuffle defaults to False, so predictions stay in image-index order
test_loader = DataLoader(dataset, batch_size=256)

model = AlexNet(NUM_CLASSES)
checkpoint = torch.load(save_path + 'modelparams.pth', map_location=DEVICE)
model.load_state_dict(checkpoint['net'])
model.to(DEVICE)
model.eval()

predicted_labels_list = []
with torch.no_grad():
    for features in test_loader:
        features = features.to(DEVICE)
        logits, probas = model(features)
        _, predicted_labels = torch.max(probas, 1)
        predicted_labels_list.extend(predicted_labels.cpu().tolist())

# One row per test image: index,label (no header)
df = pd.DataFrame({'label': predicted_labels_list})
df.to_csv('test.csv', header=False, index=True)
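Before uploading, it can help to sanity-check the generated file. The sketch below assumes the layout that test.py writes, header-less rows of `index,label` with indices starting at 0; the competition's exact requirements are not quoted in this post. `check_submission` is a hypothetical helper, not part of any library.

```python
import csv
import io


def check_submission(csv_text, expected_rows, num_classes=5):
    """Return a list of problems found in a header-less `index,label` file."""
    problems = []
    valid_labels = {str(c) for c in range(num_classes)}
    rows = list(csv.reader(io.StringIO(csv_text)))
    if len(rows) != expected_rows:
        problems.append(f'expected {expected_rows} rows, found {len(rows)}')
    for position, row in enumerate(rows):
        if len(row) != 2:
            problems.append(f'row {position}: expected 2 columns')
            continue
        index, label = row
        if index != str(position):
            problems.append(f'row {position}: index is {index}')
        if label not in valid_labels:
            problems.append(f'row {position}: label {label} out of range')
    return problems


good = "0,1\n1,0\n2,4\n"
bad = "0,1\n2,7\n"
print(check_submission(good, expected_rows=3))  # []
print(check_submission(bad, expected_rows=3))
```

For the real file, the text would come from reading test.csv, with `expected_rows=6671`.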

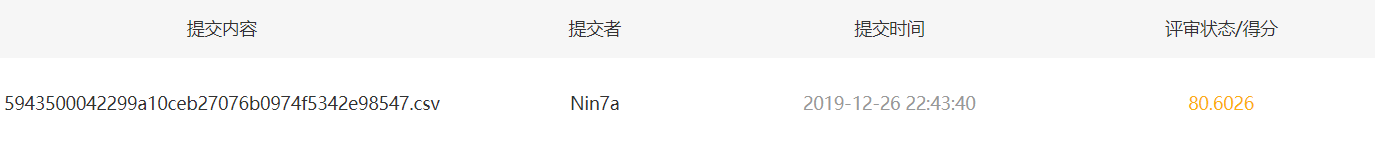

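6. Results and analysis

The dataset for this competition appears to be class-imbalanced: samples with label 0 make up more than 70% of it. There are certainly better models for this problem; the goal here was only to practice one complete workflow in PyTorch.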
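One common way to account for this kind of imbalance, not used in this post, is to weight each class inversely to its frequency. The sketch below computes such weights on a made-up label list where 70% of the samples have label 0; the counts are illustrative only and are not the real competition statistics. It also prints the accuracy of always predicting the majority class, a useful baseline for reading the validation accuracy.

```python
from collections import Counter

# Made-up labels for illustration only: 70% of samples have label 0
labels = [0] * 70 + [1] * 12 + [2] * 9 + [3] * 6 + [4] * 3


def inverse_frequency_weights(labels, num_classes):
    """Weight class c by total / (num_classes * count_c).

    Assumes every class appears at least once in labels.
    """
    counts = Counter(labels)
    total = len(labels)
    return [total / (num_classes * counts[c]) for c in range(num_classes)]


weights = inverse_frequency_weights(labels, num_classes=5)
majority_baseline = max(Counter(labels).values()) / len(labels)

print([round(w, 3) for w in weights])  # [0.286, 1.667, 2.222, 3.333, 6.667]
print(majority_baseline)               # 0.7
```

In PyTorch, such a list could be converted to a tensor and passed as the `weight` argument of `F.cross_entropy`. Whether that helps on this dataset would have to be checked on the validation split.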

To recap, the project directory tree:

lung_pytorch
    model.py
    train.py
    test.py
    dataset.py
    data
