1. Introduction to the Sequential layer under Containers

Sequential — PyTorch 1.13 documentation

- Passing modules as arguments

CLASS torch.nn.Sequential(*args: Module)

- Passing an ordered dict as the argument

CLASS torch.nn.Sequential(arg: OrderedDict[str, Module])

1.1 Purpose

A sequential container. Modules will be added to it in the order they are passed in the constructor.

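Sequential builds an ordered container: every operation you want in the network goes inside it, and the operations are executed in the order they were added.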
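To make "executed in order" concrete, here is a minimal sketch. The layer sizes (4, 8, 2) and the batch size are arbitrary values chosen for illustration, not taken from the documentation. It checks that calling the container gives the same result as calling each layer by hand, one after another.

```python
import torch
from torch import nn

torch.manual_seed(0)  # fixed seed so the run is reproducible

# A small container: the layers run in the order they are listed.
# The sizes 4 -> 8 -> 2 are illustrative assumptions.
layers = nn.Sequential(
    nn.Linear(4, 8),
    nn.ReLU(),
    nn.Linear(8, 2),
)

x = torch.ones((3, 4))  # batch of 3 samples, 4 features each

# Calling the container once ...
out_container = layers(x)

# ... matches calling every layer manually, in the same order.
out_manual = x
for layer in layers:
    out_manual = layer(out_manual)

same = torch.equal(out_container, out_manual)
print(same, tuple(out_container.shape))
```

Iterating over the container yields its layers in insertion order, which is why the manual loop reproduces the container's output.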

1.2 Example

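Here a convolution, a nonlinear activation, a second convolution, and a second nonlinear activation are combined with Sequential and placed together in the model being built.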

import torch.nn as nn
from collections import OrderedDict

# Using Sequential to create a small model. When `model` is run,
# input will first be passed to `Conv2d(1,20,5)`. The output of
# `Conv2d(1,20,5)` will be used as the input to the first
# `ReLU`; the output of the first `ReLU` will become the input
# for `Conv2d(20,64,5)`. Finally, the output of
# `Conv2d(20,64,5)` will be used as input to the second `ReLU`
model = nn.Sequential(
    nn.Conv2d(1,20,5),
    nn.ReLU(),
    nn.Conv2d(20,64,5),
    nn.ReLU()
)

# Using Sequential with OrderedDict. This is functionally the
# same as the above code
model = nn.Sequential(OrderedDict([
    ('conv1', nn.Conv2d(1,20,5)),
    ('relu1', nn.ReLU()),
    ('conv2', nn.Conv2d(20,64,5)),
    ('relu2', nn.ReLU())
]))

2. Hands-on: building a neural network and using Sequential

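2.1 Preparation

2.1.1 Network model

The dataset is CIFAR10, and the model structure is shown below.

- The input size is 3×32×32

- After three rounds of [convolution with a 5×5 kernel, then pooling with a 2×2 kernel], the output size is 64×4×4

- After flattening, the size is 1×1024

- After the fully connected layers, the output is 1×10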

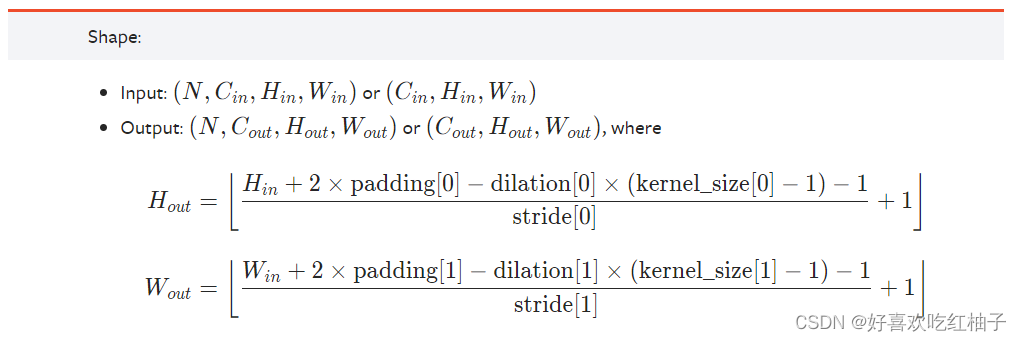

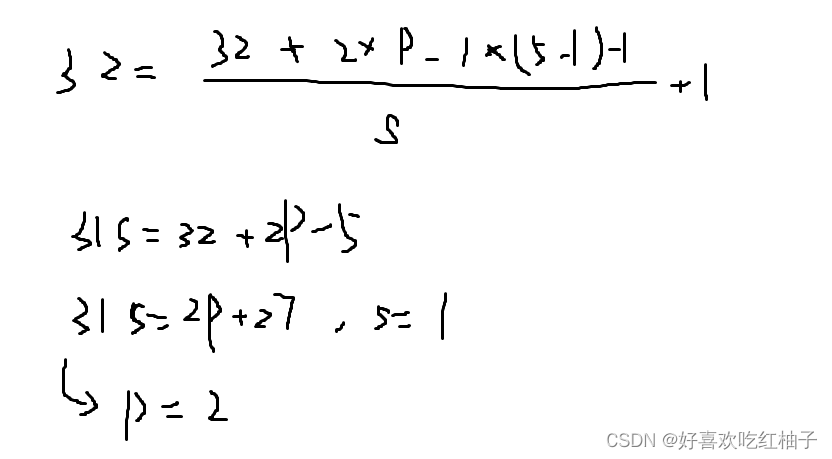
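The sizes listed above can be checked with a few lines of plain Python. This is only a sketch under two assumptions: each 5×5 convolution leaves height and width unchanged (stride 1 with suitable padding), and each 2×2 pooling halves them. The channel counts 32, 32, 64 are the ones used in the network code later in this post; `trace_shapes` is a helper name made up for this illustration.

```python
# Trace the feature-map shape through the three conv + pool stages.
# Assumptions: convolutions keep H and W, 2x2 pooling halves them.

def trace_shapes(height=32, width=32, out_channels=(32, 32, 64)):
    shapes = []
    for channels in out_channels:
        # convolution: only the channel count changes
        shapes.append((channels, height, width))
        # 2x2 pooling: height and width are halved
        height, width = height // 2, width // 2
        shapes.append((channels, height, width))
    return shapes

shapes = trace_shapes()
for shape in shapes:
    print(shape)

# Number of features seen by the first fully connected layer.
final_channels, final_h, final_w = shapes[-1]
flat_features = final_channels * final_h * final_w
print(flat_features)
```

The last shape is (64, 4, 4), and 64 × 4 × 4 = 1024, which is the flattened size quoted in the list.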

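2.1.2 Computing the padding parameter for the convolutions

In the first convolution the number of input channels is 3, the number of output channels is 32, and the kernel size is 5, so the first three arguments of Conv2d are already fixed. No dilated convolution is used, so dilation keeps its default value of 1 and does not need to be set. That leaves stride and padding, which are determined from the output-size formula for Conv2d.

Substitute H, dilation, and kernel_size into the formula and set stride=1 (its default value); solving for padding gives padding=2.

To keep the output the same size as the input, use stride=1 and padding=2. The later convolutions use the same two values, so nothing needs to be recalculated.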
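For reference, the output-size formula given in the PyTorch Conv2d documentation is H_out = floor((H_in + 2·padding − dilation·(kernel_size − 1) − 1) / stride + 1). With H_in = H_out = 32, kernel_size = 5, dilation = 1 and stride = 1 it reduces to 32 = 32 + 2·padding − 4, so padding = 2. The sketch below just evaluates that formula; `conv2d_out_size` is a helper name invented for this example, not a PyTorch function.

```python
import math

def conv2d_out_size(size_in, kernel_size, stride=1, padding=0, dilation=1):
    """Output height (or width) of a 2D convolution, per the Conv2d formula."""
    return math.floor(
        (size_in + 2 * padding - dilation * (kernel_size - 1) - 1) / stride + 1
    )

# Try a few padding values and keep those that preserve the 32x32 size.
same_padding = [p for p in range(5) if conv2d_out_size(32, 5, padding=p) == 32]
print(same_padding)  # [2]

# Without padding, a 5x5 kernel shrinks 32 down to 28.
print(conv2d_out_size(32, 5))  # 28
```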

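2.2 Building the network

Following the steps in the model, apply the convolution, pooling, and fully connected operations in turn.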

import torch.nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear

class Maweiyi(torch.nn.Module):
    def __init__(self):
        super(Maweiyi, self).__init__()
        # three rounds of 5x5 convolution followed by 2x2 max pooling
        self.conv1 = Conv2d(in_channels=3, out_channels=32, kernel_size=5, padding=2)
        self.maxpool1 = MaxPool2d(kernel_size=2)
        self.conv2 = Conv2d(in_channels=32, out_channels=32, kernel_size=5, padding=2)
        self.maxpool2 = MaxPool2d(kernel_size=2)
        self.conv3 = Conv2d(in_channels=32, out_channels=64, kernel_size=5, padding=2)
        self.maxpool3 = MaxPool2d(kernel_size=2)
        # flatten 64*4*4 into 1024 features, then two fully connected layers
        self.flatten = Flatten()
        self.linear1 = Linear(in_features=1024, out_features=64)
        self.linear2 = Linear(in_features=64, out_features=10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.maxpool1(x)
        x = self.conv2(x)
        x = self.maxpool2(x)
        x = self.conv3(x)
        x = self.maxpool3(x)
        # flatten before the linear layers, otherwise linear1 receives a 4D tensor
        x = self.flatten(x)
        x = self.linear1(x)
        x = self.linear2(x)
        return x

2.3 Using Sequential

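As the code above shows, building the network this way is tedious: every operation defined in __init__ then has to be called one by one in forward. With Sequential we instead define a single model in __init__ and call it just once in forward.

In the __init__ method:

self.model1 = Sequential(
    Conv2d(...),
    MaxPool2d(...),
    Linear(...)
)

In the forward method:

x = self.model1(x)
return x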

class Maweiyi(torch.nn.Module):

def __init__(self):

super(Maweiyi, self).__init__()

self.model1 = Sequential(

Conv2d(in_channels=3, out_channels=32, kernel_size=5, padding=2),

MaxPool2d(kernel_size=2),

Conv2d(in_channels=32, out_channels=32, kernel_size=5, padding=2),

MaxPool2d(kernel_size=2),

Conv2d(in_channels=32, out_channels=64, kernel_size=5, padding=2),

MaxPool2d(kernel_size=2),

Flatten(),

Linear(in_features=1024, out_features=64),

Linear(in_features=64, out_features=10)

)

def forward(self, x):

x = self.model1(x)

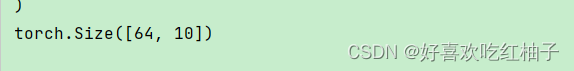

return x2.4 检验网络正确性

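Create an input tensor with the shape the network expects at its input, (64,3,32,32). After passing through the network, each of the 64 samples should produce 10 values, so the output shape should be (64, 10).

Use torch.ones to create a tensor of the specified size.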


maweiyi = Maweiyi()
input = torch.ones((64,3,32,32))
output = maweiyi(input)
print(output.shape)

The output is torch.Size([64, 10]).
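Why bother with this check? A wrong in_features value only shows up when data actually flows through the network. The sketch below uses just the final part of the network (Flatten plus the two Linear layers) on a tensor shaped like the output of the last pooling layer. The deliberately wrong value 1000 and the names `good_head` and `bad_head` are made up for this illustration.

```python
import torch
from torch.nn import Flatten, Linear, Sequential

# Same shape as the output of the last pooling layer: (batch, 64, 4, 4).
features = torch.ones((64, 64, 4, 4))

# Correct head: 64 * 4 * 4 = 1024 input features.
good_head = Sequential(Flatten(), Linear(1024, 64), Linear(64, 10))
good_shape = tuple(good_head(features).shape)
print(good_shape)

# Wrong head (hypothetical mistake): 1000 does not match the 1024 flattened features.
bad_head = Sequential(Flatten(), Linear(1000, 64), Linear(64, 10))
try:
    bad_head(features)
    failed = False
except RuntimeError as err:
    failed = True
    print("shape mismatch:", err)
```

Constructing the mis-sized model succeeds; the error appears only on the forward pass, which is why feeding a dummy tensor through the network is a cheap sanity check.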

3. Complete code

3.1 Console output

import torch.nn

from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential

class Maweiyi(torch.nn.Module):

def __init__(self):

super(Maweiyi, self).__init__()

self.model1 = Sequential(

Conv2d(in_channels=3, out_channels=32, kernel_size=5, padding=2),

MaxPool2d(kernel_size=2),

Conv2d(in_channels=32, out_channels=32, kernel_size=5, padding=2),

MaxPool2d(kernel_size=2),

Conv2d(in_channels=32, out_channels=64, kernel_size=5, padding=2),

MaxPool2d(kernel_size=2),

Flatten(),

Linear(in_features=1024, out_features=64),

Linear(in_features=64, out_features=10)

)

def forward(self, x):

x = self.model1(x)

return x

maweiyi = Maweiyi()

print(maweiyi)

input = torch.ones((64,3,32,32))

output = maweiyi(input)

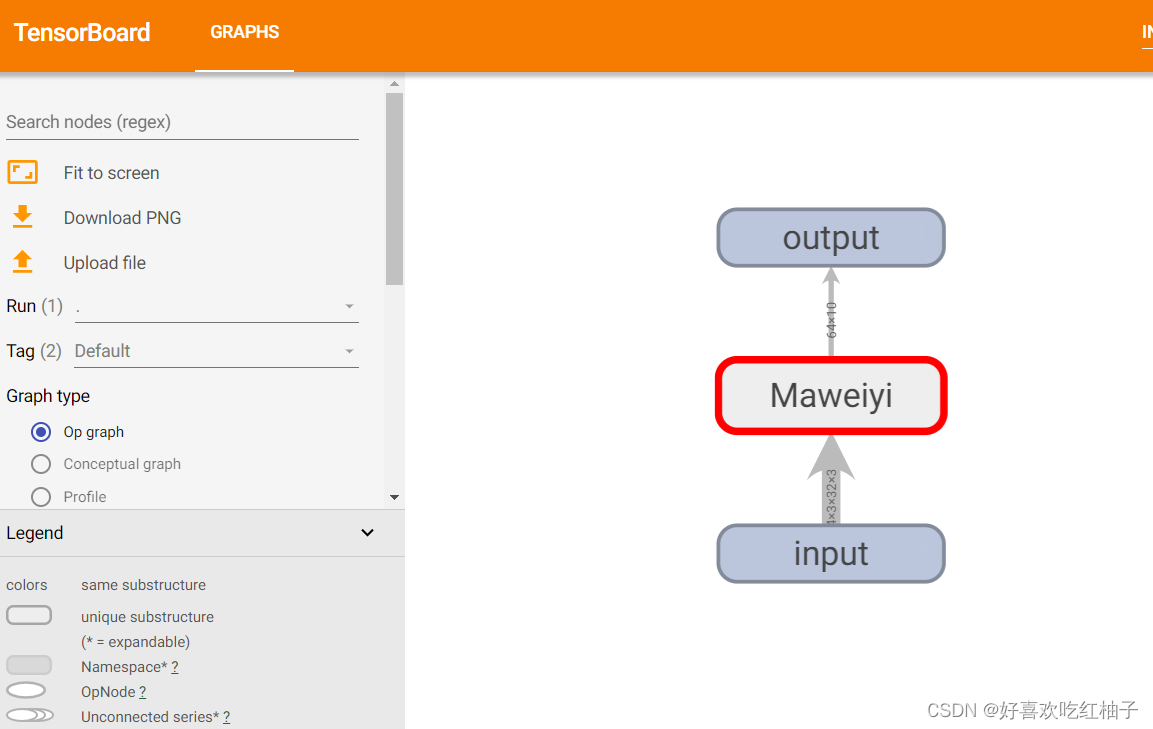

print(output.shape)3.2 tensorboard可视化

Use the add_graph method of SummaryWriter to generate a graph of the network model.

import torch
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.tensorboard import SummaryWriter

class Maweiyi(torch.nn.Module):
    def __init__(self):
        super(Maweiyi, self).__init__()
        self.model1 = Sequential(
            Conv2d(in_channels=3, out_channels=32, kernel_size=5, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=32, kernel_size=5, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=64, kernel_size=5, padding=2),
            MaxPool2d(kernel_size=2),
            Flatten(),
            Linear(in_features=1024, out_features=64),
            Linear(in_features=64, out_features=10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x

maweiyi = Maweiyi()
print(maweiyi)

input = torch.ones((64,3,32,32))
output = maweiyi(input)
print(output.shape)

# write the model graph to the "logs" directory for TensorBoard
writer = SummaryWriter("logs")
writer.add_graph(maweiyi,input)
writer.close()

Output: