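Work needed text classification these past few days. I read a bunch of articles about machine learning and neural networks and could not follow them, so I put together a simple text classifier of my own on top of jieba word segmentation. Overall it works reasonably well.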

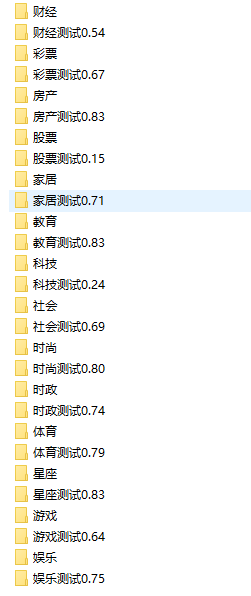

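Run result:

I wrote this for my own convenience, so it is semi-automatic all over and I did not pay much attention to process. Once you have read it, feel free to rework it into something handier.

First you need data. I crawled a bunch of news sites and collected articles for each category.

With the data in hand, we can write the code.

The first step is the training data, which in plain terms means processing the articles into the format we want.

The first piece of code reads the articles, segments them with jieba, and saves the result: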

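import os

import jieba

file_dir = r'D:\新闻数据\娱乐'
out_path = r'D:\处理新闻数据\娱乐.txt'

# Stop words are words that carry no meaning for classification.
# If you have no stopwords.txt, download one online.
# Loaded once, into a set, so the membership test below is fast.
with open('stopwords.txt', 'r', encoding='utf-8') as f:
    stopwords = {line.strip() for line in f}

# Open the output file once, in append mode, instead of once per word.
with open(out_path, 'a', encoding='utf-8') as out:
    for root, dirs, files in os.walk(file_dir):
        # root: current directory path
        # dirs: sub-directories of root
        # files: non-directory files in root
        for name in files:
            path = os.path.join(root, name)
            print(path)
            try:
                with open(path, 'r', encoding='utf-8') as f:
                    content = f.read().replace('\n', '')
            except (OSError, UnicodeDecodeError) as e:
                # Skip unreadable files, but report them instead of failing silently.
                print('skipped', path, e)
                continue
            # Full mode: emit every word jieba can find in the text.
            for word in jieba.cut(content, cut_all=True):
                # Drop one-character words, which carry little meaning
                # (keeping them is fine too), and drop stop words.
                if len(word) >= 2 and word not in stopwords:
                    out.write(word + ',')
                    print(word)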

Don't forget to create the folder for the processed data yourself. I put mine in 'D:\处理新闻数据\娱乐.txt'.
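If you would rather not create the folder by hand, the script can do it before writing. A minimal sketch; `ensure_output_dir` is a hypothetical helper name, not part of the script above:

```python
import os


def ensure_output_dir(out_path):
    """Create the parent folder of out_path if it does not exist yet.

    Hypothetical helper for illustration; returns the folder path.
    """
    folder = os.path.dirname(out_path)
    if folder:
        # exist_ok=True makes this safe to call on every run.
        os.makedirs(folder, exist_ok=True)
    return folder
```

Calling it with the output path once, before the output file is opened, should be enough.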

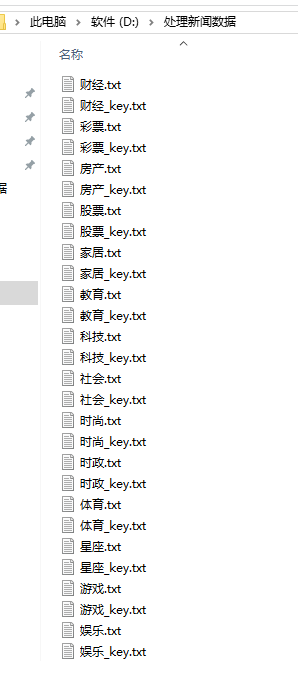

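Then... heh, it's semi-automatic, remember: edit the code above, swapping 娱乐 (entertainment) for 财经 (finance), 彩票 (lottery), 房产 (real estate) and so on, and change the output path to match. The screenshot below shows my folder after processing. Ignore the files ending in _key for now; we have not reached that step yet, so your folder will not have them.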

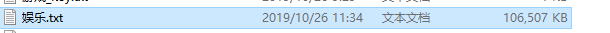

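Open 娱乐.txt and it looks like the picture below. This file holds the segmented words of every entertainment article we pulled from the 娱乐 folder. One thing to watch: don't use too many articles, or 娱乐.txt gets very large. Mine is about this size; any bigger and the program runs out of memory when it opens the file later. So don't crawl too many articles. A few thousand is enough.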
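If the file does grow too large to read in one go, one way around it is to count words chunk by chunk instead of reading the whole file at once. A rough sketch, assuming the comma-separated format written above; `count_words_streaming` and `chunk_size` are hypothetical names, and memory use still grows with the number of distinct words:

```python
from collections import Counter


def count_words_streaming(path, chunk_size=1 << 20, encoding='utf-8'):
    """Count comma-separated words without loading the whole file at once.

    Hypothetical helper for illustration, not part of the original script.
    """
    counts = Counter()
    leftover = ''  # partial word cut off at the end of the previous chunk
    with open(path, encoding=encoding) as f:
        while True:
            chunk = f.read(chunk_size)  # reads at most chunk_size characters
            if not chunk:
                break
            parts = (leftover + chunk).split(',')
            leftover = parts.pop()  # the last piece may be an incomplete word
            counts.update(p for p in parts if p)
    if leftover:
        counts[leftover] += 1
    return counts
```

The result is a `Counter`, so the most frequent words can be read from it with `most_common`.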

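At this point all the entertainment articles are segmented and stored in a single txt file.

Some of you will say: I don't want to edit and rerun for every folder, that's a pain.

Fine, here you go:

Be ready for your CPU to max out. Things will get laggy; then again, my computer is junk.

If that worries you, run fewer at a time. I wrote six; you can do three.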

import os
from multiprocessing import Process

import jieba

SRC_DIR = r'D:\新闻数据'
OUT_DIR = r'D:\处理新闻数据'
# One process per category; list fewer categories to run fewer at a time.
CATEGORIES = ['社会', '时尚', '时政', '体育', '星座', '游戏']


def load_stopwords():
    """Read stopwords.txt into a set for fast membership tests."""
    with open('stopwords.txt', 'r', encoding='utf-8') as f:
        return {line.strip() for line in f}


def process_category(category):
    """Segment every article under SRC_DIR/<category> into OUT_DIR/<category>.txt."""
    file_dir = os.path.join(SRC_DIR, category)
    out_path = os.path.join(OUT_DIR, category + '.txt')
    stopwords = load_stopwords()
    with open(out_path, 'a', encoding='utf-8') as out:
        for root, dirs, files in os.walk(file_dir):
            # root: current directory path
            # dirs: sub-directories of root
            # files: non-directory files in root
            for num, name in enumerate(files, start=1):
                print(file_dir, num)  # progress: files seen in this directory
                path = os.path.join(root, name)
                try:
                    with open(path, 'r', encoding='utf-8') as f:
                        content = f.read().replace('\n', '')
                except (OSError, UnicodeDecodeError) as e:
                    # Skip unreadable files, but report them.
                    print('skipped', path, e)
                    continue
                # Full mode
                for word in jieba.cut(content, cut_all=True):
                    if len(word) >= 2 and word not in stopwords:
                        out.write(word + ',')


if __name__ == '__main__':
    processes = [Process(target=process_category, args=(c,)) for c in CATEGORIES]
    # Start all the worker processes.
    for p in processes:
        p.start()
    # Wait for every worker to finish before the main process continues.
    for p in processes:
        p.join()
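If six processes at once is too much for your machine, the workers can also be started a few at a time. A minimal sketch of that idea; `chunked` and `run_in_batches` are hypothetical helpers, and each job is assumed to be a `(function, args)` tuple:

```python
from multiprocessing import Process


def chunked(items, size):
    """Split items into consecutive groups of at most `size` elements."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def run_in_batches(jobs, size):
    """Run (function, args) jobs in separate processes, `size` at a time.

    Hypothetical helper for illustration, not part of the original script.
    """
    for batch in chunked(jobs, size):
        procs = [Process(target=func, args=args) for func, args in batch]
        for p in procs:
            p.start()
        for p in procs:
            p.join()  # finish this batch before starting the next one
```

Call `run_in_batches` from under the `if __name__ == '__main__':` guard, with one job per category and `size` set to however many processes your machine can handle.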

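Now your 'D:\处理新闻数据' folder holds six files, one per category.

OK, article processing is done.

On to the next stage: analysing the txt files produced in stage one. Each txt holds the segmented words of the articles in one category. If you have 10 entertainment articles, for example, 娱乐.txt holds the words of those 10 articles. So by counting which words occur most often in 娱乐.txt, we learn which words entertainment articles tend to use. To make them easy to refer to, let's call them entertainment words (a name I made up). We extract those words and save them. Later, when a new article comes along, we segment it and compare its words with the saved entertainment words. If many of its words are entertainment words, we call it an entertainment article.

That was a long paragraph. Read it a few times if it does not sink in, because it is the core idea behind all of this code.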
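The idea can be sketched in a few lines on toy data. The keyword sets and the article below are made up for illustration, and `score_article` and `guess_category` are hypothetical names:

```python
def score_article(words, category_keywords):
    """Count, per category, how many of the article's words are category keywords."""
    return {
        category: sum(1 for w in words if w in keywords)
        for category, keywords in category_keywords.items()
    }


def guess_category(words, category_keywords):
    """Return the category with the most keyword hits, or None if nothing matched."""
    scores = score_article(words, category_keywords)
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


# Toy keyword sets, made up for illustration only.
toy_keywords = {
    '娱乐': {'明星', '电影', '导演'},
    '体育': {'比赛', '球队', '冠军'},
}
# An already-segmented toy article.
article_words = ['明星', '出席', '电影', '首映', '导演', '比赛']

print(score_article(article_words, toy_keywords))   # {'娱乐': 3, '体育': 1}
print(guess_category(article_words, toy_keywords))  # 娱乐
```

Three of the article's words are entertainment words and only one is a sports word, so the guess is 娱乐.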

Once that makes sense, here is the code:

from collections import Counter

# Load the segmented words from 娱乐.txt.
with open(r'D:\处理新闻数据\娱乐.txt', encoding='utf8') as f:
    content = f.read()

# The file ends with a trailing comma, so drop the last character before splitting.
data = content[:-1].split(',')

# Count how many times each word occurs.
count = Counter(data)

# Take the 35 most frequent words as (word, count) pairs, most frequent first.
# This yields at most 35 words, even when several words share the same count.
top_words = count.most_common(35)

# These are the 35 largest counts, i.e. how often the top 35 words occur.
print([n for word, n in top_words])

# The counts alone are not enough; we want to know which 35 words they are.
# Mode 'w' overwrites the key file, so rerunning with a different number
# does not pile up keywords from earlier runs.
with open(r'D:\处理新闻数据\娱乐_key.txt', 'w', encoding='utf-8') as f:
    for word, n in top_words:
        f.write(word + ',')
        print(word)

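Now we have the 35 most frequent words across all those entertainment articles, stored in another txt file called 娱乐_key.txt. The 35 is just my choice, not a fixed value; print the list a few times and pick the number that fits best. Take too many and the entertainment words become less precise. Take too few and there are fewer chances to match when we compare against other articles. For example, say you keep a single word, '明星' (celebrity). You then match it against another entertainment article that happens never to mention 明星, and you conclude the article is not an entertainment article. That would be a mistake.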
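To see the trade-off, it helps to print the keyword list for a few values of N. A small sketch on made-up words; `top_keywords` is a hypothetical helper built on `collections.Counter`:

```python
from collections import Counter


def top_keywords(words, n):
    """Return the n most frequent words, most frequent first."""
    return [word for word, _ in Counter(words).most_common(n)]


# Made-up word list for illustration: three topical words, two generic ones.
toy_words = ['明星'] * 5 + ['电影'] * 4 + ['导演'] * 3 + ['今天'] * 2 + ['记者'] * 2

for n in (1, 3, 5):
    print(n, top_keywords(toy_words, n))
```

With N = 1 only 明星 is kept, so an article that never mentions it gets no hits. With N = 5 generic words such as 今天 (today) and 记者 (reporter) creep in, and those would match articles of any category.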

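Sharp-eyed (lazy) readers will have noticed that the code above still has to be run once per category, editing the file name each time, to generate each key file.

Fine, here you go. It's the same multi-process trick as before: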

import os
from collections import Counter
from multiprocessing import Process

OUT_DIR = r'D:\处理新闻数据'
# Number of keywords to keep for each category.
TOP_N = {'社会': 20, '时尚': 35, '时政': 35, '体育': 35, '星座': 35, '游戏': 35}


def extract_keywords(category, top_n):
    """Write the top_n most frequent words of <category>.txt to <category>_key.txt."""
    with open(os.path.join(OUT_DIR, category + '.txt'), encoding='utf8') as f:
        content = f.read()
    # Drop the trailing comma before splitting.
    data = content[:-1].split(',')
    # Count how often each word occurs.
    count = Counter(data)
    # The top_n most frequent words as (word, count) pairs, most frequent first.
    top_words = count.most_common(top_n)
    print([n for word, n in top_words])
    # Mode 'w' overwrites the key file, so reruns do not pile up old keywords.
    with open(os.path.join(OUT_DIR, category + '_key.txt'), 'w', encoding='utf-8') as f:
        for word, n in top_words:
            f.write(word + ',')
            print(word)


if __name__ == '__main__':
    processes = [Process(target=extract_keywords, args=(c, n)) for c, n in TOP_N.items()]
    # Start all the worker processes.
    for p in processes:
        p.start()
    # Wait for every worker to finish before the main process continues.
    for p in processes:
        p.join()

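That wraps up stage two: extracting the entertainment words, finance words, sports words and so on. Next we take an article, chop it up (segment it, that is), compare it against each of our word lists, and guess which category it belongs to.

Here is the code:

Add or remove categories to match your own. Then copy articles of various categories from news sites into 测试.txt, run the script, and see whether it can guess the category of what you pasted.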

import os

import jieba

KEY_DIR = r'D:\处理新闻数据'
# Add or remove categories to match your own data.
CATEGORIES = ['科技', '体育', '财经', '彩票', '房产', '股票', '家居',
              '教育', '社会', '时尚', '时政', '星座', '游戏', '娱乐']

# Read the article to classify.
with open('测试.txt', encoding='utf8') as f:
    data = f.read()

# Read the keyword list of every category.
keywords = {}
for name in CATEGORIES:
    with open(os.path.join(KEY_DIR, name + '_key.txt'), encoding='utf8') as f:
        keywords[name] = set(f.read()[:-1].split(','))

# For each category, the words of the test article that are also its keywords.
matched = {name: [] for name in CATEGORIES}

# Load the stop words.
with open('stopwords.txt', 'r', encoding='utf-8') as f:
    stopwords = {line.strip() for line in f}

# Segment the test article and, for every word, record the categories
# whose keyword file contains it.
for x in jieba.cut(data, cut_all=True):
    if len(x) >= 2 and x not in stopwords:
        for name in CATEGORIES:
            if x in keywords[name]:
                print(x, '在' + name)  # "<word> is in <category>"
                matched[name].append(x)

# len(matched[name]) tells us how many words of the test article relate to
# that category; collect the counts as category -> count pairs.
dic1 = {name: len(words) for name, words in matched.items()}
print(dic1)

# The category with the largest count matched the most words in the test
# article, so we guess the article belongs to it. Tied categories are all
# printed; if nothing matched at all, nothing is printed.
best = max(dic1.values())
for key, value in dic1.items():
    if value == best and value != 0:
        print(key, value)
        print('这篇文章分类应该是' + key)  # "this article should be classified as <category>"

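Run results:

First, paste in a technology article.

Then an entertainment one.

In my tests the results were decent.

Which training articles you use, and how many, affects the final predictions. On my data, prediction accuracy for the stock and technology categories is much lower.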
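To put numbers on that, you can label a handful of test articles by hand and compute accuracy per category. A minimal sketch; `per_category_accuracy` is a hypothetical helper and the labels below are made up, not results from my data:

```python
from collections import defaultdict


def per_category_accuracy(true_labels, predicted_labels):
    """Return {category: fraction of its articles that were predicted correctly}."""
    total = defaultdict(int)
    correct = defaultdict(int)
    for truth, guess in zip(true_labels, predicted_labels):
        total[truth] += 1
        if guess == truth:
            correct[truth] += 1
    return {category: correct[category] / total[category] for category in total}


# Made-up labels for illustration only.
truth = ['科技', '科技', '娱乐', '娱乐', '股票', '股票']
guess = ['科技', '股票', '娱乐', '娱乐', '科技', '股票']
print(per_category_accuracy(truth, guess))  # {'科技': 0.5, '娱乐': 1.0, '股票': 0.5}
```

Categories that score low here are the ones whose keyword lists or training articles are worth revisiting first.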

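Time to clock off. Tomorrow I'll post my notes on fastText so we can learn together.

Suggestions and opinions are welcome in the comments. Criticism and questions are welcome too.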