1. http://www.swazzy.com/docs/hadoop/index.php可以输入hadoop类, 查看它的UML关系图.

2. https://issues.apache.org/jira/browse/MAPREDUCE-279 Hadoop Map-Reduce 2.0(Yarn)的架构文档,详细说明等.

2013.07.14 LeaseManager--文件写入时中断, 各数据节点需要进行那些操作, 找到写入数据最少的节点, 提交到NameNode, 详细看类说明.

2013.08.08 HDFS portion of ZK-based FailoverController 基于zookeeper的自切换Namenode的active与standy状态, https://issues.apache.org/jira/browse/HDFS-2185 有详细的设计文档.这里有一篇翻译文档, http://blog.csdn.net/chenpingbupt/article/details/7922042, 角色像下面:

个人理解: 整个流程就像控制多个坦克打仗,攻击一个目标有一辆坦克发炮就行, 如果接收指令的坦克没发炮, 那么就要由其它备用坦克来打,HealthMonitor就像是坦克操作员, 负责检查坦克是不是可以打炮, ActiveStandbyElector就像时刻将坦克现状发送给指挥系统, 接收系统指令, 把它转给指挥官ZKFailoverController(4.3版本为abstract类, 具体实现DFSZKFailoverController与MRZKFailoverController), 由指挥官来决定来发炮与否及将结果或等待状态由ActiveStandbyElector回馈给指挥系统.

2013.08.09 INodeDirectory中children使用new ArrayList<INode>(5), 因为INode实现Comparable<byte[]>接口, compareTo(byte[] .)对比INode的name(getBytes("UTF8")), 向dir下加入增加文件时, 调用INodeDirectory.addChild()方法, 利用Collections中的static <T> int binarySearch(List<? extends Comparable<? super T>> list, T key) 查找要插入的下标, binarySearch的前提是list已经sort过.

推导:name名称不宜长, 目录下内容不宜多, 查找特定目录下耗时log(o).

疑问:INodeDirectory child为什么用List而不用Set呢?

2013.08.10

Understanding Hadoop Clusters and the Network:

http://bradhedlund.com/2011/09/10/understanding-hadoop-clusters-and-the-network/从将文件写入到hdfs开始, 准备写文件(存放数据应该考虑的拓扑结构(Rack Awareness), 写文件过程中, 写完后, Job 运行Map/Reduce, 因为新增服务器致使的数据不均衡及均衡工具.

Writing Files to HDFS,

,

,

Hadoop Rack Awareness,

,

,

Preparing HDFS Writes,

,

,

HDFS Write Pipeline,

,

,

HDFS Pipeline Write Success,

,

,

HDFS Multi-block Replication Pipeline,

,

,

NameNode Heartbeats,

,

,

Re-replicating Missing Replicas(有数据复本丢失时),

,

,

Client Read from HDFS,

,

,

Data Node reads from HDFS,

,

,

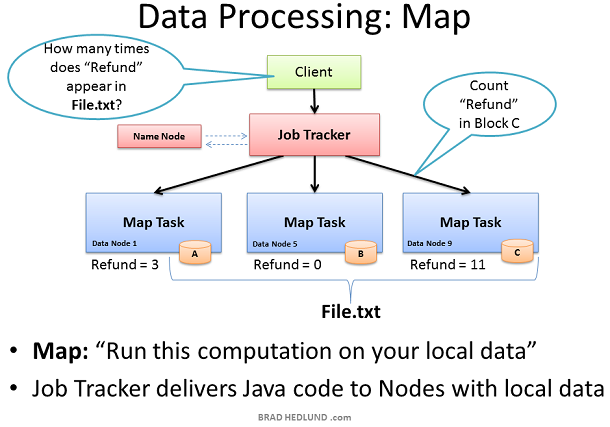

Map Task,

,

,

What if Map Task data isn’t local?

,

,

Reduce Task computes data received from Map Tasks,

,

,

Unbalanced Hadoop Cluster,

,

,

Hadoop Cluster Balancer,

,

,