## Reaching the ETL stage

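Run the ETL: clean the raw data, then load it into HBase. Start the cluster services first:

1. ZooKeeper: `zkServer.sh start`
2. HDFS: `start-dfs.sh`
3. YARN with ResourceManager HA: as root on node1, run `./shells/start-yarn-ha.sh`, a script containing:
   - `start-yarn.sh`
   - `ssh root@node3 "$HADOOP_HOME/sbin/yarn-daemon.sh start resourcemanager"`
   - `ssh root@node4 "$HADOOP_HOME/sbin/yarn-daemon.sh start resourcemanager"`
4. HBase: `start-hbase.sh`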
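The startup order above can be captured in a small dry-run helper. This is a hypothetical sketch and is not part of the project. The class name `ClusterStartupPlan` is made up. The helper only builds and prints the commands from the list and executes nothing. The comments explaining why each service starts before the next describe typical Hadoop/HBase dependencies; these notes do not state them.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical dry-run helper: builds the startup commands in the order used
 * in the notes and prints them. Nothing is executed.
 */
public class ClusterStartupPlan {

    static List<String> plan(String[] rmHosts) {
        List<String> cmds = new ArrayList<>();
        // ZooKeeper first: ResourceManager HA and HBase typically coordinate through it
        cmds.add("zkServer.sh start");
        // HDFS next: HBase normally keeps its data (hbase.rootdir) in HDFS
        cmds.add("start-dfs.sh");
        // YARN, started from node1
        cmds.add("start-yarn.sh");
        // ResourceManagers started remotely on each HA host
        for (String host : rmHosts) {
            cmds.add("ssh root@" + host
                    + " \"$HADOOP_HOME/sbin/yarn-daemon.sh start resourcemanager\"");
        }
        // HBase last, once ZooKeeper and HDFS are up
        cmds.add("start-hbase.sh");
        return cmds;
    }

    public static void main(String[] args) {
        // Hosts taken from the notes: node3 and node4 run the ResourceManagers
        for (String cmd : plan(new String[] {"node3", "node4"})) {
            System.out.println(cmd);
        }
    }
}
```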

------------------- Create the HBase table

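Open the HBase shell with `hbase shell`, then create the table `eventlog` with a single column family `log`:

`hbase(main):001:0> create 'eventlog','log'`

After running the program below, scan the table (for example `scan 'eventlog'`) to confirm that it now holds the cleaned (ETL'd) data.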

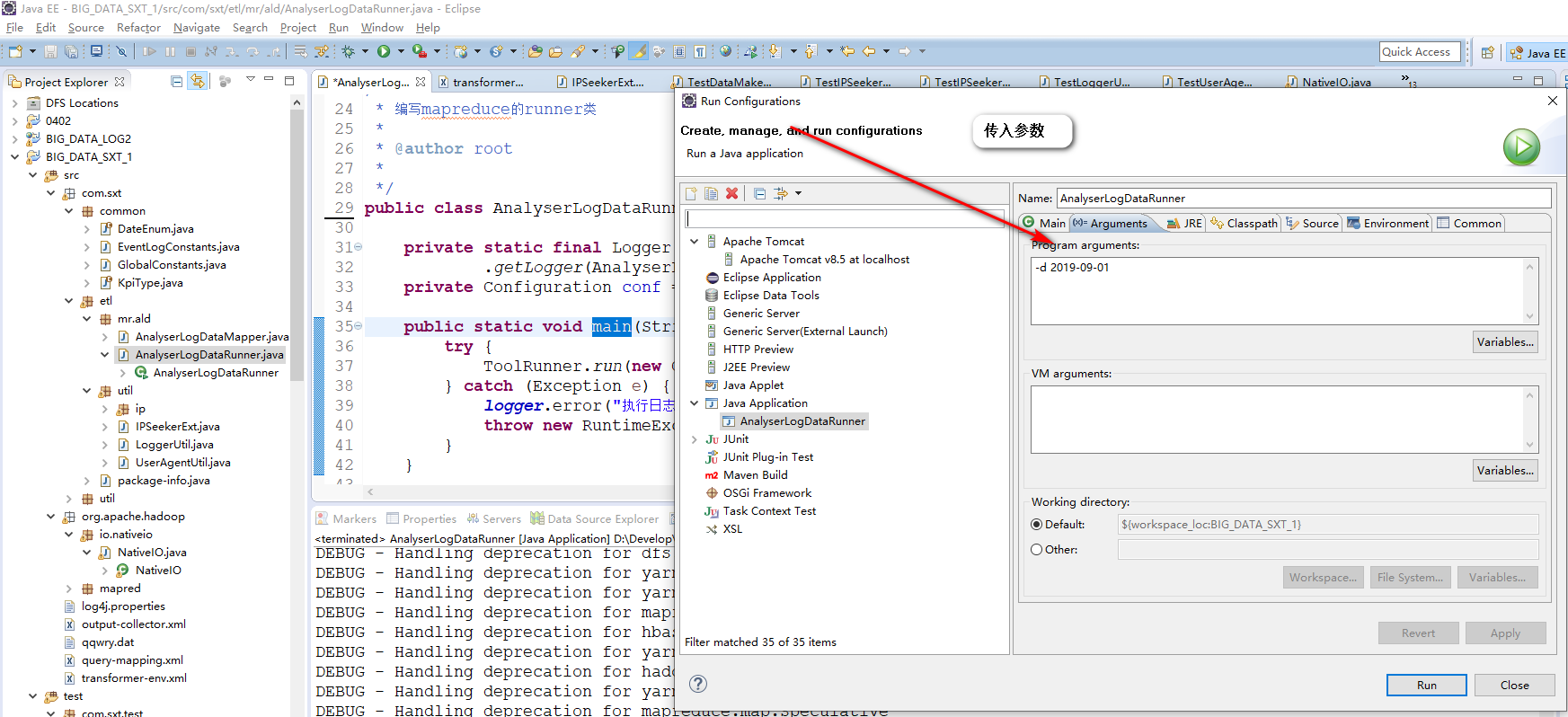

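------------------------------ Run the project BIG_DATA_SXT_1 after changing its configuration as follows:

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.util.Tool;
    import org.apache.hadoop.util.ToolRunner;
    import org.apache.log4j.Logger;

    public class AnalyserLogDataRunner implements Tool {
        private static final Logger logger = Logger.getLogger(AnalyserLogDataRunner.class);
        private Configuration conf = null;

        public static void main(String[] args) {
            try {
                ToolRunner.run(new Configuration(), new AnalyserLogDataRunner(), args);
            } catch (Exception e) {
                logger.error("Log-parsing job failed", e);
                throw new RuntimeException(e);
            }
        }

        @Override
        public void setConf(Configuration conf) {
            // HDFS NameNode address of the cluster
            conf.set("fs.defaultFS", "hdfs://node1:8020");
            // conf.set("yarn.resourcemanager.hostname", "node3");
            // ZooKeeper quorum the HBase client connects to
            conf.set("hbase.zookeeper.quorum", "node2,node3,node4");
            // Adds HBase's default/site settings; values set above take precedence
            this.conf = HBaseConfiguration.create(conf);
        }

        // ....... (rest of the class omitted, including getConf() and run())
    }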
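Here is why the hardcoded values in `setConf()` matter. `ToolRunner.run()` first applies generic options such as `-D key=value` to the `Configuration` and only then calls `setConf()`, so anything set inside `setConf()` overrides the same key given on the command line. The sketch below mimics that order with a plain `Map`. It is a simplified stand-in, not the Hadoop API, and `ConfPrecedenceSketch` and its methods are hypothetical names. It also handles only the spaced `-D key=value` form.

```java
import java.util.HashMap;
import java.util.Map;

/**
 * Simplified stand-in (NOT the Hadoop API) for the order in which ToolRunner
 * prepares a Tool: generic -D options first, then Tool.setConf().
 */
public class ConfPrecedenceSketch {

    // Mimics the generic-options step: copy "-D key=value" pairs into the config.
    static void applyGenericOptions(Map<String, String> conf, String[] args) {
        for (int i = 0; i < args.length - 1; i++) {
            if ("-D".equals(args[i])) {
                String[] kv = args[i + 1].split("=", 2);
                if (kv.length == 2) {
                    conf.put(kv[0], kv[1]);
                }
                i++; // skip the key=value token we just consumed
            }
        }
    }

    // Mimics AnalyserLogDataRunner.setConf(): hardcoded cluster addresses.
    static Map<String, String> setConf(Map<String, String> conf) {
        conf.put("fs.defaultFS", "hdfs://node1:8020");
        conf.put("hbase.zookeeper.quorum", "node2,node3,node4");
        return conf;
    }

    // Same order as ToolRunner: options are applied first, setConf() runs afterwards.
    static Map<String, String> run(String[] args) {
        Map<String, String> conf = new HashMap<>();
        applyGenericOptions(conf, args);
        return setConf(conf);
    }

    public static void main(String[] args) {
        Map<String, String> conf = run(new String[] {
            "-D", "fs.defaultFS=hdfs://other:8020",
            "-D", "mapreduce.job.queuename=etl"
        });
        System.out.println(conf.get("fs.defaultFS"));
        System.out.println(conf.get("mapreduce.job.queuename"));
    }
}
```

Running `main` prints `hdfs://node1:8020` and then `etl`. The `-D fs.defaultFS` value is overwritten by `setConf()`, while an unrelated `-D` property survives.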

------------- Next section: the data above is still not sufficient (too few distinct IPs and dates), so a data-generator class is needed: `/BIG_DATA_SXT_1/test/com/sxt/test/TestDataMaker.java`
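As a rough illustration of what such a generator needs to do, here is a hypothetical sketch that emits tab-separated (IP, date) pairs with many distinct values. It is not the contents of `TestDataMaker.java`. The class name, output layout, IP ranges, and date window are all assumptions. A fixed seed keeps the output reproducible.

```java
import java.time.LocalDate;
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

/**
 * Hypothetical generator sketch (not TestDataMaker.java): random IPv4
 * addresses and dates, so the test data covers many distinct values.
 */
public class RandomLogFieldsSketch {

    private final Random random;

    RandomLogFieldsSketch(long seed) {
        this.random = new Random(seed); // fixed seed -> reproducible output
    }

    // First octet 1..223 skips 0.x and the multicast/reserved ranges (224+);
    // last octet 1..254 skips .0 and .255.
    String randomIp() {
        return (1 + random.nextInt(223)) + "."
                + random.nextInt(256) + "."
                + random.nextInt(256) + "."
                + (1 + random.nextInt(254));
    }

    // Random day in [start, start + days).
    LocalDate randomDate(LocalDate start, int days) {
        return start.plusDays(random.nextInt(days));
    }

    // n lines of "ip<TAB>yyyy-MM-dd" (LocalDate.toString() is ISO-8601).
    List<String> lines(int n, LocalDate start, int days) {
        List<String> out = new ArrayList<>(n);
        for (int i = 0; i < n; i++) {
            out.add(randomIp() + "\t" + randomDate(start, days));
        }
        return out;
    }

    public static void main(String[] args) {
        RandomLogFieldsSketch maker = new RandomLogFieldsSketch(42L);
        // Arbitrary 30-day window chosen for illustration
        for (String line : maker.lines(5, LocalDate.of(2017, 1, 1), 30)) {
            System.out.println(line);
        }
    }
}
```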