Cost function. For the underlying theory, see:

https://www.jianshu.com/p/4cfb4f734358
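As a quick reference for the code that follows, here is the standard cross-entropy cost for logistic regression and the update rule it leads to. This is a sketch under the assumption that the linked article uses the usual formulation; its notation may differ. Here $m$ is the number of samples, $x^{(i)}$ includes the bias term $x_0 = 1$, $X$ stacks the samples as rows, and $\alpha$ is the learning rate.

$$
h_w(x) = \frac{1}{1 + e^{-w^{T}x}}
$$

$$
J(w) = -\frac{1}{m}\sum_{i=1}^{m}\Big[\,y^{(i)}\log h_w(x^{(i)}) + \big(1 - y^{(i)}\big)\log\big(1 - h_w(x^{(i)})\big)\Big]
$$

$$
\nabla_w J(w) = \frac{1}{m}\,X^{T}\big(h - y\big)
$$

$$
w \leftarrow w - \alpha\, m\, \nabla_w J(w) = w + \alpha\, X^{T}\big(y - h\big)
$$

The last line matches the update `weights + alpha * x2 * error` in the code, where the factor $1/m$ is absorbed into $\alpha$.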

Code:

    import re

    import matplotlib.pyplot as plt
    import numpy as np
    import pandas as pd  # only needed by the commented-out to_csv helper
    from numpy import exp


    def data():
        # Read data.txt and convert each line into a list of floats.
        rows = []
        with open('data.txt') as f:
            for each_line in f:
                each_line = each_line.strip('\n')
                res = re.split(" ", each_line)
                res = list(filter(None, res))  # drop empty strings left by repeated spaces
                res = list(map(float, res))
                rows.append(res)
        for row in rows:
            row.insert(0, 1)  # prepend the bias term x0 = 1
        return rows


    def sin_cos(data, weights):
        # Scatter-plot both classes and draw the decision boundary.
        x0, y0, x1, y1 = [], [], [], []
        for i in data:
            if i[3] == 0:
                x0.append(i[1])
                y0.append(i[2])
            else:
                x1.append(i[1])
                y1.append(i[2])
        fig = plt.figure()
        ax = fig.add_subplot(111)
        ax.scatter(x0, y0, marker='+', label='0', s=40, c='r')
        ax.scatter(x1, y1, marker='*', label='1', s=40, c='b')
        x = np.arange(-3, 3, 0.1)
        # Boundary: w0 + w1*x1 + w2*x2 = 0 (weights is a 3x1 matrix)
        y = (-weights[0, 0] - weights[1, 0] * x) / weights[2, 0]
        ax.plot(x, y)
        plt.xlabel('X1')
        plt.ylabel('X2')
        plt.savefig('test.png')
        plt.show()


    def sigmoid(inX):
        # Logistic function
        return 1.0 / (1 + exp(-inX))


    # def to_csv(data):
    #     name = ['x1', 'x2', 'y']
    #     test = pd.DataFrame(columns=name, data=data)
    #     test.to_csv('data.csv', encoding='utf-8')


    def tiduxiajiang_sigmoid(data):
        # Batch gradient descent for logistic regression.
        x = []
        y = []
        for i in range(len(data)):
            x.append(data[i][:-1])   # bias, x1, x2
            y.append(data[i][-1:])   # label
        alpha = 0.002
        maxcycle = 100
        x = np.asmatrix(x)  # convert to a matrix (np.mat was removed in NumPy 2.0)
        y = np.asmatrix(y)
        x2 = x.T  # transpose of x (not its inverse)
        weights = np.asmatrix([[1.0], [1.0], [1.0]])
        for i in range(maxcycle):
            h = sigmoid(x * weights)
            error = y - h
            weights = weights + alpha * x2 * error
            print(sum(error))  # watch whether training converges
        return weights


    dataset = data()  # renamed so the variable no longer shadows data()
    weights = tiduxiajiang_sigmoid(dataset)
    sin_cos(dataset, weights)
    print('\n')
    print(weights)
    # to_csv(dataset)

Output of `sum(error)` at each iteration:

    [[-36.41425331]]
    [[-12.72376078]]
    [[33.81527249]]
    [[22.76406708]]
    [[13.06316319]]
    [[18.43125645]]
    [[13.940586]]
    [[16.64375254]]
    [[14.0792666]]
    [[15.48731269]]
    [[13.90872727]]
    [[14.62827295]]
    [[13.59898126]]
    [[13.93720427]]
    [[13.23050463]]
    [[13.35316688]]
    [[12.84395627]]
    [[12.84416803]]
    [[12.46028295]]
    [[12.3918111]]
    [[12.09008779]]
    [[11.98454135]]
    [[11.73835456]]
    [[11.61447392]]
    [[11.4069633]]
    [[11.27583756]]
    [[11.09608423]]
    [[10.96417886]]
    [[10.80497149]]
    [[10.67593363]]
    [[10.53242193]]
    [[10.40818021]]
    [[10.27704098]]
    [[10.15848557]]
    [[10.03739503]]
    [[9.92480135]]
    [[9.81209603]]
    [[9.70538874]]
    [[9.59984542]]
    [[9.49876158]]
    [[9.39945381]]
    [[9.30364189]]
    [[9.20984624]]
    [[9.11892452]]
    [[9.03005934]]
    [[8.94364882]]
    [[8.85923404]]
    [[8.77697561]]
    [[8.6966063]]
    [[8.61816858]]
    [[8.54149713]]
    [[8.46657885]]
    [[8.39330291]]
    [[8.32163239]]
    [[8.25148629]]
    [[8.18281944]]
    [[8.11556799]]
    [[8.04968572]]
    [[7.98511945]]
    [[7.92182501]]
    [[7.85975645]]
    [[7.79887283]]
    [[7.73913354]]
    [[7.68050112]]
    [[7.62293922]]
    [[7.5664137]]
    [[7.51089176]]
    [[7.45634232]]
    [[7.40273562]]
    [[7.35004333]]
    [[7.29823835]]
    [[7.2472948]]
    [[7.19718792]]
    [[7.14789401]]
    [[7.09939038]]
    [[7.0516553]]
    [[7.0046679]]
    [[6.9584082]]
    [[6.91285699]]
    [[6.86799582]]
    [[6.82380698]]
    [[6.78027341]]
    [[6.73737871]]
    [[6.6951071]]
    [[6.65344337]]
    [[6.61237286]]
    [[6.57188145]]
    [[6.53195552]]
    [[6.4925819]]
    [[6.45374791]]
    [[6.41544128]]
    [[6.37765016]]
    [[6.34036308]]
    [[6.30356898]]
    [[6.26725712]]
    [[6.23141712]]
    [[6.19603895]]
    [[6.16111286]]
    [[6.12662941]]
    [[6.09257947]]

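After a few oscillating iterations at the start, the summed error decreases steadily (from about 13.9 down to about 6.1), so training is converging, although it has not fully converged within 100 iterations.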
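The sum of signed errors is only a rough convergence signal, because positive and negative residuals can cancel each other. A more direct check is to track the cross-entropy cost itself. The following is a minimal sketch of that idea using plain NumPy arrays instead of matrices; the names `cross_entropy` and `train_with_cost` and the four-row toy data set are illustrative assumptions, not part of the original code.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def cross_entropy(X, y, w, eps=1e-12):
    """Mean cross-entropy cost J(w) for logistic regression."""
    h = np.clip(sigmoid(X @ w), eps, 1.0 - eps)  # clip to avoid log(0)
    return float(-np.mean(y * np.log(h) + (1.0 - y) * np.log(1.0 - h)))


def train_with_cost(X, y, alpha=0.002, max_cycle=100):
    """Same update rule as the original loop, but records the cost per iteration."""
    w = np.ones((X.shape[1], 1))
    costs = []
    for _ in range(max_cycle):
        costs.append(cross_entropy(X, y, w))
        w = w + alpha * X.T @ (y - sigmoid(X @ w))
    return w, costs


# Hypothetical toy data: columns are bias, x1, x2; labels follow the
# same pattern as data.txt (large x2 -> class 0).
X_demo = np.array([[1.0, -1.0, 1.0],
                   [1.0, 0.5, 8.0],
                   [1.0, 1.0, 2.0],
                   [1.0, -0.5, 11.0]])
y_demo = np.array([[1.0], [0.0], [1.0], [0.0]])

w_demo, costs_demo = train_with_cost(X_demo, y_demo)
print(costs_demo[0], costs_demo[-1])
```

Plotting `costs_demo` against the iteration index gives a curve that should fall monotonically when the learning rate is small enough, which is easier to read than the signed error sum.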

Output of `weights`:

    [[ 2.78742697]
     [ 0.36340767]
     [-0.45020801]]
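To see what these weights mean in practice, the sketch below plugs them into the sigmoid to classify a point and solves for the decision boundary that the plotting function draws. The helper names `predict` and `boundary_x2` are hypothetical; the weights are copied from the output above, and the two test points are the first two rows of data.txt.

```python
import numpy as np

# Weights copied from the printed output: [bias, x1 coefficient, x2 coefficient]
weights = np.array([2.78742697, 0.36340767, -0.45020801])


def predict(x1, x2, w=weights):
    """Return (probability of class 1, predicted label) for one point."""
    z = w[0] + w[1] * x1 + w[2] * x2
    p = 1.0 / (1.0 + np.exp(-z))
    return p, int(p >= 0.5)


def boundary_x2(x1, w=weights):
    """x2 value on the decision boundary w0 + w1*x1 + w2*x2 = 0."""
    return (-w[0] - w[1] * x1) / w[2]


print(predict(-0.017612, 14.053064))  # first row of data.txt, labeled 0
print(predict(-1.395634, 4.662541))   # second row of data.txt, labeled 1
print(boundary_x2(0.0))               # where the boundary crosses x1 = 0
```

Points above the boundary line (larger x2) fall into class 0 and points below it into class 1, because the x2 coefficient is negative.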

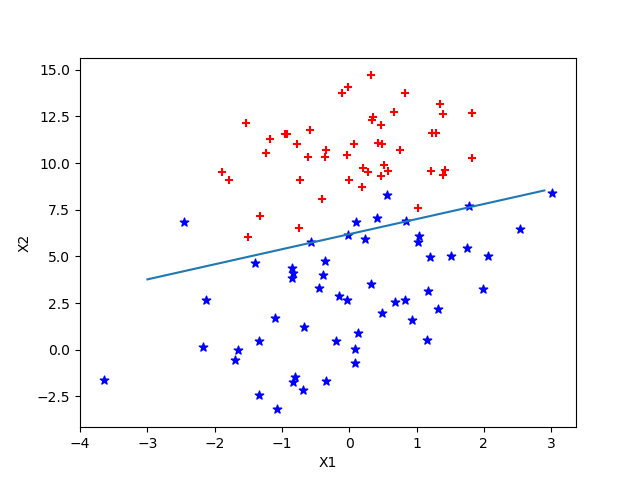

The resulting plot.

Format of data.txt (one sample per line: x1, x2, label):

    -0.017612 14.053064 0
    -1.395634 4.662541 1
    -0.752157 6.538620 0
    -1.322371 7.152853 0
    0.423363 11.054677 0
    0.406704 7.067335 1
    0.667394 12.741452 0
    -2.460150 6.866805 1
    0.569411 9.548755 0
    -0.026632 10.427743 0
    0.850433 6.920334 1
    1.347183 13.175500 0
    1.176813 3.167020 1
    -1.781871 9.097953 0
    -0.566606 5.749003 1
    0.931635 1.589505 1
    -0.024205 6.151823 1
    -0.036453 2.690988 1
    -0.196949 0.444165 1
    1.014459 5.754399 1
    1.985298 3.230619 1
    -1.693453 -0.557540 1
    -0.576525 11.778922 0
    -0.346811 -1.678730 1
    -2.124484 2.672471 1
    1.217916 9.597015 0
    -0.733928 9.098687 0
    -3.642001 -1.618087 1
    0.315985 3.523953 1
    1.416614 9.619232 0
    -0.386323 3.989286 1
    0.556921 8.294984 1
    1.224863 11.587360 0
    -1.347803 -2.406051 1
    1.196604 4.951851 1
    0.275221 9.543647 0
    0.470575 9.332488 0
    -1.889567 9.542662 0
    -1.527893 12.150579 0
    -1.185247 11.309318 0
    -0.445678 3.297303 1
    1.042222 6.105155 1
    -0.618787 10.320986 0
    1.152083 0.548467 1
    0.828534 2.676045 1
    -1.237728 10.549033 0
    -0.683565 -2.166125 1
    0.229456 5.921938 1
    -0.959885 11.555336 0
    0.492911 10.993324 0
    0.184992 8.721488 0
    -0.355715 10.325976 0
    -0.397822 8.058397 0
    0.824839 13.730343 0
    1.507278 5.027866 1
    0.099671 6.835839 1
    -0.344008 10.717485 0
    1.785928 7.718645 1
    -0.918801 11.560217 0
    -0.364009 4.747300 1
    -0.841722 4.119083 1
    0.490426 1.960539 1
    -0.007194 9.075792 0
    0.356107 12.447863 0
    0.342578 12.281162 0
    -0.810823 -1.466018 1
    2.530777 6.476801 1
    1.296683 11.607559 0
    0.475487 12.040035 0
    -0.783277 11.009725 0
    0.074798 11.023650 0
    -1.337472 0.468339 1
    -0.102781 13.763651 0
    -0.147324 2.874846 1
    0.518389 9.887035 0
    1.015399 7.571882 0
    -1.658086 -0.027255 1
    1.319944 2.171228 1
    2.056216 5.019981 1
    -0.851633 4.375691 1
    -1.510047 6.061992 0
    -1.076637 -3.181888 1
    1.821096 10.283990 0
    3.010150 8.401766 1
    -1.099458 1.688274 1
    -0.834872 -1.733869 1
    -0.846637 3.849075 1
    1.400102 12.628781 0
    1.752842 5.468166 1
    0.078557 0.059736 1
    0.089392 -0.715300 1
    1.825662 12.693808 0
    0.197445 9.744638 0
    0.126117 0.922311 1
    -0.679797 1.220530 1
    0.677983 2.556666 1
    0.761349 10.693862 0
    -2.168791 0.143632 1
    1.388610 9.341997 0
    0.317029 14.739025 0
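Because every line of the file is just three whitespace-separated numbers, the regex-based parser in the code above could probably be replaced with `np.loadtxt`. The sketch below is one possible way to do that, assuming the file really has one `x1 x2 label` sample per line; `load_data` and the temporary demo file are illustrative names, not part of the original code.

```python
import os
import tempfile

import numpy as np


def load_data(path):
    """Load 'x1 x2 label' rows and return (X with bias column, y as a column)."""
    raw = np.loadtxt(path, ndmin=2)            # whitespace-delimited floats
    X = np.column_stack([np.ones(len(raw)), raw[:, :2]])  # prepend bias x0 = 1
    y = raw[:, 2:].copy()                      # labels, shape (n, 1)
    return X, y


# Demo on a temporary file holding the first three rows of data.txt.
sample = (
    "-0.017612 14.053064 0\n"
    "-1.395634 4.662541 1\n"
    "-0.752157 6.538620 0\n"
)
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "data_demo.txt")
    with open(demo_path, "w") as f:
        f.write(sample)
    X_demo, y_demo = load_data(demo_path)

print(X_demo.shape, y_demo.ravel())
```

The returned arrays can be wrapped with `np.asmatrix` if the matrix-style `*` multiplication of the original training loop is kept, or used directly with the `@` operator.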