Copyright notice: this is an original article by the author. Reposting is welcome; please credit the source. Contact: [email protected]

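When a deep learning model is trained on a small dataset, it overfits easily. Two ways to counter this are augmenting the training data and adding a Dropout layer to the model.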

Augment the training data with random transformations:

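```python
from keras.preprocessing.image import ImageDataGenerator

# Augment the training images with random transformations
train_datagen = ImageDataGenerator(
    rescale=1. / 255,        # scale pixel values from [0, 255] to [0, 1]
    rotation_range=40,       # random rotation of up to 40 degrees
    width_shift_range=0.2,   # random horizontal shift, up to 20% of the width
    height_shift_range=0.2,  # random vertical shift, up to 20% of the height
    shear_range=0.2,         # random shear
    zoom_range=0.2,          # random zoom in [0.8, 1.2]
    horizontal_flip=True,    # randomly mirror images left-right
)
```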
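To make a few of these transforms concrete, here is a minimal numpy sketch covering only `rescale`, `width_shift_range` and `horizontal_flip`. The helper `augment` is hypothetical. It is not how `ImageDataGenerator` is implemented, and it skips rotation, shear, zoom and vertical shifts. Pixels shifted in from outside the image repeat the nearest edge column, which is assumed to resemble Keras' default `fill_mode='nearest'`.

```python
import numpy as np

def augment(image, rng, width_shift_range=0.2, horizontal_flip=True):
    """Rescale one HxWxC uint8 image to [0, 1], randomly shift it horizontally,
    and randomly mirror it left-right.

    Simplified illustration (hypothetical helper): no rotation, shear, zoom
    or vertical shift, and not the ImageDataGenerator implementation.
    """
    x = image.astype(np.float32) / 255.0            # rescale=1. / 255
    w = x.shape[1]
    max_shift = int(round(width_shift_range * w))   # 0.2 * 150 -> up to 30 pixels
    shift = int(rng.integers(-max_shift, max_shift + 1))
    # Output column j takes source column j - shift; columns that would come
    # from outside the image repeat the nearest edge column
    cols = np.clip(np.arange(w) - shift, 0, w - 1)
    x = x[:, cols, :]
    if horizontal_flip and rng.random() < 0.5:
        x = x[:, ::-1, :]                           # horizontal flip
    return x

rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(150, 150, 3)).astype(np.uint8)
out = augment(img, rng)
print(out.shape, float(out.min()) >= 0.0, float(out.max()) <= 1.0)  # (150, 150, 3) True True
```

Each call draws a new random shift and flip. As a result, the network rarely sees exactly the same 150x150 image twice, which is what makes augmentation useful against overfitting.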

Add a Dropout layer to the model:

```python
from keras import layers, models

model = models.Sequential()
# Conv: output 150-3+1 = 148 -> 148x148x32; params: 32*3*3*3+32 = 896
model.add(layers.Conv2D(32, (3, 3), activation='relu', input_shape=(150, 150, 3)))
# Pool: output 148/2 = 74 -> 74x74x32
model.add(layers.MaxPooling2D((2, 2)))
# Conv: output 74-3+1 = 72 -> 72x72x64; params: 64*3*3*32+64 = 18496
model.add(layers.Conv2D(64, (3, 3), activation='relu'))
# Pool: output 72/2 = 36 -> 36x36x64
model.add(layers.MaxPooling2D((2, 2)))
# Conv: output 36-3+1 = 34 -> 34x34x128; params: 128*3*3*64+128 = 73856
model.add(layers.Conv2D(128, (3, 3), activation='relu'))
# Pool: output 34/2 = 17 -> 17x17x128
model.add(layers.MaxPooling2D((2, 2)))
# Conv: output 17-3+1 = 15 -> 15x15x128; params: 128*3*3*128+128 = 147584
model.add(layers.Conv2D(128, (3, 3), activation='relu'))
# Pool: output 15/2 = 7 (rounded down) -> 7x7x128
model.add(layers.MaxPooling2D((2, 2)))
# Flatten to 1-D: 7*7*128 = 6272
model.add(layers.Flatten())
# Dropout: during training, each input is set to 0 with probability 0.5
model.add(layers.Dropout(0.5))
# Dense: params 6272*512+512 = 3211776
model.add(layers.Dense(512, activation='relu'))
# Output: params 512*1+1 = 513
model.add(layers.Dense(1, activation='sigmoid'))
```
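The shape and parameter arithmetic in the comments can be checked with a few lines of plain Python. This is a sketch that assumes Conv2D's default `'valid'` padding and MaxPooling2D's default flooring. The helper names are hypothetical.

```python
def conv_params(filters, kernel, in_channels):
    # one kernel x kernel x in_channels weight tensor per filter, plus one bias per filter
    return filters * kernel * kernel * in_channels + filters

def dense_params(units, inputs):
    # full weight matrix plus one bias per unit
    return units * inputs + units

def summarize(size=150, channels=3, conv_filters=(32, 64, 128, 128), kernel=3):
    total = 0
    for filters in conv_filters:
        total += conv_params(filters, kernel, channels)
        size = size - kernel + 1   # 'valid' 3x3 convolution
        size = size // 2           # 2x2 max pooling floors odd sizes: 15 -> 7
        channels = filters
    flat = size * size * channels  # Flatten; pooling and Dropout add no parameters
    total += dense_params(512, flat) + dense_params(1, 512)
    return size, flat, total

print(summarize())  # (7, 6272, 3453121)
```

Almost all of the roughly 3.45 million parameters (3,211,776) sit in the `Dense(512)` layer. That layer is fed by the flattened features, which the Dropout layer thins out during training.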

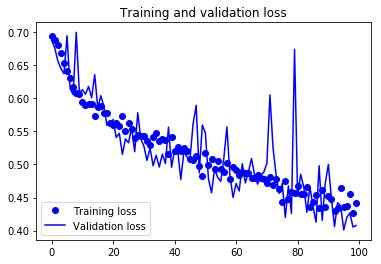
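`Dropout(0.5)` sits between `Flatten` and `Dense(512)`. On each training step it zeroes about half of the 6272 flattened features. Keras scales the remaining ones by `1 / (1 - rate)` and leaves inputs untouched at inference time. The following minimal numpy sketch of this "inverted dropout" is illustrative only; the function `dropout` is hypothetical, not the Keras layer.

```python
import numpy as np

def dropout(x, rate, rng, training=True):
    """Inverted dropout: during training each unit is zeroed with probability
    `rate` and survivors are scaled by 1 / (1 - rate); at inference the input
    passes through unchanged."""
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate
    return x * keep / (1.0 - rate)

rng = np.random.default_rng(42)
features = np.ones((1, 6272))        # stand-in for the flattened 7*7*128 features
train_out = dropout(features, 0.5, rng)
print(np.unique(train_out))          # [0. 2.]
print(train_out.mean())              # close to 1.0: expected activation is preserved
print(np.array_equal(dropout(features, 0.5, rng, training=False), features))  # True
```

Scaling during training keeps the expected value of each feature unchanged. That is why no correction is needed when the full network is used for prediction.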

Train for 100 epochs:

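```python
# Train for 100 epochs. fit_generator is deprecated in TensorFlow 2.1+,
# where model.fit accepts generators directly.
history = model.fit_generator(
    train_generator,
    steps_per_epoch=train_generator.samples // batch_size,
    epochs=100,
    validation_data=validation_generator,
    validation_steps=validation_generator.samples // batch_size)
```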
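`steps_per_epoch` and `validation_steps` are computed with floor division, so the final partial batch is not counted. Neither `batch_size` nor the training-set size appears in this section. The figures below (2000 images, `batch_size = 32`) are an assumed combination that happens to match the 62 steps per epoch in the log. The helper `steps_for` is hypothetical.

```python
import math

def steps_for(samples, batch_size, drop_remainder=True):
    """Number of batches for one pass over `samples` images.

    drop_remainder=True mirrors `samples // batch_size` used in the call,
    which leaves out the last partial batch; False rounds up to include it."""
    if drop_remainder:
        return samples // batch_size
    return math.ceil(samples / batch_size)

# Hypothetical sizes: 2000 training images, batch_size = 32
print(steps_for(2000, 32))                        # 62
print(steps_for(2000, 32, drop_remainder=False))  # 63
print(2000 - steps_for(2000, 32) * 32)            # 16 images beyond the 62 full batches
```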

```
Epoch 1/100
62/62 [==============================] - 42s 674ms/step - loss: 0.6945 - acc: 0.5055 - val_loss: 0.6876 - val_acc: 0.5030
Epoch 2/100
62/62 [==============================] - 42s 680ms/step - loss: 0.6879 - acc: 0.5383 - val_loss: 0.6764 - val_acc: 0.6116
Epoch 3/100
62/62 [==============================] - 42s 682ms/step - loss: 0.6810 - acc: 0.5544 - val_loss: 0.6566 - val_acc: 0.6054
Epoch 4/100
62/62 [==============================] - 42s 685ms/step - loss: 0.6683 - acc: 0.5902 - val_loss: 0.6454 - val_acc: 0.5950
Epoch 5/100
......
Epoch 95/100
62/62 [==============================] - 41s 661ms/step - loss: 0.4641 - acc: 0.7792 - val_loss: 0.4375 - val_acc: 0.8027
Epoch 96/100
62/62 [==============================] - 42s 670ms/step - loss: 0.4336 - acc: 0.8075 - val_loss: 0.4010 - val_acc: 0.8233
Epoch 97/100
62/62 [==============================] - 42s 680ms/step - loss: 0.4359 - acc: 0.7933 - val_loss: 0.4201 - val_acc: 0.8185
Epoch 98/100
62/62 [==============================] - 43s 689ms/step - loss: 0.4559 - acc: 0.7878 - val_loss: 0.4266 - val_acc: 0.8130
Epoch 99/100
62/62 [==============================] - 42s 676ms/step - loss: 0.4267 - acc: 0.7994 - val_loss: 0.4054 - val_acc: 0.8161
Epoch 100/100
62/62 [==============================] - 43s 690ms/step - loss: 0.4413 - acc: 0.8009 - val_loss: 0.4077 - val_acc: 0.8192
```

Training curves:
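The training curves plot the per-epoch values stored in `history.history`. The sketch below reads those values numerically instead of plotting them. It assumes the key names `acc` and `val_acc` seen in the log and uses only epochs 95 to 100 copied from that log; the real dict would hold all 100 epochs. The helper `summarize_history` is hypothetical.

```python
def summarize_history(hist, first_epoch=1):
    """Best validation epoch and final train-minus-validation accuracy gap
    from a Keras-style history dict with 'acc' and 'val_acc' lists."""
    val = hist['val_acc']
    best = max(range(len(val)), key=lambda i: val[i])
    gap = hist['acc'][-1] - val[-1]   # a large positive gap would suggest overfitting
    return first_epoch + best, val[best], gap

# Epochs 95-100 copied from the training log
hist = {
    'acc':     [0.7792, 0.8075, 0.7933, 0.7878, 0.7994, 0.8009],
    'val_acc': [0.8027, 0.8233, 0.8185, 0.8130, 0.8161, 0.8192],
}
epoch, best_val, gap = summarize_history(hist, first_epoch=95)
print(epoch, best_val, round(gap, 4))  # 96 0.8233 -0.0183
```

At the end of training, validation accuracy is slightly above training accuracy, so these epochs show no sign of overfitting. Augmentation and dropout apply only to the training pass, which makes training accuracy the harder of the two to raise.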

Evaluate the model on the test set:

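```python
from keras.preprocessing.image import ImageDataGenerator

# Assumed definition (not shown in this section): test images are only
# rescaled, never augmented
test_datagen = ImageDataGenerator(rescale=1. / 255)
test_generator = test_datagen.flow_from_directory(
    test_dir,
    target_size=(150, 150),
    batch_size=batch_size,
    class_mode='binary')
# evaluate_generator returns [loss, acc] for a model compiled with the 'acc' metric
test_loss, test_acc = model.evaluate_generator(
    test_generator, steps=test_generator.samples // batch_size)
print('test acc:', test_acc)
```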

```
Found 400 images belonging to 2 classes.
test acc: 0.83
```

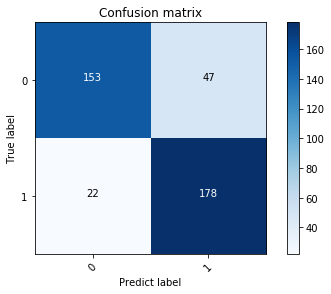

Confusion matrix:
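A confusion matrix for this binary classifier can be computed from the sigmoid outputs. Here is a minimal numpy sketch using toy data, not this model's results. With Keras, `y_prob` would come from something like `model.predict_generator(test_generator, ...)` and `y_true` from `test_generator.classes`. The two only line up if the test generator was created with `shuffle=False`, because `flow_from_directory` shuffles by default. The function `binary_confusion_matrix` is hypothetical.

```python
import numpy as np

def binary_confusion_matrix(y_true, y_prob, threshold=0.5):
    """Rows: true class (0, 1); columns: predicted class (0, 1).
    A sigmoid output above `threshold` counts as class 1."""
    y_true = np.asarray(y_true).astype(int).ravel()
    y_pred = (np.asarray(y_prob).ravel() > threshold).astype(int)
    cm = np.zeros((2, 2), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)  # count each (true, predicted) pair
    return cm

# Toy labels and sigmoid outputs, not results from this model
y_true = [0, 0, 1, 1, 1]
y_prob = [0.1, 0.7, 0.8, 0.4, 0.9]
cm = binary_confusion_matrix(y_true, y_prob)
print(cm)                        # [[1 1]
                                 #  [1 2]]
print(np.trace(cm) / cm.sum())   # accuracy: 0.6
```

The diagonal holds correct predictions, so accuracy is the trace divided by the total. The off-diagonal cells show which class the errors fall into.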