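1. The Kaggle Dogs vs. Cats dataset:

Download: https://www.kaggle.com/c/dogs-vs-cats

After downloading and unzipping, there are two directories: one with the test data and one with the training data.

Training data (files are named like cat.0.jpg and dog.0.jpg):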
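The training code below derives each label from the file name and shuffles paths and labels together. A minimal standard-library sketch of that idea, assuming names of the form class.index.jpg; `label_from_name` and `shuffle_pairs` are illustrative helpers and not part of the original code:

```python
import random

def label_from_name(filename):
    """Return 1 for a cat file and 0 for a dog file (the convention used in this post)."""
    prefix = filename.split('.')[0]
    if prefix == 'cat':
        return 1
    if prefix == 'dog':
        return 0
    raise ValueError('unexpected file name: ' + filename)

def shuffle_pairs(paths, labels, seed=None):
    """Shuffle paths and labels with the same permutation so pairs stay aligned."""
    pairs = list(zip(paths, labels))
    random.Random(seed).shuffle(pairs)
    shuffled_paths = [p for p, _ in pairs]
    shuffled_labels = [l for _, l in pairs]
    return shuffled_paths, shuffled_labels

names = ['cat.0.jpg', 'dog.0.jpg', 'cat.1.jpg', 'dog.1.jpg']
labels = [label_from_name(n) for n in names]
shuffled_names, shuffled_labels = shuffle_pairs(names, labels, seed=0)
for name, label in zip(shuffled_names, shuffled_labels):
    print(name, label)
```

Matching on the prefix rather than on a substring avoids mislabeling a file whose name happens to contain both words, and shuffling pairs keeps the labels as integers throughout.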

2. Training code:

Part 1: reading the data:

from PIL import Image
import numpy as np
import tensorflow as tf  # TensorFlow 1.x API (queues, tf.contrib)
import os
import glob
import tensorflow.contrib.layers as layers

os.environ['CUDA_VISIBLE_DEVICES'] = '0'
path = r'F:\dataset\dogs-vs-cats'
w = 224
h = 224
c = 3
epochs = 2

def get_train_imgs(path):
    imgs_list = []
    labels_list = []
    train_dir = os.path.join(path, 'train')
    print(train_dir)
    for imgs in os.listdir(train_dir):
        img_path = os.path.join(train_dir, imgs)
        if 'cat' in imgs:
            label = 1
        elif 'dog' in imgs:
            label = 0
        else:
            continue  # skip files that are neither cat nor dog images
        imgs_list.append(img_path)
        labels_list.append(label)
    # Shuffle the data
    temp_data = np.array([imgs_list, labels_list])  # shape: (2, 25000)
    temp_data = temp_data.transpose()  # shape: (25000, 2); each row looks like ['F:\\dataset\\dogs-vs-cats\\train\\cat.0.jpg' '1']
    np.random.shuffle(temp_data)  # shuffle the rows
    imgs_list = list(temp_data[:, 0])
    labels_list = list(temp_data[:, 1])
    labels_list = [int(i) for i in labels_list]  # labels became strings in the array; convert back
    return imgs_list, labels_list

Part 2: getting one batch of data:

def get_batch(imgs, labels, w, h, batch_size):
    imgs = tf.cast(imgs, tf.string)
    labels = tf.cast(labels, tf.int32)
    # Build the input queue
    input_queue = tf.train.slice_input_producer([imgs, labels])
    image_contents = tf.read_file(input_queue[0])
    label = input_queue[1]
    image = tf.image.decode_jpeg(image_contents, channels=3)
    # The images have different sizes, so resize them all to the same shape
    image = tf.image.resize_images(image, [h, w], method=tf.image.ResizeMethod.NEAREST_NEIGHBOR)
    image = tf.cast(image, tf.float32)  # convert to float32 before feeding the network
    image_batch, label_batch = tf.train.batch([image, label],  # take the data one batch at a time
                                              batch_size=batch_size,
                                              num_threads=64,  # number of reader threads
                                              capacity=256)
    return image_batch, label_batch

Reading the data here relies on a "queue" mechanism (part of how TensorFlow reads data):

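To make full use of the GPU and reduce the time it sits idle waiting for data, TensorFlow runs data loading and computation in separate threads. Specifically, one thread continuously reads image data from disk into an in-memory queue, while another thread does the computation and takes the data it needs directly from that queue.

In front of the in-memory queue, TF also maintains a filename queue (the only one we need to care about). It holds the names of the files used for training: to train for N epochs, the filename queue contains N copies of the full list of filenames. An example diagram is shown below:

After the filenames for the N epochs comes an end marker. When TF reads this marker it raises an OutOfRangeError exception (tf.errors.OutOfRangeError); once the caller catches it, the program can finish. The filename queue is created with the tf.train.slice_input_producer function. Note that the call in this post does not pass num_epochs, so the queue cycles through the data indefinitely and training is instead bounded by the fixed number of steps in the training loop.

In the code:

input_queue = tf.train.slice_input_producer([imgs, labels])

creates a queue that carries two kinds of data: imgs and labels.

Accordingly, input_queue[0] yields an image path and input_queue[1] yields the matching label.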
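The producer/consumer pattern described above can be imitated with the standard library alone. This is only a sketch of the idea, not TensorFlow's implementation: `filename_producer` and `consume` are hypothetical helpers, and a `None` item stands in for the end marker that TF reports as OutOfRangeError.

```python
import queue
import threading

def filename_producer(filenames, num_epochs, q):
    """Push every filename once per epoch, then push an end marker."""
    for _ in range(num_epochs):
        for name in filenames:
            q.put(name)  # blocks while the queue is full
    q.put(None)  # end marker, standing in for OutOfRangeError

def consume(q):
    """Take items from the queue until the end marker arrives."""
    seen = []
    while True:
        item = q.get()
        if item is None:
            break
        seen.append(item)
    return seen

files = ['cat.0.jpg', 'dog.0.jpg', 'cat.1.jpg']
q = queue.Queue(maxsize=4)  # bounded, like the capacity argument of tf.train.batch
producer = threading.Thread(target=filename_producer, args=(files, 2, q))
producer.start()
consumed = consume(q)  # the "compute" side runs in the main thread
producer.join()
print(len(consumed), 'items consumed')
```

With two epochs over three files, the consumer sees each filename twice before the end marker stops it.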

3. The network:

def inference(images, batch_size, n_classes):
    # conv1
    with tf.variable_scope('conv1') as scope:
        weights = tf.get_variable('weights', shape=[3, 3, 3, 16], dtype=tf.float32,
                                  initializer=tf.truncated_normal_initializer(stddev=0.1, dtype=tf.float32))
        biases = tf.get_variable('biases', shape=[16], dtype=tf.float32, initializer=tf.constant_initializer(0.1))
        conv = tf.nn.conv2d(images, weights, strides=[1, 1, 1, 1], padding='SAME')
        pre_activation = tf.nn.bias_add(conv, biases)
        conv1 = tf.nn.relu(pre_activation, name='conv1')
    # pool1
    with tf.variable_scope('pooling') as scope:
        pool1 = tf.nn.max_pool(conv1, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='SAME', name='pooling1')
    # conv2
    with tf.variable_scope('conv2') as scope:
        weights = tf.Variable(tf.truncated_normal(shape=[3, 3, 16, 16], stddev=0.1, dtype=tf.float32),
                              name='weights', dtype=tf.float32)
        biases = tf.Variable(tf.constant(value=0.1, dtype=tf.float32, shape=[16]),
                             name='biases', dtype=tf.float32)
        conv = tf.nn.conv2d(pool1, weights, strides=[1, 1, 1, 1], padding='SAME')
        pre_activation = tf.nn.bias_add(conv, biases)
        conv2 = tf.nn.relu(pre_activation, name='conv2')
        print('conv2:{}'.format(conv2.shape))
    # pooling2
    with tf.variable_scope('pooling2_lrn') as scope:
        pool2 = tf.nn.max_pool(conv2, ksize=[1, 3, 3, 1], strides=[1, 1, 1, 1], padding='SAME', name='pooling2')
    # fc3
    with tf.variable_scope('local3') as scope:
        print('pool2:{}'.format(pool2.shape))
        reshape = tf.reshape(pool2, shape=[batch_size, -1])  # flatten each image to one row
        print('pool2,reshape:{}'.format(reshape.shape))
        dim = reshape.get_shape()[1].value
        weights = tf.Variable(tf.truncated_normal(shape=[dim, 128], stddev=0.005, dtype=tf.float32),
                              name='weights', dtype=tf.float32)
        biases = tf.Variable(tf.constant(value=0.1, dtype=tf.float32, shape=[128]),
                             name='biases', dtype=tf.float32)
        local3 = tf.nn.relu(tf.matmul(reshape, weights) + biases, name=scope.name)
    # fc4
    with tf.variable_scope('local4') as scope:
        weights = tf.Variable(tf.truncated_normal(shape=[128, 128], stddev=0.005, dtype=tf.float32),
                              name='weights', dtype=tf.float32)
        biases = tf.Variable(tf.constant(value=0.1, dtype=tf.float32, shape=[128]),
                             name='biases', dtype=tf.float32)
        local4 = tf.nn.relu(tf.matmul(local3, weights) + biases, name='local4')
    # output layer (logits; softmax is applied later)
    with tf.variable_scope('softmax_linear') as scope:
        weights = tf.Variable(tf.truncated_normal(shape=[128, n_classes], stddev=0.005, dtype=tf.float32),
                              name='softmax_linear', dtype=tf.float32)
        biases = tf.Variable(tf.constant(value=0.1, dtype=tf.float32, shape=[n_classes]),
                             name='biases', dtype=tf.float32)
        softmax_linear = tf.add(tf.matmul(local4, weights), biases, name='softmax_linear')
    return softmax_linear

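This network has only two convolutional layers, two pooling layers, and two fully connected hidden layers, followed by a linear output layer that produces the logits.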
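To see how large the first fully connected layer becomes, the spatial sizes can be traced by hand. With padding='SAME', a convolution or pooling layer outputs ceil(input / stride) along each spatial dimension. The following sketch applies that rule to the strides used in inference() for a 224x224 input; `same_padding_out` is an illustrative helper name:

```python
import math

def same_padding_out(size, stride):
    """Spatial output size of a conv/pool layer that uses padding='SAME'."""
    return math.ceil(size / stride)

size = 224
size = same_padding_out(size, 1)  # conv1, stride 1
size = same_padding_out(size, 2)  # pool1, stride 2
size = same_padding_out(size, 1)  # conv2, stride 1
size = same_padding_out(size, 1)  # pool2, stride 1 (no downsampling)

channels = 16
flat_dim = size * size * channels  # length of each flattened row fed to local3
fc3_weights = flat_dim * 128       # number of weights in the local3 matrix
print(size, flat_dim, fc3_weights)
```

Because pool2 uses stride 1, the feature map stays at 112x112, so almost all of the model's parameters sit in the local3 weight matrix.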

4. The loss function, the training op, and an accuracy function that compares the output with the labels:

# compute the loss
def losses(logits, labels):
    with tf.variable_scope('loss') as scope:
        cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=logits, labels=labels,
                                                                       name='xentropy_per_example')
        loss = tf.reduce_mean(cross_entropy, name='loss')
    return loss

# train_op
def trainning(loss, learning_rate):
    with tf.name_scope('optimizer'):
        optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate)
        global_step = tf.Variable(0, name='global_step', trainable=False)
        train_op = optimizer.minimize(loss, global_step=global_step)
    return train_op

# compare the output with the labels to get the accuracy
def evaluation(logits, labels):
    with tf.variable_scope('accuracy') as scope:
        correct = tf.nn.in_top_k(logits, labels, 1)
        correct = tf.cast(correct, tf.float16)
        accuracy = tf.reduce_mean(correct)
    return accuracy

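In the evaluation() function, tf.nn.in_top_k(logits, labels, 1) checks, for each example, whether the true label is among the top 1 predictions, i.e. whether the class with the largest logit matches the label. It returns True for that example if so and False otherwise; averaging these values gives the accuracy.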
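What the loss and accuracy ops compute can be written out in plain Python. This is a sketch for intuition only, assuming integer class labels and ignoring how TensorFlow handles ties between equal logits; the function names are illustrative:

```python
import math

def sparse_softmax_cross_entropy(logits, label):
    """Cross-entropy of softmax(logits) against one integer label."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum_exp - logits[label]

def mean_loss(batch_logits, labels):
    """Average per-example loss, like tf.reduce_mean over the batch."""
    per_example = [sparse_softmax_cross_entropy(l, y) for l, y in zip(batch_logits, labels)]
    return sum(per_example) / len(per_example)

def top1_accuracy(batch_logits, labels):
    """Fraction of examples whose largest logit sits at the label index."""
    hits = 0
    for logits, y in zip(batch_logits, labels):
        predicted = max(range(len(logits)), key=lambda i: logits[i])
        hits += int(predicted == y)
    return hits / len(labels)

batch_logits = [[2.0, 1.0], [0.5, 1.5], [3.0, 0.0]]
batch_labels = [0, 1, 1]  # 0 = dog, 1 = cat, as in this post
print('loss:', mean_loss(batch_logits, batch_labels))
print('accuracy:', top1_accuracy(batch_logits, batch_labels))
```

The third example is misclassified (largest logit at index 0, label 1), so two of the three predictions are correct.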

5. Complete code:

from PIL import Image
import numpy as np
import tensorflow as tf  # TensorFlow 1.x API (queues, tf.contrib)
import os
import glob
import tensorflow.contrib.layers as layers
import matplotlib.pyplot as plt

os.environ['CUDA_VISIBLE_DEVICES'] = '0'
path = r'F:\dataset\dogs-vs-cats'
w = 224
h = 224
c = 3

def get_train_imgs(path):
    imgs_list = []
    labels_list = []
    train_dir = os.path.join(path, 'train')
    for imgs in os.listdir(train_dir):
        img_path = os.path.join(train_dir, imgs)
        if 'cat' in imgs:
            label = 1
        elif 'dog' in imgs:
            label = 0
        else:
            continue  # skip files that are neither cat nor dog images
        imgs_list.append(img_path)
        labels_list.append(label)
    # Shuffle the data
    temp_data = np.array([imgs_list, labels_list])
    temp_data = temp_data.transpose()
    np.random.shuffle(temp_data)
    imgs_list = list(temp_data[:, 0])
    labels_list = list(temp_data[:, 1])
    labels_list = [int(i) for i in labels_list]
    return imgs_list, labels_list

def get_batch(imgs, labels, w, h, batch_size):
    imgs = tf.cast(imgs, tf.string)
    labels = tf.cast(labels, tf.int32)
    # Build the input queue
    input_queue = tf.train.slice_input_producer([imgs, labels])
    image_contents = tf.read_file(input_queue[0])
    label = input_queue[1]
    image = tf.image.decode_jpeg(image_contents, channels=3)
    # The images have different sizes, so resize them all to the same shape
    image = tf.image.resize_images(image, [h, w], method=tf.image.ResizeMethod.NEAREST_NEIGHBOR)
    image = tf.cast(image, tf.float32)  # convert to float32 before feeding the network
    image_batch, label_batch = tf.train.batch([image, label],
                                              batch_size=batch_size,
                                              num_threads=64,  # number of reader threads
                                              capacity=256)
    return image_batch, label_batch

def inference(images, batch_size, n_classes):
    # conv1
    with tf.variable_scope('conv1') as scope:
        weights = tf.get_variable('weights', shape=[3, 3, 3, 16], dtype=tf.float32,
                                  initializer=tf.truncated_normal_initializer(stddev=0.1, dtype=tf.float32))
        biases = tf.get_variable('biases', shape=[16], dtype=tf.float32, initializer=tf.constant_initializer(0.1))
        conv = tf.nn.conv2d(images, weights, strides=[1, 1, 1, 1], padding='SAME')
        pre_activation = tf.nn.bias_add(conv, biases)
        conv1 = tf.nn.relu(pre_activation, name='conv1')
    # pool1
    with tf.variable_scope('pooling') as scope:
        pool1 = tf.nn.max_pool(conv1, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='SAME', name='pooling1')
    # conv2
    with tf.variable_scope('conv2') as scope:
        weights = tf.Variable(tf.truncated_normal(shape=[3, 3, 16, 16], stddev=0.1, dtype=tf.float32),
                              name='weights', dtype=tf.float32)
        biases = tf.Variable(tf.constant(value=0.1, dtype=tf.float32, shape=[16]),
                             name='biases', dtype=tf.float32)
        conv = tf.nn.conv2d(pool1, weights, strides=[1, 1, 1, 1], padding='SAME')
        pre_activation = tf.nn.bias_add(conv, biases)
        conv2 = tf.nn.relu(pre_activation, name='conv2')
    # pooling2
    with tf.variable_scope('pooling2_lrn') as scope:
        pool2 = tf.nn.max_pool(conv2, ksize=[1, 3, 3, 1], strides=[1, 1, 1, 1], padding='SAME', name='pooling2')
    # fc3
    with tf.variable_scope('local3') as scope:
        reshape = tf.reshape(pool2, shape=[batch_size, -1])  # flatten each image to one row
        dim = reshape.get_shape()[1].value
        weights = tf.Variable(tf.truncated_normal(shape=[dim, 128], stddev=0.005, dtype=tf.float32),
                              name='weights', dtype=tf.float32)
        biases = tf.Variable(tf.constant(value=0.1, dtype=tf.float32, shape=[128]),
                             name='biases', dtype=tf.float32)
        local3 = tf.nn.relu(tf.matmul(reshape, weights) + biases, name=scope.name)
    # fc4
    with tf.variable_scope('local4') as scope:
        weights = tf.Variable(tf.truncated_normal(shape=[128, 128], stddev=0.005, dtype=tf.float32),
                              name='weights', dtype=tf.float32)
        biases = tf.Variable(tf.constant(value=0.1, dtype=tf.float32, shape=[128]),
                             name='biases', dtype=tf.float32)
        local4 = tf.nn.relu(tf.matmul(local3, weights) + biases, name='local4')
    # output layer (logits; softmax is applied later)
    with tf.variable_scope('softmax_linear') as scope:
        weights = tf.Variable(tf.truncated_normal(shape=[128, n_classes], stddev=0.005, dtype=tf.float32),
                              name='softmax_linear', dtype=tf.float32)
        biases = tf.Variable(tf.constant(value=0.1, dtype=tf.float32, shape=[n_classes]),
                             name='biases', dtype=tf.float32)
        softmax_linear = tf.add(tf.matmul(local4, weights), biases, name='softmax_linear')
    return softmax_linear

# compute the loss
def losses(logits, labels):
    with tf.variable_scope('loss') as scope:
        cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=logits, labels=labels,
                                                                       name='xentropy_per_example')
        loss = tf.reduce_mean(cross_entropy, name='loss')
    return loss

# train_op
def trainning(loss, learning_rate):
    with tf.name_scope('optimizer'):
        optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate)
        global_step = tf.Variable(0, name='global_step', trainable=False)
        train_op = optimizer.minimize(loss, global_step=global_step)
    return train_op

# compare the output with the labels to get the accuracy
def evaluation(logits, labels):
    with tf.variable_scope('accuracy') as scope:
        correct = tf.nn.in_top_k(logits, labels, 1)
        correct = tf.cast(correct, tf.float16)
        accuracy = tf.reduce_mean(correct)
    return accuracy

imgs, labels = get_train_imgs(path)
imgs_batch, labels_batch = get_batch(imgs, labels, w, h, 64)
train_logits = inference(imgs_batch, 64, 2)  # 64 is the batch size, 2 the number of classes
train_loss = losses(train_logits, labels_batch)
train_op = trainning(train_loss, 0.001)
train_acc = evaluation(train_logits, labels_batch)

with tf.Session() as sess:
    saver = tf.train.Saver()
    sess.run(tf.global_variables_initializer())
    coord = tf.train.Coordinator()
    threads = tf.train.start_queue_runners(sess=sess, coord=coord)  # start the input queue threads
    try:
        acc = []
        loss = []
        stepl = np.arange(0, 1000, 10)  # the steps at which acc/loss are recorded (100 points)
        for step in np.arange(1000):
            if coord.should_stop():
                break
            _, tra_loss, tra_acc = sess.run([train_op, train_loss, train_acc])
            if step % 10 == 0:
                # tra_acc is a fraction in [0, 1]; scale it to match the % sign
                print('Step %d, train loss=%.2f, train accuracy = %.2f%%' % (step, tra_loss, tra_acc * 100))
                acc.append(tra_acc)
                loss.append(tra_loss)
            if (step + 1) == 1000:
                os.makedirs('./model', exist_ok=True)  # make sure the output directory exists
                checkpoint_path = os.path.join('./model', 'model_ckpt')
                saver.save(sess, checkpoint_path, global_step=step)  # save the model
                plt.plot(stepl, acc, color='r', label='acc')
                plt.plot(stepl, loss, color=(0, 0, 0), label='loss')
                plt.legend()
                plt.show()
    except tf.errors.OutOfRangeError:
        print('Done training')
    finally:
        coord.request_stop()
        coord.join(threads)

Results:

The training accuracy fluctuates quite a bit, but it eventually reaches 0.77.

The model is saved under ./model.

6. Prediction code:

from PIL import Image
import numpy as np
import matplotlib.pyplot as plt
import matplotlib.image as mpimg
import tensorflow as tf  # TensorFlow 1.x API
import os
import glob
import tensorflow.contrib.layers as layers

CHECK_POINT_DIR = './model'

def inference(images, batch_size, n_classes):
    # conv1
    with tf.variable_scope('conv1') as scope:
        weights = tf.get_variable('weights', shape=[3, 3, 3, 16], dtype=tf.float32,
                                  initializer=tf.truncated_normal_initializer(stddev=0.1, dtype=tf.float32))
        biases = tf.get_variable('biases', shape=[16], dtype=tf.float32, initializer=tf.constant_initializer(0.1))
        conv = tf.nn.conv2d(images, weights, strides=[1, 1, 1, 1], padding='SAME')
        pre_activation = tf.nn.bias_add(conv, biases)
        conv1 = tf.nn.relu(pre_activation, name='conv1')
    # pool1
    with tf.variable_scope('pooling') as scope:
        pool1 = tf.nn.max_pool(conv1, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='SAME', name='pooling1')
    # conv2
    with tf.variable_scope('conv2') as scope:
        weights = tf.Variable(tf.truncated_normal(shape=[3, 3, 16, 16], stddev=0.1, dtype=tf.float32),
                              name='weights', dtype=tf.float32)
        biases = tf.Variable(tf.constant(value=0.1, dtype=tf.float32, shape=[16]),
                             name='biases', dtype=tf.float32)
        conv = tf.nn.conv2d(pool1, weights, strides=[1, 1, 1, 1], padding='SAME')
        pre_activation = tf.nn.bias_add(conv, biases)
        conv2 = tf.nn.relu(pre_activation, name='conv2')
    # pooling2
    with tf.variable_scope('pooling2_lrn') as scope:
        pool2 = tf.nn.max_pool(conv2, ksize=[1, 3, 3, 1], strides=[1, 1, 1, 1], padding='SAME', name='pooling2')
    # fc3
    with tf.variable_scope('local3') as scope:
        reshape = tf.reshape(pool2, shape=[batch_size, -1])  # flatten each image to one row
        dim = reshape.get_shape()[1].value
        weights = tf.Variable(tf.truncated_normal(shape=[dim, 128], stddev=0.005, dtype=tf.float32),
                              name='weights', dtype=tf.float32)
        biases = tf.Variable(tf.constant(value=0.1, dtype=tf.float32, shape=[128]),
                             name='biases', dtype=tf.float32)
        local3 = tf.nn.relu(tf.matmul(reshape, weights) + biases, name=scope.name)
    # fc4
    with tf.variable_scope('local4') as scope:
        weights = tf.Variable(tf.truncated_normal(shape=[128, 128], stddev=0.005, dtype=tf.float32),
                              name='weights', dtype=tf.float32)
        biases = tf.Variable(tf.constant(value=0.1, dtype=tf.float32, shape=[128]),
                             name='biases', dtype=tf.float32)
        local4 = tf.nn.relu(tf.matmul(local3, weights) + biases, name='local4')
    # output layer (logits; softmax is applied later)
    with tf.variable_scope('softmax_linear') as scope:
        weights = tf.Variable(tf.truncated_normal(shape=[128, n_classes], stddev=0.005, dtype=tf.float32),
                              name='softmax_linear', dtype=tf.float32)
        biases = tf.Variable(tf.constant(value=0.1, dtype=tf.float32, shape=[n_classes]),
                             name='biases', dtype=tf.float32)
        softmax_linear = tf.add(tf.matmul(local4, weights), biases, name='softmax_linear')
    return softmax_linear

def evaluate_one_image(image_array):
    with tf.Graph().as_default():
        # Feed the image through a placeholder so that feed_dict is actually used by the graph
        x = tf.placeholder(tf.float32, shape=[224, 224, 3])
        # Note: the training pipeline above does not standardize images; this step is kept from the original code
        image = tf.image.per_image_standardization(x)
        image = tf.reshape(image, [1, 224, 224, 3])
        logit = inference(image, 1, 2)
        logit = tf.nn.softmax(logit)  # turn the logits into class probabilities
        saver = tf.train.Saver()
        with tf.Session() as sess:
            print('Reading checkpoints...')
            ckpt = tf.train.get_checkpoint_state(CHECK_POINT_DIR)
            if ckpt and ckpt.model_checkpoint_path:
                saver.restore(sess, ckpt.model_checkpoint_path)
            else:
                print('No checkpoint file found')
                return None  # nothing to predict with
            prediction = sess.run(logit, feed_dict={x: image_array})
            max_index = np.argmax(prediction)
            print(prediction)
            # index 0 is dog and index 1 is cat, matching the training labels
            if max_index == 0:
                result = ('this is dog rate: %.6f, result prediction is [%s]' % (
                    prediction[0, 0], ','.join(str(i) for i in prediction[0])))
            else:
                result = ('this is cat rate: %.6f, result prediction is [%s]' % (
                    prediction[0, 1], ','.join(str(i) for i in prediction[0])))
    return result

if __name__ == '__main__':
    image = Image.open('./cat.jpg').convert('RGB')  # force 3 channels
    plt.imshow(image)
    plt.show()
    image = image.resize([224, 224])
    image = np.array(image)
    print(evaluate_one_image(image))

网上找一张猫的图片:

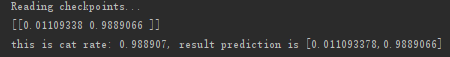

运行效果:

可以看到,预测是猫的概率高达0.98
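The two numeric steps around the network in evaluate_one_image can be sketched in plain Python. tf.image.per_image_standardization subtracts the image mean and divides by max(stddev, 1/sqrt(N)), where N is the number of values in the image, and tf.nn.softmax turns the two logits into probabilities that sum to 1. The helpers below are illustrative and operate on flat lists rather than tensors:

```python
import math

def standardize(pixels):
    """Zero-mean, unit-variance scaling with the same stddev floor TF uses."""
    n = len(pixels)
    mean = sum(pixels) / n
    variance = sum((p - mean) ** 2 for p in pixels) / n
    adjusted_std = max(math.sqrt(variance), 1.0 / math.sqrt(n))  # avoids division by zero
    return [(p - mean) / adjusted_std for p in pixels]

def softmax(logits):
    """Convert logits to probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

scaled = standardize([0, 255, 0, 255])
flat = standardize([5, 5, 5, 5])   # a constant image maps to all zeros
probs = softmax([0.0, 0.0])        # equal logits give equal probabilities
print(scaled, flat, probs)
```

Since index 0 is dog and index 1 is cat, the probability reported for a cat image is the second entry of the softmax output.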

##########################################################

This post makes a few modifications to https://blog.csdn.net/jiangyingfeng/article/details/81704909, which is also the original source.