感谢无人的回忆的转载的博客以及半闲居士做的系列博客。

这个博客是希望将《视觉slam十四讲》中所有用到的g2o弄明白,我会尽力而为的。

文章目录

概论

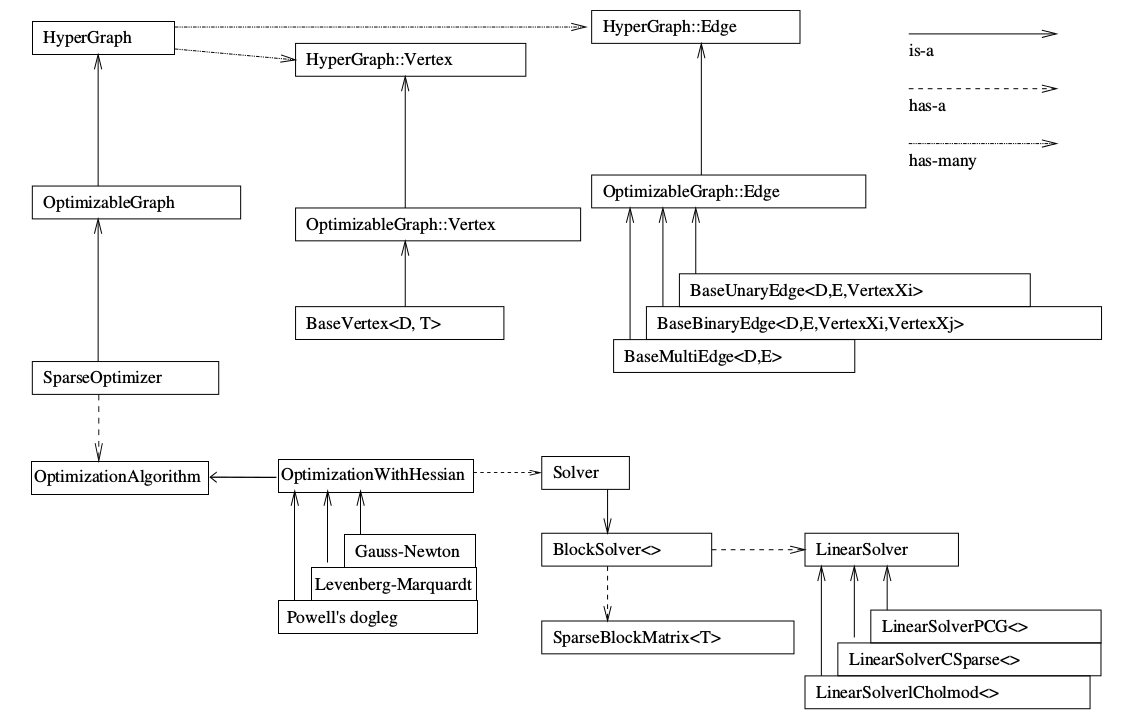

g2o是一个图优化库,其将非线性优化与图论相结合。节点为优化变量,边为误差项。关于在g2o中节点和边的定义。例如在视觉slam中,相机的位姿与观测的路标构成图优化的顶点。相机的运动,路标的观测构成图优化的边。

g2o的结构如下所示:

g2o的流程为:

- 定义顶点和边的类型;

- 构建图并选择优化算法;

- 调用g2o进行优化,返回结果。

接下来展开说具体每一步都是做什么的:

定义顶点和边的类型

自定义顶点时,需要重写4个函数:

virtual bool read(std::istream& is);

virtual bool write(std::ostream& os) const;

virtual void oplusImpl(const number_t* update);

virtual void setToOriginImpl();

其中read,write函数可以不进行覆写,仅仅声明一下就可以,setToOriginImpl设定被优化变量的原始值,oplusImpl比较重要,我们根据增量方程计算出增量之后,就是通过这个函数对估计值进行调整的,因此这个函数的内容一定要写对,否则会造成一直优化却得不到好的优化结果的现象。

自定义边时,需要重写4个函数:

virtual bool read(std::istream& is);

virtual bool write(std::ostream& os) const;

virtual void computeError();

virtual void linearizeOplus();

read和write函数同上,computeError函数是使用当前顶点的值计算的测量值与真实的测量值之间的误差,linearizeOplus函数是在当前顶点的值下,该误差对优化变量的偏导数,即jacobian。

构建图并选择优化算法

线性求解器,求解求*Δx=−b的线性求解器,完全采用第三方的线性代数库,主要采用Cholesky分解和PCG迭代。

选择梯度下降方法(从GN,LM, DogLeg选择)。

调用g2o进行优化,返回结果

添加边,添加节点,执行优化。

第6讲 拟合曲线

曲线拟合中,整个问题只有一个顶点(优化变量)a, b, c,带噪音的点构成一个个误差项,也就是边。

这个程序中边只连接一个顶点(自己连接自己)。

1. 定义顶点和边的类型

//g2o::BaseVertex<3, Eigen::Vector3d>中的3并非是顶点(更确切的说是状态变量)的维度,而是其在流形空间(manifold)的最小表示。

//Eigen::Vector3d是顶点(状态变量)的类型。

class CurveFittingVertex: public g2o::BaseVertex<3, Eigen::Vector3d>

{

public:

//该宏将重载 new 运算符,使其产生对齐的指针

EIGEN_MAKE_ALIGNED_OPERATOR_NEW

virtual void setToOriginImpl() // 重置

{

_estimate << 0,0,0;

}

virtual void oplusImpl( const double* update ) // 更新

{

_estimate += Eigen::Vector3d(update);

}

// 存盘和读盘:留空

virtual bool read( istream& in ) {}

virtual bool write( ostream& out ) const {}

};

// 误差模型 模板参数:观测值维度,类型,连接顶点类型

class CurveFittingEdge: public g2o::BaseUnaryEdge<1,double,CurveFittingVertex>

{

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW

CurveFittingEdge( double x ): BaseUnaryEdge(), _x(x) {}

// 计算曲线模型误差

void computeError()

{

//强制类型转换为节点

const CurveFittingVertex* v = static_cast<const CurveFittingVertex*> (_vertices[0]);

//得到上一次的估计值

const Eigen::Vector3d abc = v->estimate();

//计算误差

_error(0,0) = _measurement - std::exp( abc(0,0)*_x*_x + abc(1,0)*_x + abc(2,0) ) ;

}

virtual bool read( istream& in ) {}

virtual bool write( ostream& out ) const {}

public:

double _x; // x 值, y 值为 _measurement

};

2 构建图并选择优化算法

这里书上有问题。原因出在指针不能强制类型转换为unique_ptr,所以这里的linearSolver得声明为unique_ptr。std::unique_ptr是一个仅能移动(move_only)的类型,所以使用std::move()。

typedef g2o::BlockSolver<g2o::BlockSolverTraits<3, 1>> Block;

std::unique_ptr<Block::LinearSolverType> linearSolver(new g2o::LinearSolverDense<Block::PoseMatrixType>());

std::unique_ptr<Block> solve_ptr(new Block(std::move(linearSolver)));

g2o::OptimizationAlgorithmLevenberg *solver = new g2o::OptimizationAlgorithmLevenberg(std::move(solve_ptr));

g2o::SparseOptimizer optimizer;

optimizer.setAlgorithm(solver);

optimizer.setVerbose(true);

//增加顶点(第一个点)

CurveFittingVertex *v = new CurveFittingVertex();

v->setEstimate(Eigen::Vector3d(0, 0,0 ));

v->setId(0);

optimizer.addVertex(v);

//向图中增加边。这个程序中,程序边只连接一个顶点(自己连接自己)。

for (int i = 0; i < N; ++i) {

CurveFittingEdge *edge = new CurveFittingEdge(x_data[i]);

edge->setId(i);

edge->setVertex(0, v);

edge->setMeasurement(y_data[i]);

edge->setInformation(Eigen::Matrix<double, 1, 1>::Identity() * 1 / (w_sigma * w_sigma));

optimizer.addEdge(edge);

}

3.调用g2o进行优化,返回结果

//初始化与优化

optimizer.initializeOptimization();

optimizer.optimize(100);

//得到结果

Eigen::Vector3d abc_est = v->estimate();

cout << "Estimate = " << abc_est.transpose() << endl;

第7讲 Bundle Adjustment

需要优化的即式(7.35),重投影误差对象寄位姿的李代数的雅克比式(7.45),对特帧点空间位置的雅克比式(7.47)。

1. 定义顶点和边的类型

g2o本身提供关于BA节点与边。这里我们需要使用VertexSE3Expmap(李代数位姿)、VertexSBAPointXYZ(空间点位置)和EdgeProjectXYZ2UV(投影方程边)。

VertexSE3Expmap的定义如下:

//第一个参数6表示内部存储的优化变量维度,这里是一个6维李代数。第二个参数为优化变量的类型。

//SE3Quat为g2o中定义的相机位姿支持log与update操作。

class G2O_TYPES_SBA_API VertexSE3Expmap : public BaseVertex<6, SE3Quat>{

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW

VertexSE3Expmap();

bool read(std::istream& is);

bool write(std::ostream& os) const;

virtual void setToOriginImpl() {

_estimate = SE3Quat();

}

virtual void oplusImpl(const number_t* update_) {

Eigen::Map<const Vector6> update(update_);

//这里做了三件事:1、设定从世界坐标系到摄像头坐标系的转换;

//2、设定世界坐标系到图像的转换矩阵;

//3、设定角的倒数

setEstimate(SE3Quat::exp(update)*estimate());

}

};

VertexSBAPointXYZ内容很简单,就不细说了。

边为EdgeProjectXYZ2UV:

//是一个Binary Edge,后面的模板参数表示,它的数据是2维的,来自Eigen::Vector2D,它连接的两个顶点必须是 VertexSBAPointXYZ, VertexSE3Expmap。

class G2O_TYPES_SBA_API EdgeProjectXYZ2UV : public BaseBinaryEdge<2, Vector2, VertexSBAPointXYZ, VertexSE3Expmap>{

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;

EdgeProjectXYZ2UV();

bool read(std::istream& is);

bool write(std::ostream& os) const;

//计算误差,包含相机位姿与特征点的位置

void computeError() {

const VertexSE3Expmap* v1 = static_cast<const VertexSE3Expmap*>(_vertices[1]);

const VertexSBAPointXYZ* v2 = static_cast<const VertexSBAPointXYZ*>(_vertices[0]);

const CameraParameters * cam

= static_cast<const CameraParameters *>(parameter(0));

Vector2 obs(_measurement);

_error = obs-cam->cam_map(v1->estimate().map(v2->estimate()));

}

//计算雅可比矩阵,详细看下面

virtual void linearizeOplus();

CameraParameters * _cam;

};

计算雅克比矩阵:

void EdgeProjectXYZ2UV::linearizeOplus() {

//_vertices为 typedef std::unordered_map<int, Vertex*>。

//与上方定义相对应,所以_vertices[1]为VertexSE3Expmap,_vertices[0]为VertexSBAPointXYZ

VertexSE3Expmap * vj = static_cast<VertexSE3Expmap *>(_vertices[1]);

SE3Quat T(vj->estimate());

VertexSBAPointXYZ* vi = static_cast<VertexSBAPointXYZ*>(_vertices[0]);

Vector3 xyz = vi->estimate();

//路标点xyz在转换t之后应该在什么位置,即书上的p`。

Vector3 xyz_trans = T.map(xyz);

number_t x = xyz_trans[0];

number_t y = xyz_trans[1];

number_t z = xyz_trans[2];

number_t z_2 = z*z;

const CameraParameters * cam = static_cast<const CameraParameters *>(parameter(0));

Matrix<number_t,2,3,Eigen::ColMajor> tmp;

tmp(0,0) = cam->focal_length;

tmp(0,1) = 0;

tmp(0,2) = -x/z*cam->focal_length;

tmp(1,0) = 0;

tmp(1,1) = cam->focal_length;

tmp(1,2) = -y/z*cam->focal_length;

_jacobianOplusXi = -1./z * tmp * T.rotation().toRotationMatrix();

//式(7.45)

_jacobianOplusXj(0,0) = x*y/z_2 *cam->focal_length;

_jacobianOplusXj(0,1) = -(1+(x*x/z_2)) *cam->focal_length;

_jacobianOplusXj(0,2) = y/z *cam->focal_length;

_jacobianOplusXj(0,3) = -1./z *cam->focal_length;

_jacobianOplusXj(0,4) = 0;

_jacobianOplusXj(0,5) = x/z_2 *cam->focal_length;

_jacobianOplusXj(1,0) = (1+y*y/z_2) *cam->focal_length;

_jacobianOplusXj(1,1) = -x*y/z_2 *cam->focal_length;

_jacobianOplusXj(1,2) = -x/z *cam->focal_length;

_jacobianOplusXj(1,3) = 0;

_jacobianOplusXj(1,4) = -1./z *cam->focal_length;

_jacobianOplusXj(1,5) = y/z_2 *cam->focal_length;

}

2 构建图并选择优化算法

初始化g2o,这里书上仍然有问题:

typedef g2o::BlockSolver< g2o::BlockSolverTraits<6,3> > Block; // pose 维度为 6, landmark 维度为 3

std::unique_ptr<Block::LinearSolverType> linearSolver = new g2o::LinearSolverCSparse<Block::PoseMatrixType>(); // 线性方程求解器

std::unique_ptr<Block> solver_ptr = new Block ( std::move(linearSolver) ); // 矩阵块求解器

std::unique_ptr<g2o::OptimizationAlgorithmLevenberg> solver = new g2o::OptimizationAlgorithmLevenberg ( std::move(solver_ptr) );

g2o::SparseOptimizer optimizer;

optimizer.setAlgorithm ( std::move(solver) );

添加顶点顶点(优化变量):

// 优化SE3

g2o::VertexSE3Expmap* pose = new g2o::VertexSE3Expmap(); // camera pose

Eigen::Matrix3d R_mat;

R_mat <<

R.at<double> ( 0,0 ), R.at<double> ( 0,1 ), R.at<double> ( 0,2 ),

R.at<double> ( 1,0 ), R.at<double> ( 1,1 ), R.at<double> ( 1,2 ),

R.at<double> ( 2,0 ), R.at<double> ( 2,1 ), R.at<double> ( 2,2 );

pose->setId ( 0 );

pose->setEstimate ( g2o::SE3Quat (

R_mat,

Eigen::Vector3d ( t.at<double> ( 0,0 ), t.at<double> ( 1,0 ), t.at<double> ( 2,0 ) )

) );

optimizer.addVertex ( pose );

//添加路标

int index = 1;

for ( const Point3f p:points_3d ) // landmarks

{

g2o::VertexSBAPointXYZ* point = new g2o::VertexSBAPointXYZ();

point->setId ( index++ );

point->setEstimate ( Eigen::Vector3d ( p.x, p.y, p.z ) );

// g2o 中必须设置边缘化。设定为true时,将会被边缘化加速求解。见式(10.56)至式(10.59)。

point->setMarginalized ( true );

optimizer.addVertex ( point );

}

//设定相机内参

g2o::CameraParameters* camera = new g2o::CameraParameters (

K.at<double> ( 0,0 ), Eigen::Vector2d ( K.at<double> ( 0,2 ), K.at<double> ( 1,2 ) ), 0

);

camera->setId ( 0 );

optimizer.addParameter ( camera );

//添加边

index = 1;

for ( const Point2f p:points_2d )

{

g2o::EdgeProjectXYZ2UV* edge = new g2o::EdgeProjectXYZ2UV();

edge->setId ( index );

//设定两个节点,与构造时的模板类对应

edge->setVertex ( 0, dynamic_cast<g2o::VertexSBAPointXYZ*> ( optimizer.vertex ( index ) ) );

edge->setVertex ( 1, pose );

//设定观测量z

edge->setMeasurement ( Eigen::Vector2d ( p.x, p.y ) );

edge->setParameterId ( 0,0 );

edge->setInformation ( Eigen::Matrix2d::Identity() );

optimizer.addEdge ( edge );

index++;

}

3. 调用g2o进行优化,返回结果

//开始优化

chrono::steady_clock::time_point t1 = chrono::steady_clock::now();

optimizer.setVerbose ( true );

optimizer.initializeOptimization();

optimizer.optimize ( 100 );

chrono::steady_clock::time_point t2 = chrono::steady_clock::now();

chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>> ( t2-t1 );

cout<<"optimization costs time: "<<time_used.count() <<" seconds."<<endl;

cout<<endl<<"after optimization:"<<endl;

cout<<"T="<<endl<<Eigen::Isometry3d ( pose->estimate() ).matrix() <<endl;

第10讲 后端1

1. 定义顶点和边的类型

两种节点定义如下,讲更新量看作加法加上就行:

//Camera中包含相机参数

class VertexCameraBAL : public g2o::BaseVertex<9,Eigen::VectorXd>

{

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;

VertexCameraBAL() {}

virtual bool read ( std::istream& /*is*/ )

{

return false;

}

virtual bool write ( std::ostream& /*os*/ ) const

{

return false;

}

virtual void setToOriginImpl() {}

virtual void oplusImpl ( const double* update )

{

////update是更新量,VertexCameraBAL::Dimension是更新量的维度

Eigen::VectorXd::ConstMapType v ( update, VertexCameraBAL::Dimension );

_estimate += v;

}

};

class VertexPointBAL : public g2o::BaseVertex<3, Eigen::Vector3d>

{

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;

VertexPointBAL() {}

virtual bool read ( std::istream& /*is*/ )

{

return false;

}

virtual bool write ( std::ostream& /*os*/ ) const

{

return false;

}

virtual void setToOriginImpl() {}

virtual void oplusImpl ( const double* update )

{

Eigen::Vector3d::ConstMapType v ( update );

_estimate += v;

}

};

边代表代价函数,为了避免复杂的求导,借用Ceres库中的Autodiff(自动求导)功能。定义如下:

class EdgeObservationBAL : public g2o::BaseBinaryEdge<2, Eigen::Vector2d,VertexCameraBAL, VertexPointBAL>

{

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;

EdgeObservationBAL() {}

virtual bool read ( std::istream& /*is*/ )

{

return false;

}

virtual bool write ( std::ostream& /*os*/ ) const

{

return false;

}

//override关键词(摘自c++ primer),派生类中定义的虚函数如果与基类定义同名虚函数有先痛得行参列表,则派生类版本将覆盖基类版本

virtual void computeError() override

{

const VertexCameraBAL* cam = static_cast<const VertexCameraBAL*> ( vertex ( 0 ) );

const VertexPointBAL* point = static_cast<const VertexPointBAL*> ( vertex ( 1 ) );

( *this ) ( cam->estimate().data(), point->estimate().data(), _error.data() );

}

//为了使用Ceres求导功能定义的函数,让本类成为拟函数类

template<typename T>

bool operator() ( const T* camera, const T* point, T* residuals ) const

{

T predictions[2];

CamProjectionWithDistortion ( camera, point, predictions );

residuals[0] = predictions[0] - T ( measurement() ( 0 ) );

residuals[1] = predictions[1] - T ( measurement() ( 1 ) );

return true;

}

virtual void linearizeOplus() override

{

//使用Ceras数值化计算雅克比,需要重载()运算符

const VertexCameraBAL* cam = static_cast<const VertexCameraBAL*> ( vertex ( 0 ) );

const VertexPointBAL* point = static_cast<const VertexPointBAL*> ( vertex ( 1 ) );

typedef ceres::internal::AutoDiff<EdgeObservationBAL, double, VertexCameraBAL::Dimension, VertexPointBAL::Dimension> BalAutoDiff;

Eigen::Matrix<double, Dimension, VertexCameraBAL::Dimension, Eigen::RowMajor> dError_dCamera;

Eigen::Matrix<double, Dimension, VertexPointBAL::Dimension, Eigen::RowMajor> dError_dPoint;

double *parameters[] = { const_cast<double*> ( cam->estimate().data() ), const_cast<double*> ( point->estimate().data() ) };

double *jacobians[] = { dError_dCamera.data(), dError_dPoint.data() };

double value[Dimension];

bool diffState = BalAutoDiff::Differentiate ( *this, parameters, Dimension, value, jacobians );

// 将导数的行优先变为列优先

if ( diffState )

{

_jacobianOplusXi = dError_dCamera;

_jacobianOplusXj = dError_dPoint;

}

else

{

assert ( 0 && "Error while differentiating" );

_jacobianOplusXi.setZero();

_jacobianOplusXj.setZero();

}

}

};

2 构建图并选择优化算法

void BuildProblem(const BALProblem* bal_problem, g2o::SparseOptimizer* optimizer, const BundleParams& params)

{

const int num_points = bal_problem->num_points();

const int num_cameras = bal_problem->num_cameras();

const int camera_block_size = bal_problem->camera_block_size();

const int point_block_size = bal_problem->point_block_size();

// 用数据集初始化相机节点的值

const double* raw_cameras = bal_problem->cameras();

for(int i = 0; i < num_cameras; ++i)

{

ConstVectorRef temVecCamera(raw_cameras + camera_block_size * i,camera_block_size);

VertexCameraBAL* pCamera = new VertexCameraBAL();

//为每个相机设定初始值

pCamera->setEstimate(temVecCamera);

//为每个相机设定节点

pCamera->setId(i);

optimizer->addVertex(pCamera);

}

for(int j = 0; j < num_points; ++j)

{

ConstVectorRef temVecPoint(raw_points + point_block_size * j, point_block_size);

VertexPointBAL* pPoint = new VertexPointBAL();

pPoint->setEstimate(temVecPoint);

//每个节点应该有独特的id

pPoint->setId(j + num_cameras);

pPoint->setMarginalized(true);

optimizer->addVertex(pPoint);

}

//为图增加边

const int num_observations = bal_problem->num_observations();

const double* observations = bal_problem->observations(); // pointer for the first observation..

for(int i = 0; i < num_observations; ++i)

{

EdgeObservationBAL* bal_edge = new EdgeObservationBAL();

const int camera_id = bal_problem->camera_index()[i];

const int point_id = bal_problem->point_index()[i] + num_cameras;

if(params.robustify)

{

g2o::RobustKernelHuber* rk = new g2o::RobustKernelHuber;

rk->setDelta(1.0);

bal_edge->setRobustKernel(rk);

}

bal_edge->setVertex(0,dynamic_cast<VertexCameraBAL*>(optimizer->vertex(camera_id)));

bal_edge->setVertex(1,dynamic_cast<VertexPointBAL*>(optimizer->vertex(point_id)));

bal_edge->setInformation(Eigen::Matrix2d::Identity());

bal_edge->setMeasurement(Eigen::Vector2d(observations[2*i+0],observations[2*i + 1]));

optimizer->addEdge(bal_edge) ;

}

}

3. 调用g2o进行优化,返回结果

typedef g2o::BlockSolver<g2o::BlockSolverTraits<9,3> > BalBlockSolver;

void SetSolverOptionsFromFlags(BALProblem* bal_problem, const BundleParams& params, g2o::SparseOptimizer* optimizer)

{

BalBlockSolver* solver_ptr;

g2o::LinearSolver<BalBlockSolver::PoseMatrixType>* linearSolver = 0;

//采用稠密计算

if(params.linear_solver == "dense_schur" ){

linearSolver = new g2o::LinearSolverDense<BalBlockSolver::PoseMatrixType>();

}

//采用稀疏计算

else if(params.linear_solver == "sparse_schur"){

linearSolver = new g2o::LinearSolverCholmod<BalBlockSolver::PoseMatrixType>();

//让solver对矩阵进行排序保证稀疏性

//dynamic_cast这里是为了将基类的指针或引用安全地转换成派生类的指针或引用

//并用派生类的指针或引用调用非虚函数。如果是基类指针或引用调用的是虚函数无需转换就能在运行时调用派生类的虚函数。

dynamic_cast<g2o::LinearSolverCholmod<BalBlockSolver::PoseMatrixType>* >(linearSolver)->setBlockOrdering(true);

}

solver_ptr = new BalBlockSolver(linearSolver);

//SetLinearSolver(solver_ptr, params);

//SetMinimizerOptions(solver_ptr, params, optimizer);

g2o::OptimizationAlgorithmWithHessian* solver;

if(params.trust_region_strategy == "levenberg_marquardt"){

solver = new g2o::OptimizationAlgorithmLevenberg(solver_ptr);

}

else if(params.trust_region_strategy == "dogleg"){

solver = new g2o::OptimizationAlgorithmDogleg(solver_ptr);

}

else

{

std::cout << "Please check your trust_region_strategy parameter again.."<< std::endl;

exit(EXIT_FAILURE);

}

optimizer->setAlgorithm(solver);

//执行优化

optimizer.initializeOptimization();

optimizer.setVerbose(true);

optimizer.optimize(params.num_iterations);

}