I have recently been working on a Kaggle competition that uses a bank's per-customer data to predict whether a customer will take out a loan.

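Exploratory Data Analysis (EDA)

Data overview

    import numpy as np
    import pandas as pd

    # Load the competition's training and test sets
    train_df = pd.read_csv('train.csv')
    test_df = pd.read_csv('test.csv')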

    train_df.head()

| | ID_code | target | var_0 | var_1 | var_2 | var_3 | var_4 | var_5 | var_6 | var_7 | ... | var_190 | var_191 | var_192 | var_193 | var_194 | var_195 | var_196 | var_197 | var_198 | var_199 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | train_0 | 0 | 8.9255 | -6.7863 | 11.9081 | 5.0930 | 11.4607 | -9.2834 | 5.1187 | 18.6266 | ... | 4.4354 | 3.9642 | 3.1364 | 1.6910 | 18.5227 | -2.3978 | 7.8784 | 8.5635 | 12.7803 | -1.0914 |
| 1 | train_1 | 0 | 11.5006 | -4.1473 | 13.8588 | 5.3890 | 12.3622 | 7.0433 | 5.6208 | 16.5338 | ... | 7.6421 | 7.7214 | 2.5837 | 10.9516 | 15.4305 | 2.0339 | 8.1267 | 8.7889 | 18.3560 | 1.9518 |
| 2 | train_2 | 0 | 8.6093 | -2.7457 | 12.0805 | 7.8928 | 10.5825 | -9.0837 | 6.9427 | 14.6155 | ... | 2.9057 | 9.7905 | 1.6704 | 1.6858 | 21.6042 | 3.1417 | -6.5213 | 8.2675 | 14.7222 | 0.3965 |
| 3 | train_3 | 0 | 11.0604 | -2.1518 | 8.9522 | 7.1957 | 12.5846 | -1.8361 | 5.8428 | 14.9250 | ... | 4.4666 | 4.7433 | 0.7178 | 1.4214 | 23.0347 | -1.2706 | -2.9275 | 10.2922 | 17.9697 | -8.9996 |
| 4 | train_4 | 0 | 9.8369 | -1.4834 | 12.8746 | 6.6375 | 12.2772 | 2.4486 | 5.9405 | 19.2514 | ... | -1.4905 | 9.5214 | -0.1508 | 9.1942 | 13.2876 | -1.5121 | 3.9267 | 9.5031 | 17.9974 | -8.8104 |

    train_df.shape, test_df.shape

    ((200000, 202), (200000, 201))

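Check for missing values

    def missing_data(data):
        """Summarize missing-value counts, percentages, and dtypes per column."""
        total = data.isnull().sum()
        percent = data.isnull().sum() / data.isnull().count() * 100
        tt = pd.concat([total, percent], axis=1, keys=['Total', 'Percent'])
        # Record each column's dtype alongside its missing-value counts
        tt['Types'] = [str(data[col].dtype) for col in data.columns]
        # Transpose so that every feature becomes a column of the summary
        return np.transpose(tt)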

    %%time
    missing_data(train_df)

| | ID_code | target | var_0 | var_1 | var_2 | var_3 | var_4 | var_5 | var_6 | var_7 | ... | var_190 | var_191 | var_192 | var_193 | var_194 | var_195 | var_196 | var_197 | var_198 | var_199 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Total | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Percent | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Types | object | int64 | float64 | float64 | float64 | float64 | float64 | float64 | float64 | float64 | ... | float64 | float64 | float64 | float64 | float64 | float64 | float64 | float64 | float64 | float64 |

    %%time
    missing_data(test_df)

| | ID_code | var_0 | var_1 | var_2 | var_3 | var_4 | var_5 | var_6 | var_7 | var_8 | ... | var_190 | var_191 | var_192 | var_193 | var_194 | var_195 | var_196 | var_197 | var_198 | var_199 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Total | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Percent | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Types | object | float64 | float64 | float64 | float64 | float64 | float64 | float64 | float64 | float64 | ... | float64 | float64 | float64 | float64 | float64 | float64 | float64 | float64 | float64 | float64 |

Conclusion

Neither the training set nor the test set contains any missing values.
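When only a yes/no answer is needed, the full per-column table can be skipped. The following is a minimal sketch; `count_missing` and the toy frame are illustrative names introduced here, standing in for `train_df` and `test_df`.

```python
import numpy as np
import pandas as pd


def count_missing(df: pd.DataFrame) -> int:
    """Return the total number of missing cells in the frame."""
    return int(df.isnull().sum().sum())


# Hypothetical toy frame with a single NaN, used in place of the real data.
toy = pd.DataFrame({"var_0": [1.0, np.nan, 3.0], "var_1": [4.0, 5.0, 6.0]})

toy_missing = count_missing(toy)                  # counts the NaN in var_0
toy_clean_missing = count_missing(toy.dropna())   # no NaN left after dropna
print(toy_missing, toy_clean_missing)
```

On the real data the same call would be `count_missing(train_df)` and `count_missing(test_df)`.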

Check the numeric values

    train_df.describe()

| | target | var_0 | var_1 | var_2 | var_3 | var_4 | var_5 | var_6 | var_7 | var_8 | ... | var_190 | var_191 | var_192 | var_193 | var_194 | var_195 | var_196 | var_197 | var_198 | var_199 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| count | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | ... | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 |
| mean | 0.100490 | 10.679914 | -1.627622 | 10.715192 | 6.796529 | 11.078333 | -5.065317 | 5.408949 | 16.545850 | 0.284162 | ... | 3.234440 | 7.438408 | 1.927839 | 3.331774 | 17.993784 | -0.142088 | 2.303335 | 8.908158 | 15.870720 | -3.326537 |
| std | 0.300653 | 3.040051 | 4.050044 | 2.640894 | 2.043319 | 1.623150 | 7.863267 | 0.866607 | 3.418076 | 3.332634 | ... | 4.559922 | 3.023272 | 1.478423 | 3.992030 | 3.135162 | 1.429372 | 5.454369 | 0.921625 | 3.010945 | 10.438015 |
| min | 0.000000 | 0.408400 | -15.043400 | 2.117100 | -0.040200 | 5.074800 | -32.562600 | 2.347300 | 5.349700 | -10.505500 | ... | -14.093300 | -2.691700 | -3.814500 | -11.783400 | 8.694400 | -5.261000 | -14.209600 | 5.960600 | 6.299300 | -38.852800 |
| 25% | 0.000000 | 8.453850 | -4.740025 | 8.722475 | 5.254075 | 9.883175 | -11.200350 | 4.767700 | 13.943800 | -2.317800 | ... | -0.058825 | 5.157400 | 0.889775 | 0.584600 | 15.629800 | -1.170700 | -1.946925 | 8.252800 | 13.829700 | -11.208475 |
| 50% | 0.000000 | 10.524750 | -1.608050 | 10.580000 | 6.825000 | 11.108250 | -4.833150 | 5.385100 | 16.456800 | 0.393700 | ... | 3.203600 | 7.347750 | 1.901300 | 3.396350 | 17.957950 | -0.172700 | 2.408900 | 8.888200 | 15.934050 | -2.819550 |
| 75% | 0.000000 | 12.758200 | 1.358625 | 12.516700 | 8.324100 | 12.261125 | 0.924800 | 6.003000 | 19.102900 | 2.937900 | ... | 6.406200 | 9.512525 | 2.949500 | 6.205800 | 20.396525 | 0.829600 | 6.556725 | 9.593300 | 18.064725 | 4.836800 |
| max | 1.000000 | 20.315000 | 10.376800 | 19.353000 | 13.188300 | 16.671400 | 17.251600 | 8.447700 | 27.691800 | 10.151300 | ... | 18.440900 | 16.716500 | 8.402400 | 18.281800 | 27.928800 | 4.272900 | 18.321500 | 12.000400 | 26.079100 | 28.500700 |

    test_df.describe()

| | var_0 | var_1 | var_2 | var_3 | var_4 | var_5 | var_6 | var_7 | var_8 | var_9 | ... | var_190 | var_191 | var_192 | var_193 | var_194 | var_195 | var_196 | var_197 | var_198 | var_199 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| count | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | ... | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 | 200000.000000 |
| mean | 10.658737 | -1.624244 | 10.707452 | 6.788214 | 11.076399 | -5.050558 | 5.415164 | 16.529143 | 0.277135 | 7.569407 | ... | 3.189766 | 7.458269 | 1.925944 | 3.322016 | 17.996967 | -0.133657 | 2.290899 | 8.912428 | 15.869184 | -3.246342 |
| std | 3.036716 | 4.040509 | 2.633888 | 2.052724 | 1.616456 | 7.869293 | 0.864686 | 3.424482 | 3.333375 | 1.231865 | ... | 4.551239 | 3.025189 | 1.479966 | 3.995599 | 3.140652 | 1.429678 | 5.446346 | 0.920904 | 3.008717 | 10.398589 |
| min | 0.188700 | -15.043400 | 2.355200 | -0.022400 | 5.484400 | -27.767000 | 2.216400 | 5.713700 | -9.956000 | 4.243300 | ... | -14.093300 | -2.407000 | -3.340900 | -11.413100 | 9.382800 | -4.911900 | -13.944200 | 6.169600 | 6.584000 | -39.457800 |
| 25% | 8.442975 | -4.700125 | 8.735600 | 5.230500 | 9.891075 | -11.201400 | 4.772600 | 13.933900 | -2.303900 | 6.623800 | ... | -0.095000 | 5.166500 | 0.882975 | 0.587600 | 15.634775 | -1.160700 | -1.948600 | 8.260075 | 13.847275 | -11.124000 |
| 50% | 10.513800 | -1.590500 | 10.560700 | 6.822350 | 11.099750 | -4.834100 | 5.391600 | 16.422700 | 0.372000 | 7.632000 | ... | 3.162400 | 7.379000 | 1.892600 | 3.428500 | 17.977600 | -0.162000 | 2.403600 | 8.892800 | 15.943400 | -2.725950 |
| 75% | 12.739600 | 1.343400 | 12.495025 | 8.327600 | 12.253400 | 0.942575 | 6.005800 | 19.094550 | 2.930025 | 8.584825 | ... | 6.336475 | 9.531100 | 2.956000 | 6.174200 | 20.391725 | 0.837900 | 6.519800 | 9.595900 | 18.045200 | 4.935400 |
| max | 22.323400 | 9.385100 | 18.714100 | 13.142000 | 16.037100 | 17.253700 | 8.302500 | 28.292800 | 9.665500 | 11.003600 | ... | 20.359000 | 16.716500 | 8.005000 | 17.632600 | 27.947800 | 4.545400 | 15.920700 | 12.275800 | 26.538400 | 27.907400 |

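Conclusions

1. The standard deviations (std) are large across the features.
2. The min, max, mean, and std values are close between the training set and the test set.
3. The mean values are spread over a wide range from one feature to the next.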
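The second conclusion can also be checked numerically rather than by reading the two tables side by side. The sketch below is one possible way to do that; `compare_summary`, `STATS`, and the synthetic frames are names and data introduced for illustration, not part of the original notebook.

```python
import numpy as np
import pandas as pd

STATS = ["mean", "std", "min", "max"]


def compare_summary(train, test, features):
    """Absolute train/test gap of each summary statistic, one row per feature."""
    train_stats = train[features].agg(STATS)
    test_stats = test[features].agg(STATS)
    return (train_stats - test_stats).abs().T


# Synthetic stand-ins for train_df / test_df, drawn from the same distribution.
rng = np.random.default_rng(0)
features = ["var_0", "var_1", "var_2"]
toy_train = pd.DataFrame(rng.normal(10, 3, size=(1000, 3)), columns=features)
toy_test = pd.DataFrame(rng.normal(10, 3, size=(1000, 3)), columns=features)

gaps = compare_summary(toy_train, toy_test, features)
print(gaps.round(3))
```

Small values in every column of `gaps` would support the claim that the two sets have similar summary statistics. On the real data the call would use the 200 `var_*` columns as `features`.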

Plots

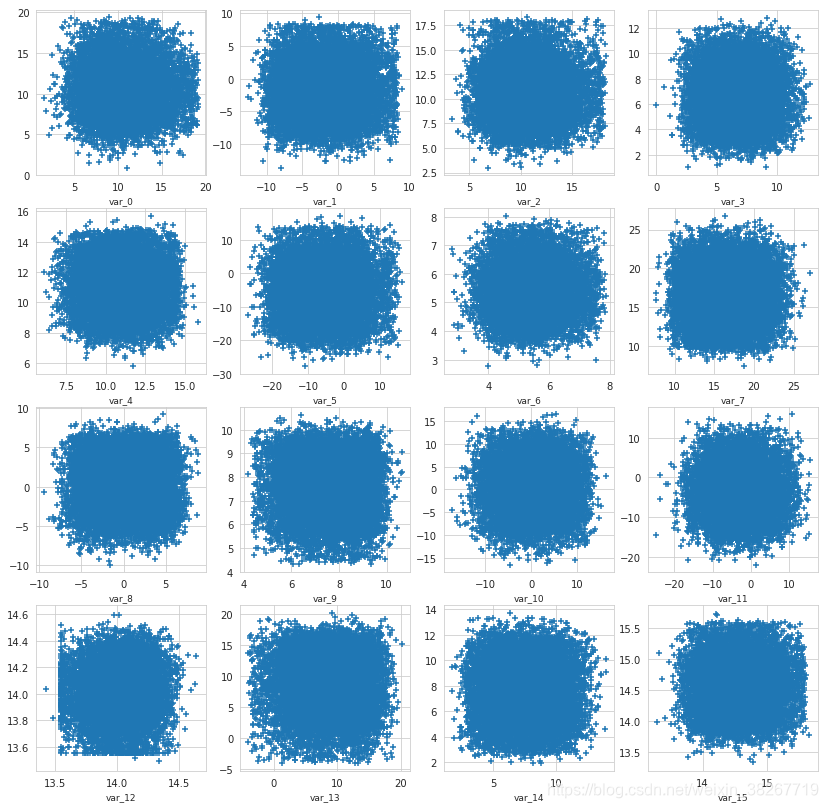

Scatter plots showing the distribution of some features

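    def plot_feature_scatter(df1, df2, features):
        """Scatter each feature of df1 against the same feature of df2 on a 4x4 grid."""
        sns.set_style('whitegrid')
        # plt.subplots already creates the figure, so no separate plt.figure() call
        fig, ax = plt.subplots(4, 4, figsize=(14, 14))
        for i, feature in enumerate(features, start=1):
            plt.subplot(4, 4, i)
            plt.scatter(df1[feature], df2[feature], marker='+')
            plt.xlabel(feature, fontsize=9)
        plt.show()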

Nothing much stands out in the scatter plots; the points look tightly clustered for every feature.

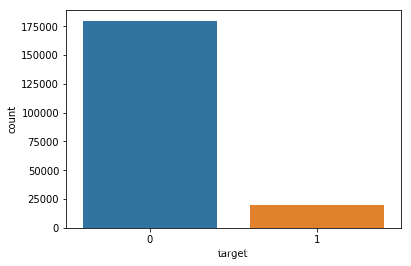

Is the sample balanced?

    import matplotlib.pyplot as plt
    import seaborn as sns

    sns.countplot(x='target', data=train_df)

    # Count the positive (target == 1) and negative (target == 0) samples
    positive_num = train_df.target[train_df.target == 1].value_counts()
    negative_num = train_df.target[train_df.target == 0].value_counts()

Positive samples: positive_num = 1 20098

Negative samples: negative_num = 0 179902

    print("Share of target = 1: {}%".format(100 * train_df["target"].value_counts()[1] / train_df.shape[0]))

    Share of target = 1: 10.049%

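The classes are clearly very imbalanced (only about 10% of the samples are positive), so balancing the samples is worth considering.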
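The post does not say which balancing method is meant. One simple option is random undersampling of the majority class, sketched below on toy data; the helper name `undersample_majority` is hypothetical, and class weights or oversampling would be equally valid alternatives.

```python
import pandas as pd


def undersample_majority(df, target="target", seed=0):
    """Randomly downsample every class to the size of the rarest class."""
    n_min = df[target].value_counts().min()
    parts = [
        group.sample(n=n_min, random_state=seed)
        for _, group in df.groupby(target)
    ]
    # Shuffle so that the classes are interleaved again.
    return pd.concat(parts).sample(frac=1, random_state=seed).reset_index(drop=True)


# Toy frame with the same 9:1 imbalance as the training set (assumed data).
toy = pd.DataFrame({"target": [0] * 90 + [1] * 10, "var_0": range(100)})
balanced = undersample_majority(toy)
print(balanced["target"].value_counts())
```

If this is used with cross-validation, it would normally be applied to the training folds only, so that validation scores still reflect the original class ratio.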

Distribution of each feature

First, look at the difference between target 0 and target 1

    def plot_feature_distribution(df1, df2, label1, label2, features):
        """Overlay the KDE of each feature for df1 and df2 on a 10x10 grid."""
        sns.set_style('whitegrid')
        fig, ax = plt.subplots(10, 10, figsize=(18, 22))
        for i, feature in enumerate(features, start=1):
            plt.subplot(10, 10, i)
            # Note: seaborn >= 0.11 deprecates `bw` in favor of `bw_method`
            sns.kdeplot(df1[feature], bw=0.5, label=label1)
            sns.kdeplot(df2[feature], bw=0.5, label=label2)
            plt.xlabel(feature, fontsize=9)
            plt.tick_params(axis='x', which='major', labelsize=6, pad=-6)
            plt.tick_params(axis='y', which='major', labelsize=6)
        plt.show()

    # Split the training set by class
    t0 = train_df.loc[train_df['target'] == 0]
    t1 = train_df.loc[train_df['target'] == 1]

    # First 100 features (var_0 to var_99)
    features = train_df.columns.values[2:102]
    plot_feature_distribution(t0, t1, '0', '1', features)

    # Remaining 100 features (var_100 to var_199)
    features = train_df.columns.values[102:202]
    plot_feature_distribution(t0, t1, '0', '1', features)

These plots show which features have clearly different distributions for target 0 and target 1.
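Reading 200 small density plots by eye is tedious, so it can help to rank the features by a single number. One option, which is an addition here and not something the original notebook does, is the standardized mean difference between the two classes. `class_separation` and the synthetic frame below are illustrative assumptions.

```python
import numpy as np
import pandas as pd


def class_separation(df, features, target="target"):
    """Absolute standardized mean difference between target=1 and target=0."""
    g0 = df.loc[df[target] == 0, features]
    g1 = df.loc[df[target] == 1, features]
    pooled_std = np.sqrt((g0.var() + g1.var()) / 2.0)
    gap = (g1.mean() - g0.mean()).abs() / pooled_std
    return gap.sort_values(ascending=False)


# Synthetic data: var_a is shifted for target=1, var_b is pure noise.
rng = np.random.default_rng(0)
target = rng.integers(0, 2, size=2000)
toy = pd.DataFrame({
    "target": target,
    "var_a": rng.normal(size=2000) + 2.0 * target,
    "var_b": rng.normal(size=2000),
})

separation = class_separation(toy, ["var_a", "var_b"])
print(separation)
```

This only captures a shift in the mean. Features whose two class distributions differ in shape but not in location would still need the density plots.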

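Distribution of the same features in train and test

    features = train_df.columns.values[2:102]
    plot_feature_distribution(train_df, test_df, 'train', 'test', features)

    features = train_df.columns.values[102:202]
    plot_feature_distribution(train_df, test_df, 'train', 'test', features)

The train and test curves are almost indistinguishable, which means the test set matches the training set very closely. That is good news for prediction.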
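The visual impression can be backed by a number. A minimal sketch, assuming a two-sample Kolmogorov-Smirnov statistic is an acceptable measure of train/test drift: the helper `ks_statistic` below is written with NumPy only and is an illustration, not part of the original analysis.

```python
import numpy as np


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: largest gap between the ECDFs."""
    a = np.sort(np.asarray(a, dtype=float))
    b = np.sort(np.asarray(b, dtype=float))
    # Evaluate both empirical CDFs at every observed value.
    grid = np.concatenate([a, b])
    cdf_a = np.searchsorted(a, grid, side="right") / a.size
    cdf_b = np.searchsorted(b, grid, side="right") / b.size
    return float(np.max(np.abs(cdf_a - cdf_b)))


# Synthetic samples: one pair from the same distribution, one pair shifted.
rng = np.random.default_rng(0)
same = ks_statistic(rng.normal(size=5000), rng.normal(size=5000))
shifted = ks_statistic(rng.normal(size=5000), rng.normal(loc=1.0, size=5000))
print(same, shifted)

# On the real data this would be called per feature, for example:
# ks_statistic(train_df['var_0'], test_df['var_0'])
```

A statistic near 0 for every feature would agree with the plots; values approaching 1 would indicate that a feature is distributed differently in the two sets.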

Distribution of summary statistics

Mean of train and test

    # Distribution of the per-row mean in train and test
    # Note: sns.distplot is deprecated in seaborn >= 0.11 (histplot/displot replace it)
    plt.figure(figsize=(16,6))
    features = train_df.columns.values[2:202]
    plt.title("Distribution of mean values per row in the train and test set")
    sns.distplot(train_df[features].mean(axis=1), color="green", kde=True, bins=120, label='train')
    sns.distplot(test_df[features].mean(axis=1), color="blue", kde=True, bins=120, label='test')
    plt.legend()
    plt.show()

    # Distribution of the per-column mean in train and test
    plt.figure(figsize=(16,6))
    plt.title("Distribution of mean values per column in the train and test set")
    sns.distplot(train_df[features].mean(axis=0), color="magenta", kde=True, bins=120, label='train')
    sns.distplot(test_df[features].mean(axis=0), color="darkblue", kde=True, bins=120, label='test')
    plt.legend()
    plt.show()

Standard deviation (std) of train and test

    # Distribution of the per-row std in train and test
    plt.figure(figsize=(16,6))
    plt.title("Distribution of std values per row in the train and test set")
    sns.distplot(train_df[features].std(axis=1), color="black", kde=True, bins=120, label='train')
    sns.distplot(test_df[features].std(axis=1), color="red", kde=True, bins=120, label='test')
    plt.legend()
    plt.show()

    # Distribution of the per-column std in train and test
    plt.figure(figsize=(16,6))
    plt.title("Distribution of std values per column in the train and test set")
    sns.distplot(train_df[features].std(axis=0), color="blue", kde=True, bins=120, label='train')
    sns.distplot(test_df[features].std(axis=0), color="green", kde=True, bins=120, label='test')
    plt.legend()
    plt.show()

Mean of target 0 and target 1

    # Distribution of the per-row mean within train, split by class
    t0 = train_df.loc[train_df['target'] == 0]
    t1 = train_df.loc[train_df['target'] == 1]
    plt.figure(figsize=(16,6))
    plt.title("Distribution of mean values per row in the train set")
    sns.distplot(t0[features].mean(axis=1), color="red", kde=True, bins=120, label='target = 0')
    sns.distplot(t1[features].mean(axis=1), color="blue", kde=True, bins=120, label='target = 1')
    plt.legend()
    plt.show()

Min of train and test

    # Distribution of the per-row min in train and test
    plt.figure(figsize=(16,6))
    features = train_df.columns.values[2:202]
    plt.title("Distribution of min values per row in the train and test set")
    sns.distplot(train_df[features].min(axis=1), color="red", kde=True, bins=120, label='train')
    sns.distplot(test_df[features].min(axis=1), color="orange", kde=True, bins=120, label='test')
    plt.legend()
    plt.show()

    # Distribution of the per-column min in train and test
    plt.figure(figsize=(16,6))
    features = train_df.columns.values[2:202]
    plt.title("Distribution of min values per column in the train and test set")
    sns.distplot(train_df[features].min(axis=0), color="magenta", kde=True, bins=120, label='train')
    sns.distplot(test_df[features].min(axis=0), color="darkblue", kde=True, bins=120, label='test')
    plt.legend()
    plt.show()

Max distribution of train and test

Min distribution of target 0 and target 1 in train

Max distribution of target 0 and target 1 in train

Skew and kurtosis

    # Distribution of the per-row skew in train and test
    plt.figure(figsize=(16,6))
    plt.title("Distribution of skew per row in the train and test set")
    sns.distplot(train_df[features].skew(axis=1), color="red", kde=True, bins=120, label='train')
    sns.distplot(test_df[features].skew(axis=1), color="orange", kde=True, bins=120, label='test')
    plt.legend()
    plt.show()

    # Distribution of the per-column skew in train and test
    plt.figure(figsize=(16,6))
    plt.title("Distribution of skew per column in the train and test set")
    sns.distplot(train_df[features].skew(axis=0), color="magenta", kde=True, bins=120, label='train')
    sns.distplot(test_df[features].skew(axis=0), color="darkblue", kde=True, bins=120, label='test')
    plt.legend()
    plt.show()

The remaining plots, in order, show the skew distribution of train and test, the kurtosis distribution of train and test, and the skew and kurtosis distributions of target 0 and target 1 within train.
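Every plot in this section follows the same recipe: reduce the feature columns along rows or columns with some statistic, then plot the result. The statistics that are only listed here (max, kurtosis) could be computed with one small helper, sketched below. `stat_per_axis` and the toy frame are hypothetical names introduced for illustration; the helper relies only on the pandas reductions `max`, `skew`, and `kurtosis`.

```python
import numpy as np
import pandas as pd


def stat_per_axis(df, features, stat, axis):
    """Apply a pandas reduction such as 'max', 'skew' or 'kurtosis' along an axis.

    axis=1 gives one value per row, axis=0 gives one value per column.
    """
    return getattr(df[features], stat)(axis=axis)


# Toy frame standing in for train_df[features] (assumed data).
rng = np.random.default_rng(0)
features = [f"var_{i}" for i in range(6)]
toy = pd.DataFrame(rng.normal(size=(50, 6)), columns=features)

row_kurtosis = stat_per_axis(toy, features, "kurtosis", axis=1)
col_max = stat_per_axis(toy, features, "max", axis=0)
print(row_kurtosis.head())
print(col_max)
```

The returned series can be passed to the same plotting calls used for the mean, std, min, and skew plots.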

Feature correlation

    %%time
    features = [c for c in train_df.columns if c not in ['ID_code', 'target']]
    # Absolute pairwise correlations, flattened and sorted in ascending order
    correlations = train_df[features].corr().abs().unstack().sort_values(kind="quicksort").reset_index()
    # Drop each feature's correlation with itself
    correlations = correlations[correlations['level_0'] != correlations['level_1']]
    # Print the 10 most correlated feature pairs (the tail of the ascending sort)
    correlations.tail(10)

| | level_0 | level_1 | 0 |
|---|---|---|---|
| 39790 | var_183 | var_189 | 0.009359 |
| 39791 | var_189 | var_183 | 0.009359 |
| 39792 | var_174 | var_81 | 0.009490 |
| 39793 | var_81 | var_174 | 0.009490 |
| 39794 | var_81 | var_165 | 0.009714 |
| 39795 | var_165 | var_81 | 0.009714 |
| 39796 | var_53 | var_148 | 0.009788 |
| 39797 | var_148 | var_53 | 0.009788 |
| 39798 | var_26 | var_139 | 0.009844 |
| 39799 | var_139 | var_26 | 0.009844 |

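Even the most correlated pairs have an absolute correlation below 0.01, so the features are essentially uncorrelated with each other.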
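Because the correlation matrix is symmetric, the unstacked listing shows every pair twice. A possible variant that keeps each unordered pair once is to read only the strict upper triangle of the matrix. The sketch below assumes this is acceptable; `top_correlated_pairs`, its column names, and the toy data are introduced here for illustration.

```python
import numpy as np
import pandas as pd


def top_correlated_pairs(df, features, n=10):
    """Return the n feature pairs with the largest absolute Pearson correlation."""
    corr = df[features].corr().abs().to_numpy()
    # Strict upper triangle: each unordered pair once, diagonal excluded.
    i, j = np.triu_indices(len(features), k=1)
    names = np.asarray(features)
    pairs = pd.DataFrame({
        "feature_a": names[i],
        "feature_b": names[j],
        "abs_corr": corr[i, j],
    })
    return pairs.sort_values("abs_corr", ascending=False).head(n).reset_index(drop=True)


# Toy data: var_b is a noisy copy of var_a, var_c is independent.
rng = np.random.default_rng(0)
base = rng.normal(size=500)
toy = pd.DataFrame({
    "var_a": base,
    "var_b": base + 0.1 * rng.normal(size=500),
    "var_c": rng.normal(size=500),
})

top = top_correlated_pairs(toy, ["var_a", "var_b", "var_c"])
print(top)
```

With 200 features this yields 19,900 candidate pairs instead of 39,800 rows, and the top of the result corresponds to the tail of the sorted listing used in the post.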

Check duplicate values in each column

    %%time
    features = train_df.columns.values[2:202]
    unique_max_train = []
    unique_max_test = []
    for feature in features:
        # Most frequent value of the feature and how often it occurs
        values = train_df[feature].value_counts()
        unique_max_train.append([feature, values.max(), values.idxmax()])
        values = test_df[feature].value_counts()
        unique_max_test.append([feature, values.max(), values.idxmax()])

    # Top 15 features by duplicate count in train
    np.transpose(pd.DataFrame(unique_max_train, columns=['Feature', 'Max duplicates', 'Value'])
                 .sort_values(by='Max duplicates', ascending=False).head(15))

    # Top 15 features by duplicate count in test
    np.transpose(pd.DataFrame(unique_max_test, columns=['Feature', 'Max duplicates', 'Value'])
                 .sort_values(by='Max duplicates', ascending=False).head(15))

| | 68 | 126 | 108 | 12 | 91 | 103 | 148 | 161 | 25 | 71 | 43 | 166 | 125 | 169 | 133 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Feature | var_68 | var_126 | var_108 | var_12 | var_91 | var_103 | var_148 | var_161 | var_25 | var_71 | var_43 | var_166 | var_125 | var_169 | var_133 |
| Max duplicates | 1104 | 307 | 302 | 188 | 86 | 78 | 74 | 69 | 60 | 60 | 58 | 53 | 53 | 51 | 50 |
| Value | 5.0197 | 11.5357 | 14.1999 | 13.5546 | 6.9939 | 1.4659 | 4.0004 | 5.7114 | 13.5965 | 0.5389 | 11.5738 | 2.8446 | 12.2189 | 5.8455 | 6.6873 |

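In train and test, the ranking of features by duplicates, the number of occurrences, and the duplicated values themselves are all roughly the same.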

Reference: https://www.kaggle.com/gpreda/santander-eda-and-prediction