In this part we use PyTorch to build a neural network model for handwritten digit recognition.

The code is based on Python 3.7, PyTorch 1.0 and CUDA 10.0.

Handwritten digit recognition

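We first train the network on the provided training data and optimize its parameters. We then use the trained model to make predictions on the test data, compare the predicted values with the true values, and compute the prediction accuracy. The model we are going to build is a convolutional neural network. Let's get started.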

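```python
import torch
import numpy
import torchvision
import matplotlib.pyplot as plt
# Jupyter magic: display plots inline in the notebook (remove this line in a plain .py script)
%matplotlib inline

from torchvision import datasets, transforms  # torchvision provides data processing, loading and preview utilities
from torch.autograd import Variable  # optional since PyTorch 0.4, where plain tensors can be used directly
```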

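torchvision.transforms contains a large number of data transformation classes, many of which can be used for data augmentation. If only a very limited number of images is available for training on the problem we want to solve, we can apply various transformations to those images to generate a new, larger training set. Shrinking or enlarging images and flipping them horizontally or vertically are all examples of data augmentation.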

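```python
# transform specifies which transformations are applied to the data when the dataset is loaded
# (the individual operations are explained below). Define it before creating the datasets.
transform = transforms.Compose([transforms.ToTensor(),
                                transforms.Lambda(lambda x: x.repeat(3, 1, 1)),
                                transforms.Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5])])
```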

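We can think of torchvision.transforms.Compose in the code above as a container that chains several transformations together. Its argument is a list whose elements are the transformations applied to the loaded data. Here Compose holds three transformations: transforms.ToTensor, which converts the image to a tensor; transforms.Lambda(lambda x: x.repeat(3,1,1)), which copies the single channel three times (note that MNIST images are single-channel, while the network below expects 3 input channels); and transforms.Normalize, a standardization step. Standardization uses the mean and the standard deviation of the original data: each channel has its mean subtracted and is then divided by its standard deviation. With the data's true statistics, the result has mean 0 and standard deviation 1 (this rescales the data but does not make it normally distributed). The formula, applied per channel, is:

$$
x_{\text{norm}} = \frac{x - \text{mean}}{\text{std}}
$$

We cut a corner here, though: the mean and standard deviation are not computed from the original data but simply set to 0.5. This still serves our purpose: ToTensor scales the pixel values to [0, 1], and with mean = std = 0.5 the formula maps that range to [-1, 1].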

```python
# download=True fetches MNIST into ./data/ on the first run and reuses the local copy afterwards
data_train = datasets.MNIST(root='./data/',
                            transform=transform,
                            train=True,
                            download=True)

data_test = datasets.MNIST(root='./data/',
                           transform=transform,
                           train=False)
```
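If you would rather use the real statistics instead of 0.5, the per-channel mean and standard deviation can be computed from the training images. The helper compute_mean_std below is a hypothetical sketch, not part of torchvision: it accumulates sums and sums of squares over a DataLoader and assumes every dataset item is an (image, label) pair with image shape (C, H, W). It is checked here on a tiny synthetic dataset.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset


def compute_mean_std(dataset, batch_size=1000):
    """Hypothetical helper: per-channel mean and (population) std over all pixels.

    Assumes each item of `dataset` is (image, label) with image shape (C, H, W).
    """
    loader = DataLoader(dataset, batch_size=batch_size, shuffle=False)
    channel_sum, channel_sq_sum, n_pixels = 0.0, 0.0, 0
    for images, _ in loader:
        images = images.double()  # accumulate in float64 to limit rounding error
        channel_sum = channel_sum + images.sum(dim=(0, 2, 3))
        channel_sq_sum = channel_sq_sum + (images ** 2).sum(dim=(0, 2, 3))
        n_pixels += images.size(0) * images.size(2) * images.size(3)
    mean = channel_sum / n_pixels
    std = (channel_sq_sum / n_pixels - mean ** 2).sqrt()  # Var[x] = E[x^2] - E[x]^2
    return mean, std


# Tiny synthetic check: two all-black and two all-white 1x2x2 images
images = torch.cat([torch.zeros(2, 1, 2, 2), torch.ones(2, 1, 2, 2)])
toy = TensorDataset(images, torch.zeros(4, dtype=torch.long))
mean, std = compute_mean_std(toy, batch_size=3)  # batch_size=3 forces two batches
print(mean.item(), std.item())  # 0.5 0.5
```

Applied to MNIST loaded with transform=transforms.ToTensor() only (that is, without the Lambda and Normalize steps), this should give values close to the commonly used 0.1307 and 0.3081, which could then be passed to transforms.Normalize.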

Data preview and data loading

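Once the data has been downloaded and read in, we still need to load it in batches. Reading the data in can be thought of as processing the images; after that, the images have to be packed up and fed to the model for training, and loading is this packing step. batch_size sets the size of each batch, and shuffle decides whether the order of the images is shuffled during loading. The loading code is: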

```python
data_loader_train = torch.utils.data.DataLoader(dataset=data_train, batch_size=64, shuffle=True)
data_loader_test = torch.utils.data.DataLoader(dataset=data_test, batch_size=64, shuffle=True)
```
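The effect of batch_size and shuffle can be tried out on a tiny stand-in dataset. This sketch uses torch.utils.data.TensorDataset with ten fake images in place of MNIST (an assumption for illustration): shuffling changes only the order in which samples arrive, and the last batch holds whatever is left over unless drop_last=True is set.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Ten fake 1x28x28 "images" with labels 0..9 (a stand-in for MNIST)
dataset = TensorDataset(torch.zeros(10, 1, 28, 28), torch.arange(10))

loader = DataLoader(dataset, batch_size=4, shuffle=True)
batch_sizes = [x.size(0) for x, _ in loader]
print(len(loader), batch_sizes)  # 3 [4, 4, 2]: the last batch holds the remainder

# Shuffling changes only the order: each pass still visits every sample exactly once
seen = sorted(label for _, ys in loader for label in ys.tolist())
print(seen)  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]

# drop_last=True discards the incomplete final batch instead
dropped = [x.size(0) for x, _ in DataLoader(dataset, batch_size=4, drop_last=True)]
print(dropped)  # [4, 4]
```

By the same arithmetic, data_loader_train yields 938 batches per epoch (60000 / 64 = 937.5, rounded up, with 32 images in the last batch), and data_loader_test yields 157 batches.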

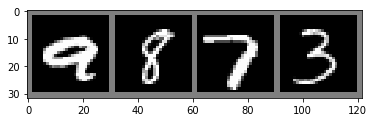

```python
# After loading, we can take one batch of data and preview it:
images, labels = next(iter(data_loader_train))
# make_grid returns an image of size (channel, height, width), but matplotlib expects (height, width, channel)
img = torchvision.utils.make_grid(images)
img = img.numpy().transpose(1, 2, 0)  # convert to (height, width, channel)
std = [0.5, 0.5, 0.5]
mean = [0.5, 0.5, 0.5]
img = img * std + mean  # undo the Normalize step above
print([labels[i].item() for i in range(64)])  # labels is a tensor; .item() extracts each value as a Python number
plt.imshow(img)
```

[0, 3, 6, 6, 7, 1, 0, 9, 0, 0, 3, 2, 9, 1, 4, 1, 8, 9, 6, 3, 7, 3, 7, 9, 4, 4, 9, 4, 7, 5, 3, 1, 5, 3, 1, 3, 4, 4, 2, 4, 8, 9, 5, 3, 8, 1, 8, 5, 6, 4, 0, 9, 2, 6, 0, 6, 4, 1, 2, 5, 9, 8, 7, 1]


Building the network

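Note that Conv2d takes the number of input channels first, then the number of output channels, and so on, so consecutive layers must match up: each layer's number of input channels has to equal the previous layer's number of output channels.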
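Matching up the layers also means getting the input size of the first Linear layer right. For Conv2d and MaxPool2d with dilation 1, the spatial output size is floor((input + 2 * padding - kernel_size) / stride) + 1. The helper conv_out below is a hypothetical name for this formula; the second half of the sketch feeds a dummy batch through the same convolutional layers as the model to confirm that the flattened size is 14 * 14 * 128 = 25088.

```python
import torch


def conv_out(size, kernel_size, stride=1, padding=0):
    """Spatial output size of Conv2d / MaxPool2d (dilation=1, ceil_mode=False)."""
    return (size + 2 * padding - kernel_size) // stride + 1


h = 28
h = conv_out(h, kernel_size=3, stride=1, padding=1)  # Conv2d(3, 64):    28 -> 28
h = conv_out(h, kernel_size=3, stride=1, padding=1)  # Conv2d(64, 128):  28 -> 28
h = conv_out(h, kernel_size=2, stride=2)             # MaxPool2d(2, 2):  28 -> 14
print(h, h * h * 128)  # 14 25088

# Cross-check with the real layers on a dummy batch of 2 three-channel 28x28 images
features = torch.nn.Sequential(
    torch.nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1),
    torch.nn.ReLU(),
    torch.nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1),
    torch.nn.ReLU(),
    torch.nn.MaxPool2d(stride=2, kernel_size=2),
)
out = features(torch.zeros(2, 3, 28, 28))
print(out.shape)  # torch.Size([2, 128, 14, 14])
```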

```python
class Model(torch.nn.Module):

    def __init__(self):
        super(Model, self).__init__()
        # Two 3x3 convolutions (padding 1 keeps 28x28), then 2x2 max pooling -> 128 x 14 x 14
        self.conv1 = torch.nn.Sequential(
            torch.nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1),
            torch.nn.ReLU(),
            torch.nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1),
            torch.nn.ReLU(),
            torch.nn.MaxPool2d(stride=2, kernel_size=2))
        # Fully connected classifier: 14*14*128 features -> 1024 -> 10 classes
        self.dense = torch.nn.Sequential(
            torch.nn.Linear(14 * 14 * 128, 1024),
            torch.nn.ReLU(),
            torch.nn.Dropout(p=0.5),
            torch.nn.Linear(1024, 10))

    def forward(self, x):
        x = self.conv1(x)
        x = x.view(-1, 14 * 14 * 128)  # flatten the multi-channel feature maps so they can feed the fully connected layers
        x = self.dense(x)
        return x


model = Model()
cost = torch.nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters())
print(model)
```
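A note on the loss and optimizer: CrossEntropyLoss expects the raw scores (logits) from the last Linear layer together with integer class labels, and applies log-softmax internally, which is why the model ends without a softmax layer. The sketch below checks this equivalence on random logits (the data is made up for illustration). Also, torch.optim.Adam(model.parameters()) without further arguments uses Adam's default learning rate of 0.001.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 10)           # raw scores for 4 images and 10 classes; no softmax applied
targets = torch.tensor([3, 0, 9, 1])  # class indices, not one-hot vectors

ce = torch.nn.CrossEntropyLoss()(logits, targets)           # averaged over the batch by default
manual = F.nll_loss(F.log_softmax(logits, dim=1), targets)  # log-softmax followed by negative log-likelihood
by_hand = -F.log_softmax(logits, dim=1)[torch.arange(4), targets].mean()  # -mean(log p[target])
print(torch.allclose(ce, manual), torch.allclose(ce, by_hand))  # True True
```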

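```python
epoch_n = 5
for epoch in range(epoch_n):
    running_loss = 0.0
    running_correct = 0
    print('Epoch {}/{}'.format(epoch, epoch_n))
    print('-' * 10)

    model.train()  # training mode: Dropout is active
    for data in data_loader_train:
        X_train, Y_train = data
        X_train, Y_train = Variable(X_train), Variable(Y_train)
        outputs = model(X_train)
        # torch.max(..., 1) returns the maximum of each row and its column index; the index is the predicted class
        _, pred = torch.max(outputs.data, 1)
        optimizer.zero_grad()
        loss = cost(outputs, Y_train)
        loss.backward()
        optimizer.step()
        running_loss += loss.item()  # loss is already the mean over the batch
        running_correct += torch.sum(pred == Y_train.data).item()

    testing_correct = 0
    model.eval()  # evaluation mode: Dropout is disabled
    with torch.no_grad():  # gradients are not needed for testing
        for data in data_loader_test:
            X_test, Y_test = data
            X_test, Y_test = Variable(X_test), Variable(Y_test)
            outputs = model(X_test)
            _, pred = torch.max(outputs, 1)
            testing_correct += torch.sum(pred == Y_test).item()

    print('Loss is :{:.4f},Train Accuracy is : {:.4f}%, Test Accuracy is :{:.4f}%'.format(
        running_loss / len(data_loader_train),      # average loss per batch
        100.0 * running_correct / len(data_train),  # float division, not integer tensor division
        100.0 * testing_correct / len(data_test)))
```

Note that the log below was produced by the original version of this loop, which divided the summed batch losses by the number of samples instead of the number of batches (so the printed loss is about 64 times smaller than the average batch loss), computed the accuracies with integer tensor division in PyTorch 1.0 (hence the whole-number percentages), and evaluated the test set with Dropout still active. The loop above will print different numbers.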

```text
Model(
  (conv1): Sequential(
    (0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): ReLU()
    (2): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (3): ReLU()
    (4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (dense): Sequential(
    (0): Linear(in_features=25088, out_features=1024, bias=True)
    (1): ReLU()
    (2): Dropout(p=0.5)
    (3): Linear(in_features=1024, out_features=10, bias=True)
  )
)
Epoch 0/5
----------
Loss is :0.0021,Train Accuracy is : 96.0000%, Test Accuracy is :98.0000
Epoch 1/5
----------
Loss is :0.0007,Train Accuracy is : 98.0000%, Test Accuracy is :98.0000
Epoch 2/5
----------
Loss is :0.0005,Train Accuracy is : 99.0000%, Test Accuracy is :98.0000
Epoch 3/5
----------
Loss is :0.0003,Train Accuracy is : 99.0000%, Test Accuracy is :98.0000
Epoch 4/5
----------
Loss is :0.0003,Train Accuracy is : 99.0000%, Test Accuracy is :98.0000
```

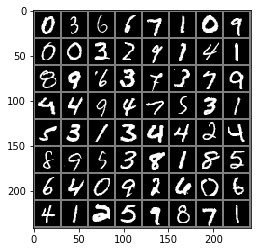
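torch.max(outputs, 1) returns a pair (values, indices) taken along dimension 1, the class dimension, and the indices are the predicted labels; outputs.argmax(dim=1) gives the same indices directly. The small example below uses made-up scores for three images and three classes, and computes the accuracy as a Python float via .item(), which avoids the integer tensor division that is the likely cause of the whole-number percentages in the log above.

```python
import torch

# Made-up scores: 3 images (rows) x 3 classes (columns)
outputs = torch.tensor([[0.1, 2.0, -1.0],
                        [3.0, 0.5, 0.2],
                        [0.0, 0.1, 0.9]])
labels = torch.tensor([1, 2, 2])

values, pred = torch.max(outputs, 1)  # per-row maximum and its column index
print(pred.tolist())  # [1, 0, 2]

correct = torch.sum(pred == labels)           # 0-dim integer tensor holding 2
accuracy = 100.0 * correct.item() / len(labels)  # plain Python float division
print(accuracy)  # 66.66666666666667
```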

```python
data_loader_test = torch.utils.data.DataLoader(dataset=data_test, batch_size=4, shuffle=True)
X_test, Y_test = next(iter(data_loader_test))
inputs = Variable(X_test)

model.eval()  # make sure Dropout is off when predicting
with torch.no_grad():
    pred = model(inputs)
# torch.max(..., 1) returns each row's maximum and its column index; the index is the predicted label
_, pred = torch.max(pred, 1)

print('Predict Label is :', [i.item() for i in pred.data])  # each i is a 0-dim tensor; .item() gives its value
print('Real Label is :', [i.item() for i in Y_test])

img = torchvision.utils.make_grid(X_test)
img = img.numpy().transpose(1, 2, 0)
std = [0.5, 0.5, 0.5]
mean = [0.5, 0.5, 0.5]
img = img * std + mean
plt.imshow(img)
```

Predict Label is : [9, 8, 7, 3]

Real Label is : [9, 8, 7, 3]

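Two details matter when predicting with a trained model: Dropout is only active in training mode, so model.eval() is needed for deterministic predictions, and a single image of shape (C, H, W) needs a batch dimension added with unsqueeze(0) before it is passed to the model. The sketch below uses a small hypothetical network in place of Model (torch.nn.Flatten needs a newer PyTorch than the 1.0 used in this post) and applies softmax to turn the logits into class probabilities.

```python
import torch

torch.manual_seed(0)
# Hypothetical tiny classifier with Dropout, standing in for Model
net = torch.nn.Sequential(
    torch.nn.Flatten(),
    torch.nn.Linear(3 * 28 * 28, 32),
    torch.nn.ReLU(),
    torch.nn.Dropout(p=0.5),
    torch.nn.Linear(32, 10),
)

image = torch.rand(3, 28, 28)  # one image, shape (C, H, W)
batch = image.unsqueeze(0)     # the model expects (N, C, H, W): add a batch dimension

net.train()
with torch.no_grad():
    a, b = net(batch), net(batch)
print(torch.equal(a, b))  # usually False: Dropout zeroes different units on each call

net.eval()
with torch.no_grad():
    logits = net(batch)
    same = torch.equal(logits, net(batch))  # eval mode is deterministic
    probs = torch.softmax(logits, dim=1)    # probabilities over the 10 classes
print(same)  # True
print(probs.argmax(dim=1).item(), probs.sum().item())  # predicted class, total probability of about 1.0
```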