Copyright notice: This is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/shujuelin/article/details/87895270

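```properties
# Name the components of agent a1
a1.sources = r1
a1.channels = c1
a1.sinks = k1

# Source: run tail -F and turn each new line of the log file into an event
a1.sources.r1.type = exec
a1.sources.r1.command = tail -F /usr/local/log/logs

# Channel: buffer events in memory, holding at most 1000 events
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000

# Sink: write events to HDFS
a1.sinks.k1.type = hdfs
a1.sinks.k1.hdfs.path = hdfs://spark1:9000/aiqiyi
# Prefix of the files the sink creates; %Y-%m-%d is replaced with the date
a1.sinks.k1.hdfs.filePrefix = %Y-%m-%d
a1.sinks.k1.hdfs.fileSuffix = .log
# Round the timestamp down before resolving time escape sequences
# (this is what produces time-bucketed directories when hdfs.path contains escapes)
a1.sinks.k1.hdfs.round = true
# Round down to a multiple of this many time units
a1.sinks.k1.hdfs.roundValue = 1
# Time unit for rounding: second, minute or hour (default: second);
# left commented out here, so the default applies
#a1.sinks.k1.hdfs.roundUnit = hour
# Resolve escape sequences with the agent's local time instead of the
# event's timestamp header
a1.sinks.k1.hdfs.useLocalTimeStamp = true
# Number of events written to the file before it is flushed to HDFS
a1.sinks.k1.hdfs.batchSize = 100
# DataStream writes plain, uncompressed output; use CompressedStream
# together with hdfs.codeC if compression is needed
a1.sinks.k1.hdfs.fileType = DataStream
# Roll to a new file every 600 seconds
a1.sinks.k1.hdfs.rollInterval = 600
# Roll to a new file at about 128 MB (value in bytes; exactly 128 MB is 134217728)
a1.sinks.k1.hdfs.rollSize = 134217700
# 0 disables rolling by event count
a1.sinks.k1.hdfs.rollCount = 0
# Minimum replicas per HDFS block; 1 avoids extra rolls triggered
# by under-replicated blocks
a1.sinks.k1.hdfs.minBlockReplicas = 1

# Bind the source and the sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```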
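The time-related sink settings (hdfs.round, hdfs.roundValue, hdfs.roundUnit, hdfs.useLocalTimeStamp, and the %Y-%m-%d in hdfs.filePrefix) are easier to follow with a small model. The Python sketch below is a simplified illustration, not Flume's code: it floors the timestamp field for the chosen unit to a multiple of roundValue, then formats the pattern with `strftime`, assuming Flume's %Y, %m, %d, %H and %M escapes mean the same as Python's codes of the same name. The helpers `round_down` and `resolve_prefix` are hypothetical names.

```python
from datetime import datetime

def round_down(ts: datetime, value: int, unit: str) -> datetime:
    """Floor ts to a multiple of `value` in `unit` (second, minute or hour)."""
    if unit == "second":
        return ts.replace(second=ts.second - ts.second % value, microsecond=0)
    if unit == "minute":
        return ts.replace(minute=ts.minute - ts.minute % value, second=0, microsecond=0)
    if unit == "hour":
        return ts.replace(hour=ts.hour - ts.hour % value, minute=0, second=0, microsecond=0)
    raise ValueError(f"unsupported roundUnit: {unit}")

def resolve_prefix(pattern: str, ts: datetime, round_on: bool = True,
                   value: int = 1, unit: str = "second") -> str:
    """Fill date escapes in `pattern` (defaults mirror this config's round settings).

    With useLocalTimeStamp = true, ts would be the agent's local clock
    rather than a timestamp taken from the event header.
    """
    if round_on:
        ts = round_down(ts, value, unit)
    return ts.strftime(pattern)

ts = datetime(2019, 2, 23, 14, 37, 52)
print(resolve_prefix("%Y-%m-%d", ts))                      # 2019-02-23
print(resolve_prefix("%H%M", ts, value=10, unit="minute"))  # 1430
```

With the values in this config (round = true, roundValue = 1, roundUnit left at its default of second), rounding changes nothing, and because hdfs.path contains no escape sequences, time only affects the date in the file prefix. Rounding starts to matter once the path uses escapes, for example a hypothetical path ending in /%H%M with roundValue = 10 and roundUnit = minute, which would give one directory per 10 minutes.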

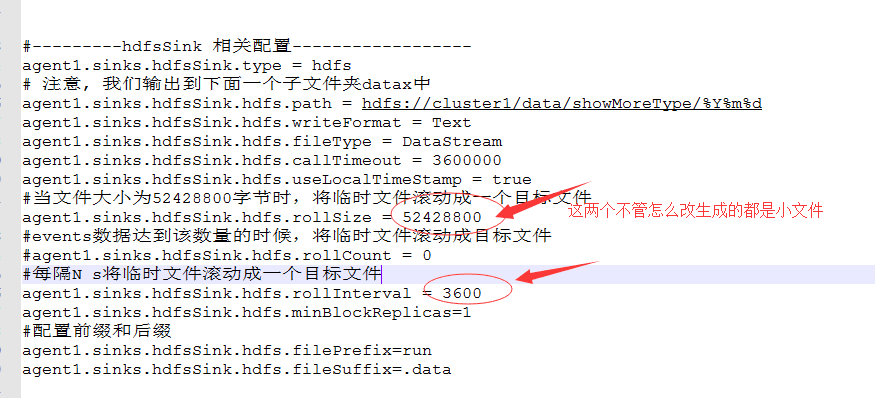

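In the HDFS sink, the settings that determine how many files are produced are hdfs.rollSize and hdfs.rollInterval: the current file is closed and a new one started as soon as either limit is reached (hdfs.rollCount is set to 0 here, so the number of events plays no part).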
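To estimate the file count, note that with rollCount = 0 whichever of rollInterval or rollSize fires first decides when a file rolls. The Python sketch below is a rough model under stated assumptions, not Flume's implementation: it assumes a steady, continuous write rate, ignores batching and any rolls caused by under-replicated blocks, and treats rollSize as an exact byte count. The function names are hypothetical.

```python
import math

SECONDS_PER_DAY = 86_400

def seconds_per_file(bytes_per_sec: float, roll_interval: int, roll_size: int) -> float:
    """Time until the first enabled roll trigger fires (0 disables a trigger)."""
    candidates = []
    if roll_interval > 0:
        candidates.append(float(roll_interval))
    if roll_size > 0 and bytes_per_sec > 0:
        candidates.append(roll_size / bytes_per_sec)
    # No enabled trigger: the file is never rolled.
    return min(candidates) if candidates else math.inf

def files_per_day(bytes_per_sec: float, roll_interval: int = 600,
                  roll_size: int = 134_217_700) -> float:
    """Average number of files closed per day at a steady write rate."""
    return SECONDS_PER_DAY / seconds_per_file(bytes_per_sec, roll_interval, roll_size)

def crossover_rate(roll_interval: int = 600, roll_size: int = 134_217_700) -> float:
    """Write rate (bytes/s) above which rollSize fires before rollInterval."""
    return roll_size / roll_interval

print(files_per_day(10 * 1024))    # 10 KiB/s, rollInterval wins: 144.0
print(files_per_day(1024 * 1024))  # 1 MiB/s, rollSize wins: about 675
print(crossover_rate() / 1024)     # threshold, about 218.5 KiB/s
```

Under these assumptions, the config above closes about 144 files a day (one every 10 minutes) as long as events keep arriving at a modest rate, and switches to size-based rolling only above roughly 218 KiB/s.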