1、flume安装目录下新建文件夹 example

2、在example下新建文件

log-hdfs.conf

内容如下:

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

#exec 指的是命令

# Describe/configure the source

a1.sources.r1.type = exec

#F根据文件名追中, f根据文件的nodeid追中

a1.sources.r1.command = tail -F /home/hadoop/testdata/testflume.log

a1.sources.r1.channels = c1

# Describe the sink

#下沉目标

a1.sinks.k1.type = hdfs

a1.sinks.k1.channel = c1

#指定目录, flum帮做目的替换

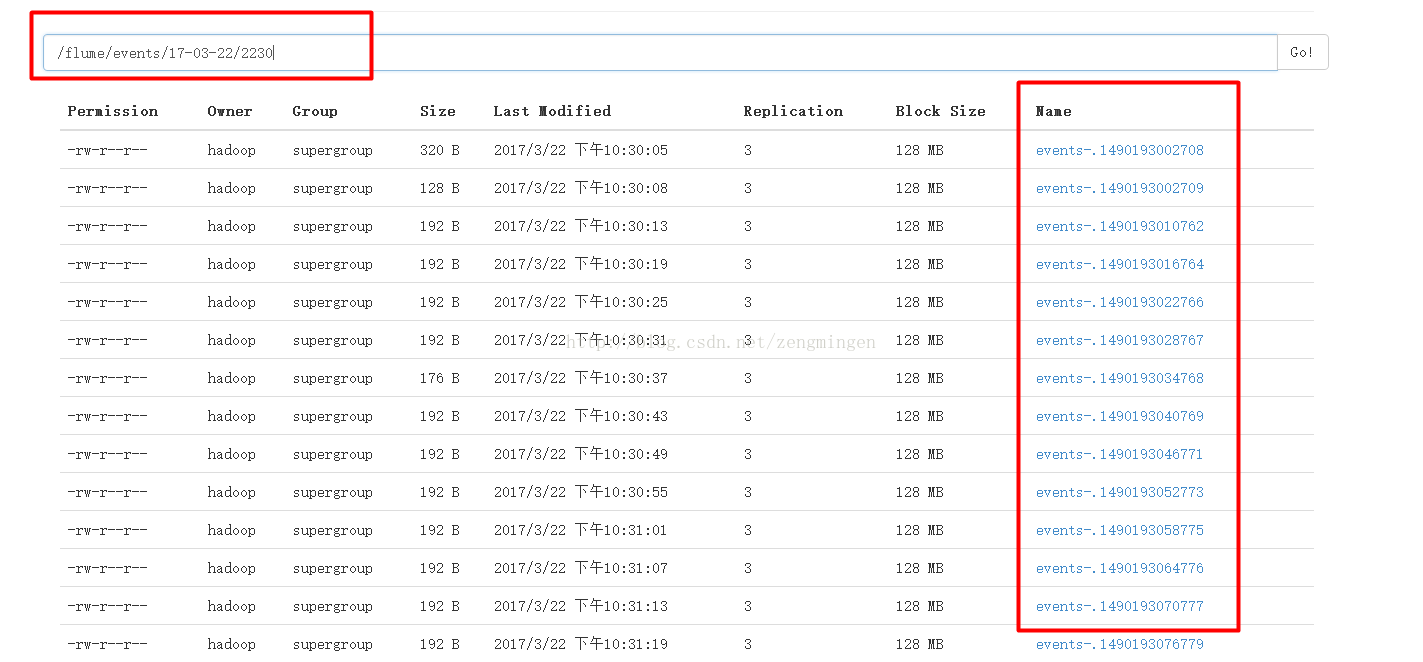

a1.sinks.k1.hdfs.path = /flume/events/%y-%m-%d/%H%M/

#文件的命名, 前缀

a1.sinks.k1.hdfs.filePrefix = events-

#10 分钟就改目录(创建目录), (这些参数影响/flume/events/%y-%m-%d/%H%M/)

a1.sinks.k1.hdfs.round = true

a1.sinks.k1.hdfs.roundValue = 10

a1.sinks.k1.hdfs.roundUnit = minute

#目录里面有文件

#------start----两个条件,只要符合其中一个就满足---

#文件滚动之前的等待时间(秒)

a1.sinks.k1.hdfs.rollInterval = 3

#文件滚动的大小限制(bytes)

a1.sinks.k1.hdfs.rollSize = 500

#写入多少个event数据后滚动文件(事件个数)

a1.sinks.k1.hdfs.rollCount = 20

#-------end-----

#5个事件就往里面写入

a1.sinks.k1.hdfs.batchSize = 5

#用本地时间格式化目录

a1.sinks.k1.hdfs.useLocalTimeStamp = true

#下沉后, 生成的文件类型,默认是Sequencefile,可用DataStream,则为普通文本

a1.sinks.k1.hdfs.fileType = DataStream

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

3、shell命令不断写数据到文件

[hadoop@nbdo3 testdata]$ while true; do echo "hello ningbo do" >> testflume.log ; sleep 0.5; done

4、在新窗口用tail 命令查看到 testflume.log文件内容不断增加

[hadoop@nbdo3 testdata]$ tail -f testflume.log

hello ningbo do

hello ningbo do

hello ningbo do

hello ningbo do

hello ningbo do

hello ningbo do

hello ningbo do

hello ningbo do

hello ningbo do

hello ningbo do

5、启动hadoop

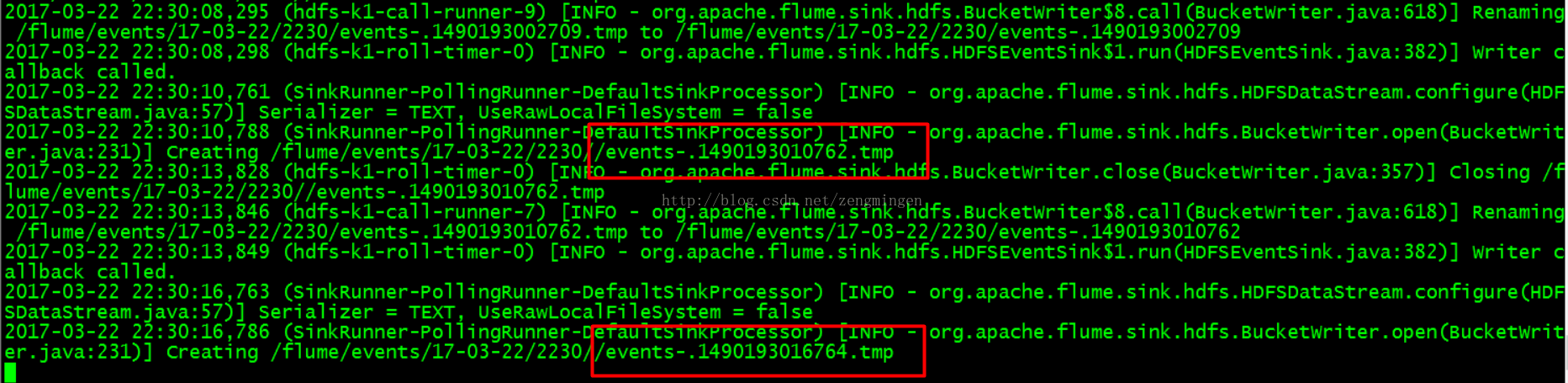

6、启动flume

flume-ng agent -c ../conf -flog-hdfs.conf -n a1 -Dflume.root.logger=INFO,console

7、浏览器进入hadoop管理界面。