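Today I'm writing up an example I built earlier with learning vector quantization (LVQ), one of the clustering algorithms in machine learning, again using the city GDP data from the previous posts.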

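The algorithm is as follows:

Input: sample set D={(x1,y1),(x2,y2),...,(xm,ym)}; number of prototype vectors q; preset class labels {t1,t2,...,tq} of the prototype vectors; learning rate e in (0,1).

Procedure:

1: initialize a set of prototype vectors {P1,P2,...,Pq};

2: repeat

3: randomly select a sample (xj,yj) from the sample set D;

4: compute the distance dji between the sample xj and each Pi (1<=i<=q);

5: find the prototype vector Pi* nearest to xj, i*=argmin_i dji;

6: if yj=ti* then

7: P'=Pi*+e(xj-Pi*)

8: else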

9: P'=Pi*-e(xj-Pi*)

10: end if

11: update the prototype vector Pi* to P'

12: until the stopping condition is met

Output: prototype vectors {P1,P2,...,Pq}
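
Steps 6 to 11 can be sketched as a single update function. This is only a minimal illustration, not the code used in this post: the helper name `lvq_update` and the toy numbers are assumptions made up for the example.

```python
def lvq_update(prototype, sample, same_label, e=0.1):
    """Return the updated prototype P' for one LVQ step.

    Hypothetical helper for illustration: the prototype moves towards the
    sample when the labels match and away from it otherwise.
    """
    sign = 1.0 if same_label else -1.0
    return [p + sign * e * (x - p) for p, x in zip(prototype, sample)]


P = [2.0, 4.0]  # nearest prototype Pi* (made-up values)
x = [4.0, 8.0]  # sample xj (made-up values)

attracted = lvq_update(P, x, same_label=True, e=0.5)
repelled = lvq_update(P, x, same_label=False, e=0.5)
print(attracted)  # [3.0, 6.0]
print(repelled)   # [1.0, 2.0]
```

When the labels match, the distance between the prototype and the sample shrinks by a factor of (1-e); when they differ, it grows by a factor of (1+e).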

The code is as follows:

# coding: utf-8
from __future__ import division

import random

import xlrd

# Read the data: rows 4 to 39 of the first sheet, one city per row.
def storage():
    table = xlrd.open_workbook('E:/citydata.xls')
    sheet = table.sheets()[0]
    dict1 = {}
    for i in range(4, 40):
        row_data = sheet.row_values(i)
        # The first cell is the city name; the remaining cells are its features.
        dict1[row_data[0]] = row_data[1:]
    return dict1

# Distance function: squared Euclidean distance between two vectors.
# The square root is skipped because only the ordering of distances matters.
def distance(elem, K_mean):
    return sum((elem[i] - K_mean[i]) ** 2 for i in range(len(elem)))

# Vector difference: vector1 - vector2, element by element.
def minus_vector(vector1, vector2):
    return [vector1[i] - vector2[i] for i in range(len(vector1))]

# City clustering: map the feature vectors of a cluster back to city names.
def city_cluster(dict1, k_cluster):
    clu_list = []
    for every in k_cluster:
        for one in dict1:
            if every == dict1[one]:
                clu_list.append(one)
    return clu_list

# Clustering result: assign every city to its nearest prototype.
def cluster(dict1, K1, K2, K3):
    K1_cluster = []
    K2_cluster = []
    K3_cluster = []
    for each in dict1:
        dist1 = distance(dict1[each], K1)
        dist2 = distance(dict1[each], K2)
        dist3 = distance(dict1[each], K3)
        Min = min(dist1, dist2, dist3)
        # elif/else so that a tie places the city in one cluster only.
        if Min == dist1:
            K1_cluster.append(dict1[each])
        elif Min == dist2:
            K2_cluster.append(dict1[each])
        else:
            K3_cluster.append(dict1[each])
    return K1_cluster, K2_cluster, K3_cluster

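# Check whether the nearest prototype and the sample have the same label.
def judge_label(min_prototype, sample_label, prototype):
    q = int(sample_label)
    p = None
    for elem in prototype:
        if elem[1] == min_prototype:
            p = elem[0]  # label of the nearest prototype
    list1 = [p, min_prototype]
    m = 1 if p == q else 0  # 1: labels match, 0: labels differ
    return p, m, list1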

# Algorithm: run 900 LVQ updates and return the learned prototypes.
# sample and prototype are lists of [label, vector] pairs.
def LVQ(sample, prototype):
    constant = 0.1  # learning rate
    for i in range(900):
        # Pick one labelled sample at random.
        specimen = random.sample(sample, 1)[0]
        sample_label = specimen[0]
        sample_vector = specimen[1]
        # Find the prototype nearest to the sample.
        nearest = min(prototype, key=lambda each: distance(sample_vector, each[1]))
        min_prototype = nearest[1]
        p, m, _ = judge_label(min_prototype, sample_label, prototype)
        minus = minus_vector(sample_vector, min_prototype)
        difference = [constant * x for x in minus]
        if m == 1:
            # Same label: move the prototype towards the sample.
            p1 = [min_prototype[j] + difference[j] for j in range(len(min_prototype))]
        else:
            # Different label: move the prototype away from the sample.
            p1 = [min_prototype[k] - difference[k] for k in range(len(min_prototype))]
        # Replace the nearest prototype with its updated vector.
        prototype[prototype.index(nearest)] = [p, p1]
    return prototype

if __name__ == '__main__':
    dict1 = storage()
    city = list(dict1.values())
    # Build labelled samples [label, vector]: three consecutive groups of
    # 12 cities get the labels 1, 2 and 3, so each city has exactly one label.
    sample = []
    for i in range(1, 4):
        for j in range(12 * (i - 1), 12 * i):
            sample.append([i, city[j]])
    # Use three randomly chosen samples as the initial prototypes.
    prototype = random.sample(sample, 3)
    prototype = LVQ(sample, prototype)
    K1_cluster, K2_cluster, K3_cluster = cluster(dict1, prototype[0][1], prototype[1][1], prototype[2][1])
    # Print the city names of each cluster on one line.
    for k_cluster in (K1_cluster, K2_cluster, K3_cluster):
        print(' '.join(city_cluster(dict1, k_cluster)))

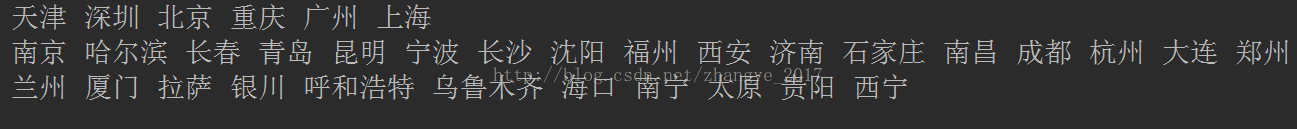

结果如下:

结果比kmeans好一些,因为应用了样本的监督信息来辅助聚类。
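
To make the role of the labels concrete, here is a minimal sketch of LVQ on a made-up two-class toy set. It is not the city data or the script above: `sq_dist`, `train_lvq`, `predict`, the sample points, and the fixed seed are all assumptions chosen for illustration.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def train_lvq(samples, prototypes, e=0.1, steps=200, seed=0):
    """Run `steps` LVQ updates; samples and prototypes are (label, vector) pairs."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    prototypes = [(label, list(vec)) for label, vec in prototypes]
    for _ in range(steps):
        y, x = rng.choice(samples)
        # Index of the prototype nearest to the sampled point.
        i = min(range(len(prototypes)), key=lambda k: sq_dist(x, prototypes[k][1]))
        t, p = prototypes[i]
        sign = 1.0 if t == y else -1.0  # attract on a label match, repel otherwise
        prototypes[i] = (t, [pj + sign * e * (xj - pj) for pj, xj in zip(p, x)])
    return prototypes


def predict(x, prototypes):
    """Label of the prototype nearest to x."""
    return min(prototypes, key=lambda tp: sq_dist(x, tp[1]))[0]


# Toy data (made up for illustration): two well-separated labelled groups.
samples = [(0, [0.0, 0.0]), (0, [0.0, 1.0]), (0, [1.0, 0.0]), (0, [1.0, 1.0]),
           (1, [10.0, 10.0]), (1, [10.0, 11.0]), (1, [11.0, 10.0]), (1, [11.0, 11.0])]
# One initial prototype per class, taken from the data.
init = [samples[0], samples[4]]

learned = train_lvq(samples, init)
assigned = [predict(x, learned) for _, x in samples]
print(assigned)  # [0, 0, 0, 0, 1, 1, 1, 1]
```

Because each prototype carries a class label, the final assignment comes with a label for every cluster, which plain k-means does not provide. On data this cleanly separated the repel branch never fires; it only matters when samples of different classes lie close to the same prototype.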