backbone

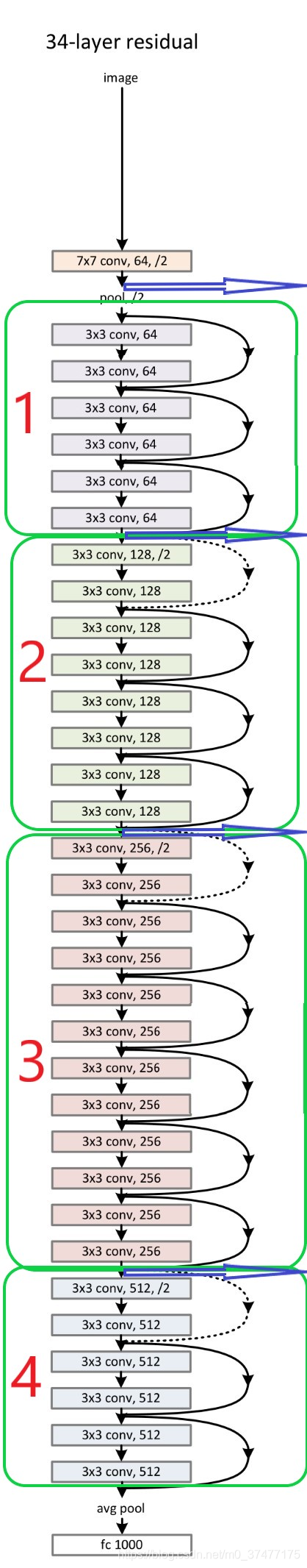

ResNet34 network architecture:

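The network is built in 4 stages, and the blue arrows mark the layers that are merged into the U-Net decoder through skip connections. Note: counting the stride-2 stem convolution, the max pooling layer, and the stride-2 first block of stages 2 to 4, the encoder downsamples 5 times in total (a factor of 32), so the decoder needs 5 upsampling steps, 4 of which receive a skip connection.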
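
A minimal sketch that traces how the feature-map size shrinks through the encoder, using the standard convolution output-size formula. The function names (`conv_out`, `encoder_sizes`) and the 224-pixel input are illustrative assumptions; the kernel sizes, paddings, and strides are read off the Keras code later in this post.

```python
def conv_out(size, kernel, stride, pad):
    """Output length of a 'valid' conv/pool applied after explicit zero padding."""
    return (size + 2 * pad - kernel) // stride + 1


def encoder_sizes(size=224):
    """Return the feature-map side length after each downsampling step."""
    sizes = []
    size = conv_out(size, kernel=7, stride=2, pad=3)  # conv0: 7x7 conv, stride 2
    sizes.append(size)
    size = conv_out(size, kernel=3, stride=2, pad=1)  # pooling0: 3x3 max pool, stride 2
    sizes.append(size)
    for _ in range(3):  # first block of stages 2, 3 and 4: 3x3 conv, stride 2
        size = conv_out(size, kernel=3, stride=2, pad=1)
        sizes.append(size)
    return sizes


print(encoder_sizes(224))  # [112, 56, 28, 14, 7]
```

Each entry is one halving of the resolution, so a 224×224 input reaches the end of the encoder as a 7×7 feature map.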

Building ResNet34 in code (Keras)

Building the convolution blocks

There are two forms:

A: a plain identity shortcut

B: the dashed shortcut, which adjusts the dimensions of the feature map (with a 1×1 convolution)
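
The choice between the two forms comes down to whether the block's input and output shapes match. A small shape-only sketch, with hypothetical helper names and `(height, width, channels)` tuples standing in for tensors:

```python
def residual_output_shape(in_shape, filters, stride=1):
    """Shape produced by the main path: two 3x3 convs, the first with `stride`."""
    h, w, _ = in_shape
    # 3x3 conv after 1 pixel of zero padding: (n + 2 - 3) // stride + 1
    return ((h - 1) // stride + 1, (w - 1) // stride + 1, filters)


def shortcut_form(in_shape, filters, stride=1):
    """Return 'A' if the input can be added to the output directly, else 'B'."""
    out_shape = residual_output_shape(in_shape, filters, stride)
    return 'A' if out_shape == tuple(in_shape) else 'B'


print(shortcut_form((56, 56, 64), filters=64))             # A
print(shortcut_form((56, 56, 64), filters=128, stride=2))  # B
```

In form B the shortcut has to be projected to the main path's shape before the addition, which is the job of the strided (1, 1) convolution on the shortcut in the code that follows.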

    from keras.layers import Activation, Add, BatchNormalization, Conv2D, ZeroPadding2D


    def basic_identity_block(filters, stage, block):
        """Basic residual block with an identity shortcut (no conv layer on the shortcut).

        # Arguments
            filters: integer, number of filters in each of the two 3x3 conv layers
            stage: integer, current stage label, used for generating layer names
            block: integer, current block label, used for generating layer names

        # Returns
            A function that maps an input tensor to the block's output tensor.
        """

        def layer(input_tensor):
            conv_params = get_conv_params()
            bn_params = get_bn_params()
            conv_name, bn_name, relu_name, sc_name = handle_block_names(stage, block)

            # pre-activation: BN -> ReLU -> 3x3 conv, twice
            x = BatchNormalization(name=bn_name + '1', **bn_params)(input_tensor)
            x = Activation('relu', name=relu_name + '1')(x)
            x = ZeroPadding2D(padding=(1, 1))(x)
            x = Conv2D(filters, (3, 3), name=conv_name + '1', **conv_params)(x)

            x = BatchNormalization(name=bn_name + '2', **bn_params)(x)
            x = Activation('relu', name=relu_name + '2')(x)
            x = ZeroPadding2D(padding=(1, 1))(x)
            x = Conv2D(filters, (3, 3), name=conv_name + '2', **conv_params)(x)

            # identity shortcut: the input is added unchanged
            x = Add()([x, input_tensor])
            return x

        return layer

    def basic_conv_block(filters, stage, block, strides=(2, 2)):
        """Basic residual block with a projection shortcut (1x1 conv layer on the shortcut).

        # Arguments
            filters: integer, number of filters in each conv layer
            stage: integer, current stage label, used for generating layer names
            block: integer, current block label, used for generating layer names
            strides: strides of the first 3x3 conv layer and of the shortcut conv

        # Returns
            A function that maps an input tensor to the block's output tensor.
        """

        def layer(input_tensor):
            conv_params = get_conv_params()
            bn_params = get_bn_params()
            conv_name, bn_name, relu_name, sc_name = handle_block_names(stage, block)

            x = BatchNormalization(name=bn_name + '1', **bn_params)(input_tensor)
            x = Activation('relu', name=relu_name + '1')(x)
            # the shortcut branches off after the first BN + ReLU
            shortcut = x

            x = ZeroPadding2D(padding=(1, 1))(x)
            x = Conv2D(filters, (3, 3), strides=strides, name=conv_name + '1', **conv_params)(x)

            x = BatchNormalization(name=bn_name + '2', **bn_params)(x)
            x = Activation('relu', name=relu_name + '2')(x)
            x = ZeroPadding2D(padding=(1, 1))(x)
            x = Conv2D(filters, (3, 3), name=conv_name + '2', **conv_params)(x)

            # 1x1 conv brings the shortcut to the main path's size and channel count
            shortcut = Conv2D(filters, (1, 1), name=sc_name, strides=strides, **conv_params)(shortcut)
            x = Add()([x, shortcut])
            return x

        return layer
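
Both blocks call `get_conv_params`, `get_bn_params`, and `handle_block_names`, which this post does not show. The sketch below is one plausible way to write them, assuming they simply return keyword-argument dictionaries and layer-name prefixes. The default values and the naming scheme are assumptions, not the author's confirmed implementation; the one value the block code does imply is `padding='valid'`, since padding is applied explicitly with `ZeroPadding2D`.

```python
def get_conv_params(**overrides):
    """Default keyword arguments for Conv2D (assumed values)."""
    params = {
        'kernel_initializer': 'glorot_uniform',
        'use_bias': False,   # assumption: BatchNormalization supplies the shift
        'padding': 'valid',  # explicit ZeroPadding2D layers handle the padding
    }
    params.update(overrides)
    return params


def get_bn_params(**overrides):
    """Default keyword arguments for BatchNormalization (assumed values)."""
    params = {
        'axis': 3,  # channels_last
        'momentum': 0.99,
        'epsilon': 2e-5,
        'center': True,
        'scale': True,
    }
    params.update(overrides)
    return params


def handle_block_names(stage, block):
    """Build layer-name prefixes such as 'stage1_unit1_conv' (assumed scheme)."""
    base = 'stage{}_unit{}_'.format(stage + 1, block + 1)
    return base + 'conv', base + 'bn', base + 'relu', base + 'sc'


print(handle_block_names(0, 0))
print(get_bn_params(scale=False)['scale'])  # False
```

Returning plain dictionaries keeps every layer call short (`**conv_params`) while still allowing a single override, as in `get_bn_params(scale=False)`.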

Building the ResNet34 network

The network structure is as shown in the figure above.

    from keras import backend as K
    from keras.layers import (Activation, BatchNormalization, Conv2D, Dense,
                              GlobalAveragePooling2D, Input, MaxPooling2D,
                              ZeroPadding2D)
    from keras.models import Model
    # _obtain_input_shape and get_source_inputs are Keras utilities; their import
    # path depends on the Keras version in use.


    def build_resnet(
            repetitions=(2, 2, 2, 2),
            include_top=True,
            input_tensor=None,
            input_shape=None,
            classes=1000,
            block_type='usual'):

        # Determine proper input shape
        input_shape = _obtain_input_shape(input_shape,
                                          default_size=224,
                                          min_size=197,
                                          data_format='channels_last',
                                          require_flatten=include_top)

        if input_tensor is None:
            img_input = Input(shape=input_shape, name='data')
        else:
            if not K.is_keras_tensor(input_tensor):
                img_input = Input(tensor=input_tensor, shape=input_shape)
            else:
                img_input = input_tensor

        # get parameters for model layers
        no_scale_bn_params = get_bn_params(scale=False)
        bn_params = get_bn_params()
        conv_params = get_conv_params()
        init_filters = 64

        if block_type == 'basic':
            conv_block = basic_conv_block
            identity_block = basic_identity_block
        else:
            # bottleneck variants, not shown in this post
            conv_block = usual_conv_block
            identity_block = usual_identity_block

        # resnet bottom
        x = BatchNormalization(name='bn_data', **no_scale_bn_params)(img_input)
        x = ZeroPadding2D(padding=(3, 3))(x)
        x = Conv2D(init_filters, (7, 7), strides=(2, 2), name='conv0', **conv_params)(x)
        x = BatchNormalization(name='bn0', **bn_params)(x)
        x = Activation('relu', name='relu0')(x)
        x = ZeroPadding2D(padding=(1, 1))(x)
        x = MaxPooling2D((3, 3), strides=(2, 2), padding='valid', name='pooling0')(x)

        # resnet body, repetitions = (3, 4, 6, 3) for ResNet34
        for stage, rep in enumerate(repetitions):
            for block in range(rep):
                filters = init_filters * (2 ** stage)

                # first block of first stage without strides because we have maxpooling before
                if block == 0 and stage == 0:
                    # x = conv_block(filters, stage, block, strides=(1, 1))(x)
                    x = identity_block(filters, stage, block)(x)
                elif block == 0:
                    x = conv_block(filters, stage, block, strides=(2, 2))(x)
                else:
                    x = identity_block(filters, stage, block)(x)

        x = BatchNormalization(name='bn1', **bn_params)(x)
        x = Activation('relu', name='relu1')(x)

        # resnet top
        if include_top:
            x = GlobalAveragePooling2D(name='pool1')(x)
            x = Dense(classes, name='fc1')(x)
            x = Activation('softmax', name='softmax')(x)

        # Ensure that the model takes into account any potential predecessors of `input_tensor`.
        if input_tensor is not None:
            inputs = get_source_inputs(input_tensor)
        else:
            inputs = img_input

        # Create model.
        model = Model(inputs, x)
        return model
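
The nested loop in `build_resnet` is easier to follow as a table of blocks. The sketch below reproduces only the loop's bookkeeping (no Keras layers) for `repetitions=(3, 4, 6, 3)`; `block_schedule` is a hypothetical helper name introduced for illustration.

```python
def block_schedule(repetitions=(3, 4, 6, 3), init_filters=64):
    """List (stage, block, kind, filters, stride) in the order blocks are added."""
    schedule = []
    for stage, rep in enumerate(repetitions):
        for block in range(rep):
            filters = init_filters * (2 ** stage)
            if block == 0 and stage != 0:
                # first block of stages 2 to 4 downsamples and projects the shortcut
                schedule.append((stage, block, 'conv', filters, 2))
            else:
                schedule.append((stage, block, 'identity', filters, 1))
    return schedule


schedule = block_schedule()
print(len(schedule))                                # 16
print(sorted({row[3] for row in schedule}))         # [64, 128, 256, 512]
print(sum(1 for row in schedule if row[2] == 'conv'))  # 3
# 16 blocks x 2 convs + the 7x7 stem conv + the final dense layer
# (the 1x1 shortcut convs are not counted)
print(2 * len(schedule) + 2)                        # 34
```

The last line is where the "34" in the name comes from under the usual counting convention.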

    def ResNet34(input_shape, input_tensor=None, weights=None, classes=1000, include_top=True):
        model = build_resnet(input_tensor=input_tensor,
                             input_shape=input_shape,
                             repetitions=(3, 4, 6, 3),
                             classes=classes,
                             include_top=include_top,
                             block_type='basic')
        model.name = 'resnet34'

        if weights:
            load_model_weights(weights_collection, model, weights, classes, include_top)
        return model

Decoder
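
`build_unet` below relies on two small helpers, `get_layer_number` and `to_tuple`, that are not listed in this post. Here is a minimal sketch of what they might look like, assuming `get_layer_number` looks a layer up by name and `to_tuple` expands a scalar rate into a pair. The `SimpleNamespace` objects are stand-ins for a Keras model, used only so the example runs without Keras.

```python
from types import SimpleNamespace


def get_layer_number(model, layer_name):
    """Return the index of the layer called `layer_name` in `model.layers`."""
    for index, layer in enumerate(model.layers):
        if layer.name == layer_name:
            return index
    raise ValueError('No layer named {!r} in model'.format(layer_name))


def to_tuple(rate):
    """Expand a scalar upsampling rate to (rate, rate); pass 2-tuples through."""
    if isinstance(rate, tuple):
        if len(rate) == 2:
            return rate
        raise ValueError('Expected a 2-tuple, got {!r}'.format(rate))
    return (rate, rate)


# Stand-in for a backbone: only the `layers` list and each layer's `name` are used.
fake_backbone = SimpleNamespace(
    layers=[SimpleNamespace(name=n) for n in ('data', 'conv0', 'relu0', 'pooling0')]
)
print(get_layer_number(fake_backbone, 'relu0'))  # 2
print(to_tuple(2))                               # (2, 2)
```

Resolving names to indices up front lets `skip_connection_layers` mix layer names and integer indices, which is what the `isinstance(l, str)` check in `build_unet` allows.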

    from keras.layers import Activation, Conv2D
    from keras.models import Model


    def build_unet(backbone, classes, skip_connection_layers,
                   decoder_filters=(256, 128, 64, 32, 16),
                   upsample_rates=(2, 2, 2, 2, 2),
                   n_upsample_blocks=5,
                   block_type='upsampling',
                   activation='sigmoid',
                   use_batchnorm=True):

        inputs = backbone.input
        x = backbone.output

        # Transpose2D_block and Upsample2D_block are decoder blocks not shown in this post
        if block_type == 'transpose':
            up_block = Transpose2D_block
        else:
            up_block = Upsample2D_block

        # convert layer names to indices
        skip_connection_idx = ([get_layer_number(backbone, layer) if isinstance(layer, str) else layer
                                for layer in skip_connection_layers])
        # for this backbone, print(skip_connection_idx) gives [128, 73, 36, 5]

        for i in range(n_upsample_blocks):

            # check if there is a skip connection
            skip_connection = None
            if i < len(skip_connection_idx):
                skip_connection = backbone.layers[skip_connection_idx[i]].output
                # print(backbone.layers[skip_connection_idx[i]]) shows an Activation layer:
                # <keras.layers.core.Activation object at 0x00000164CC562A20>

            upsample_rate = to_tuple(upsample_rates[i])

            x = up_block(decoder_filters[i], i, upsample_rate=upsample_rate,
                         skip=skip_connection, use_batchnorm=use_batchnorm)(x)

        x = Conv2D(classes, (3, 3), padding='same', name='final_conv')(x)
        x = Activation(activation, name=activation)(x)

        model = Model(inputs, x)
        return model
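
To see how the decoder loop pairs upsampling blocks with skip connections, the following sketch replays the loop on plain integers instead of tensors. It assumes a 224×224 input (so the backbone output is 7×7), skip layers with resolutions 14, 28, 56, and 112, and decoder blocks that upsample first and then concatenate the skip tensor. `decoder_trace` is a hypothetical name, and none of this is taken from the actual `Upsample2D_block` code, which the post does not show.

```python
def decoder_trace(out_size=7, skip_sizes=(14, 28, 56, 112),
                  decoder_filters=(256, 128, 64, 32, 16),
                  upsample_rates=(2, 2, 2, 2, 2), n_upsample_blocks=5):
    """Return (block index, output size, filters, skip size or None) per block."""
    trace = []
    size = out_size
    for i in range(n_upsample_blocks):
        # only the first len(skip_sizes) blocks receive a skip connection
        skip = skip_sizes[i] if i < len(skip_sizes) else None
        size *= upsample_rates[i]
        if skip is not None:
            # the upsampled tensor and the skip tensor must share a resolution
            assert size == skip, 'skip resolution mismatch'
        trace.append((i, size, decoder_filters[i], skip))
    return trace


for row in decoder_trace():
    print(row)
# (0, 14, 256, 14)
# (1, 28, 128, 28)
# (2, 56, 64, 56)
# (3, 112, 32, 112)
# (4, 224, 16, None)
```

The fifth block has no skip connection: it only restores the resolution lost in the stem convolution, bringing the output back to the input size before `final_conv`.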

References: