Copyright notice: This is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/Chang_Shuang/article/details/82082023

import keras

from keras.layers import Conv2D, MaxPool2D, Activation, Flatten, Dense

from keras.datasets import mnist

from keras.models import Sequential

from keras.utils import to_categorical

from keras.optimizers import SGD, RMSprop, Adam

import numpy as np

import matplotlib.pyplot as plt

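class LeNet:
    """LeNet-style CNN: two conv/ReLU/max-pool stages followed by two dense layers."""

    @staticmethod
    def build(input_shape, classes):
        model = Sequential()

        # Stage 1: 20 filters of size 5x5, ReLU, then 2x2 max pooling
        model.add(Conv2D(20, (5, 5), padding='same', input_shape=input_shape))
        model.add(Activation('relu'))
        model.add(MaxPool2D(pool_size=(2, 2), strides=(2, 2)))

        # Stage 2: 50 filters of size 5x5, ReLU, then 2x2 max pooling
        model.add(Conv2D(50, (5, 5), padding='same'))
        model.add(Activation('relu'))
        model.add(MaxPool2D(pool_size=(2, 2), strides=(2, 2)))

        # Classifier: flatten, one hidden dense layer, softmax over the classes
        model.add(Flatten())
        model.add(Dense(100))
        model.add(Activation('relu'))
        model.add(Dense(classes))
        model.add(Activation('softmax'))

        return model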
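To make the architecture easier to check by hand, here is a minimal sketch that traces the output shape and parameter count of each layer in `LeNet.build` using plain arithmetic. The helper names (`conv_same`, `pool`, `dense_params`, `trace_lenet`) are hypothetical and not part of Keras; the sketch assumes stride-1 convolutions with `padding='same'` and 2x2 pooling, as in the code above.

```python
def conv_same(shape, filters, kernel):
    """Stride-1 convolution with 'same' padding: height and width are unchanged."""
    h, w, c = shape
    params = kernel * kernel * c * filters + filters  # weights + one bias per filter
    return (h, w, filters), params


def pool(shape, size=2):
    """Non-overlapping max pooling: height and width are divided by `size`."""
    h, w, c = shape
    return (h // size, w // size, c), 0  # pooling has no trainable parameters


def dense_params(units_in, units_out):
    """Fully connected layer: one weight per input/output pair plus biases."""
    return units_in * units_out + units_out


def trace_lenet(input_shape=(28, 28, 1), classes=10):
    """Return (layer name, output shape, parameter count) for each layer."""
    rows = []
    shape, p = conv_same(input_shape, filters=20, kernel=5)
    rows.append(("conv1", shape, p))
    shape, p = pool(shape)
    rows.append(("pool1", shape, p))
    shape, p = conv_same(shape, filters=50, kernel=5)
    rows.append(("conv2", shape, p))
    shape, p = pool(shape)
    rows.append(("pool2", shape, p))
    flat = shape[0] * shape[1] * shape[2]
    rows.append(("flatten", (flat,), 0))
    rows.append(("dense1", (100,), dense_params(flat, 100)))
    rows.append(("dense2", (classes,), dense_params(100, classes)))
    return rows


rows = trace_lenet()
total_params = sum(params for _, _, params in rows)
for name, shape, params in rows:
    print(f"{name:8s} {str(shape):14s} {params:>8d}")
print("total parameters:", total_params)
```

These figures can be compared against what `model.summary()` reports for the built model; most of the parameters sit in the first dense layer, which receives the flattened 7x7x50 feature map.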

NB_EPOCH = 1

BATCH_SIZE = 128

VERBOSSE = 1

OPTIMIZER = Adam()

VALIDATION_SPLIT = 0.2

IMG_ROWS, IMG_COLS = 28, 28

NB_CLASSES = 10

INPUT_SHAPE = (IMG_ROWS, IMG_COLS, 1)

(x_train, y_train), (x_test, y_test) = mnist.load_data()

x_train = x_train.astype('float32')

x_test = x_test.astype('float32')

x_train /= 255

x_test /= 255

x_train = np.expand_dims(x_train, 3)

x_test = np.expand_dims(x_test, 3)

y_train = to_categorical(y_train, NB_CLASSES)

y_test = to_categorical(y_test, NB_CLASSES)
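As an illustration of the preprocessing above, the following dependency-free sketch shows the two transformations on tiny hand-made inputs: scaling 8-bit pixel values into the range [0, 1] and one-hot encoding integer labels. The functions `scale_pixels` and `one_hot` are hypothetical stand-ins written for this example; they are not how `to_categorical` is implemented, only a picture of the result it produces.

```python
def scale_pixels(pixels):
    """Map 8-bit pixel intensities (0..255) to floats in [0, 1]."""
    return [p / 255 for p in pixels]


def one_hot(labels, num_classes):
    """Return one row per label with 1.0 at the label's index and 0.0 elsewhere."""
    rows = []
    for label in labels:
        row = [0.0] * num_classes
        row[label] = 1.0
        rows.append(row)
    return rows


scaled = scale_pixels([0, 51, 255])
encoded = one_hot([3, 0, 9], num_classes=10)

print(scaled)
for row in encoded:
    print(row)
```

The one-hot rows are the format that `categorical_crossentropy` expects as targets, which is why the labels are converted before training.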

model = LeNet.build(input_shape=INPUT_SHAPE, classes=NB_CLASSES)

model.compile(loss='categorical_crossentropy', optimizer=OPTIMIZER, metrics=['accuracy'])

history = model.fit(x_train, y_train, batch_size=BATCH_SIZE, epochs=NB_EPOCH, verbose=VERBOSSE, validation_split=VALIDATION_SPLIT)
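To see what `validation_split` and `batch_size` mean in numbers, here is a small arithmetic sketch. Keras takes the validation samples from the end of the training arrays, before any shuffling; the function `split_sizes` below is a hypothetical helper that only reproduces the resulting counts, not Keras's internal code.

```python
import math


def split_sizes(n_samples, validation_split, batch_size):
    """Return (training samples, validation samples, batches per epoch)."""
    n_val = int(n_samples * validation_split)  # held out from the end of the data
    n_train = n_samples - n_val
    steps_per_epoch = math.ceil(n_train / batch_size)  # the last batch may be smaller
    return n_train, n_val, steps_per_epoch


# The MNIST training set has 60000 images; the settings match the constants above.
n_train, n_val, steps = split_sizes(60000, validation_split=0.2, batch_size=128)
print(n_train, n_val, steps)
```

With these settings the model trains on 48000 images, validates on 12000, and the test set loaded by `mnist.load_data()` is used only by `model.evaluate`.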

score = model.evaluate(x_test, y_test, verbose=VERBOSSE)

print('Test score:', score[0])

print('Test accuracy:', score[1])

print(history.history.keys())

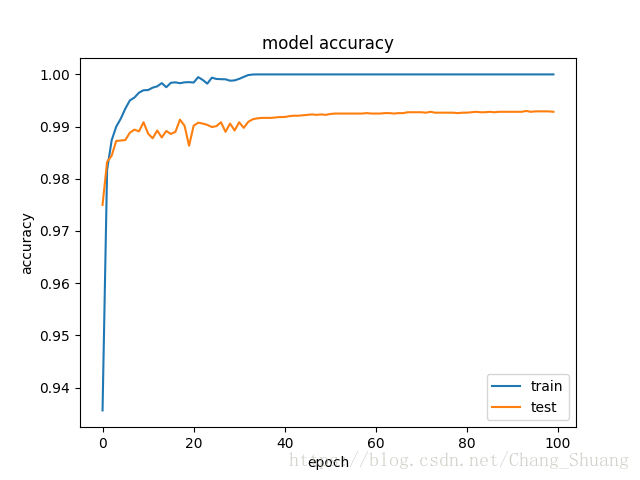

plt.plot(history.history['acc'])

plt.plot(history.history['val_acc'])

plt.title('model accuracy')

plt.ylabel('accuracy')

plt.xlabel('epoch')

plt.legend(['train', 'validation'])

plt.show()

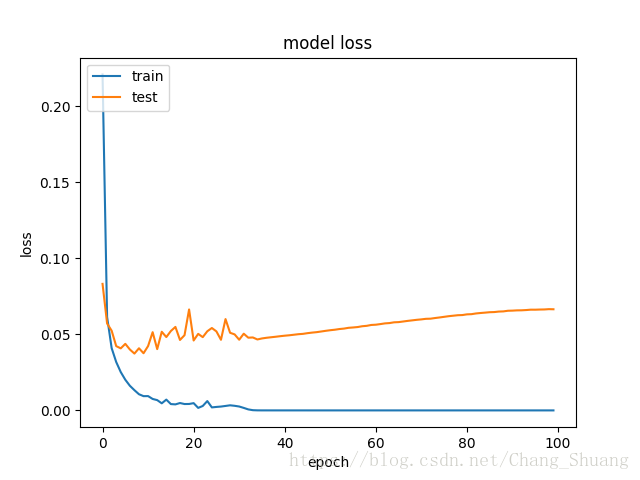
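The `'acc'` and `'val_acc'` keys used for the accuracy plot are what older Keras releases record; newer releases store the same curves under `'accuracy'` and `'val_accuracy'`. The sketch below shows one way to look up whichever name is present. The helper `first_present` is hypothetical, and the dictionaries hold placeholder numbers rather than real training results.

```python
def first_present(history_dict, *candidates):
    """Return (key, values) for the first candidate key found in history_dict."""
    for key in candidates:
        if key in history_dict:
            return key, history_dict[key]
    raise KeyError(f"none of {candidates} found in {sorted(history_dict)}")


# Placeholder histories (made-up values) in the old and new naming styles.
old_style = {"loss": [0.5], "acc": [0.5], "val_loss": [0.5], "val_acc": [0.5]}
new_style = {"loss": [0.5], "accuracy": [0.5], "val_loss": [0.5], "val_accuracy": [0.5]}

for hist in (old_style, new_style):
    train_key, _ = first_present(hist, "accuracy", "acc")
    val_key, _ = first_present(hist, "val_accuracy", "val_acc")
    print(train_key, val_key)
```

In the script, the returned value lists could be passed to `plt.plot` in place of `history.history['acc']` and `history.history['val_acc']`, so the plotting code does not depend on the installed Keras version.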

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.title('model loss')

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train', 'validation'], loc='upper left')

plt.show()