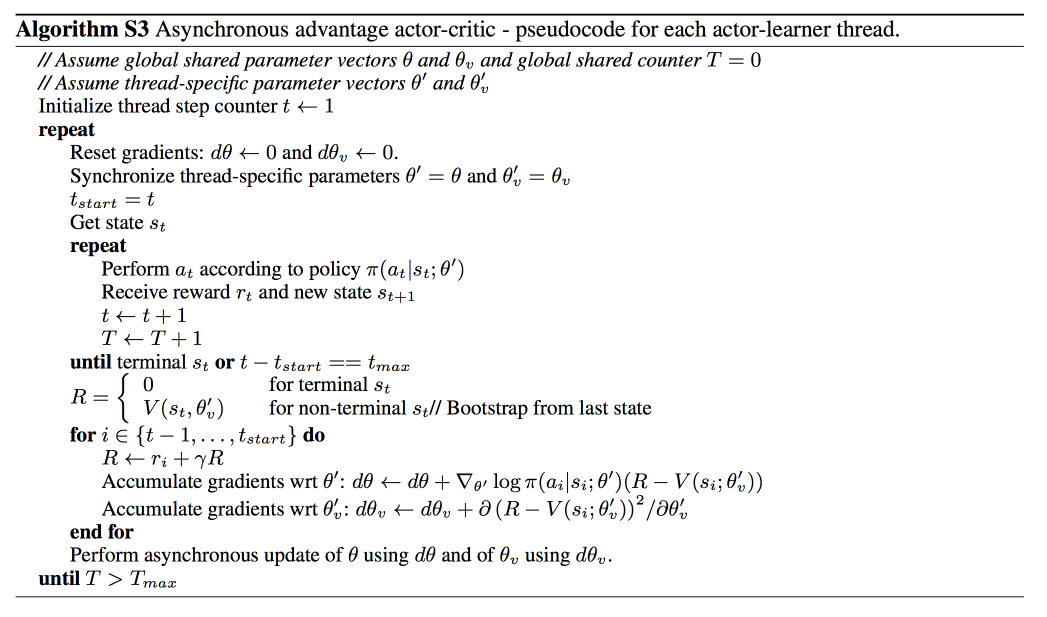

它会创建多个并行的环境, 让多个拥有副结构的 agent 同时在这些并行环境上更新主结构中的参数. 并行中的 agent 们互不干扰, 而主结构的参数更新受到副结构提交更新的不连续性干扰, 所以更新的相关性被降低, 收敛性提高

1 import threading 2 import numpy as np 3 import tensorflow as tf 4 import pylab 5 import time 6 import gym 7 from keras.layers import Dense, Input 8 from keras.models import Model 9 from keras.optimizers import Adam 10 from keras import backend as K 11 12 13 # global variables for threading 14 episode = 0 15 scores = [] 16 17 EPISODES = 2000 18 19 # This is A3C(Asynchronous Advantage Actor Critic) agent(global) for the Cartpole 20 # In this example, we use A3C algorithm 21 class A3CAgent: 22 def __init__(self, state_size, action_size, env_name): 23 # get size of state and action 24 self.state_size = state_size 25 self.action_size = action_size 26 27 # get gym environment name 28 self.env_name = env_name 29 30 # these are hyper parameters for the A3C 31 self.actor_lr = 0.001 32 self.critic_lr = 0.001 33 self.discount_factor = .99 34 self.hidden1, self.hidden2 = 24, 24 35 self.threads = 8 #8个线程并行 36 37 # create model for actor and critic network 38 self.actor, self.critic = self.build_model() 39 40 # method for training actor and critic network 41 self.optimizer = [self.actor_optimizer(), self.critic_optimizer()] 42 43 self.sess = tf.InteractiveSession() 44 K.set_session(self.sess) 45 self.sess.run(tf.global_variables_initializer()) 46 47 # approximate policy and value using Neural Network 48 # actor -> state is input and probability of each action is output of network 49 # critic -> state is input and value of state is output of network 50 # actor and critic network share first hidden layer 51 def build_model(self): 52 state = Input(batch_shape=(None, self.state_size)) 53 shared = Dense(self.hidden1, input_dim=self.state_size, activation='relu', kernel_initializer='glorot_uniform')(state) 54 55 actor_hidden = Dense(self.hidden2, activation='relu', kernel_initializer='glorot_uniform')(shared) 56 action_prob = Dense(self.action_size, activation='softmax', kernel_initializer='glorot_uniform')(actor_hidden) 57 58 value_hidden = Dense(self.hidden2, activation='relu', kernel_initializer='he_uniform')(shared) 59 state_value = Dense(1, activation='linear', kernel_initializer='he_uniform')(value_hidden) 60 61 actor = Model(inputs=state, outputs=action_prob) 62 critic = Model(inputs=state, outputs=state_value) 63 64 actor._make_predict_function() 65 critic._make_predict_function() 66 67 actor.summary() 68 critic.summary() 69 70 return actor, critic 71 72 # make loss function for Policy Gradient 73 # [log(action probability) * advantages] will be input for the back prop 74 # we add entropy of action probability to loss 75 def actor_optimizer(self): 76 action = K.placeholder(shape=(None, self.action_size)) 77 advantages = K.placeholder(shape=(None, )) 78 79 policy = self.actor.output 80 81 good_prob = K.sum(action * policy, axis=1) 82 eligibility = K.log(good_prob + 1e-10) * K.stop_gradient(advantages) 83 loss = -K.sum(eligibility) 84 85 entropy = K.sum(policy * K.log(policy + 1e-10), axis=1) 86 87 actor_loss = loss + 0.01*entropy 88 89 optimizer = Adam(lr=self.actor_lr) 90 updates = optimizer.get_updates(self.actor.trainable_weights, [], actor_loss) 91 train = K.function([self.actor.input, action, advantages], [], updates=updates) 92 return train 93 94 # make loss function for Value approximation 95 def critic_optimizer(self): 96 discounted_reward = K.placeholder(shape=(None, )) 97 98 value = self.critic.output 99 100 loss = K.mean(K.square(discounted_reward - value)) 101 102 optimizer = Adam(lr=self.critic_lr) 103 updates = optimizer.get_updates(self.critic.trainable_weights, [], loss) 104 train = K.function([self.critic.input, discounted_reward], [], updates=updates) 105 return train 106 107 # make agents(local) and start training 108 def train(self): 109 # self.load_model('./save_model/cartpole_a3c.h5') 110 agents = [Agent(i, self.actor, self.critic, self.optimizer, self.env_name, self.discount_factor, 111 self.action_size, self.state_size) for i in range(self.threads)]#建立8个local agent 112 113 for agent in agents: 114 agent.start() 115 116 while True: 117 time.sleep(20) 118 119 plot = scores[:] 120 pylab.plot(range(len(plot)), plot, 'b') 121 pylab.savefig("./save_graph/cartpole_a3c.png") 122 123 self.save_model('./save_model/cartpole_a3c.h5') 124 125 def save_model(self, name): 126 self.actor.save_weights(name + "_actor.h5") 127 self.critic.save_weights(name + "_critic.h5") 128 129 def load_model(self, name): 130 self.actor.load_weights(name + "_actor.h5") 131 self.critic.load_weights(name + "_critic.h5") 132 133 # This is Agent(local) class for threading 134 class Agent(threading.Thread): 135 def __init__(self, index, actor, critic, optimizer, env_name, discount_factor, action_size, state_size): 136 threading.Thread.__init__(self) 137 138 self.states = [] 139 self.rewards = [] 140 self.actions = [] 141 142 self.index = index 143 self.actor = actor 144 self.critic = critic 145 self.optimizer = optimizer 146 self.env_name = env_name 147 self.discount_factor = discount_factor 148 self.action_size = action_size 149 self.state_size = state_size 150 151 # Thread interactive with environment 152 def run(self): 153 global episode 154 env = gym.make(self.env_name) 155 while episode < EPISODES: 156 state = env.reset() 157 score = 0 158 while True: 159 action = self.get_action(state) 160 next_state, reward, done, _ = env.step(action) 161 score += reward 162 163 self.memory(state, action, reward) 164 165 state = next_state 166 167 if done: 168 episode += 1 169 print("episode: ", episode, "/ score : ", score) 170 scores.append(score) 171 self.train_episode(score != 500) 172 break 173 174 # In Policy Gradient, Q function is not available. 175 # Instead agent uses sample returns for evaluating policy 176 def discount_rewards(self, rewards, done=True): 177 discounted_rewards = np.zeros_like(rewards) 178 running_add = 0 179 if not done: 180 running_add = self.critic.predict(np.reshape(self.states[-1], (1, self.state_size)))[0] 181 for t in reversed(range(0, len(rewards))): 182 running_add = running_add * self.discount_factor + rewards[t] 183 discounted_rewards[t] = running_add 184 return discounted_rewards 185 186 # save <s, a ,r> of each step 187 # this is used for calculating discounted rewards 188 def memory(self, state, action, reward): 189 self.states.append(state) 190 act = np.zeros(self.action_size) 191 act[action] = 1 192 self.actions.append(act) 193 self.rewards.append(reward) 194 195 # update policy network and value network every episode 196 def train_episode(self, done): 197 discounted_rewards = self.discount_rewards(self.rewards, done) 198 199 values = self.critic.predict(np.array(self.states)) 200 values = np.reshape(values, len(values)) 201 202 advantages = discounted_rewards - values 203 204 self.optimizer[0]([self.states, self.actions, advantages]) 205 self.optimizer[1]([self.states, discounted_rewards]) 206 self.states, self.actions, self.rewards = [], [], [] 207 208 def get_action(self, state): 209 policy = self.actor.predict(np.reshape(state, [1, self.state_size]))[0] 210 return np.random.choice(self.action_size, 1, p=policy)[0] 211 212 213 if __name__ == "__main__": 214 env_name = 'CartPole-v1' 215 env = gym.make(env_name) 216 217 state_size = env.observation_space.shape[0] 218 action_size = env.action_space.n 219 220 env.close() 221 222 global_agent = A3CAgent(state_size, action_size, env_name) 223 global_agent.train()