1 Import the data and inspect the features

from sklearn import svm

from matplotlib import pyplot as plt

from sklearn.model_selection import train_test_split

import pandas as pd

train=pd.read_csv('Digit/train.csv')

test=pd.read_csv('Digit/test.csv')

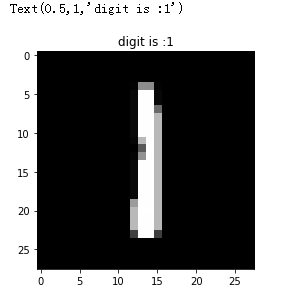

i=2

img=train.iloc[i,1:].values  # .as_matrix() was removed in pandas 1.0; .values works in old and new versions

img=img.reshape(28,28)

plt.imshow(img,cmap='gray')

plt.title('digit is :'+str(train.iloc[i,0]))

plt.hist(train.iloc[i,1:])

Each feature is a grayscale pixel intensity in the range 0-255.
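The `reshape(28,28)` call above works because each row of `train.csv` stores its image row by row: in the Kaggle Digit Recognizer layout, column `pixelx` holds the pixel at row `i`, column `j`, with `x = i*28 + j`. A minimal sketch of that mapping, using a made-up array (`flat`, an assumption introduced here) rather than real pixel data:

```python
import numpy as np

# Hypothetical stand-in for one image row: value k sits in column "pixelk".
flat = np.arange(28 * 28)

# NumPy reshapes in row-major (C) order by default, matching x = i*28 + j.
img = flat.reshape(28, 28)

i, j = 3, 5
pixel_index = img[i, j]          # which pixel column landed at (row 3, col 5)
print(pixel_index == i * 28 + j)

# Flattening again recovers the original column order.
roundtrip = img.reshape(-1)
```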

2 Split features and labels, initial training

img=train.iloc[:5000,1:]

label=train.iloc[:5000,0]

train_data,test_data,train_label,test_label=train_test_split(
    img,label,test_size=0.2,random_state=0)

# Train

svc=svm.SVC(C=3)

svc.fit(train_data,train_label)

print(svc.score(train_data,train_label))

print(svc.score(test_data,test_label))

1.0

0.1

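Training accuracy is 1.0, but accuracy on the held-out split is only 0.1, no better than random guessing among ten digits: the model has memorized the training set. The default SVC parameters are not suitable for these raw features, so tuning is needed.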
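One likely explanation: in scikit-learn releases before 0.22, `SVC` defaulted to the RBF kernel with `gamma = 1/n_features`. On raw 0-255 pixels the squared distance between two images is so large that `exp(-gamma * d^2)` underflows to zero, so every training point looks unrelated to every other. The sketch below illustrates this on random synthetic "images" (an assumption, not the actual digit data); `rbf_kernel_matrix` is a helper defined here for illustration.

```python
import numpy as np

def rbf_kernel_matrix(X, gamma):
    """K[a, b] = exp(-gamma * ||X[a] - X[b]||^2), computed with plain NumPy."""
    sq_norms = (X ** 2).sum(axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * X @ X.T
    return np.exp(-gamma * np.maximum(sq_dists, 0.0))

rng = np.random.default_rng(0)
# Synthetic stand-in for 20 images of 784 pixels with values in 0..255.
raw = rng.integers(0, 256, size=(20, 784)).astype(float)
scaled = raw / 255.0

gamma = 1.0 / raw.shape[1]          # the old gamma='auto' default: 1 / n_features
off_diag = ~np.eye(len(raw), dtype=bool)

k_raw = rbf_kernel_matrix(raw, gamma)[off_diag]
k_scaled = rbf_kernel_matrix(scaled, gamma)[off_diag]

print("raw    0-255: max off-diagonal kernel value =", k_raw.max())
print("scaled 0-1  : min off-diagonal kernel value =", k_scaled.min())
```

Newer scikit-learn releases default to `gamma='scale'`, which also divides by the variance of the features and is therefore much less sensitive to the pixel range.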

3 Hyperparameter tuning

from sklearn.model_selection import GridSearchCV

svc_param={'C':[1,2,3,4,5,6,7,8,9,10],
           'kernel': ['linear','rbf'],
           'gamma': [0.5, 0.2, 0.1, 0.001, 0.0001]}

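def grid(model,data,label,param):
    # 5-fold cross-validated grid search; returns the best parameters and their mean CV accuracy
    search=GridSearchCV(model,param,cv=5,scoring='accuracy')
    search.fit(data,label)
    return search.best_params_,search.best_score_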

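This step is extremely slow: SVM training gets slow when there are many features, and the grid search repeats it for every parameter combination and fold.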

best_params,best_score=grid(svc,train_data,train_label,svc_param)

print(best_params)

print(best_score)

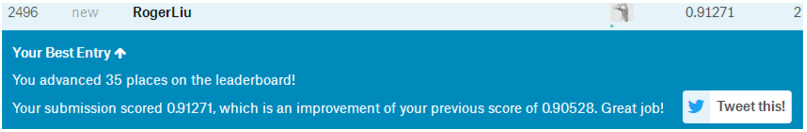

{'kernel': 'linear', 'C': 1, 'gamma': 0.5}

0.91475
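Part of the slowness is simple counting: the grid has 10 × 2 × 5 = 100 candidates, and `cv=5` fits each one five times. The linear kernel does not use `gamma`, so the `'gamma': 0.5` reported next to `'kernel': 'linear'` carries no information, and each linear candidate is fitted once per `gamma` value for nothing. One possible way to avoid that is a list of sub-grids; the sketch below only counts candidates with `ParameterGrid`, and `split_param` is a name introduced here.

```python
from sklearn.model_selection import ParameterGrid

C_values = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
gammas = [0.5, 0.2, 0.1, 0.001, 0.0001]

# The grid as written in the text: every kernel is combined with every gamma.
full_param = {'C': C_values, 'kernel': ['linear', 'rbf'], 'gamma': gammas}

# A list of dicts searches each sub-grid separately, so gamma is only
# varied for the rbf kernel, which actually uses it.
split_param = [
    {'kernel': ['linear'], 'C': C_values},
    {'kernel': ['rbf'], 'C': C_values, 'gamma': gammas},
]

n_full = len(ParameterGrid(full_param))
n_split = len(ParameterGrid(split_param))
cv = 5
print("full grid :", n_full, "candidates,", n_full * cv, "fits")
print("split grid:", n_split, "candidates,", n_split * cv, "fits")
```

`GridSearchCV` accepts such a list of dicts as its parameter grid, so `split_param` could be passed in place of `svc_param`.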

svc=svm.SVC(**best_params)

svc.fit(train_data,train_label)

print(svc.score(train_data,train_label))

print(svc.score(test_data,test_label))

1.0

0.91

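With the best parameters found, accuracy on the held-out split rises from 0.1 to 0.91, a clear improvement over the defaults.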

4 Predict and submit

test.info()

pred=svc.predict(test)

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 28000 entries, 0 to 27999

Columns: 784 entries, pixel0 to pixel783

dtypes: int64(784)

memory usage: 167.5 MB

sub_svc=pd.DataFrame({'ImageId':list(range(1,len(pred)+1)),'Label':pred})

sub_svc.to_csv('sub_svc.csv',header=True,index=False)
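The submission file built above has just two columns: `ImageId`, counting from 1, and `Label`, holding the predicted digit. A small sketch with made-up predictions shows the resulting CSV layout; the helper name `make_submission` is introduced here for illustration only.

```python
import numpy as np
import pandas as pd

def make_submission(pred):
    """Build the two-column submission frame from an array of predicted labels."""
    pred = np.asarray(pred)
    return pd.DataFrame({"ImageId": np.arange(1, len(pred) + 1), "Label": pred})

# Made-up predictions standing in for svc.predict(test).
demo = make_submission([2, 0, 9])
csv_text = demo.to_csv(index=False)
print(csv_text)
```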

5 Improvements

5.1 Increase the number of training samples (only 5000 were used at first)

number=20000

img=train.iloc[:number,1:]

label=train.iloc[:number,0]

train_data,test_data,train_label,test_label=train_test_split(
    img,label,test_size=0.2,random_state=0)

svc=svm.SVC(**best_params)

svc.fit(train_data,train_label)

print(svc.score(train_data,train_label))

print(svc.score(test_data,test_label))

1.0

0.90375

pred2=svc.predict(test)

sub_svc2=pd.DataFrame({'ImageId':list(range(1,len(pred2)+1)),'Label':pred2})

sub_svc2.to_csv('sub_svc2.csv',header=True,index=False)


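Increasing the number of training samples gives at most a small improvement. Note that the held-out accuracy printed above (0.90375) is measured on a different split from the earlier 0.91, so the two figures are not directly comparable.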
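A more systematic way to see how accuracy changes with sample count is a learning curve evaluated on fixed cross-validation folds. The sketch below is only an illustration: it uses scikit-learn's bundled 8x8 digits (`load_digits`) as a stand-in for the Kaggle data, and the train sizes and `cv=3` are arbitrary choices.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import learning_curve
from sklearn.svm import SVC

# Assumption: scikit-learn's bundled 8x8 digits stand in for the Kaggle data.
X, y = load_digits(return_X_y=True)

sizes, train_scores, val_scores = learning_curve(
    SVC(kernel='linear', C=1),
    X, y,
    train_sizes=[0.2, 0.5, 1.0],   # fractions of each training fold
    cv=3,
    scoring='accuracy',
    shuffle=True,
    random_state=0,
)

# One row per training size; average the scores over the folds.
for n, tr, va in zip(sizes, train_scores.mean(axis=1), val_scores.mean(axis=1)):
    print(n, "samples: train accuracy %.3f, validation accuracy %.3f" % (tr, va))
```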

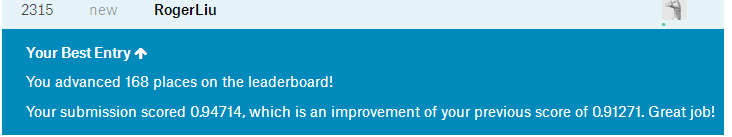

5.2 Feature scaling

Scale the grayscale values from 0-255 to the range 0-1.

test=test/255.0

number=5000

img=train.iloc[:number,1:]/255.0

label=train.iloc[:number,0]

train_data,test_data,train_label,test_label=train_test_split(
    img,label,test_size=0.2,random_state=0)

svc_param={'C':[1,2,3,4,5,6,7,8,9,10],
           'kernel': ['linear','rbf'],
           'gamma': [0.5, 0.2, 0.01, 0.001, 0.0001]}

def grid2(model,data,label,param):
    # Same search as grid(), but with 2-fold cross-validation to cut the running time
    search=GridSearchCV(model,param,cv=2,scoring='accuracy')
    search.fit(data,label)
    return search.best_params_,search.best_score_

best_params,best_score=grid2(svc,train_data,train_label,svc_param)

print(best_params)

print(best_score)

{'kernel': 'rbf', 'C': 4, 'gamma': 0.01}

0.94325

svc=svm.SVC(**best_params)

svc.fit(train_data,train_label)

print(svc.score(train_data,train_label))

print(svc.score(test_data,test_label))

0.99825

0.946

test=pd.read_csv('Digit/test.csv')

test.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 28000 entries, 0 to 27999

Columns: 784 entries, pixel0 to pixel783

dtypes: int64(784)

memory usage: 167.5 MB

test=pd.read_csv('Digit/test.csv')

test=test.iloc[:,:]/255.0

pred3=svc.predict(test)

sub_svc3=pd.DataFrame({'ImageId':list(range(1,len(pred3)+1)),'Label':pred3})

sub_svc3.to_csv('sub_svc3.csv',header=True,index=False)

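The improvement is clear (held-out accuracy rises from 0.91 to 0.946), which shows that feature scaling matters a great deal for SVM.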
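The code above scales `test` by hand in more than one place, which makes it easy to skip the step or apply it twice. One possible alternative is to put the scaling inside a scikit-learn `Pipeline`, so raw pixels go in at both fit and predict time. This is a sketch under assumptions: it runs on the bundled `load_digits` data (pixels 0..16) rather than the Kaggle files, and `scale_pixels` and `MAX_PIXEL` are names introduced here.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import FunctionTransformer
from sklearn.svm import SVC

MAX_PIXEL = 16.0   # load_digits pixels run 0..16; the Kaggle data would use 255.0

def scale_pixels(X):
    """Map raw pixel intensities to the range 0-1."""
    return np.asarray(X, dtype=float) / MAX_PIXEL

X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0)

# The scaler and the classifier travel together, so predict() and score()
# always see data scaled exactly as it was during fit().
model = make_pipeline(FunctionTransformer(scale_pixels), SVC(kernel='rbf', C=4))
model.fit(X_train, y_train)
held_out_accuracy = model.score(X_test, y_test)
print("held-out accuracy:", held_out_accuracy)
```

With this arrangement the raw test frame could be passed straight to `model.predict`, without a separate division step.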

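6 Summary

1 SVM can classify handwritten digits, but because every pixel is a feature, the 28*28 = 784 features make training slow and hyperparameter tuning slower still.

2 Increasing the number of training samples helps accuracy, but not significantly.

3 Feature scaling is essential for SVM. Accuracy improves clearly after scaling, and the best hyperparameters change: on the unscaled 0-255 data the best kernel is linear, while on the scaled 0-1 data the best kernel is rbf, with held-out accuracy rising from 0.91 to 0.946, a gain of about 0.036.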