There are two steps:

Step 1: Implement com.hankcs.hanlp.corpus.io.IIOAdapter

public class HadoopFileIoAdapter implements IIOAdapter {

@Override

public InputStream open(String path) throws IOException {

Configuration conf = new Configuration();

FileSystem fs = FileSystem.get(URI.create(path), conf);

return fs.open(new Path(path));

}

@Override

public OutputStream create(String path) throws IOException {

Configuration conf = new Configuration();

FileSystem fs = FileSystem.get(URI.create(path), conf);

OutputStream out = fs.create(new Path(path));

return out;

}

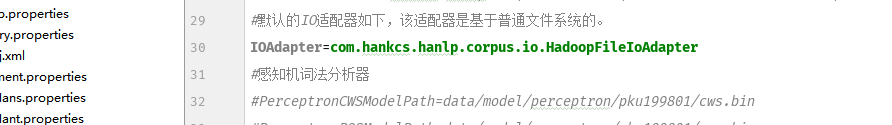

}第二步:修改配置文件。root为hdfs上的数据包,把IOAdapter改为咱们上面实现的类
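As a rough sketch, the two relevant entries in hanlp.properties might look like the fragment below. The NameNode address and the directory are placeholders I made up for illustration, and the class name assumes the adapter sits in the default package, so adjust all three to your own cluster and project.

```properties
# Hypothetical HDFS location of the HanLP data package (the directory that contains data/)
root=hdfs://namenode:8020/hanlp/
# The adapter from step 1; use the fully qualified name if the class lives in a package
IOAdapter=HadoopFileIoAdapter
```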

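One detail worth checking is the form of the root value. The adapter passes each path through URI.create(path) before calling FileSystem.get, and Hadoop picks the filesystem from the URI's scheme and authority; when both are missing it falls back to the default filesystem in the Configuration, which may or may not be HDFS depending on where the code runs. The small helper below is hypothetical (it is not part of HanLP or Hadoop) and uses only java.net.URI to show what a given path string would look like to FileSystem.get. The host, port, and directory are made-up values.

```java
import java.net.URI;

// Hypothetical helper, not part of HanLP or Hadoop: shows which parts of a
// path string FileSystem.get(URI, conf) would see after URI.create(path).
final class HdfsPathCheck {

    // Returns the URI scheme ("hdfs", "file", ...) or null when the path has none.
    static String schemeOf(String path) {
        return URI.create(path).getScheme();
    }

    // Returns the "host:port" part of the URI, or null when the path has none.
    static String authorityOf(String path) {
        return URI.create(path).getAuthority();
    }

    public static void main(String[] args) {
        // Made-up NameNode address and directory, for illustration only.
        String full = "hdfs://namenode:8020/hanlp/data/dictionary/CoreNatureDictionary.txt";
        String bare = "/hanlp/data/dictionary/CoreNatureDictionary.txt";

        System.out.println(schemeOf(full) + " " + authorityOf(full)); // prints "hdfs namenode:8020"
        System.out.println(schemeOf(bare) + " " + authorityOf(bare)); // prints "null null"
    }
}
```

If the second form is what your configuration produces, the files will be looked up on whatever the default filesystem is, so spelling out the full hdfs:// prefix in root is the safer choice.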

OK, with that in place you can use HanLP for word segmentation on a distributed cluster.

Hope this helps anyone who needs it.