HBase in Practice (5): Spark SQL + Hive + HBase, Using Spark to Operate on a Distributed HBase Cluster

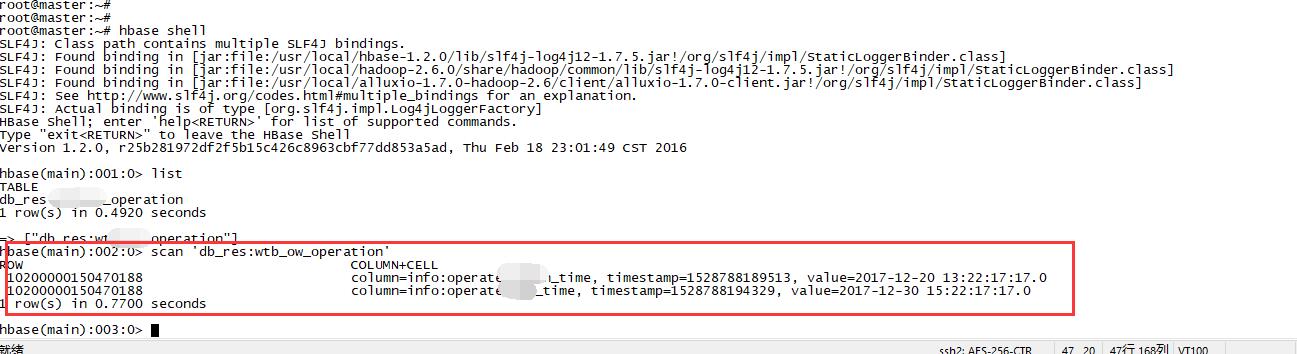

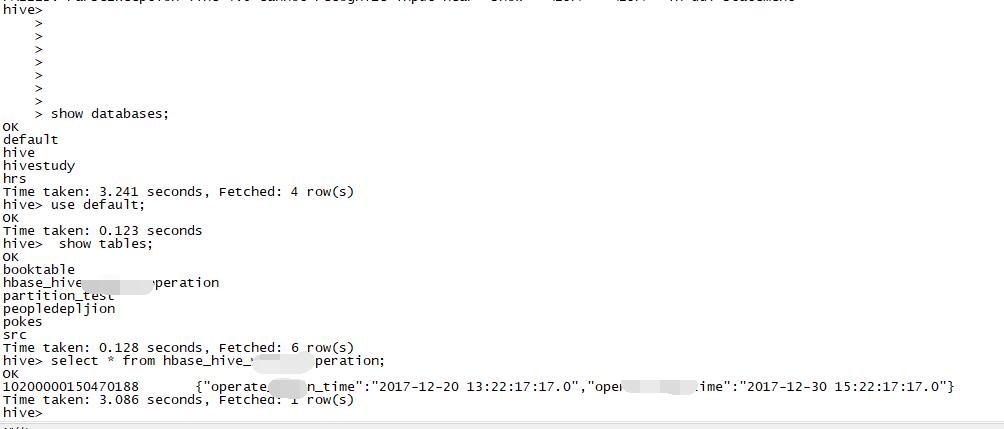

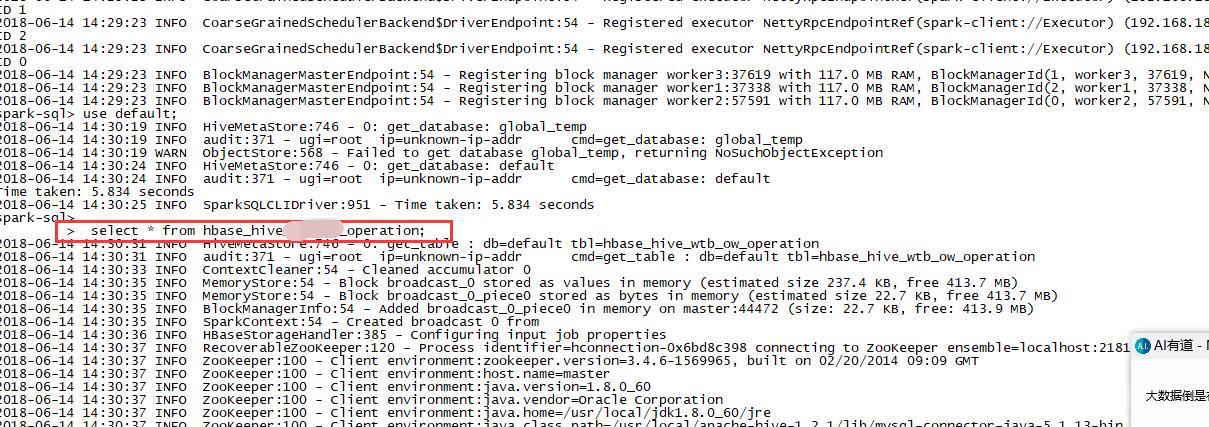

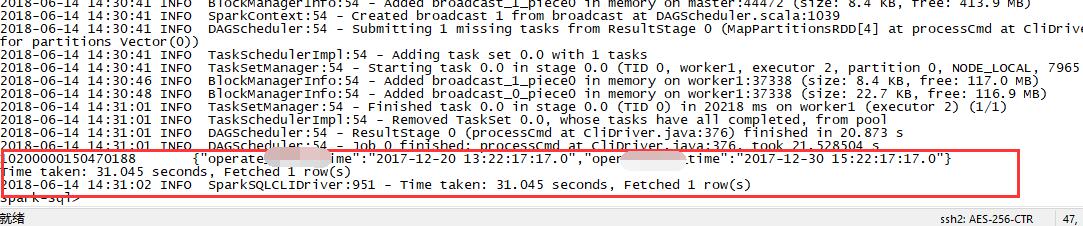

This article uses spark-sql, the SQL command-line tool that ships with Spark, to operate on HBase data through Hive.

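The SQL statements are submitted through spark-sql on a Spark cluster, using both local mode and cluster mode. The errors encountered along the way were caused by JAR files that had not all been loaded; loading the HBase-related JARs at submission time is enough to make the queries run.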
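As a minimal sketch of the "load the HBase-related JARs at submission time" idea, the comma-separated value for `--jars` can be assembled from a list of paths rather than typed as one long string. The helper name `join_jars` and the variables `HIVE_LIB`, `HBASE_LIB`, and `JARS` are assumptions introduced for illustration; the paths and the master URL are the ones used in this article, and only a subset of the JARs is listed to keep the sketch short.

```shell
# Install locations as used in this article; adjust them to your own layout.
HIVE_LIB=/usr/local/apache-hive-1.2.1/lib
HBASE_LIB=/usr/local/hbase-1.2.0/lib

# join_jars is a hypothetical helper: it joins its arguments with commas,
# which is the separator that --jars expects.
join_jars() {
  local IFS=,
  echo "$*"
}

JARS=$(join_jars \
  "$HIVE_LIB/mysql-connector-java-5.1.13-bin.jar" \
  "$HIVE_LIB/hive-hbase-handler-1.2.1.jar" \
  "$HBASE_LIB/hbase-client-1.2.0.jar" \
  "$HBASE_LIB/hbase-common-1.2.0.jar" \
  "$HBASE_LIB/hbase-protocol-1.2.0.jar" \
  "$HBASE_LIB/hbase-server-1.2.0.jar")

# Print the command instead of running it, so the sketch has no side effects.
echo spark-sql --master spark://192.168.189.1:7077 --jars "$JARS"
```

Keeping one path per line makes it easier to spot a missing JAR when a `ClassNotFoundException` shows up.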

root@master:~# spark-sql --master spark://192.168.189.1:7077 --driver-class-path /usr/local/apache-hive-1.2.1/lib/mysql-connector-java-5.1.13-bin.jar --jars /usr/local/apache-hive-1.2.1/lib/mysql-connector-java-5.1.13-bin.jar,/usr/local/apache-hive-1.2.1/lib/hive-hbase-handler-1.2.1.jar,/usr/local/hbase-1.2.0/lib/hbase-client-1.2.0.jar,/usr/local/hbase-1.2.0/lib/hbase-common-1.2.0.jar,/usr/local/hbase-1.2.0/lib/hbase-protocol-1.2.0.jar,/usr/local/hbase-1.2.0/lib/hbase-server-1.2.0.jar,/usr/local/hbase-1.2.0/lib/htrace-core-3.1.0-incubating.jar,/usr/local/hbase-1.2.0/lib/metrics-core-2.2.0.jar,/usr/local/hbase-1.2.0/lib/hbase-hadoop2-compat-1.2.0.jar,/usr/local/hbase-1.2.0/lib/guava-12.0.1.jar,/usr/local/hbase-1.2.0/lib/protobuf-java-2.5.0.jar --executor-memory 512m --total-executor-cores 4

Steps:

1. With Spark 2.3.0 in local mode, launching the spark-sql client directly fails with an error: the class "com.mysql.jdbc.Driver" cannot be found.

root@master:/usr/local/spark-2.3.0-bin-hadoop2.6/bin# spark-sql

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/alluxio-1.7.0-hadoop-2.6/client/alluxio-1.7.0-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/spark-2.3.0-bin-hadoop2.6/jars/slf4j-log4j12-1.7.16.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

2018-06-14 10:22:53 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2018-06-14 10:22:57 WARN HiveConf:2753 - HiveConf of name hive.server2.http.endpoint does not exist

2018-06-14 10:22:59 INFO HiveMetaStore:589 - 0: Opening raw store with implemenation class:org.apache.hadoop.hive.metastore.ObjectStore

2018-06-14 10:22:59 INFO ObjectStore:289 - ObjectStore, initialize called

2018-06-14 10:23:00 INFO Persistence:77 - Property hive.metastore.integral.jdo.pushdown unknown - will be ignored

2018-06-14 10:23:00 INFO Persistence:77 - Property datanucleus.cache.level2 unknown - will be ignored

2018-06-14 10:23:00 WARN HiveMetaStore:622 - Retrying creating default database after error: Error creating transactional connection factory

javax.jdo.JDOFatalInternalException: Error creating transactional connection factory

at org.datanucleus.api.jdo.NucleusJDOHelper.getJDOExceptionForNucleusException(NucleusJDOHelper.java:587)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.freezeConfiguration(JDOPersistenceManagerFactory.java:788)

... 52 more

Caused by: org.datanucleus.exceptions.NucleusException: Attempt to invoke the "BONECP" plugin to create a ConnectionPool gave an error : The specified datastore driver ("com.mysql.jdbc.Driver") was not found in the CLASSPATH. Please check your CLASSPATH specification, and the name of the driver.

at org.datanucleus.store.rdbms.ConnectionFactoryImpl.generateDataSources(ConnectionFactoryImpl.java:259)

at org.datanucleus.store.rdbms.ConnectionFactoryImpl.initialiseDataSources(ConnectionFactoryImpl.java:131)

at org.datanucleus.store.rdbms.ConnectionFactoryImpl.<init>(ConnectionFactoryImpl.java:85)

... 70 more

Caused by: org.datanucleus.store.rdbms.connectionpool.DatastoreDriverNotFoundException: The specified datastore driver ("com.mysql.jdbc.Driver") was not found in the CLASSPATH. Please check your CLASSPATH specification, and the name of the driver.

at org.datanucleus.store.rdbms.connectionpool.AbstractConnectionPoolFactory.loadDriver(AbstractConnectionPoolFactory.java:58)

at org.datanucleus.store.rdbms.connectionpool.BoneCPConnectionPoolFactory.createConnectionPool(BoneCPConnectionPoolFactory.java:54)

at org.datanucleus.store.rdbms.ConnectionFactoryImpl.generateDataSources(ConnectionFactoryImpl.java:238)

... 72 more

2018-06-14 10:23:01 INFO ShutdownHookManager:54 - Shutdown hook called

2018-06-14 10:23:01 INFO ShutdownHookManager:54 - Deleting directory /tmp/spark-2fa09172-d8d5-4f4d-ba5f-84faee7c7d52
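The trace above says the driver class "com.mysql.jdbc.Driver" is not on the CLASSPATH. Before retrying, it can help to confirm that the MySQL connector JAR is actually readable at the path that will be handed to `--driver-class-path`. The following is only a sketch: `missing_jars` and `DRIVER_JAR` are hypothetical names introduced here, and the path is the one used in this article.

```shell
# missing_jars is a hypothetical helper: it prints every argument
# that is not a readable file, one path per line.
missing_jars() {
  for jar in "$@"; do
    [ -r "$jar" ] || echo "$jar"
  done
}

# Path of the MySQL connector as used in this article; adjust as needed.
DRIVER_JAR=/usr/local/apache-hive-1.2.1/lib/mysql-connector-java-5.1.13-bin.jar

if [ -z "$(missing_jars "$DRIVER_JAR")" ]; then
  echo "driver JAR found: $DRIVER_JAR"
else
  echo "driver JAR missing: $DRIVER_JAR" >&2
fi
```

The same helper accepts several paths at once, so it could also be pointed at the HBase JARs before a cluster-mode submission.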

root@master:/usr/local/spark-2.3.0-bin-hadoop2.6/bin#

2. Start the Spark cluster.

root@master:~# /usr/local/spark-2.3.0-bin-hadoop2.6/sbin/start-all.sh

starting org.apache.spark.deploy.master.Master, logging to /usr/local/spark-2.3.0-bin-hadoop2.6/logs/spark-root-org.apache.spark.deploy.master.Master-1-master.out

worker2: starting org.apache.spark.deploy.worker.Worker, logging to /usr/local/spark-2.3.0-bin-hadoop2.6/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-worker2.out

worker1: starting org.apache.spark.deploy.worker.Worker, logging to /usr/local/spark-2.3.0-bin-hadoop2.6/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-worker1.out

worker1: failed to launch: nice -n 0 /usr/local/spark-2.3.0-bin-hadoop2.6/bin/spark-class org.apache.spark.deploy.worker.Worker --webui-port 8081 spark://master:7077

worker1: full log in /usr/local/spark-2.3.0-bin-hadoop2.6/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-worker1.out

worker3: starting org.apache.spark.deploy.worker.Worker, logging to /usr/local/spark-2.3.0-bin-hadoop2.6/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-worker3.out

root@master:~# jps

3075 ResourceManager

3715 HRegionServer

3395 QuorumPeerMain

2932 SecondaryNameNode

4613 Jps

3543 HMaster

2715 NameNode

3823 RunJar

4543 Master
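The `jps` listing above is how the running Spark Master is confirmed on the master node. A small sketch of automating that check follows; `has_daemon` is a hypothetical helper introduced here, and it assumes the plain `jps` output format shown above (process ID, then class name). It compares the name exactly, so `HMaster` (HBase) is not mistaken for `Master` (Spark).

```shell
# has_daemon is a hypothetical helper: it reads jps-style output on stdin
# and succeeds only if a process with exactly the given name is listed.
has_daemon() {
  awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}

# Run the check only where jps is available.
if command -v jps >/dev/null 2>&1; then
  if jps | has_daemon Master; then
    echo "Spark Master is running"
  else
    echo "Spark Master is not running" >&2
  fi
fi
```

Workers run on the other hosts, so the same check with `Worker` would need to be run on each worker node.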

root@master:~#

3. Use spark-sql on the Spark cluster.

root@master:~# spark-sql --master spark://192.168.189.1:7077 --driver-class-path /usr/local/apache-hive-1.2.1/lib/mysql-connector-java-5.1.13-bin.jar --executor-memory 512m --total-executor-cores 4

2018-06-14 10:31:36 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2018-06-14 10:31:37 WARN HiveConf:2753 - HiveConf of name hive.server2.http.endpoint does not exist

2018-06-14 10:31:38 INFO HiveMetaStore:589 - 0: Opening raw store with implemenation class:org.apache.hadoop.hive.metastore.ObjectStore

2018-06-14 10:31:38 INFO ObjectStore:289 - ObjectStore, initialize called

2018-06-14 10:31:39 INFO Persistence:77 - Property hive.metastore.integral.jdo.pushdown unknown - will be ignored

2018-06-14 10:31:39 INFO Persistence:77 - Property datanucleus.cache.level2 unknown - will be ignored

2018-06-14 10:31:43 WARN HiveConf:2753 - HiveConf of name hive.server2.http.endpoint does not exist

2018-06-14 10:31:43 INFO ObjectStore:370 - Setting MetaStore object pin classes with hive.metastore.cache.pinobjtypes="Table,StorageDescriptor,SerDeInfo,Partition,Database,Type,FieldSchema,Order"

2018-06-14 10:31:45 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.

2018-06-14 10:31:45 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.

2018-06-14 10:31:49 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.

2018-06-14 10:31:49 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.

2018-06-14 10:31:50 INFO Query:77 - Reading in results for query "org.datanucleus.store.rdbms.query.SQLQuery@0" since the connection used is closing

2018-06-14 10:31:50 INFO MetaStoreDirectSql:139 - Using direct SQL, underlying DB is MYSQL

2018-06-14 10:31:51 INFO ObjectStore:272 - Initialized ObjectStore

2018-06-14 10:31:51 INFO HiveMetaStore:663 - Added admin role in metastore

2018-06-14 10:31:51 INFO HiveMetaStore:672 - Added public role in metastore

2018-06-14 10:31:51 INFO HiveMetaStore:712 - No user is added in admin role, since config is empty

2018-06-14 10:31:52 INFO HiveMetaStore:746 - 0: get_all_databases

2018-06-14 10:31:52 INFO audit:371 - ugi=root ip=unknown-ip-addr cmd=get_all_databases

2018-06-14 10:31:52 INFO HiveMetaStore:746 - 0: get_functions: db=default pat=*

2018-06-14 10:31:52 INFO audit:371 - ugi=root ip=unknown-ip-addr cmd=get_functions: db=default pat=*

2018-06-14 10:31:52 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MResourceUri" is tagged as "embedded-only" so does not have its own datastore table.

2018-06-14 10:31:52 INFO HiveMetaStore:746 - 0: get_functions: db=hive pat=*

2018-06-14 10:31:52 INFO audit:371 - ugi=root ip=unknown-ip-addr cmd=get_functions: db=hive pat=*

2018-06-14 10:31:52 INFO HiveMetaStore:746 - 0: get_functions: db=hivestudy pat=*

2018-06-14 10:31:52 INFO audit:371 - ugi=root ip=unknown-ip-addr cmd=get_functions: db=hivestudy pat=*

2018-06-14 10:31:52 INFO HiveMetaStore:746 - 0: get_functions: db=hrs pat=*

2018-06-14 10:31:52 INFO audit:371 - ugi=root ip=unknown-ip-addr cmd=get_functions: db=hrs pat=*

2018-06-14 10:31:55 INFO SessionState:641 - Created local directory: /tmp/999d5941-24ad-4579-a61c-ba719868dc9b_resources

2018-06-14 10:31:55 INFO SessionState:641 - Created HDFS directory: /tmp/hive/root/999d5941-24ad-4579-a61c-ba719868dc9b

2018-06-14 10:31:55 INFO SessionState:641 - Created local directory: /tmp/root/999d5941-24ad-4579-a61c-ba719868dc9b

2018-06-14 10:31:55 INFO SessionState:641 - Created HDFS directory: /tmp/hive/root/999d5941-24ad-4579-a61c-ba719868dc9b/_tmp_space.db

2018-06-14 10:31:56 INFO SparkContext:54 - Running Spark version 2.3.0

2018-06-14 10:31:56 INFO SparkContext:54 - Submitted application: SparkSQL::master

2018-06-14 10:31:57 INFO SecurityManager:54 - Changing view acls to: root

2018-06-14 10:31:57 INFO SecurityManager:54 - Changing modify acls to: root

2018-06-14 10:31:57 INFO SecurityManager:54 - Changing view acls groups to:

2018-06-14 10:31:57 INFO SecurityManager:54 - Changing modify acls groups to:

2018-06-14 10:31:57 INFO SecurityManager:54 - SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

2018-06-14 10:31:58 INFO Utils:54 - Successfully started service 'sparkDriver' on port 49760.

2018-06-14 10:31:58 INFO SparkEnv:54 - Registering MapOutputTracker

2018-06-14 10:31:59 INFO SparkEnv:54 - Registering BlockManagerMaster

2018-06-14 10:31:59 INFO BlockManagerMasterEndpoint:54 - Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

2018-06-14 10:31:59 INFO BlockManagerMasterEndpoint:54 - BlockManagerMasterEndpoint up

2018-06-14 10:31:59 INFO DiskBlockManager:54 - Created local directory at /tmp/blockmgr-e9250684-2aee-4f04-8466-28c951fa52cf

2018-06-14 10:31:59 INFO MemoryStore:54 - MemoryStore started with capacity 413.9 MB

2018-06-14 10:31:59 INFO SparkEnv:54 - Registering OutputCommitCoordinator

2018-06-14 10:32:00 INFO log:192 - Logging initialized @26885ms

2018-06-14 10:32:00 INFO Server:346 - jetty-9.3.z-SNAPSHOT

2018-06-14 10:32:00 INFO Server:414 - Started @27370ms

2018-06-14 10:32:01 INFO AbstractConnector:278 - Started ServerConnector@4aa31ffc{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

2018-06-14 10:32:01 INFO Utils:54 - Successfully started service 'SparkUI' on port 4040.

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@4a9860{/jobs,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@7f85217c{/jobs/json,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@1fd7a37{/jobs/job,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@58a84a12{/jobs/job/json,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@e700eba{/stages,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@6436e181{/stages/json,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@7186b202{/stages/stage,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@36068727{/stages/stage/json,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@72543547{/stages/pool,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@3d88e6b9{/stages/pool/json,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@22bf9122{/storage,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@208205ed{/storage/json,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@73afe2b7{/storage/rdd,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@9687f55{/storage/rdd/json,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@5700c9db{/environment,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@671d03bb{/environment/json,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@6babffb5{/executors,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@2173a742{/executors/json,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@706ceca6{/executors/threadDump,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@7f6329cb{/executors/threadDump/json,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@4b8137c5{/static,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@31973858{/,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@65514add{/api,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@10850d17{/jobs/job/kill,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@51e754e1{/stages/stage/kill,null,AVAILABLE,@Spark}

2018-06-14 10:32:01 INFO SparkUI:54 - Bound SparkUI to 0.0.0.0, and started at http://master:4040

2018-06-14 10:32:02 INFO StandaloneAppClient$ClientEndpoint:54 - Connecting to master spark://192.168.189.1:7077...

2018-06-14 10:32:02 INFO TransportClientFactory:267 - Successfully created connection to /192.168.189.1:7077 after 164 ms (0 ms spent in bootstraps)

2018-06-14 10:32:03 INFO StandaloneSchedulerBackend:54 - Connected to Spark cluster with app ID app-20180614103203-0000

2018-06-14 10:32:03 INFO Utils:54 - Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 35493.

2018-06-14 10:32:03 INFO NettyBlockTransferService:54 - Server created on master:35493

2018-06-14 10:32:03 INFO BlockManager:54 - Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

2018-06-14 10:32:03 INFO BlockManagerMaster:54 - Registering BlockManager BlockManagerId(driver, master, 35493, None)

2018-06-14 10:32:03 INFO BlockManagerMasterEndpoint:54 - Registering block manager master:35493 with 413.9 MB RAM, BlockManagerId(driver, master, 35493, None)

2018-06-14 10:32:03 INFO BlockManagerMaster:54 - Registered BlockManager BlockManagerId(driver, master, 35493, None)

2018-06-14 10:32:03 INFO BlockManager:54 - Initialized BlockManager: BlockManagerId(driver, master, 35493, None)

2018-06-14 10:32:04 INFO StandaloneAppClient$ClientEndpoint:54 - Executor added: app-20180614103203-0000/0 on worker-20180614103000-worker1-36930 (worker1:36930) with 1 core(s)

2018-06-14 10:32:04 INFO StandaloneSchedulerBackend:54 - Granted executor ID app-20180614103203-0000/0 on hostPort worker1:36930 with 1 core(s), 512.0 MB RAM

2018-06-14 10:32:04 INFO StandaloneAppClient$ClientEndpoint:54 - Executor added: app-20180614103203-0000/1 on worker-20180614103020-worker3-48018 (worker3:48018) with 1 core(s)

2018-06-14 10:32:04 INFO StandaloneSchedulerBackend:54 - Granted executor ID app-20180614103203-0000/1 on hostPort worker3:48018 with 1 core(s), 512.0 MB RAM

2018-06-14 10:32:04 INFO StandaloneAppClient$ClientEndpoint:54 - Executor added: app-20180614103203-0000/2 on worker-20180614103016-worker2-53644 (worker2:53644) with 1 core(s)

2018-06-14 10:32:04 INFO StandaloneSchedulerBackend:54 - Granted executor ID app-20180614103203-0000/2 on hostPort worker2:53644 with 1 core(s), 512.0 MB RAM

2018-06-14 10:32:05 INFO StandaloneAppClient$ClientEndpoint:54 - Executor updated: app-20180614103203-0000/2 is now RUNNING

2018-06-14 10:32:05 INFO StandaloneAppClient$ClientEndpoint:54 - Executor updated: app-20180614103203-0000/0 is now RUNNING

2018-06-14 10:32:05 INFO StandaloneAppClient$ClientEndpoint:54 - Executor updated: app-20180614103203-0000/1 is now RUNNING

2018-06-14 10:32:07 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@644d1b61{/metrics/json,null,AVAILABLE,@Spark}

2018-06-14 10:32:07 INFO StandaloneSchedulerBackend:54 - SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.0

2018-06-14 10:32:10 INFO SharedState:54 - loading hive config file: file:/usr/local/spark-2.3.0-bin-hadoop2.6/conf/hive-site.xml

2018-06-14 10:32:10 INFO SharedState:54 - spark.sql.warehouse.dir is not set, but hive.metastore.warehouse.dir is set. Setting spark.sql.warehouse.dir to the value of hive.metastore.warehouse.dir ('/user/hive/warehouse').

2018-06-14 10:32:10 INFO SharedState:54 - Warehouse path is '/user/hive/warehouse'.

2018-06-14 10:32:10 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@46a795de{/SQL,null,AVAILABLE,@Spark}

2018-06-14 10:32:10 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@256a0d95{/SQL/json,null,AVAILABLE,@Spark}

2018-06-14 10:32:10 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@44f0ff2b{/SQL/execution,null,AVAILABLE,@Spark}

2018-06-14 10:32:10 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@22ead351{/SQL/execution/json,null,AVAILABLE,@Spark}

2018-06-14 10:32:10 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@601eb4af{/static/sql,null,AVAILABLE,@Spark}

2018-06-14 10:32:13 INFO HiveUtils:54 - Initializing HiveMetastoreConnection version 1.2.1 using Spark classes.

2018-06-14 10:32:15 INFO HiveClientImpl:54 - Warehouse location for Hive client (version 1.2.2) is /user/hive/warehouse

2018-06-14 10:32:16 INFO HiveMetaStore:746 - 0: get_database: default

2018-06-14 10:32:16 INFO audit:371 - ugi=root ip=unknown-ip-addr cmd=get_database: default

2018-06-14 10:32:37 INFO StateStoreCoordinatorRef:54 - Registered StateStoreCoordinator endpoint

spark-sql> 2018-06-14 10:32:53 INFO CoarseGrainedSchedulerBackend$DriverEndpoint:54 - Registered executor NettyRpcEndpointRef(spark-client://Executor) (192.168.189.4:56970) with ID 1

2018-06-14 10:32:55 INFO CoarseGrainedSchedulerBackend$DriverEndpoint:54 - Registered executor NettyRpcEndpointRef(spark-client://Executor) (192.168.189.3:50799) with ID 2

2018-06-14 10:32:56 INFO BlockManagerMasterEndpoint:54 - Registering block manager worker2:53191 with 117.0 MB RAM, BlockManagerId(2, worker2, 53191, None)

2018-06-14 10:33:06 INFO BlockManagerMasterEndpoint:54 - Registering block manager worker3:47807 with 117.0 MB RAM, BlockManagerId(1, worker3, 47807, None)

spark-sql> show databases;

2018-06-14 10:39:20 INFO HiveMetaStore:746 - 0: get_database: global_temp

2018-06-14 10:39:20 INFO audit:371 - ugi=root ip=unknown-ip-addr cmd=get_database: global_temp

2018-06-14 10:39:21 WARN ObjectStore:568 - Failed to get database global_temp, returning NoSuchObjectException

2018-06-14 10:39:30 INFO HiveMetaStore:746 - 0: get_databases: *

2018-06-14 10:39:30 INFO audit:371 - ugi=root ip=unknown-ip-addr cmd=get_databases: *

2018-06-14 10:39:33 INFO CodeGenerator:54 - Code generated in 525.870215 ms

default

hive

hivestudy

hrs

Time taken: 12.771 seconds, Fetched 4 row(s)

2018-06-14 10:39:33 INFO SparkSQLCLIDriver:951 - Time taken: 12.771 seconds, Fetched 4 row(s)

spark-sql> use default;

2018-06-14 10:39:41 INFO HiveMetaStore:746 - 0: get_database: default

2018-06-14 10:39:41 INFO audit:371 - ugi=root ip=unknown-ip-addr cmd=get_database: default

Time taken: 0.102 seconds

2018-06-14 10:39:41 INFO SparkSQLCLIDriver:951 - Time taken: 0.102 seconds

spark-sql> show tables;

2018-06-14 10:39:46 INFO HiveMetaStore:746 - 0: get_database: default

2018-06-14 10:39:46 INFO audit:371 - ugi=root ip=unknown-ip-addr cmd=get_database: default

2018-06-14 10:39:46 INFO HiveMetaStore:746 - 0: get_database: default

2018-06-14 10:39:46 INFO audit:371 - ugi=root ip=unknown-ip-addr cmd=get_database: default

2018-06-14 10:39:46 INFO HiveMetaStore:746 - 0: get_tables: db=default pat=*

2018-06-14 10:39:46 INFO audit:371 - ugi=root ip=unknown-ip-addr cmd=get_tables: db=default pat=*

2018-06-14 10:39:47 INFO ContextCleaner:54 - Cleaned accumulator 0

2018-06-14 10:39:47 INFO ContextCleaner:54 - Cleaned accumulator 1

2018-06-14 10:39:47 INFO CodeGenerator:54 - Code generated in 34.691478 ms

default booktable false

default hbase_hive.....operation false

default partition_test false

default peopledepljion false

default pokes false

default src false

Time taken: 0.442 seconds, Fetched 6 row(s)

2018-06-14 10:39:47 INFO SparkSQLCLIDriver:951 - Time taken: 0.442 seconds, Fetched 6 row(s)

spark-sql> select * from hbase_hive_wtb_ow_operation;

2018-06-14 10:42:38 INFO HiveMetaStore:746 - 0: get_table : db=default tbl=hbase_hive_wtb_ow_operation

2018-06-14 10:42:38 INFO audit:371 - ugi=root ip=unknown-ip-addr cmd=get_table : db=default tbl=hbase_hive_wtb_ow_operation

2018-06-14 10:42:39 ERROR log:397 - error in initSerDe: java.lang.ClassNotFoundException Class org.apache.hadoop.hive.hbase.HBaseSerDe not found

java.lang.ClassNotFoundException: Class org.apache.hadoop.hive.hbase.HBaseSerDe not found

at org.apache.hadoop.conf.Configuration.getClassByName(Configuration.java:2060)

at org.apache.hadoop.hive.metastore.MetaStoreUtils.getDeserializer(MetaStoreUtils.java:385)

at org.apache.hadoop.hive.ql.metadata.Table.getDeserializerFromMetaStore(Table.java:276)

at org.apache.hadoop.hive.ql.metadata.Table.getDeserializer(Table.java:258)

at org.apache.hadoop.hive.ql.metadata.Table.getCols(Table.java:605)

at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$getTableOption$1$$anonfun$apply$7.apply(HiveClientImpl.scala:358)

at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$getTableOption$1$$anonfun$apply$7.apply(HiveClientImpl.scala:355)

at scala.Option.map(Option.scala:146)

at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$getTableOption$1.apply(HiveClientImpl.scala:355)

at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$getTableOption$1.apply(HiveClientImpl.scala:353)

at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$withHiveState$1.apply(HiveClientImpl.scala:272)

at org.apache.spark.sql.hive.client.HiveClientImpl.liftedTree1$1(HiveClientImpl.scala:210)

at org.apache.spark.sql.hive.client.HiveClientImpl.retryLocked(HiveClientImpl.scala:209)

at org.apache.spark.sql.hive.client.HiveClientImpl.withHiveState(HiveClientImpl.scala:255)

at org.apache.spark.sql.hive.client.HiveClientImpl.getTableOption(HiveClientImpl.scala:353)

at org.apache.spark.sql.hive.client.HiveClient$class.getTable(HiveClient.scala:81)

at org.apache.spark.sql.hive.client.HiveClientImpl.getTable(HiveClientImpl.scala:83)

at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$getRawTable$1.apply(HiveExternalCatalog.scala:118)

at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$getRawTable$1.apply(HiveExternalCatalog.scala:118)

at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:97)

at org.apache.spark.sql.hive.HiveExternalCatalog.getRawTable(HiveExternalCatalog.scala:117)

at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$getTable$1.apply(HiveExternalCatalog.scala:684)

at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$getTable$1.apply(HiveExternalCatalog.scala:684)

at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:97)

at org.apache.spark.sql.hive.HiveExternalCatalog.getTable(HiveExternalCatalog.scala:683)

at org.apache.spark.sql.catalyst.catalog.SessionCatalog.lookupRelation(SessionCatalog.scala:669)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$.org$apache$spark$sql$catalyst$analysis$Analyzer$ResolveRelations$$lookupTableFromCatalog(Analyzer.scala:660)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$.resolveRelation(Analyzer.scala:615)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$$anonfun$apply$8.applyOrElse(Analyzer.scala:645)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$$anonfun$apply$8.applyOrElse(Analyzer.scala:638)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$transformUp$1.apply(TreeNode.scala:289)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$transformUp$1.apply(TreeNode.scala:289)

at org.apache.spark.sql.catalyst.trees.CurrentOrigin$.withOrigin(TreeNode.scala:70)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformUp(TreeNode.scala:288)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$3.apply(TreeNode.scala:286)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$3.apply(TreeNode.scala:286)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$4.apply(TreeNode.scala:306)

at org.apache.spark.sql.catalyst.trees.TreeNode.mapProductIterator(TreeNode.scala:187)

at org.apache.spark.sql.catalyst.trees.TreeNode.mapChildren(TreeNode.scala:304)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformUp(TreeNode.scala:286)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$.apply(Analyzer.scala:638)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$.apply(Analyzer.scala:584)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1$$anonfun$apply$1.apply(RuleExecutor.scala:87)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1$$anonfun$apply$1.apply(RuleExecutor.scala:84)

at scala.collection.LinearSeqOptimized$class.foldLeft(LinearSeqOptimized.scala:124)

at scala.collection.immutable.List.foldLeft(List.scala:84)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1.apply(RuleExecutor.scala:84)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1.apply(RuleExecutor.scala:76)

at scala.collection.immutable.List.foreach(List.scala:381)

at org.apache.spark.sql.catalyst.rules.RuleExecutor.execute(RuleExecutor.scala:76)

at org.apache.spark.sql.catalyst.analysis.Analyzer.org$apache$spark$sql$catalyst$analysis$Analyzer$$executeSameContext(Analyzer.scala:123)

at org.apache.spark.sql.catalyst.analysis.Analyzer.execute(Analyzer.scala:117)

at org.apache.spark.sql.catalyst.analysis.Analyzer.executeAndCheck(Analyzer.scala:102)

at org.apache.spark.sql.execution.QueryExecution.analyzed$lzycompute(QueryExecution.scala:57)

at org.apache.spark.sql.execution.QueryExecution.analyzed(QueryExecution.scala:55)

at org.apache.spark.sql.execution.QueryExecution.assertAnalyzed(QueryExecution.scala:47)

at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:74)

at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:638)

at org.apache.spark.sql.SQLContext.sql(SQLContext.scala:694)

at org.apache.spark.sql.hive.thriftserver.SparkSQLDriver.run(SparkSQLDriver.scala:62)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver.processCmd(SparkSQLCLIDriver.scala:355)

at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:376)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver$.main(SparkSQLCLIDriver.scala:263)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver.main(SparkSQLCLIDriver.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:497)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:879)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:197)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:227)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:136)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

2018-06-14 10:42:39 ERROR Table:608 - Unable to get field from serde: org.apache.hadoop.hive.hbase.HBaseSerDe

java.lang.RuntimeException: MetaException(message:java.lang.ClassNotFoundException Class org.apache.hadoop.hive.hbase.HBaseSerDe not found)

at org.apache.hadoop.hive.ql.metadata.Table.getDeserializerFromMetaStore(Table.java:278)

at org.apache.hadoop.hive.ql.metadata.Table.getDeserializer(Table.java:258)

at org.apache.hadoop.hive.ql.metadata.Table.getCols(Table.java:605)

at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$getTableOption$1$$anonfun$apply$7.apply(HiveClientImpl.scala:358)

at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$getTableOption$1$$anonfun$apply$7.apply(HiveClientImpl.scala:355)

at scala.Option.map(Option.scala:146)

at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$getTableOption$1.apply(HiveClientImpl.scala:355)

at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$getTableOption$1.apply(HiveClientImpl.scala:353)

at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$withHiveState$1.apply(HiveClientImpl.scala:272)

at org.apache.spark.sql.hive.client.HiveClientImpl.liftedTree1$1(HiveClientImpl.scala:210)

at org.apache.spark.sql.hive.client.HiveClientImpl.retryLocked(HiveClientImpl.scala:209)

at org.apache.spark.sql.hive.client.HiveClientImpl.withHiveState(HiveClientImpl.scala:255)

at org.apache.spark.sql.hive.client.HiveClientImpl.getTableOption(HiveClientImpl.scala:353)

at org.apache.spark.sql.hive.client.HiveClient$class.getTable(HiveClient.scala:81)

at org.apache.spark.sql.hive.client.HiveClientImpl.getTable(HiveClientImpl.scala:83)

at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$getRawTable$1.apply(HiveExternalCatalog.scala:118)

at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$getRawTable$1.apply(HiveExternalCatalog.scala:118)

at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:97)

at org.apache.spark.sql.hive.HiveExternalCatalog.getRawTable(HiveExternalCatalog.scala:117)

at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$getTable$1.apply(HiveExternalCatalog.scala:684)

at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$getTable$1.apply(HiveExternalCatalog.scala:684)

at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:97)

at org.apache.spark.sql.hive.HiveExternalCatalog.getTable(HiveExternalCatalog.scala:683)

at org.apache.spark.sql.catalyst.catalog.SessionCatalog.lookupRelation(SessionCatalog.scala:669)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$.org$apache$spark$sql$catalyst$analysis$Analyzer$ResolveRelations$$lookupTableFromCatalog(Analyzer.scala:660)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$.resolveRelation(Analyzer.scala:615)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$$anonfun$apply$8.applyOrElse(Analyzer.scala:645)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$$anonfun$apply$8.applyOrElse(Analyzer.scala:638)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$transformUp$1.apply(TreeNode.scala:289)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$transformUp$1.apply(TreeNode.scala:289)

at org.apache.spark.sql.catalyst.trees.CurrentOrigin$.withOrigin(TreeNode.scala:70)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformUp(TreeNode.scala:288)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$3.apply(TreeNode.scala:286)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$3.apply(TreeNode.scala:286)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$4.apply(TreeNode.scala:306)

at org.apache.spark.sql.catalyst.trees.TreeNode.mapProductIterator(TreeNode.scala:187)

at org.apache.spark.sql.catalyst.trees.TreeNode.mapChildren(TreeNode.scala:304)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformUp(TreeNode.scala:286)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$.apply(Analyzer.scala:638)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$.apply(Analyzer.scala:584)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1$$anonfun$apply$1.apply(RuleExecutor.scala:87)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1$$anonfun$apply$1.apply(RuleExecutor.scala:84)

at scala.collection.LinearSeqOptimized$class.foldLeft(LinearSeqOptimized.scala:124)

at scala.collection.immutable.List.foldLeft(List.scala:84)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1.apply(RuleExecutor.scala:84)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1.apply(RuleExecutor.scala:76)

at scala.collection.immutable.List.foreach(List.scala:381)

at org.apache.spark.sql.catalyst.rules.RuleExecutor.execute(RuleExecutor.scala:76)

at org.apache.spark.sql.catalyst.analysis.Analyzer.org$apache$spark$sql$catalyst$analysis$Analyzer$$executeSameContext(Analyzer.scala:123)

at org.apache.spark.sql.catalyst.analysis.Analyzer.execute(Analyzer.scala:117)

at org.apache.spark.sql.catalyst.analysis.Analyzer.executeAndCheck(Analyzer.scala:102)

at org.apache.spark.sql.execution.QueryExecution.analyzed$lzycompute(QueryExecution.scala:57)

at org.apache.spark.sql.execution.QueryExecution.analyzed(QueryExecution.scala:55)

at org.apache.spark.sql.execution.QueryExecution.assertAnalyzed(QueryExecution.scala:47)

at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:74)

at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:638)

at org.apache.spark.sql.SQLContext.sql(SQLContext.scala:694)

at org.apache.spark.sql.hive.thriftserver.SparkSQLDriver.run(SparkSQLDriver.scala:62)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver.processCmd(SparkSQLCLIDriver.scala:355)

at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:376)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver$.main(SparkSQLCLIDriver.scala:263)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver.main(SparkSQLCLIDriver.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:497)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:879)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:197)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:227)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:136)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Caused by: MetaException(message:java.lang.ClassNotFoundException Class org.apache.hadoop.hive.hbase.HBaseSerDe not found)

at org.apache.hadoop.hive.metastore.MetaStoreUtils.getDeserializer(MetaStoreUtils.java:399)

at org.apache.hadoop.hive.ql.metadata.Table.getDeserializerFromMetaStore(Table.java:276)

... 71 more

2018-06-14 10:42:39 ERROR SparkSQLDriver:91 - Failed in [select * from hbase_hive_wtb_ow_operation]

java.lang.RuntimeException: org.apache.hadoop.hive.ql.metadata.HiveException: Error in loading storage handler.org.apache.hadoop.hive.hbase.HBaseStorageHandler

at org.apache.hadoop.hive.ql.metadata.Table.getStorageHandler(Table.java:292)

at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$getTableOption$1$$anonfun$apply$7.apply(HiveClientImpl.scala:388)

at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$getTableOption$1$$anonfun$apply$7.apply(HiveClientImpl.scala:355)

at scala.Option.map(Option.scala:146)

at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$getTableOption$1.apply(HiveClientImpl.scala:355)

at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$getTableOption$1.apply(HiveClientImpl.scala:353)

at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$withHiveState$1.apply(HiveClientImpl.scala:272)

at org.apache.spark.sql.hive.client.HiveClientImpl.liftedTree1$1(HiveClientImpl.scala:210)

at org.apache.spark.sql.hive.client.HiveClientImpl.retryLocked(HiveClientImpl.scala:209)

at org.apache.spark.sql.hive.client.HiveClientImpl.withHiveState(HiveClientImpl.scala:255)

at org.apache.spark.sql.hive.client.HiveClientImpl.getTableOption(HiveClientImpl.scala:353)

at org.apache.spark.sql.hive.client.HiveClient$class.getTable(HiveClient.scala:81)

at org.apache.spark.sql.hive.client.HiveClientImpl.getTable(HiveClientImpl.scala:83)

at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$getRawTable$1.apply(HiveExternalCatalog.scala:118)

at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$getRawTable$1.apply(HiveExternalCatalog.scala:118)

at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:97)

at org.apache.spark.sql.hive.HiveExternalCatalog.getRawTable(HiveExternalCatalog.scala:117)

at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$getTable$1.apply(HiveExternalCatalog.scala:684)

at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$getTable$1.apply(HiveExternalCatalog.scala:684)

at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:97)

at org.apache.spark.sql.hive.HiveExternalCatalog.getTable(HiveExternalCatalog.scala:683)

at org.apache.spark.sql.catalyst.catalog.SessionCatalog.lookupRelation(SessionCatalog.scala:669)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$.org$apache$spark$sql$catalyst$analysis$Analyzer$ResolveRelations$$lookupTableFromCatalog(Analyzer.scala:660)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$.resolveRelation(Analyzer.scala:615)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$$anonfun$apply$8.applyOrElse(Analyzer.scala:645)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$$anonfun$apply$8.applyOrElse(Analyzer.scala:638)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$transformUp$1.apply(TreeNode.scala:289)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$transformUp$1.apply(TreeNode.scala:289)

at org.apache.spark.sql.catalyst.trees.CurrentOrigin$.withOrigin(TreeNode.scala:70)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformUp(TreeNode.scala:288)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$3.apply(TreeNode.scala:286)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$3.apply(TreeNode.scala:286)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$4.apply(TreeNode.scala:306)

at org.apache.spark.sql.catalyst.trees.TreeNode.mapProductIterator(TreeNode.scala:187)

at org.apache.spark.sql.catalyst.trees.TreeNode.mapChildren(TreeNode.scala:304)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformUp(TreeNode.scala:286)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$.apply(Analyzer.scala:638)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$.apply(Analyzer.scala:584)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1$$anonfun$apply$1.apply(RuleExecutor.scala:87)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1$$anonfun$apply$1.apply(RuleExecutor.scala:84)

at scala.collection.LinearSeqOptimized$class.foldLeft(LinearSeqOptimized.scala:124)

at scala.collection.immutable.List.foldLeft(List.scala:84)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1.apply(RuleExecutor.scala:84)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1.apply(RuleExecutor.scala:76)

at scala.collection.immutable.List.foreach(List.scala:381)

at org.apache.spark.sql.catalyst.rules.RuleExecutor.execute(RuleExecutor.scala:76)

at org.apache.spark.sql.catalyst.analysis.Analyzer.org$apache$spark$sql$catalyst$analysis$Analyzer$$executeSameContext(Analyzer.scala:123)

at org.apache.spark.sql.catalyst.analysis.Analyzer.execute(Analyzer.scala:117)

at org.apache.spark.sql.catalyst.analysis.Analyzer.executeAndCheck(Analyzer.scala:102)

at org.apache.spark.sql.execution.QueryExecution.analyzed$lzycompute(QueryExecution.scala:57)

at org.apache.spark.sql.execution.QueryExecution.analyzed(QueryExecution.scala:55)

at org.apache.spark.sql.execution.QueryExecution.assertAnalyzed(QueryExecution.scala:47)

at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:74)

at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:638)

at org.apache.spark.sql.SQLContext.sql(SQLContext.scala:694)

at org.apache.spark.sql.hive.thriftserver.SparkSQLDriver.run(SparkSQLDriver.scala:62)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver.processCmd(SparkSQLCLIDriver.scala:355)

at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:376)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver$.main(SparkSQLCLIDriver.scala:263)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver.main(SparkSQLCLIDriver.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:497)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:879)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:197)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:227)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:136)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: Error in loading storage handler.org.apache.hadoop.hive.hbase.HBaseStorageHandler

at org.apache.hadoop.hive.ql.metadata.HiveUtils.getStorageHandler(HiveUtils.java:315)

at org.apache.hadoop.hive.ql.metadata.Table.getStorageHandler(Table.java:287)

... 69 more

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.hive.hbase.HBaseStorageHandler

at java.net.URLClassLoader.findClass(URLClassLoader.java:381)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

at java.lang.Class.forName0(Native Method)

at java.lang.Class.forName(Class.java:348)

at org.apache.hadoop.hive.ql.metadata.HiveUtils.getStorageHandler(HiveUtils.java:309)

... 70 more

spark-sql>

Check where hive-hbase-handler-1.2.1.jar is located:

root@master:/usr/local/apache-hive-1.2.1/lib# ls

accumulo-core-1.6.0.jar commons-httpclient-3.0.1.jar hive-hbase-handler-1.2.1.jar libfb303-0.9.2.jar

accumulo-fate-1.6.0.jar commons-io-2.4.jar hive-hwi-1.2.1.jar libthrift-0.9.2.jar

accumulo-start-1.6.0.jar commons-lang-2.6.jar hive-jdbc-1.2.1.jar log4j-1.2.16.jar

accumulo-trace-1.6.0.jar commons-logging-1.1.3.jar hive-jdbc-1.2.1-standalone.jar mail-1.4.1.jar

activation-1.1.jar commons-math-2.1.jar hive-metastore-1.2.1.jar maven-scm-api-1.4.jar

ant-1.9.1.jar commons-pool-1.5.4.jar hive-serde-1.2.1.jar maven-scm-provider-svn-commons-1.4.jar

ant-launcher-1.9.1.jar commons-vfs2-2.0.jar hive-service-1.2.1.jar maven-scm-provider-svnexe-1.4.jar

antlr-2.7.7.jar curator-client-2.6.0.jar hive-shims-0.20S-1.2.1.jar mysql-connector-java-5.1.13-bin.jar

antlr-runtime-3.4.jar curator-framework-2.6.0.jar hive-shims-0.23-1.2.1.jar netty-3.7.0.Final.jar

apache-curator-2.6.0.pom curator-recipes-2.6.0.jar hive-shims-1.2.1.jar opencsv-2.3.jar

apache-log4j-extras-1.2.17.jar datanucleus-api-jdo-3.2.6.jar hive-shims-common-1.2.1.jar oro-2.0.8.jar

asm-commons-3.1.jar datanucleus-core-3.2.10.jar hive-shims-scheduler-1.2.1.jar paranamer-2.3.jar

asm-tree-3.1.jar datanucleus-rdbms-3.2.9.jar hive-testutils-1.2.1.jar parquet-hadoop-bundle-1.6.0.jar

avro-1.7.5.jar derby-10.10.2.0.jar httpclient-4.4.jar pentaho-aggdesigner-algorithm-5.1.5-jhyde.jar

bonecp-0.8.0.RELEASE.jar eigenbase-properties-1.1.5.jar httpcore-4.4.jar php

calcite-avatica-1.2.0-incubating.jar geronimo-annotation_1.0_spec-1.1.1.jar ivy-2.4.0.jar plexus-utils-1.5.6.jar

calcite-core-1.2.0-incubating.jar geronimo-jaspic_1.0_spec-1.0.jar janino-2.7.6.jar py

calcite-linq4j-1.2.0-incubating.jar geronimo-jta_1.1_spec-1.1.1.jar jcommander-1.32.jar regexp-1.3.jar

commons-beanutils-1.7.0.jar groovy-all-2.1.6.jar jdo-api-3.0.1.jar servlet-api-2.5.jar

commons-beanutils-core-1.8.0.jar guava-14.0.1.jar jetty-all-7.6.0.v20120127.jar snappy-java-1.0.5.jar

commons-cli-1.2.jar hamcrest-core-1.1.jar jetty-all-server-7.6.0.v20120127.jar ST4-4.0.4.jar

commons-codec-1.4.jar hive-accumulo-handler-1.2.1.jar jline-2.12.jar stax-api-1.0.1.jar

commons-collections-3.2.1.jar hive-ant-1.2.1.jar joda-time-2.5.jar stringtemplate-3.2.1.jar

commons-compiler-2.7.6.jar hive-beeline-1.2.1.jar jpam-1.1.jar super-csv-2.2.0.jar

commons-compress-1.4.1.jar hive-cli-1.2.1.jar json-20090211.jar tempus-fugit-1.1.jar

commons-configuration-1.6.jar hive-common-1.2.1.jar jsr305-3.0.0.jar velocity-1.5.jar

commons-dbcp-1.4.jar hive-contrib-1.2.1.jar jta-1.1.jar xz-1.0.jar

commons-digester-1.8.jar hive-exec-1.2.1.jar junit-4.11.jar zookeeper-3.4.6.jar

root@master:/usr/local/apache-hive-1.2.1/lib# ls | grep hbase

hive-hbase-handler-1.2.1.jar
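The `ClassNotFoundException` above names `org.apache.hadoop.hive.hbase.HBaseStorageHandler`, and the `ls | grep hbase` check only confirms that a jar with a matching file name exists. As a rough sketch, the helper below looks inside each jar for the class itself. The function names `class_to_entry` and `find_jars_with_class` are hypothetical, and the sketch assumes `unzip` is installed (`jar tf` from the JDK lists entries as well).

```shell
# Convert a fully qualified class name to its entry path inside a jar.
class_to_entry() {
  printf '%s.class\n' "$(printf '%s' "$1" | tr '.' '/')"
}

# Print every jar in directory $1 that contains class $2.
# Assumption: `unzip` is available on this machine.
find_jars_with_class() {
  entry=$(class_to_entry "$2")
  for jar in "$1"/*.jar; do
    [ -f "$jar" ] || continue   # skip the unexpanded glob when no jar exists
    if unzip -l "$jar" 2>/dev/null | grep -qF "$entry"; then
      printf '%s\n' "$jar"
    fi
  done
}
```

For example, `find_jars_with_class /usr/local/apache-hive-1.2.1/lib org.apache.hadoop.hive.hbase.HBaseStorageHandler` should print the handler jar if the class is packaged there. The same call against the HBase `lib` directory can help track down any further classes that turn out to be missing.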

root@master:/usr/local/apache-hive-1.2.1/lib#

Add hive-hbase-handler-1.2.1.jar to the driver classpath and resubmit spark-sql to the Spark cluster.

root@master:~# spark-sql --master spark://192.168.189.1:7077 --driver-class-path /usr/local/apache-hive-1.2.1/lib/mysql-connector-java-5.1.13-bin.jar:/usr/local/apache-hive-1.2.1/lib/hive-hbase-handler-1.2.1.jar --executor-memory 512m --total-executor-cores 4
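The command above separates the `--driver-class-path` entries with a colon, whereas `--jars` expects a comma-separated list. Long classpath strings are easy to mistype by hand, so one option is to build them from a list of paths. The following is only a sketch: `join_by`, `HIVE_LIB`, and `DRIVER_CP` are names introduced here for illustration, and the jar paths are the ones used in this article.

```shell
# join_by SEP ITEM...: print the items joined with SEP between them.
join_by() {
  _sep="$1"
  shift
  _out=""
  for _item in "$@"; do
    if [ -z "$_out" ]; then
      _out="$_item"
    else
      _out="$_out$_sep$_item"
    fi
  done
  printf '%s\n' "$_out"
}

# Paths taken from this article; adjust them to your own installation.
HIVE_LIB=/usr/local/apache-hive-1.2.1/lib

# Colon-separated, as required by --driver-class-path.
DRIVER_CP=$(join_by : \
  "$HIVE_LIB/mysql-connector-java-5.1.13-bin.jar" \
  "$HIVE_LIB/hive-hbase-handler-1.2.1.jar")

echo "$DRIVER_CP"
```

The result can then be passed as `spark-sql --master spark://192.168.189.1:7077 --driver-class-path "$DRIVER_CP" --executor-memory 512m --total-executor-cores 4`. Calling `join_by ,` with the same style of argument list produces a value suitable for `--jars`.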

2018-06-14 13:58:33 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2018-06-14 13:58:36 WARN HiveConf:2753 - HiveConf of name hive.server2.http.endpoint does not exist

2018-06-14 13:58:36 INFO HiveMetaStore:589 - 0: Opening raw store with implemenation class:org.apache.hadoop.hive.metastore.ObjectStore

2018-06-14 13:58:36 INFO ObjectStore:289 - ObjectStore, initialize called

2018-06-14 13:58:37 INFO Persistence:77 - Property hive.metastore.integral.jdo.pushdown unknown - will be ignored

2018-06-14 13:58:37 INFO Persistence:77 - Property datanucleus.cache.level2 unknown - will be ignored

2018-06-14 13:58:39 WARN HiveConf:2753 - HiveConf of name hive.server2.http.endpoint does not exist

2018-06-14 13:58:39 INFO ObjectStore:370 - Setting MetaStore object pin classes with hive.metastore.cache.pinobjtypes="Table,StorageDescriptor,SerDeInfo,Partition,Database,Type,FieldSchema,Order"

2018-06-14 13:58:40 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.

2018-06-14 13:58:40 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.

2018-06-14 13:58:41 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.

2018-06-14 13:58:41 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.

2018-06-14 13:58:42 INFO Query:77 - Reading in results for query "org.datanucleus.store.rdbms.query.SQLQuery@0" since the connection used is closing

2018-06-14 13:58:42 INFO MetaStoreDirectSql:139 - Using direct SQL, underlying DB is MYSQL

2018-06-14 13:58:42 INFO ObjectStore:272 - Initialized ObjectStore

2018-06-14 13:58:42 INFO HiveMetaStore:663 - Added admin role in metastore

2018-06-14 13:58:42 INFO HiveMetaStore:672 - Added public role in metastore

2018-06-14 13:58:42 INFO HiveMetaStore:712 - No user is added in admin role, since config is empty

2018-06-14 13:58:43 INFO HiveMetaStore:746 - 0: get_all_databases

2018-06-14 13:58:43 INFO audit:371 - ugi=root ip=unknown-ip-addr cmd=get_all_databases

2018-06-14 13:58:43 INFO HiveMetaStore:746 - 0: get_functions: db=default pat=*

2018-06-14 13:58:43 INFO audit:371 - ugi=root ip=unknown-ip-addr cmd=get_functions: db=default pat=*

2018-06-14 13:58:43 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MResourceUri" is tagged as "embedded-only" so does not have its own datastore table.

2018-06-14 13:58:43 INFO HiveMetaStore:746 - 0: get_functions: db=hive pat=*

2018-06-14 13:58:43 INFO audit:371 - ugi=root ip=unknown-ip-addr cmd=get_functions: db=hive pat=*

2018-06-14 13:58:43 INFO HiveMetaStore:746 - 0: get_functions: db=hivestudy pat=*

2018-06-14 13:58:43 INFO audit:371 - ugi=root ip=unknown-ip-addr cmd=get_functions: db=hivestudy pat=*

2018-06-14 13:58:43 INFO HiveMetaStore:746 - 0: get_functions: db=hrs pat=*

2018-06-14 13:58:43 INFO audit:371 - ugi=root ip=unknown-ip-addr cmd=get_functions: db=hrs pat=*

2018-06-14 13:58:44 INFO SessionState:641 - Created local directory: /tmp/54a94cff-e4a8-4500-bd56-7eda47f5b72f_resources

2018-06-14 13:58:45 INFO SessionState:641 - Created HDFS directory: /tmp/hive/root/54a94cff-e4a8-4500-bd56-7eda47f5b72f

2018-06-14 13:58:45 INFO SessionState:641 - Created local directory: /tmp/root/54a94cff-e4a8-4500-bd56-7eda47f5b72f

2018-06-14 13:58:45 INFO SessionState:641 - Created HDFS directory: /tmp/hive/root/54a94cff-e4a8-4500-bd56-7eda47f5b72f/_tmp_space.db

2018-06-14 13:58:45 INFO SparkContext:54 - Running Spark version 2.3.0

2018-06-14 13:58:45 INFO SparkContext:54 - Submitted application: SparkSQL::master

2018-06-14 13:58:46 INFO SecurityManager:54 - Changing view acls to: root

2018-06-14 13:58:46 INFO SecurityManager:54 - Changing modify acls to: root

2018-06-14 13:58:46 INFO SecurityManager:54 - Changing view acls groups to:

2018-06-14 13:58:46 INFO SecurityManager:54 - Changing modify acls groups to:

2018-06-14 13:58:46 INFO SecurityManager:54 - SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

2018-06-14 13:58:46 INFO Utils:54 - Successfully started service 'sparkDriver' on port 35632.

2018-06-14 13:58:47 INFO SparkEnv:54 - Registering MapOutputTracker

2018-06-14 13:58:47 INFO SparkEnv:54 - Registering BlockManagerMaster

2018-06-14 13:58:47 INFO BlockManagerMasterEndpoint:54 - Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

2018-06-14 13:58:47 INFO BlockManagerMasterEndpoint:54 - BlockManagerMasterEndpoint up

2018-06-14 13:58:47 INFO DiskBlockManager:54 - Created local directory at /tmp/blockmgr-4040f00b-d75a-4d79-be9c-fdd17fc20bae

2018-06-14 13:58:47 INFO MemoryStore:54 - MemoryStore started with capacity 413.9 MB

2018-06-14 13:58:47 INFO SparkEnv:54 - Registering OutputCommitCoordinator

2018-06-14 13:58:47 INFO log:192 - Logging initialized @23015ms

2018-06-14 13:58:48 INFO Server:346 - jetty-9.3.z-SNAPSHOT

2018-06-14 13:58:48 INFO Server:414 - Started @23287ms

2018-06-14 13:58:48 INFO AbstractConnector:278 - Started ServerConnector@230fbd5{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

2018-06-14 13:58:48 INFO Utils:54 - Successfully started service 'SparkUI' on port 4040.

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@4ac8768e{/jobs,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@58a84a12{/jobs/json,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@e700eba{/jobs/job,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@7186b202{/jobs/job/json,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@6b649efa{/stages,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@65ef48f2{/stages/json,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@36068727{/stages/stage,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@22bf9122{/stages/stage/json,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@208205ed{/stages/pool,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@73afe2b7{/stages/pool/json,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@9687f55{/storage,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@5700c9db{/storage/json,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@671d03bb{/storage/rdd,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@6babffb5{/storage/rdd/json,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@2173a742{/environment,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@706ceca6{/environment/json,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@7f6329cb{/executors,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@4b8137c5{/executors/json,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@77f4c040{/executors/threadDump,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@606a1bc4{/executors/threadDump/json,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@6a15b73{/static,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@773014d3{/,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@7fedb795{/api,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@34451ed8{/jobs/job/kill,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@c1050f2{/stages/stage/kill,null,AVAILABLE,@Spark}

2018-06-14 13:58:48 INFO SparkUI:54 - Bound SparkUI to 0.0.0.0, and started at http://master:4040

2018-06-14 13:58:48 INFO StandaloneAppClient$ClientEndpoint:54 - Connecting to master spark://192.168.189.1:7077...

2018-06-14 13:58:49 INFO TransportClientFactory:267 - Successfully created connection to /192.168.189.1:7077 after 183 ms (0 ms spent in bootstraps)

2018-06-14 13:58:49 INFO StandaloneSchedulerBackend:54 - Connected to Spark cluster with app ID app-20180614135849-0001

2018-06-14 13:58:49 INFO StandaloneAppClient$ClientEndpoint:54 - Executor added: app-20180614135849-0001/0 on worker-20180614135333-worker2-34319 (worker2:34319) with 1 core(s)

2018-06-14 13:58:49 INFO StandaloneSchedulerBackend:54 - Granted executor ID app-20180614135849-0001/0 on hostPort worker2:34319 with 1 core(s), 512.0 MB RAM

2018-06-14 13:58:49 INFO StandaloneAppClient$ClientEndpoint:54 - Executor added: app-20180614135849-0001/1 on worker-20180614135333-worker3-40134 (worker3:40134) with 1 core(s)

2018-06-14 13:58:49 INFO StandaloneSchedulerBackend:54 - Granted executor ID app-20180614135849-0001/1 on hostPort worker3:40134 with 1 core(s), 512.0 MB RAM

2018-06-14 13:58:49 INFO StandaloneAppClient$ClientEndpoint:54 - Executor added: app-20180614135849-0001/2 on worker-20180614135333-worker1-35690 (worker1:35690) with 1 core(s)

2018-06-14 13:58:49 INFO StandaloneSchedulerBackend:54 - Granted executor ID app-20180614135849-0001/2 on hostPort worker1:35690 with 1 core(s), 512.0 MB RAM

2018-06-14 13:58:49 INFO Utils:54 - Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 49678.

2018-06-14 13:58:49 INFO NettyBlockTransferService:54 - Server created on master:49678

2018-06-14 13:58:49 INFO BlockManager:54 - Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

2018-06-14 13:58:49 INFO StandaloneAppClient$ClientEndpoint:54 - Executor updated: app-20180614135849-0001/2 is now RUNNING

2018-06-14 13:58:49 INFO StandaloneAppClient$ClientEndpoint:54 - Executor updated: app-20180614135849-0001/1 is now RUNNING

2018-06-14 13:58:49 INFO StandaloneAppClient$ClientEndpoint:54 - Executor updated: app-20180614135849-0001/0 is now RUNNING

2018-06-14 13:58:49 INFO BlockManagerMaster:54 - Registering BlockManager BlockManagerId(driver, master, 49678, None)

2018-06-14 13:58:49 INFO BlockManagerMasterEndpoint:54 - Registering block manager master:49678 with 413.9 MB RAM, BlockManagerId(driver, master, 49678, None)

2018-06-14 13:58:49 INFO BlockManagerMaster:54 - Registered BlockManager BlockManagerId(driver, master, 49678, None)

2018-06-14 13:58:49 INFO BlockManager:54 - Initialized BlockManager: BlockManagerId(driver, master, 49678, None)

2018-06-14 13:58:51 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@30bbcf91{/metrics/json,null,AVAILABLE,@Spark}

2018-06-14 13:58:51 INFO StandaloneSchedulerBackend:54 - SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.0

2018-06-14 13:58:52 INFO SharedState:54 - loading hive config file: file:/usr/local/spark-2.3.0-bin-hadoop2.6/conf/hive-site.xml

2018-06-14 13:58:52 INFO SharedState:54 - spark.sql.warehouse.dir is not set, but hive.metastore.warehouse.dir is set. Setting spark.sql.warehouse.dir to the value of hive.metastore.warehouse.dir ('/user/hive/warehouse').

2018-06-14 13:58:52 INFO SharedState:54 - Warehouse path is '/user/hive/warehouse'.

2018-06-14 13:58:52 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@256a0d95{/SQL,null,AVAILABLE,@Spark}

2018-06-14 13:58:52 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@2f3928ac{/SQL/json,null,AVAILABLE,@Spark}

2018-06-14 13:58:52 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@22ead351{/SQL/execution,null,AVAILABLE,@Spark}

2018-06-14 13:58:52 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@68af87ad{/SQL/execution/json,null,AVAILABLE,@Spark}

2018-06-14 13:58:52 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@11ede87f{/static/sql,null,AVAILABLE,@Spark}

2018-06-14 13:58:52 INFO HiveUtils:54 - Initializing HiveMetastoreConnection version 1.2.1 using Spark classes.

2018-06-14 13:58:52 INFO HiveClientImpl:54 - Warehouse location for Hive client (version 1.2.2) is /user/hive/warehouse

2018-06-14 13:58:52 INFO HiveMetaStore:746 - 0: get_database: default

2018-06-14 13:58:52 INFO audit:371 - ugi=root ip=unknown-ip-addr cmd=get_database: default

2018-06-14 13:58:55 INFO CoarseGrainedSchedulerBackend$DriverEndpoint:54 - Registered executor NettyRpcEndpointRef(spark-client://Executor) (192.168.189.4:48078) with ID 1

2018-06-14 13:58:55 INFO BlockManagerMasterEndpoint:54 - Registering block manager worker3:52725 with 117.0 MB RAM, BlockManagerId(1, worker3, 52725, None)

2018-06-14 13:59:00 INFO StateStoreCoordinatorRef:54 - Registered StateStoreCoordinator endpoint

spark-sql> 2018-06-14 13:59:11 INFO CoarseGrainedSchedulerBackend$DriverEndpoint:54 - Registered executor NettyRpcEndpointRef(spark-client://Executor) (192.168.189.2:47331) with ID 2

2018-06-14 13:59:11 INFO BlockManagerMasterEndpoint:54 - Registering block manager worker1:45606 with 117.0 MB RAM, BlockManagerId(2, worker1, 45606, None)

2018-06-14 13:59:15 INFO CoarseGrainedSchedulerBackend$DriverEndpoint:54 - Registered executor NettyRpcEndpointRef(spark-client://Executor) (192.168.189.3:46036) with ID 0

2018-06-14 13:59:15 INFO BlockManagerMasterEndpoint:54 - Registering block manager worker2:55353 with 117.0 MB RAM, BlockManagerId(0, worker2, 55353, None)

The error persists: Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.hbase.util.Bytes
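
A ClassNotFoundException for org.apache.hadoop.hbase.util.Bytes usually means that the JAR holding this class was not passed to spark-sql. As a rough sketch of how one might find the right JAR, the script below scans library directories for a given class name. The helper `jars_containing` is hypothetical (it is not part of Spark, Hive, or HBase), and the directories are assumed from the install paths used in this article; adjust them for your own layout.

```python
import zipfile
from pathlib import Path


def jars_containing(class_name, lib_dirs):
    """Return the JAR files under lib_dirs that contain the given class."""
    # A class a.b.C is stored inside a JAR as the entry a/b/C.class.
    entry = class_name.replace(".", "/") + ".class"
    matches = []
    for lib_dir in lib_dirs:
        for jar in sorted(Path(lib_dir).glob("*.jar")):
            try:
                with zipfile.ZipFile(jar) as zf:
                    if entry in zf.namelist():
                        matches.append(str(jar))
            except (zipfile.BadZipFile, OSError):
                # Skip unreadable or corrupt archives instead of aborting the scan.
                continue
    return matches


if __name__ == "__main__":
    # Assumed install locations, taken from the paths shown in this article.
    lib_dirs = ["/usr/local/hbase-1.2.0/lib", "/usr/local/apache-hive-1.2.1/lib"]
    for jar in jars_containing("org.apache.hadoop.hbase.util.Bytes", lib_dirs):
        print(jar)
```

Any JAR it reports can then be appended to the --jars list. In HBase 1.x this class normally ships in the hbase-common JAR, which matches the hbase-common-1.2.0.jar entry in the full command at the top of this article.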

>

> select * from hbase_hive_wtb_ow_operation;

2018-06-14 14:00:25 INFO HiveMetaStore:746 - 0: get_table : db=default tbl=hbase_hive_wtb_ow_operation

2018-06-14 14:00:25 INFO audit:371 - ugi=root ip=unknown-ip-addr cmd=get_table : db=default tbl=hbase_hive_wtb_ow_operation

2018-06-14 14:00:27 ERROR SparkSQLDriver:91 - Failed in [ select * from hbase_hive_wtb_ow_operation]

java.lang.NoClassDefFoundError: org/apache/hadoop/hbase/util/Bytes

at org.apache.hadoop.hive.hbase.HBaseSerDe.parseColumnsMapping(HBaseSerDe.java:184)

at org.apache.hadoop.hive.hbase.HBaseSerDeParameters.<init>(HBaseSerDeParameters.java:73)

at org.apache.hadoop.hive.hbase.HBaseSerDe.initialize(HBaseSerDe.java:117)

at org.apache.hadoop.hive.serde2.AbstractSerDe.initialize(AbstractSerDe.java:53)

at org.apache.hadoop.hive.serde2.SerDeUtils.initializeSerDe(SerDeUtils.java:521)

at org.apache.hadoop.hive.metastore.MetaStoreUtils.getDeserializer(MetaStoreUtils.java:391)

at org.apache.hadoop.hive.ql.metadata.Table.getDeserializerFromMetaStore(Table.java:276)

at org.apache.hadoop.hive.ql.metadata.Table.getDeserializer(Table.java:258)

at org.apache.hadoop.hive.ql.metadata.Table.getCols(Table.java:605)

at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$getTableOption$1$$anonfun$apply$7.apply(HiveClientImpl.scala:358)

at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$getTableOption$1$$anonfun$apply$7.apply(HiveClientImpl.scala:355)

at scala.Option.map(Option.scala:146)

at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$getTableOption$1.apply(HiveClientImpl.scala:355)

at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$getTableOption$1.apply(HiveClientImpl.scala:353)

at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$withHiveState$1.apply(HiveClientImpl.scala:272)

at org.apache.spark.sql.hive.client.HiveClientImpl.liftedTree1$1(HiveClientImpl.scala:210)

at org.apache.spark.sql.hive.client.HiveClientImpl.retryLocked(HiveClientImpl.scala:209)

at org.apache.spark.sql.hive.client.HiveClientImpl.withHiveState(HiveClientImpl.scala:255)

at org.apache.spark.sql.hive.client.HiveClientImpl.getTableOption(HiveClientImpl.scala:353)

at org.apache.spark.sql.hive.client.HiveClient$class.getTable(HiveClient.scala:81)

at org.apache.spark.sql.hive.client.HiveClientImpl.getTable(HiveClientImpl.scala:83)

at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$getRawTable$1.apply(HiveExternalCatalog.scala:118)

at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$getRawTable$1.apply(HiveExternalCatalog.scala:118)

at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:97)

at org.apache.spark.sql.hive.HiveExternalCatalog.getRawTable(HiveExternalCatalog.scala:117)

at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$getTable$1.apply(HiveExternalCatalog.scala:684)

at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$getTable$1.apply(HiveExternalCatalog.scala:684)

at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:97)

at org.apache.spark.sql.hive.HiveExternalCatalog.getTable(HiveExternalCatalog.scala:683)

at org.apache.spark.sql.catalyst.catalog.SessionCatalog.lookupRelation(SessionCatalog.scala:669)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$.org$apache$spark$sql$catalyst$analysis$Analyzer$ResolveRelations$$lookupTableFromCatalog(Analyzer.scala:660)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$.resolveRelation(Analyzer.scala:615)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$$anonfun$apply$8.applyOrElse(Analyzer.scala:645)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$$anonfun$apply$8.applyOrElse(Analyzer.scala:638)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$transformUp$1.apply(TreeNode.scala:289)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$transformUp$1.apply(TreeNode.scala:289)

at org.apache.spark.sql.catalyst.trees.CurrentOrigin$.withOrigin(TreeNode.scala:70)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformUp(TreeNode.scala:288)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$3.apply(TreeNode.scala:286)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$3.apply(TreeNode.scala:286)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$4.apply(TreeNode.scala:306)

at org.apache.spark.sql.catalyst.trees.TreeNode.mapProductIterator(TreeNode.scala:187)

at org.apache.spark.sql.catalyst.trees.TreeNode.mapChildren(TreeNode.scala:304)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformUp(TreeNode.scala:286)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$.apply(Analyzer.scala:638)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$.apply(Analyzer.scala:584)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1$$anonfun$apply$1.apply(RuleExecutor.scala:87)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1$$anonfun$apply$1.apply(RuleExecutor.scala:84)

at scala.collection.LinearSeqOptimized$class.foldLeft(LinearSeqOptimized.scala:124)

at scala.collection.immutable.List.foldLeft(List.scala:84)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1.apply(RuleExecutor.scala:84)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1.apply(RuleExecutor.scala:76)

at scala.collection.immutable.List.foreach(List.scala:381)

at org.apache.spark.sql.catalyst.rules.RuleExecutor.execute(RuleExecutor.scala:76)

at org.apache.spark.sql.catalyst.analysis.Analyzer.org$apache$spark$sql$catalyst$analysis$Analyzer$$executeSameContext(Analyzer.scala:123)

at org.apache.spark.sql.catalyst.analysis.Analyzer.execute(Analyzer.scala:117)

at org.apache.spark.sql.catalyst.analysis.Analyzer.executeAndCheck(Analyzer.scala:102)

at org.apache.spark.sql.execution.QueryExecution.analyzed$lzycompute(QueryExecution.scala:57)

at org.apache.spark.sql.execution.QueryExecution.analyzed(QueryExecution.scala:55)

at org.apache.spark.sql.execution.QueryExecution.assertAnalyzed(QueryExecution.scala:47)

at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:74)

at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:638)

at org.apache.spark.sql.SQLContext.sql(SQLContext.scala:694)

at org.apache.spark.sql.hive.thriftserver.SparkSQLDriver.run(SparkSQLDriver.scala:62)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver.processCmd(SparkSQLCLIDriver.scala:355)

at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:376)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver$.main(SparkSQLCLIDriver.scala:263)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver.main(SparkSQLCLIDriver.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:497)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:879)