def conv_backward(dZ, cache):

#从缓冲中恢复参数,前向传播时保存的

(A_prev, W, b, hparameters) = cache

(m, n_H_prev, n_W_prev, n_C_prev) = A_prev.shape

(f, f, n_C_prev, n_C) = W.shape

stride = hparameters['stride']

pad = hparameters['pad']

(m, n_H, n_W, n_C) = dZ.shape

dA_prev = np.zeros((m, n_H_prev, n_W_prev, n_C_prev))

dW = np.zeros((f, f, n_C_prev, n_C))

db = np.zeros((1, 1, 1, n_C))

#补零操作

A_prev_pad = zero_pad(A_prev, pad)

dA_prev_pad = zero_pad(dA_prev, pad)

for i in range(m):

# 4重循环 第一个样本,第二个高度,第三个宽度,第四个厚度

a_prev_pad = A_prev_pad[i]

da_prev_pad = dA_prev_pad[i]

for h in range(n_H):

for w in range(n_W):

for c in range(n_C):

#得到过滤器的二维坐标

vert_start = h * stride

vert_end = vert_start + f

horiz_start = w * stride

horiz_end = horiz_start + f

#吧过滤器切出来

a_slice = a_prev_pad[vert_start:vert_end, horiz_start:horiz_end, :]

da_prev_pad[vert_start:vert_end, horiz_start:horiz_end, :] += W[:,:,:,c] * dZ[i, h, w, c]

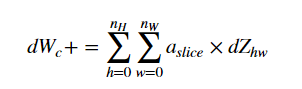

dW[:,:,:,c] += a_slice * dZ[i, h, w, c]#主要注意这里dw值是所有过滤器算出来相加的

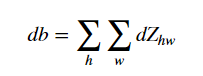

db[:,:,:,c] += dZ[i, h, w, c]

# Set the ith training example's dA_prev to the unpaded da_prev_pad (Hint: use X[pad:-pad, pad:-pad, :])

dA_prev[i, :, :, :] = dA_prev_pad[i, pad:-pad, pad:-pad, :]

return dA_prev, dW, db主要是理解卷积反向传播dw计算是 所有过滤器加起来
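To make the accumulation of dW concrete, here is a minimal sketch that checks it numerically on a single-channel example with no padding. The helpers `conv_forward_simple` and `dW_by_accumulation` are hypothetical names introduced only for this check and are not part of the code above. The check relies on the loss `sum(Z * dZ)` having gradient `dZ` with respect to `Z`.

```python
import numpy as np

def conv_forward_simple(a, W, stride=1):
    """Valid (no padding) single-channel convolution; hypothetical helper."""
    f = W.shape[0]
    n_H = (a.shape[0] - f) // stride + 1
    n_W = (a.shape[1] - f) // stride + 1
    Z = np.zeros((n_H, n_W))
    for h in range(n_H):
        for w in range(n_W):
            window = a[h * stride:h * stride + f, w * stride:w * stride + f]
            Z[h, w] = np.sum(window * W)
    return Z

def dW_by_accumulation(a, dZ, f, stride=1):
    """Sum a_slice * dZ[h, w] over every window position, as conv_backward does."""
    dW = np.zeros((f, f))
    for h in range(dZ.shape[0]):
        for w in range(dZ.shape[1]):
            a_slice = a[h * stride:h * stride + f, w * stride:w * stride + f]
            dW += a_slice * dZ[h, w]
    return dW

rng = np.random.default_rng(0)
a = rng.standard_normal((5, 5))    # input activation
W = rng.standard_normal((3, 3))    # filter
dZ = rng.standard_normal((3, 3))   # upstream gradient, same shape as Z

dW = dW_by_accumulation(a, dZ, f=3)

# Central finite differences of the scalar loss sum(Z * dZ) with respect to W
eps = 1e-6
dW_numeric = np.zeros_like(W)
for p in range(3):
    for q in range(3):
        W_plus, W_minus = W.copy(), W.copy()
        W_plus[p, q] += eps
        W_minus[p, q] -= eps
        loss_plus = np.sum(conv_forward_simple(a, W_plus) * dZ)
        loss_minus = np.sum(conv_forward_simple(a, W_minus) * dZ)
        dW_numeric[p, q] = (loss_plus - loss_minus) / (2 * eps)

max_diff = np.max(np.abs(dW - dW_numeric))
print(max_diff)  # should be tiny if the accumulation rule is right
```

If the accumulated dW and the numerical estimate agree to within floating-point error, the "sum over all positions" rule is doing what it should.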

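A pooling layer has no parameters to update, but the gradient still has to be passed back through it, so each value of dA must be mapped back to the size of the pooling layer's input (the convolution output).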
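The pooling backward pass calls two helpers, `create_mask_from_window` and `distribute_value`, whose definitions are not shown in this post. The following is a sketch of what they plausibly look like, assuming the usual behaviour: the mask marks the maximum of a window, and the distributed value is spread evenly over an `(f, f)` window. Treat the bodies as assumptions, not as the original implementations.

```python
import numpy as np

def create_mask_from_window(x):
    """Boolean mask that is True where x equals its maximum (assumed behaviour)."""
    return x == np.max(x)

def distribute_value(dz, shape):
    """Spread the scalar dz evenly over a matrix of the given shape (assumed behaviour)."""
    n_H, n_W = shape
    return np.full(shape, dz / (n_H * n_W))

window = np.array([[1.0, 3.0],
                   [2.0, 0.5]])
mask = create_mask_from_window(window)   # True only at the position of 3.0
spread = distribute_value(2.0, (2, 2))   # every entry is 2.0 / 4 = 0.5
print(mask)
print(spread)
```

One consequence of this mask definition is that ties mark every maximal entry, so the gradient is then routed to all of them.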

def pool_backward(dA, cache, mode = "max"):

(A_prev, hparameters) = cache

stride = hparameters['stride']

f = hparameters['f']

m, n_H_prev, n_W_prev, n_C_prev = A_prev.shape

m, n_H, n_W, n_C = dA.shape

dA_prev = np.zeros_like(A_prev)

for i in range(m):

a_prev = A_prev[i]

for h in range(n_H):

for w in range(n_W):

for c in range(n_C):

vert_start = h * stride

vert_end = vert_start + f

horiz_start = w * stride

horiz_end = horiz_start + f

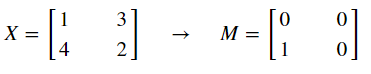

if mode == "max":

a_prev_slice = a_prev[vert_start:vert_end, horiz_start:horiz_end, c]

mask = create_mask_from_window(a_prev_slice)

dA_prev[i, vert_start: vert_end, horiz_start: horiz_end, c] += mask * dA[i, vert_start, horiz_start, c]

#这里是把每个值投射到[f, f]大小,小变大

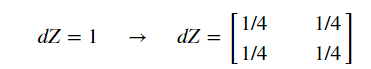

elif mode == "average":

da = dA[i, vert_start, horiz_start, c]

shape = (f, f)

dA_prev[i, vert_start: vert_end, horiz_start: horiz_end, c] += distribute_value(da, shape)

return dA_prev
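As a small worked example, the sketch below runs the max-pooling backward pass on one 4x4 channel with `f = 2` and `stride = 2`. The function `max_pool_backward_2d` is a hypothetical, stripped-down version written only for this illustration. Note that dA has the pooled shape `(n_H, n_W)`, so it is indexed by the output position `(h, w)`. With a stride greater than 1 the window corner `h * stride` can fall outside dA.

```python
import numpy as np

def max_pool_backward_2d(a_prev, dA, f, stride):
    """Route each dA[h, w] to the max of its window (one example, one channel)."""
    dA_prev = np.zeros_like(a_prev, dtype=float)
    n_H, n_W = dA.shape
    for h in range(n_H):
        for w in range(n_W):
            vert_start, horiz_start = h * stride, w * stride
            window = a_prev[vert_start:vert_start + f, horiz_start:horiz_start + f]
            mask = window == np.max(window)
            # Index dA by the output position (h, w), not by the window corner
            dA_prev[vert_start:vert_start + f, horiz_start:horiz_start + f] += mask * dA[h, w]
    return dA_prev

a_prev = np.array([[1.0, 2.0, 5.0, 6.0],
                   [3.0, 4.0, 7.0, 8.0],
                   [9.0, 10.0, 13.0, 14.0],
                   [11.0, 12.0, 15.0, 16.0]])
dA = np.array([[0.1, 0.2],
               [0.3, 0.4]])

dA_prev = max_pool_backward_2d(a_prev, dA, f=2, stride=2)
print(dA_prev)
# Each 2x2 window has its maximum in the bottom-right corner, so the result is:
# [[0.  0.  0.  0. ]
#  [0.  0.1 0.  0.2]
#  [0.  0.  0.  0. ]
#  [0.  0.3 0.  0.4]]
```

Because the windows do not overlap here, the entries of `dA_prev` sum to the same total as `dA`: max pooling only reroutes the gradient.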