Implementing a Neural Network with TensorFlow

# Note: this listing uses the TensorFlow 1.x graph API (tf.placeholder, tf.Session)
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt

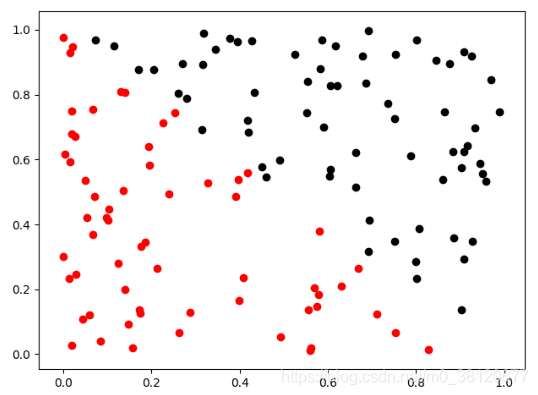

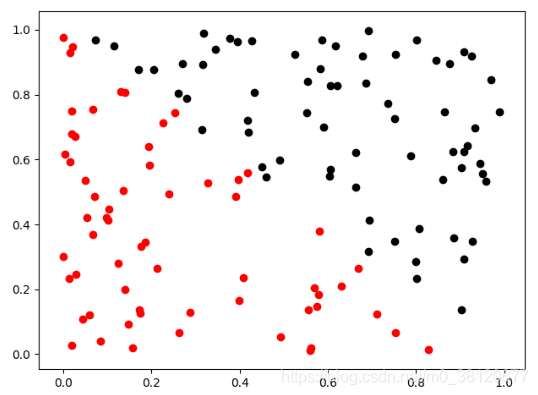

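# 1. Generate the training samples

dataset_size = 128
# 128 points drawn uniformly from the unit square, with a fixed seed for reproducibility
X = np.random.RandomState(1).uniform(0, 1, (dataset_size, 2))
# A sample is labelled 1 when x1 + x2 < 1, otherwise 0
Y = [[int(x1 + x2 < 1)] for (x1, x2) in X]

# Plot positive samples in red and negative samples in black
for i in range(len(X)):
    if Y[i][0] == 1:
        plt.scatter(X[i][0], X[i][1], c='r')
    else:
        plt.scatter(X[i][0], X[i][1], c='k')
plt.show()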
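
The labelling rule can also be checked without a plot. The sketch below is a standard-library illustration only: `make_dataset` is a hypothetical helper, and it uses Python's `random` module rather than NumPy's `RandomState`, so the points it draws differ from `X` above. Because the region `x1 + x2 < 1` covers half of the unit square, roughly half of the samples should be positive.

```python
import random


def make_dataset(n, seed=1):
    """Hypothetical helper: n uniform points in the unit square,
    labelled 1 when x1 + x2 < 1 and 0 otherwise."""
    rng = random.Random(seed)
    points = [(rng.random(), rng.random()) for _ in range(n)]
    labels = [[int(x1 + x2 < 1)] for (x1, x2) in points]
    return points, labels


points, labels = make_dataset(128)
positives = sum(label[0] for label in labels)
print('positive samples: %d of %d' % (positives, len(labels)))
```

The nested-list label format (`[[1], [0], ...]`) mirrors `Y` above, which gives each label the shape `(1,)` expected by a `(None, 1)` placeholder.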

# 2. Define the batch size used for training

batchsize = 8
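
The training loop later in this listing walks through the dataset in windows of `batchsize` samples and wraps around when it reaches the end. A minimal sketch of that index arithmetic, using a hypothetical helper `batch_bounds` that does not appear in the original code:

```python
def batch_bounds(step, batch_size, dataset_size):
    """Return the [start, end) slice used at a given training step."""
    start = (step * batch_size) % dataset_size
    end = min(start + batch_size, dataset_size)
    return start, end


# With 128 samples and batches of 8, the window returns to the start at step 16.
for step in (0, 1, 15, 16):
    print(step, batch_bounds(step, 8, 128))
```

Since 128 is a multiple of 8, every batch here is full. With a dataset size that is not a multiple of the batch size, the `min` call shortens whichever window would run past the end.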

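# 3. Define the network parameters

# Weights: 2 inputs -> 3 hidden units -> 1 output, drawn from a standard normal distribution
w1 = tf.Variable(tf.random_normal([2, 3], mean=0, stddev=1, seed=1))
w2 = tf.Variable(tf.random_normal([3, 1], mean=0, stddev=1, seed=1))

# Placeholders for a batch of inputs and labels; None lets the batch size vary
x = tf.placeholder(tf.float32, shape=(None, 2), name='x-input')
y_ = tf.placeholder(tf.float32, shape=(None, 1), name='y-input')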

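# Forward propagation

a = tf.matmul(x, w1)
# Apply a sigmoid so the output lies in (0, 1) and can be read as a probability
y = tf.sigmoid(tf.matmul(a, w2))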
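
To see how the shapes flow through the two matrix multiplications, here is a plain-Python sketch. The `matmul` helper and the small hand-picked weights are assumptions chosen for readability; they are not the random initial values used above, and this is not how TensorFlow computes the product internally.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a_ik * b_kj for a_ik, b_kj in zip(row, col))
             for col in zip(*b)]
            for row in a]


# Hypothetical weights with the same shapes as w1 and w2
demo_w1 = [[1.0, 0.0, -1.0],
           [0.0, 1.0, 1.0]]          # shape (2, 3)
demo_w2 = [[1.0], [1.0], [1.0]]      # shape (3, 1)
batch = [[0.2, 0.3], [0.9, 0.8]]     # two samples, shape (2, 2)

hidden = matmul(batch, demo_w1)      # shape (2, 3)
output = matmul(hidden, demo_w2)     # shape (2, 1)
print(len(hidden), len(hidden[0]))
print(len(output), len(output[0]))
```

A batch of `n` samples therefore goes from shape `(n, 2)` to `(n, 3)` to `(n, 1)`, which matches the `(None, 1)` shape of the label placeholder.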

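# Loss function and optimizer

# Binary cross entropy over both classes; values are clipped to [1e-10, 1] before tf.log
cross_entropy = -tf.reduce_mean(
    y_ * tf.log(tf.clip_by_value(y, 1e-10, 1.0))
    + (1 - y_) * tf.log(tf.clip_by_value(1 - y, 1e-10, 1.0)))
# Adam optimizer with a learning rate of 0.001
train_step = tf.train.AdamOptimizer(0.001).minimize(cross_entropy)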
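
The role of the clipping is easier to see on a few hand-picked numbers. The sketch below computes a two-term binary cross entropy in plain Python, with the same `1e-10` lower bound as `tf.clip_by_value` above. The helper names `clip` and `binary_cross_entropy` are assumptions for illustration, and the predictions are made-up values rather than outputs of the network.

```python
import math


def clip(value, low, high):
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def binary_cross_entropy(labels, predictions, eps=1e-10):
    """Mean of -[t * log(p) + (1 - t) * log(1 - p)] with clipped probabilities."""
    total = 0.0
    for target, pred in zip(labels, predictions):
        p = clip(pred, eps, 1.0)
        q = clip(1.0 - pred, eps, 1.0)
        total += target * math.log(p) + (1.0 - target) * math.log(q)
    return -total / len(labels)


confident = binary_cross_entropy([1.0, 0.0], [0.9, 0.1])  # mostly right
wrong = binary_cross_entropy([1.0, 0.0], [0.1, 0.9])      # mostly wrong
saturated = binary_cross_entropy([1.0], [0.0])            # log(0) avoided by the clip
print(confident < wrong, math.isfinite(saturated))
```

Without the clip, the last call would try to evaluate `log(0)`. With it, the loss stays large but finite, so a single saturated prediction cannot turn the average into infinity or NaN.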

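# Create a session to run the TensorFlow graph

with tf.Session() as sess:
    # Initialize all variables (tf.initialize_all_variables is deprecated)
    init_op = tf.global_variables_initializer()
    sess.run(init_op)

    STEPS = 5000
    print('Start training...')
    for i in range(STEPS):
        # Select the next batch, wrapping around at the end of the dataset
        start = (i * batchsize) % dataset_size
        end = min(start + batchsize, dataset_size)

        # Run one training step on the current batch
        sess.run(train_step, feed_dict={x: X[start:end], y_: Y[start:end]})

        # Every 1000 steps, compute and print the cross entropy on the full dataset
        if i % 1000 == 0:
            total_cross_entropy = sess.run(cross_entropy, feed_dict={x: X, y_: Y})
            print('After %d training steps, cross entropy on all data is %f' % (i, total_cross_entropy))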