Task

Given customer loan data, predict whether a customer will become overdue: 1 means yes, 0 means no.

Implementation

# -*- coding: utf-8 -*-

"""

Created on Thu Nov 15 13:02:11 2018

@author: keepi

"""

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

from sklearn.metrics import f1_score

import xgboost as xgb

import warnings

warnings.filterwarnings('ignore')

pd.set_option('display.max_rows',1000)

# Load the data

data = pd.read_csv('data.csv',encoding='gb18030')

print("data.shape:",data.shape)

# Data preprocessing

miss_rate = data.isnull().sum() / len(data)

print("Missing rate:",miss_rate.sort_values(ascending=False))

X_num = data.select_dtypes('number').copy()

X_num.fillna(X_num.mean(),inplace=True)

print("Shape of numeric features:",X_num.shape)

print(X_num.columns)

X_num.drop(['Unnamed: 0','status'],axis=1,inplace=True)

X_str = data.select_dtypes(exclude='number').copy()

X_str.fillna(0,inplace=True)

print("Non-numeric features:",X_str.columns)

print(X_str.head())

X_dummy = pd.get_dummies(X_str['reg_preference_for_trad'])

X = pd.concat([X_num,X_dummy],axis=1,sort=False)

y = data['status']

# Split into training and test sets

X_train,X_test,y_train,y_test = train_test_split(X,y,test_size=0.3,random_state=1117)

# Standardize features (scaler is fitted on the training set only)

ss = StandardScaler()

X_train_std = ss.fit_transform(X_train)

X_test_std = ss.transform(X_test)

print("f1_score:")

#xgboost

xgb_train = xgb.DMatrix(X_train_std,label = y_train)

xgb_test = xgb.DMatrix(X_test_std)

xgb_params = {

'learning_rate':0.1,

'n_estimators':1000,

'max_depth':6,

'min_child_weight':1,

'gamma':0,

'subsample':0.8,

'colsample_bytree':0.8,

'objective':'binary:logistic',

'nthread':4,

'scale_pos_weight':1,

'seed':1118

}

xgb_model = xgb.train(xgb_params, xgb_train, num_boost_round=xgb_params['n_estimators'])

test_xgb_pred_prob = xgb_model.predict(xgb_test)

test_xgb_pred = (test_xgb_pred_prob >= 0.5) + 0

print("xgboost:",f1_score(y_test,test_xgb_pred))

# xgboost, scikit-learn API

from xgboost.sklearn import XGBClassifier

xgbc = XGBClassifier(**xgb_params)

xgbc.fit(X_train_std,y_train)

test_xgbc_pred = xgbc.predict(X_test_std)

print('xgbc:',f1_score(y_test,test_xgbc_pred))

#lightgbm

import lightgbm as lgb

lgb_params = {

'learning_rate':0.1,

'n_estimators':50,

'max_depth':4,

'min_child_weight':1,

'min_split_gain':0,  # LightGBM's counterpart of xgboost's gamma; 'gamma' is not a LightGBM parameter

'subsample':0.8,

'colsample_bytree':0.8,

'objective':'binary',

'nthread':4,

'scale_pos_weight':1,

'seed':1117

}

dtrain = lgb.Dataset(X_train_std,y_train)

lgb_model = lgb.train(lgb_params, dtrain, num_boost_round=lgb_params['n_estimators'])

test_lgb_pred_prob = lgb_model.predict(X_test_std)

test_lgb_pred = (test_lgb_pred_prob >=0.5) + 0

print('lgb:',f1_score(y_test,test_lgb_pred))

# lightgbm, scikit-learn API

from lightgbm.sklearn import LGBMClassifier

lgb_model2 = LGBMClassifier(**lgb_params)

lgb_model2.fit(X_train_std,y_train)

test_lgbsk_pred = lgb_model2.predict(X_test_std)

print('lgb sklearn:',f1_score(y_test,test_lgbsk_pred))
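The script above fills missing values with the mean of the whole dataset before splitting, so the test rows influence the imputed values. A minimal sketch of one alternative follows, assuming a scikit-learn `Pipeline` is acceptable here; the toy frame and its column names are hypothetical stand-ins for the numeric columns of `data.csv`, not the real data.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical toy frame standing in for the numeric part of data.csv.
rng = np.random.default_rng(0)
toy = pd.DataFrame(rng.normal(size=(200, 3)), columns=["a", "b", "c"])
toy.iloc[::10, 0] = np.nan                      # inject some missing values
labels = (rng.random(200) < 0.3).astype(int)    # made-up 0/1 target

X_tr, X_te, y_tr, y_te = train_test_split(
    toy, labels, test_size=0.3, random_state=1117
)

# Imputation and scaling are chained so both are fitted on the same rows.
prep = Pipeline([
    ("impute", SimpleImputer(strategy="mean")),
    ("scale", StandardScaler()),
])
X_tr_std = prep.fit_transform(X_tr)   # means and variances come from training rows only
X_te_std = prep.transform(X_te)       # the same statistics are reused on the test rows
```

Tree-based models such as xgboost and lightgbm are generally insensitive to feature scaling, so the scaling step mainly matters if other model types are compared on the same features.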

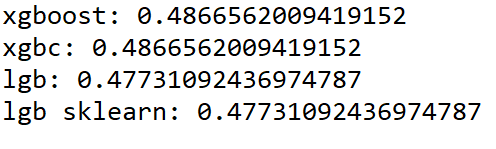

Results (f1_score):

Problems encountered

1. DeprecationWarning: The truth value of an empty array is ambiguous. Returning False, but in future this will result in an error. Use `array.size > 0` to check that an array is not empty.

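This is a numpy warning: truth-value checks on empty arrays have been deprecated. It is said to be fixed in newer versions, so here I chose to ignore the warning.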
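A blanket `warnings.filterwarnings('ignore')` hides every warning, including ones that might be useful. A narrower option, sketched here with the standard library only, is to silence just the `DeprecationWarning` category inside a limited block. The `noisy_step` function is a hypothetical stand-in for the library call that emits the warning.

```python
import warnings


def noisy_step():
    # Hypothetical stand-in for a library call that emits the deprecation warning.
    warnings.warn(
        "The truth value of an empty array is ambiguous.", DeprecationWarning
    )
    return "done"


# Silence only DeprecationWarning, and only inside this block.
with warnings.catch_warnings():
    warnings.simplefilter("ignore", category=DeprecationWarning)
    result = noisy_step()

# Record warnings instead of printing them, to confirm the call still emits one.
with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    noisy_step()

print(result, len(caught))
```

Once each `with` block exits, the previous warning filters are restored, so other warnings in the rest of the script stay visible.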

import warnings

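warnings.filterwarnings('ignore')

So far I have only learned how to call xgboost and lightgbm. I do not yet know how to tune their parameters, so I need to strengthen my understanding of the underlying principles.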
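One common starting point for parameter tuning is a cross-validated grid search scored with F1. The sketch below is only an illustration under stated assumptions: it uses synthetic data from `make_classification` instead of the loan data, and scikit-learn's `GradientBoostingClassifier` as a stand-in estimator so that it runs without extra packages. The grid values are arbitrary examples, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import GridSearchCV

# Synthetic, mildly imbalanced data standing in for the loan features.
X_demo, y_demo = make_classification(
    n_samples=300, n_features=10, weights=[0.75, 0.25], random_state=1117
)

# A deliberately tiny grid; real searches usually cover more values.
param_grid = {"n_estimators": [20, 50], "max_depth": [2, 3]}

search = GridSearchCV(
    estimator=GradientBoostingClassifier(learning_rate=0.1, random_state=1117),
    param_grid=param_grid,
    scoring="f1",   # same metric the script reports
    cv=3,           # 3-fold cross-validation on the training data
)
search.fit(X_demo, y_demo)

print("best params:", search.best_params_)
print("best cross-validated f1:", search.best_score_)
```

Because `XGBClassifier` and `LGBMClassifier` follow the scikit-learn estimator interface, either could in principle be passed as `estimator` in the same way, with the grid keys renamed to match their parameters.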

How to choose an evaluation metric is also an open question for me.
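To make the metric question concrete, the sketch below computes several common metrics on a small hand-made example. The labels and probabilities are invented for illustration and are not results from the loan data. It shows how accuracy, precision, recall, F1 and ROC AUC can disagree when positives are rare.

```python
import numpy as np
from sklearn.metrics import (
    accuracy_score,
    f1_score,
    precision_score,
    recall_score,
    roc_auc_score,
)

# Invented example: 8 negatives, 2 positives.
y_true = np.array([0] * 8 + [1] * 2)
y_prob = np.array([0.1, 0.2, 0.1, 0.3, 0.2, 0.1, 0.4, 0.3, 0.9, 0.45])
y_pred = (y_prob >= 0.5).astype(int)   # same 0.5 threshold as the script

scores = {
    "accuracy": accuracy_score(y_true, y_pred),
    "precision": precision_score(y_true, y_pred),
    "recall": recall_score(y_true, y_pred),
    "f1": f1_score(y_true, y_pred),
    "roc_auc": roc_auc_score(y_true, y_prob),   # uses probabilities, not thresholded labels
}
for name, value in scores.items():
    print(f"{name}: {value:.3f}")
```

In this example accuracy is 0.9 and ROC AUC is 1.0, yet recall is only 0.5 and F1 is about 0.667, because one of the two positives falls just below the 0.5 threshold. Which metric to prefer depends on the relative cost of missing an overdue customer versus flagging a reliable one.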