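Task 3

Build XGBoost and LightGBM models and use them for prediction.

Problems encountered

- I was not sure how to pass parameters to each model.

- I did not understand the difference between XGBoost's native interface and its sklearn interface.

- With the native interfaces of both LGB and XGB, predict returns probabilities rather than class labels (when a binary classification objective is used).

- Before training with a native interface, the data must be converted into the format that model requires, such as xgb.DMatrix for XGBoost.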

Code

Feature processing

import pickle
import time

import pandas as pd  # data analysis
from pandas import Series, DataFrame
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

print("Start......")
t_start = time.time()
path = "E:/mypython/moxingxuexi/data/"

"""=====================================================================================================================
1 Read the data
"""
print("Data preprocessing")
data = pd.read_csv(path + 'data.csv', encoding='gbk')

"""=====================================================================================================================

2 Data processing
"""
"""Use the number of missing values in each sample as a feature"""
temp1 = data.isnull()
num = (temp1 == True).astype(bool).sum(axis=1)
is_null = DataFrame(list(zip(num)))
is_null = is_null.rename(columns={0: "is_null_num"})
data = pd.merge(data, is_null, left_index=True, right_index=True, how='outer')
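The same per-row missing-value count can be written more directly. A minimal sketch on a toy frame follows; the column names and values are made up for illustration.

```python
import numpy as np
import pandas as pd

# Toy frame (hypothetical columns) with a few missing values
df = pd.DataFrame({
    "a": [1.0, np.nan, 3.0],
    "b": [np.nan, np.nan, 6.0],
    "c": ["x", "y", None],
})

# isnull() gives a boolean frame; summing across columns counts missing values per row
df["is_null_num"] = df.isnull().sum(axis=1)
print(df["is_null_num"].tolist())  # [1, 2, 1]
```

Assigning the resulting Series as a column keeps the rows aligned by index, so no merge is needed.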

"""

1.1 Fill missing values with 100
"""
data = DataFrame(data.fillna(100))

"""
1.2 Handling reg_preference_for_trad [mapping]
nan=0, overseas=1, first-tier=5, second-tier=2, third-tier=3, other=4
(the codes are taken from set iteration order, so they may differ between runs)
"""
n = set(data['reg_preference_for_trad'])
dic = {}
for i, j in enumerate(n):
    dic[j] = i
data['reg_preference_for_trad'] = data['reg_preference_for_trad'].map(dic)

"""
1.3 Handling source [mapping]
"""
n = set(data['source'])
dic = {}
for i, j in enumerate(n):
    dic[j] = i
data['source'] = data['source'].map(dic)

"""
1.4 Handling bank_card_no [mapping]
"""
n = set(data['bank_card_no'])
dic = {}
for i, j in enumerate(n):
    dic[j] = i
data['bank_card_no'] = data['bank_card_no'].map(dic)

"""
1.5 Handling id_name [mapping]
"""
n = set(data['id_name'])
dic = {}
for i, j in enumerate(n):
    dic[j] = i
data['id_name'] = data['id_name'].map(dic)
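Because the loops above enumerate a `set`, the integer assigned to each category depends on the set's iteration order, which for strings can differ from one Python run to the next. A hedged alternative that gives reproducible codes is sketched below; the toy values are assumptions, not categories from `data.csv`.

```python
import pandas as pd

# Toy column (hypothetical values) standing in for a categorical feature
s = pd.Series(["tier2", "tier1", "tier2", "other", "tier1"])

# pd.factorize assigns codes in order of first appearance,
# so the result is the same on every run
codes, uniques = pd.factorize(s)
print(codes.tolist())   # [0, 1, 0, 2, 1]
print(list(uniques))    # ['tier2', 'tier1', 'other']

# An explicit dict is another option when specific codes are wanted
mapping = {"tier1": 1, "tier2": 2, "other": 4}
mapped = s.map(mapping)
print(mapped.tolist())  # [2, 1, 2, 4, 1]
```

An explicit dictionary also documents the encoding, which helps when the saved features are reloaded by another script.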

"""

1.6 Handling the time columns [drop]
"""
data.drop(["latest_query_time"], axis=1, inplace=True)
data.drop(["loans_latest_time"], axis=1, inplace=True)
data.drop(['trade_no'], axis=1, inplace=True)
status = data.status

# """=====================================================================================================================
# 4 Normalize time to hours
# """
# data['time'] = pd.to_datetime(data['time'])
# time_now = data['time'].apply(lambda x:int((x-datetime(2018,11,14,0,0,0)).seconds/3600))
# data['time']= time_now

"""=====================================================================================================================

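3 Split into training and validation sets; test_size is the validation fraction
"""
print("Splitting into training and validation sets; test_size is the validation fraction")
train, test = train_test_split(data, test_size=0.3, random_state=666)

"""
Standardize the data
"""
# NOTE: the scaler is fitted on every column, including the status label,
# and train_fit / test_fit are not used later in this script.
standardScaler = StandardScaler()
train_fit = standardScaler.fit_transform(train)
test_fit = standardScaler.transform(test)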
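In the snippet above the scaler is fitted on every column, label included, and its output is not used afterwards. Tree-based models such as XGBoost and LightGBM usually do not need scaling, but if it is wanted, one possible arrangement is to scale only the feature columns and keep the result. The sketch below assumes the label column is named `status`, as in this post; the toy values are invented.

```python
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Toy data (hypothetical): two features and a binary label named "status"
df = pd.DataFrame({
    "f1": [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0],
    "f2": [10.0, 20.0, 10.0, 40.0, 50.0, 30.0, 70.0, 80.0],
    "status": [0, 1, 0, 1, 0, 1, 0, 1],
})
train, test = train_test_split(df, test_size=0.25, random_state=666)

feature_cols = [c for c in df.columns if c != "status"]
scaler = StandardScaler()

# Fit on the training features only, then apply the same transform to the test set
X_train = pd.DataFrame(scaler.fit_transform(train[feature_cols]),
                       columns=feature_cols, index=train.index)
X_test = pd.DataFrame(scaler.transform(test[feature_cols]),
                      columns=feature_cols, index=test.index)
y_train, y_test = train["status"], test["status"]
```

Wrapping the scaled arrays back into DataFrames keeps the column names, which the later model scripts can then read from the pickle as before.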

"""=====================================================================================================================

4 Separate the labels from the training data
"""
y_train = train.status
train = train.drop(["status"], axis=1)
y_test = test.status
test = test.drop(["status"], axis=1)

print("5 Save to local disk")
data = (train, test, y_train, y_test)
fp = open('E:/mypython/moxingxuexi/feature/V3.pkl', 'wb')
pickle.dump(data, fp)
fp.close()

XGB

#!/usr/bin/env python
# -*- coding:utf-8 -*-
import pickle

import numpy as np
import pandas as pd
from pandas import Series, DataFrame
from sklearn.metrics import accuracy_score, f1_score, r2_score
from sklearn.model_selection import train_test_split
from xgboost import XGBClassifier

"""
Load the feature data
"""
path = "E:/mypython/moxingxuexi/"
f = open(path + 'feature/V3.pkl', 'rb')
train, test, y_train, y_test = pickle.load(f)
f.close()

"""
Model training
"""
print("Training the xgb model")
xgb_model = XGBClassifier()
xgb_model.fit(train, y_train)

"""
Model prediction
"""
y_test_pre = xgb_model.predict(test)

"""
Model evaluation
"""
f1 = f1_score(y_test, y_test_pre, average='macro')
print("F1 score: {}".format(f1))

# r2_score computes R^2, not an F2 score, so the label says R2
r2 = r2_score(y_test, y_test_pre)
print("R2 score: {}".format(r2))

# XGBClassifier.score returns mean accuracy
score = xgb_model.score(test, y_test)
print("Validation set score: {}".format(score))

Results

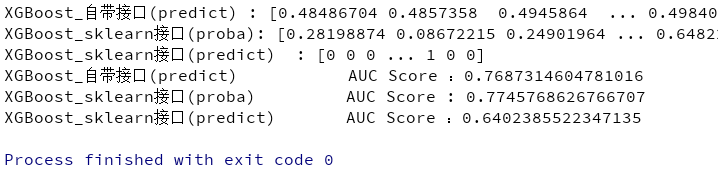

XGB1

This version uses both the native interface and the sklearn interface.

#!/usr/bin/env python
# -*- coding:utf-8 -*-
# @Time : 2018/11/18 15:07
# @Author : 刘
# @Site :
# @File : xgb1.py
# @Software: PyCharm
import pickle

import joblib  # sklearn.externals.joblib was removed in scikit-learn 0.23
import pandas as pd
import xgboost as xgb
from sklearn import metrics
from sklearn.model_selection import train_test_split
from xgboost.sklearn import XGBClassifier

print("Start")

"""
Load the features
"""
path = "E:/mypython/moxingxuexi/"
f = open(path + 'feature/V3.pkl', 'rb')
train, test, y_train, y_test = pickle.load(f)
f.close()

"""
Convert the data into the format XGB requires
"""
xgb_val = xgb.DMatrix(test, label=y_test)
xgb_train = xgb.DMatrix(train, label=y_train)
xgb_test = xgb.DMatrix(test)

"""

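Model parameter settings
"""
## XGB native interface
params = {
    'booster': 'gbtree',  # common boosters: tree model (gbtree) and linear model (gblinear)
    # The original used 'reg:linear', a regression objective. For this binary task,
    # 'binary:logistic' makes predict return probabilities.
    'objective': 'binary:logistic',
    'gamma': 0.1,  # controls post-pruning; larger is more conservative, typically 0.1 or 0.2
    'max_depth': 10,  # tree depth; the larger, the easier it is to overfit
    'lambda': 2,  # L2 regularization on weights; the larger, the less likely to overfit
    'subsample': 0.7,  # fraction of training rows sampled for each tree
    'colsample_bytree': 0.7,  # fraction of columns sampled when building each tree
    'min_child_weight': 3,
    # Default is 1. It is the minimum sum of h (the second derivative) required in a leaf.
    # For 0-1 classification with imbalanced classes, if h is around 0.01,
    # min_child_weight = 1 means a leaf must contain at least about 100 samples.
    # This parameter strongly affects the result: the smaller it is, the easier it is to overfit.
    'silent': 0,  # 1 suppresses run-time messages; newer XGBoost releases use 'verbosity' instead
    'eta': 0.001,  # works like a learning rate
    'seed': 1000,  # random seed
    # 'nthread': 7,  # number of CPU threads
    # 'eval_metric': 'auc'
}
plst = list(params.items())  # convert to a list
num_rounds = 50  # number of boosting iterations

# sklearn interface
## use XGBClassifier for classification
## use XGBRegressor for regression
clf = XGBClassifier(
    n_estimators=30,
    learning_rate=0.3,
    max_depth=3,
    min_child_weight=1,
    gamma=0.3,
    subsample=0.8,
    colsample_bytree=0.8,
    objective='binary:logistic',
    nthread=12,
    scale_pos_weight=1,
    reg_lambda=1,
    seed=27)

watchlist = [(xgb_train, 'train'), (xgb_val, 'val')]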

"""

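Model training
"""
# training model
# early_stopping_rounds: when the number of iterations is large, training stops if the
# evaluation metric on the last set in watchlist has not improved within that many rounds.
# Note: with num_rounds = 50 and early_stopping_rounds=100, early stopping can never trigger here.
"""Train with the XGBoost native interface"""
model_xgb = xgb.train(plst, xgb_train, num_rounds, evals=watchlist, early_stopping_rounds=100)
"""Train with the sklearn interface"""
model_xgb_sklearn = clf.fit(train, y_train)

"""
Model saving
"""
print('Saving models')
joblib.dump(model_xgb, path + "model/xgb.pkl")
joblib.dump(model_xgb_sklearn, path + "model/xgb_sklearn.pkl")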

"""

Model prediction
"""
"""[Predict with the XGB native interface]"""
y_xgb = model_xgb.predict(xgb_test)
"""[Predict with the xgb sklearn interface]"""
y_sklearn_pre = model_xgb_sklearn.predict(test)
y_sklearn_proba = model_xgb_sklearn.predict_proba(test)[:, 1]

"""5 Model scoring"""
print("XGBoost native interface (predict) : %s" % y_xgb)
print("XGBoost sklearn interface (proba): %s" % y_sklearn_proba)
print("XGBoost sklearn interface (predict) : %s" % y_sklearn_pre)

# print("XGBoost native interface (predict) AUC Score : %f" % metrics.roc_auc_score(y_test, y_xgb))
# print("XGBoost sklearn interface (proba) AUC Score : %f" % metrics.roc_auc_score(y_test, y_sklearn_proba))
# print("XGBoost sklearn interface (predict) AUC Score : %f" % metrics.roc_auc_score(y_test, y_sklearn_pre))

"""[roc_auc_score]"""
# Computes AUC directly from the true values (which must be binary) and the predictions
# (either 0/1 labels or probabilities); the intermediate ROC curve is not returned.
# f1 = f1_score(y_test, predictions, average='macro')
print("XGBoost native interface (predict) AUC Score :{}".format(metrics.roc_auc_score(y_test, y_xgb)))
print("XGBoost sklearn interface (proba) AUC Score : {}".format(metrics.roc_auc_score(y_test, y_sklearn_proba)))
print("XGBoost sklearn interface (predict) AUC Score :{}".format(metrics.roc_auc_score(y_test, y_sklearn_pre)))

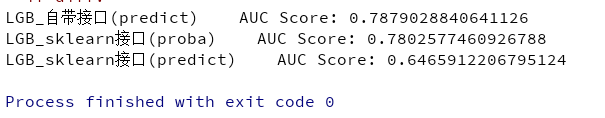

Score
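`roc_auc_score` accepts either probabilities or hard 0/1 labels, but the two inputs generally give different values, because thresholding discards the ranking among the scores. A small sketch with made-up numbers (the arrays and the 0.5 threshold are assumptions for illustration):

```python
import numpy as np
from sklearn.metrics import roc_auc_score

# Hypothetical ground truth and predicted probabilities
y_true = np.array([0, 0, 1, 1, 0, 1])
y_proba = np.array([0.1, 0.6, 0.55, 0.8, 0.3, 0.9])

# AUC on probabilities uses the full ranking of the scores
auc_proba = roc_auc_score(y_true, y_proba)

# AUC on thresholded labels collapses the scores to two values,
# so ties between positives and negatives count as half
y_label = (y_proba > 0.5).astype(int)
auc_label = roc_auc_score(y_true, y_label)

print(auc_proba, auc_label)  # about 0.889 and 0.833
```

For comparing models by AUC, passing the probabilities is normally the more informative choice.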

LGB

#!/usr/bin/env python
# -*- coding:utf-8 -*-
# @Time : 2018/11/17 23:03
# @Author : 刘
# @Site :
# @File : lig.py
# @Software: PyCharm
import pickle

import pandas as pd
from lightgbm import LGBMClassifier
from pandas import Series, DataFrame
from sklearn.metrics import f1_score, r2_score
from sklearn.model_selection import train_test_split

"""
Load the features
"""
path = "E:/mypython/moxingxuexi/"
f = open(path + 'feature/V3.pkl', 'rb')
train, test, y_train, y_test = pickle.load(f)
f.close()

"""
Model training
"""
print("Training the lgb model")
lgb_model = LGBMClassifier()
lgb_model.fit(train, y_train)

"""
Model prediction
"""
y_test_pre = lgb_model.predict(test)

"""
Model evaluation
"""
f1 = f1_score(y_test, y_test_pre, average='macro')
print("F1 score: {}".format(f1))

# r2_score computes R^2, not an F2 score, so the label says R2
r2 = r2_score(y_test, y_test_pre)
print("R2 score: {}".format(r2))

# LGBMClassifier.score returns mean accuracy
score = lgb_model.score(test, y_test)
print("Validation set score: {}".format(score))

Score
