1. My Little Bookroom (我的小书屋)

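This crawler collects e-book download links from http://mebook.cc/. (It is only a small practice exercise and will be removed on request if it infringes.)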

Pages are fetched with urllib.request and parsed with BeautifulSoup.

2. Crawler source code

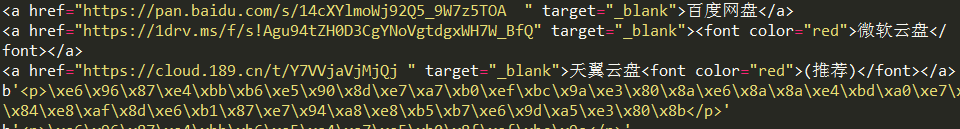

```python
#!/usr/bin/python
# -*- coding: UTF-8 -*-
import re
import urllib.request
from bs4 import BeautifulSoup

# Programming books category
url = "http://mebook.cc/category/gjs/bckf/"


# Get the link to each book on a listing page
def getbook(url):
    html_doc = urllib.request.urlopen(url).read()
    soup = BeautifulSoup(html_doc, "html.parser", from_encoding="GB18030")
    links = soup.select('#primary .img a')
    for link in links:
        # Named "line" rather than "str" so the builtin str() is not shadowed
        line = link['href'] + link['title'] + '\n'
        print(line)
        bookfile(line)


# Append each book link to a txt file (to be processed later)
def bookfile(text):
    with open("file.txt", "a") as fo:
        fo.write(text)


# Get all book links
def test():
    getbook(url)
    for x in range(2, 18):
        # Use a separate name: assigning to "url" here would make it local
        # and the getbook(url) call above would raise UnboundLocalError
        page_url = "http://mebook.cc/category/gjs/bckf/page/" + str(x)
        try:
            getbook(page_url)
            bookfile("第" + str(x) + "页\n")  # "page x" marker
        except UnicodeEncodeError:
            continue


# Get the download links for a single book
def getDownload(book_id):
    url = "http://mebook.cc/download.php?id=" + book_id
    html_doc = urllib.request.urlopen(url).read()
    soup = BeautifulSoup(html_doc, "html.parser", from_encoding="GB18030")
    links = soup.select('.list a')
    for link in links:
        print(link)
    pwds = soup.select('.desc p')
    for pwd in pwds:
        print(pwd.encode(encoding='utf-8', errors='strict'))


# test
getDownload(str(25723))
```

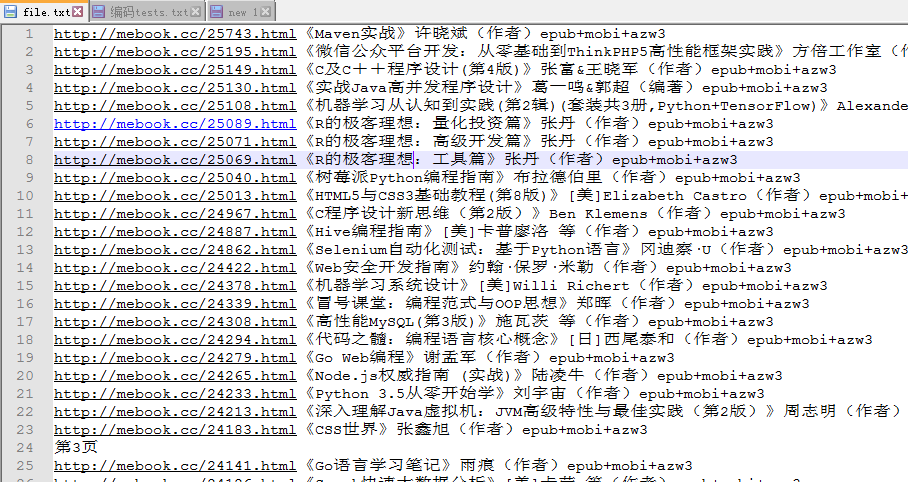
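The links saved to file.txt are marked as still to be processed, while getDownload expects a numeric id. One possible way to bridge the two is sketched below. It assumes each saved line starts with a book URL of the form `http://mebook.cc/<digits>.html`, which the source does not confirm, and the helper names `extract_book_id` and `read_book_ids` are hypothetical.

```python
import re

# Assumed URL shape (not confirmed by the source): http://mebook.cc/<digits>.html
BOOK_ID_PATTERN = re.compile(r"mebook\.cc/(\d+)\.html")


def extract_book_id(line):
    """Return the numeric id found in a saved line as a string, or None."""
    match = BOOK_ID_PATTERN.search(line)
    return match.group(1) if match else None


def read_book_ids(lines):
    """Collect ids from an iterable of saved lines, skipping lines without one."""
    ids = []
    for line in lines:
        book_id = extract_book_id(line)
        if book_id is not None:
            ids.append(book_id)
    return ids
```

Under these assumptions, each id returned by `read_book_ids(open("file.txt"))` could be passed to getDownload. Page-marker lines contain no URL and are skipped.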

3. Crawl results

4. Problems found

4.1 GBK encoding problem when crawling site content with Python 3

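Neither decode('GBK') nor decode('GB18030') solved the problem. Note that in Python 3 strings are Unicode (the ASCII default applies to Python 2), so the UnicodeEncodeError caught in the code above most likely comes from encoding on output, for example when printing to a console or writing to a file whose default encoding is GBK.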

One option is to handle this at the string level. Reference: https://www.yiibai.com/python/python_strings.html
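As a minimal sketch of such string handling, assuming the failure is a UnicodeEncodeError raised when text containing a character outside GBK (the non-breaking space `\xa0` is a common one) is printed or written with a GBK default encoding: the helper names `to_encodable` and `append_utf8` below are hypothetical, and this is only one possible workaround, not a confirmed fix for this site.

```python
import os
import tempfile


def to_encodable(text, encoding="gbk"):
    """Replace characters the target encoding cannot represent with '?'."""
    return text.encode(encoding, errors="replace").decode(encoding)


def append_utf8(path, text):
    """Append text to a file with an explicit UTF-8 encoding,
    so the platform default (for example GBK) is not used."""
    with open(path, "a", encoding="utf-8") as fo:
        fo.write(text)


if __name__ == "__main__":
    sample = "Python\xa0编程"  # '\xa0' cannot be encoded as GBK

    # Safe to print on a GBK console: the offending character becomes '?'
    print(to_encodable(sample))

    # Writing with an explicit encoding keeps the original text intact
    with tempfile.TemporaryDirectory() as tmp_dir:
        target = os.path.join(tmp_dir, "file.txt")
        append_utf8(target, sample + "\n")
```

Replacing characters loses information, so it suits console output only. For the saved file, an explicit `encoding="utf-8"` keeps the text unchanged.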