First, import TensorFlow and the other tools, and set the figure size and font for plotting.

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

plt.rcParams['figure.figsize'] = (10.0, 8.0)

plt.rcParams['font.sans-serif'] = 'NSimSun,Times New Roman'

Next, generate the data used to fit the linear regression model.

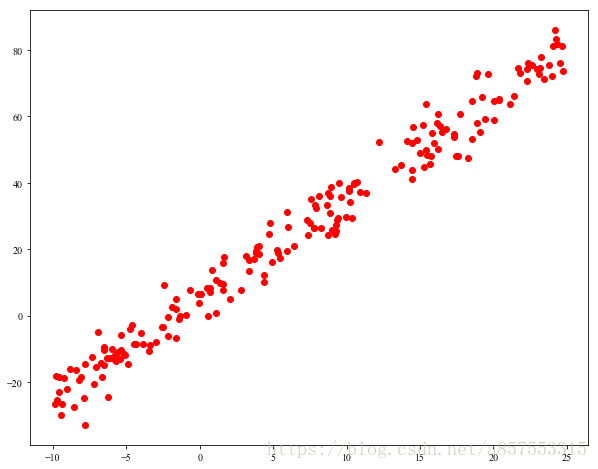

# Randomly generate 200 points scattered around the line y = 3x + 5

num_points = 200

vectors_set = []

for i in range(num_points):

    x1 = np.random.uniform(-10, 25)

    y1 = x1 * 3 + 5 + np.random.normal(0.0, 5)

    vectors_set.append([x1, y1])

# Split the points into x and y samples

x_data = [v[0] for v in vectors_set]

y_data = [v[1] for v in vectors_set]

plt.scatter(x_data,y_data,c='r')

plt.show()

The generated data and the resulting scatter plot are shown in image 1 above.
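The point-by-point loop above can also be written in vectorized form. The following is a minimal sketch rather than the original author's code: it assumes NumPy's `default_rng` generator and an arbitrary seed of 42 (both are additions, not part of the original) so that the same sample is drawn on every run.

```python
import numpy as np

# Assumption: a fixed seed (42 is arbitrary) so the sample is reproducible.
rng = np.random.default_rng(42)

num_points = 200
# Draw all x values at once, uniformly from [-10, 25).
x_data = rng.uniform(-10, 25, size=num_points)
# y = 3x + 5 plus Gaussian noise with mean 0 and standard deviation 5.
y_data = 3 * x_data + 5 + rng.normal(0.0, 5.0, size=num_points)

print(x_data.shape, y_data.shape)
```

Here `x_data` and `y_data` are NumPy arrays rather than Python lists, but they can be passed to `plt.scatter` in the same way.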

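Set up the model's initial parameters, write the training code for the linear regression model, and train it with the gradient descent algorithm.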

W = tf.Variable(tf.random_uniform([1], -1.0, 1.0), name='W')

b = tf.Variable(tf.zeros([1]), name='b')

y = W * x_data + b

loss = tf.reduce_mean(tf.square(y - y_data), name='loss')

optimizer = tf.train.GradientDescentOptimizer(0.005)

train = optimizer.minimize(loss, name='train')

sess = tf.Session()

init = tf.global_variables_initializer()

sess.run(init)

# Print the initial values of W and b

print ("W =", sess.run(W), "\tb =", sess.run(b), "\tloss =", sess.run(loss))

# Run 1000 training steps

for step in range(1000):

    sess.run(train)

    # Print the current W, b and loss every 50 steps

    if step % 50 == 0:

        print ("W =", sess.run(W), "\tb =", sess.run(b), "\tloss =", sess.run(loss))

print ("Final result W =", sess.run(W), "\tb =", sess.run(b), "\tloss =", sess.run(loss))

The parameters printed during training are as follows:

W = [ 0.26262569] b = [ 0.] loss = 1366.89

W = [ 4.73117256] b = [ 0.23248257] loss = 386.389

W = [ 3.15473843] b = [ 1.66317821] loss = 32.05

W = [ 3.10857034] b = [ 2.7120173] loss = 27.6106

W = [ 3.07622719] b = [ 3.44677091] loss = 25.432

W = [ 3.05356979] b = [ 3.96149445] loss = 24.3628

W = [ 3.03769755] b = [ 4.32207775] loss = 23.8381

W = [ 3.02657843] b = [ 4.57468128] loss = 23.5806

W = [ 3.01878905] b = [ 4.7516408] loss = 23.4542

W = [ 3.01333213] b = [ 4.87560892] loss = 23.3922

W = [ 3.00950956] b = [ 4.96245146] loss = 23.3617

W = [ 3.00683141] b = [ 5.02328825] loss = 23.3468

W = [ 3.00495553] b = [ 5.06590843] loss = 23.3395

W = [ 3.00364113] b = [ 5.09576511] loss = 23.3359

W = [ 3.00272036] b = [ 5.11667919] loss = 23.3341

W = [ 3.00207567] b = [ 5.13133097] loss = 23.3332

W = [ 3.00162363] b = [ 5.14159679] loss = 23.3328

W = [ 3.00130725] b = [ 5.14878702] loss = 23.3326

W = [ 3.00108552] b = [ 5.15382385] loss = 23.3325

W = [ 3.00093007] b = [ 5.15735531] loss = 23.3324

W = [ 3.00082135] b = [ 5.1598258] loss = 23.3324

Final result W = [ 3.00074625] b = [ 5.16152811] loss = 23.3324
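To make the update rule behind the training loop explicit, here is a minimal NumPy sketch of plain gradient descent on the same mean-squared-error loss. It is an illustration, not the optimizer's actual implementation. It assumes evenly spaced x values, a fixed seed, and a starting point of W = 0 and b = 0, none of which come from the original. Because the noise has standard deviation 5, the loss should not be expected to fall much below about 5² = 25, which is consistent with the plateau near 23.3 in the log.

```python
import numpy as np

# Assumption: evenly spaced x values and a fixed seed, purely for reproducibility.
rng = np.random.default_rng(0)
x = np.linspace(-10, 25, 200)
y = 3 * x + 5 + rng.normal(0.0, 5.0, size=x.size)

w, b = 0.0, 0.0          # starting point (the original draws W at random)
learning_rate = 0.005    # same step size as in the TensorFlow code

for step in range(1000):
    error = w * x + b - y            # residuals of the current line
    grad_w = 2 * np.mean(error * x)  # d(loss)/dW for loss = mean(error**2)
    grad_b = 2 * np.mean(error)      # d(loss)/db
    w -= learning_rate * grad_w      # move against the gradient
    b -= learning_rate * grad_b

loss = np.mean((w * x + b - y) ** 2)
print("W =", w, "\tb =", b, "\tloss =", loss)
```

The slope settles within a few steps, while the intercept approaches its final value much more slowly. The same pattern is visible in the training log.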

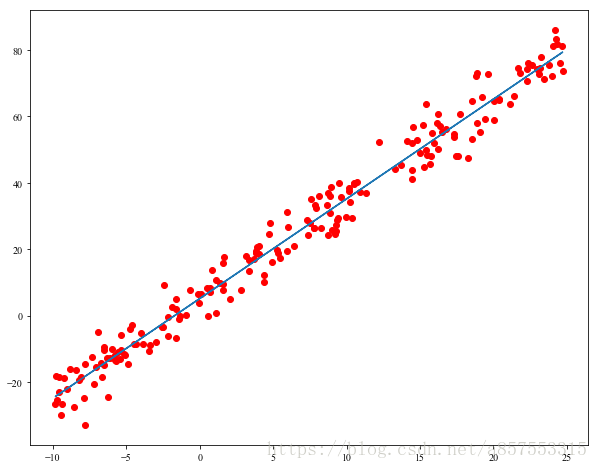

plt.scatter(x_data,y_data,c='r')

plt.plot(x_data,sess.run(W)*x_data+sess.run(b))

plt.show()
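As a cross-check on the trained parameters, the least-squares line can also be computed directly, without iteration. The sketch below uses `np.polyfit` with degree 1. It regenerates the data with an arbitrary fixed seed (an assumption, not part of the original), so its numbers will differ slightly from the training log.

```python
import numpy as np

# Assumption: data regenerated here with an arbitrary fixed seed, so the
# numbers will differ slightly from the training log.
rng = np.random.default_rng(1)
x = rng.uniform(-10, 25, size=200)
y = 3 * x + 5 + rng.normal(0.0, 5.0, size=200)

# Degree-1 polynomial fit: returns [slope, intercept] of the least-squares line.
slope, intercept = np.polyfit(x, y, 1)
mse = np.mean((slope * x + intercept - y) ** 2)

print("slope =", slope, "\tintercept =", intercept, "\tmse =", mse)
```

If gradient descent has converged, its W and b should be close to the slope and intercept obtained this way on the same data.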