This method works because it simplifies the neural network's architecture as much as possible, which reduces the chance of overfitting. (Editor's note: regularization also simplifies the network, but it does so according to a fixed rule, whereas dropout drops nodes at random.) Chapter 3 explains this aspect. Furthermore, using massive training data also helps a great deal, because it reduces the potential bias caused by particular data.
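To make the dropout idea concrete, here is a minimal Python sketch of dropout applied to one hidden layer's outputs. It assumes the common "inverted dropout" variant: each node is dropped independently with probability `ratio`, and the surviving outputs are scaled by 1/(1 - ratio) so their expected value is unchanged. The names `dropout_mask` and `apply_dropout` are hypothetical and are not taken from the book's MATLAB code.

```python
import random

def dropout_mask(n, ratio, rng=random):
    """Mask for n nodes: each node is dropped (0.0) with probability `ratio`;
    survivors get the scale factor 1/(1 - ratio) (inverted dropout, assumed)."""
    if not 0.0 <= ratio < 1.0:
        raise ValueError("ratio must be in [0, 1)")
    scale = 1.0 / (1.0 - ratio)
    return [0.0 if rng.random() < ratio else scale for _ in range(n)]

def apply_dropout(outputs, ratio, rng=random):
    """Multiply hidden-layer outputs element-wise by a freshly drawn mask."""
    mask = dropout_mask(len(outputs), ratio, rng)
    return [y * m for y, m in zip(outputs, mask)]

# Usage: randomly drop about 20% of a 20-node hidden layer during training.
rng = random.Random(0)
hidden = [1.0] * 20
print(apply_dropout(hidden, 0.2, rng))
```

A fresh mask is drawn on every training pass; at inference time dropout is switched off, which here corresponds to `ratio = 0`.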

Computational Load

The last challenge is the time required to complete the training. The number of weights grows rapidly as hidden layers are added, so more training data is required.
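The growth is easy to see by counting connections. The sketch below is an illustration rather than the book's code: it assumes fully connected layers, ignores bias terms, and uses a hypothetical helper `count_weights`. A dense forward pass performs one multiply-add per weight for each training sample, so the weight count is also a rough measure of the computation per sample.

```python
def count_weights(layer_sizes):
    """Connection weights in a fully connected network (biases ignored)."""
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))

# The network used later in this section: 25 inputs, three 20-node hidden layers, 5 outputs.
print(count_weights([25, 20, 20, 20, 5]))  # 1400

# Adding 20-node hidden layers one at a time.
for depth in range(1, 5):
    sizes = [25] + [20] * depth + [5]
    print(depth, count_weights(sizes))
```

With 20-node hidden layers, each extra layer adds 20 x 20 = 400 weights (600, 1000, 1400 and 1800 weights for one to four hidden layers). Because a layer's cost is the product of the adjacent layer widths, wider hidden layers make the growth steeper.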

This ultimately means more calculations. The more computations the neural network performs, the longer the training takes. This problem is a serious concern in the practical development of neural networks.

If a deep neural network takes a month to train, it can be modified only about 12 times a year. Useful research is hardly possible under these conditions.

This trouble has been relieved to a considerable extent by the introduction of high-performance hardware, such as GPUs, and of algorithms that speed up training, such as batch normalization.
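As an illustration of the algorithmic remedy, the following is a minimal training-time sketch of batch normalization for a single node: the node's outputs over a mini-batch are shifted to zero mean and scaled to unit variance, then rescaled by `gamma` and shifted by `beta`. In a real network `gamma` and `beta` are learned and running statistics are kept for inference; both are omitted here, and the function name and the `eps` value are assumptions.

```python
import math

def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one node's outputs across a mini-batch, then scale and shift.

    batch: the same node's output for each sample in the mini-batch.
    gamma, beta: scale and shift (learnable in practice, fixed here).
    eps: small constant that avoids division by zero.
    """
    n = len(batch)
    mean = sum(batch) / n
    var = sum((x - mean) ** 2 for x in batch) / n
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]

out = batch_norm([1.0, 2.0, 3.0, 4.0])
print([round(v, 3) for v in out])
```

Keeping each layer's inputs in a stable range like this generally tolerates larger learning rates, which is where the speed-up in training comes from.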

The modest improvements introduced in this section are what made Deep Learning the hero of Machine Learning.

The three primary research areas of Machine Learning are usually said to be image recognition, speech recognition, and natural language processing. Each of these areas had long been studied separately, with techniques suited specifically to it. Deep Learning, however, now outperforms the established techniques in all three areas.

Example: ReLU and Dropout

This section implements the ReLU activation function and dropout, two representative techniques of Deep Learning. It reuses the digit classification example from Chapter 4.
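For reference, ReLU outputs its input when the input is positive and zero otherwise, so its derivative is 1 or 0. A minimal Python sketch follows; treating the derivative at exactly 0 as 0 is a convention assumed here, not something the text specifies.

```python
def relu(x):
    """Rectified linear unit: passes positive inputs, outputs 0 otherwise."""
    return max(0.0, x)

def relu_derivative(x):
    """Derivative used in back-propagation (taken as 0 at x == 0 by convention)."""
    return 1.0 if x > 0 else 0.0

print([relu(v) for v in [-2.0, 0.0, 3.5]])  # [0.0, 0.0, 3.5]
```

Because the derivative is 1 for every positive input, the error signal does not shrink as it passes back through active ReLU nodes, which is why ReLU helps against the vanishing gradient in deep networks.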

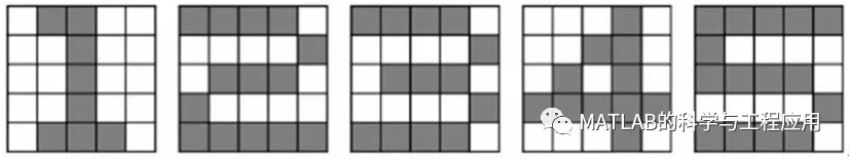

The training data is the same set of five-by-five square images.

Figure 5-5. Training data in five-by-five square images

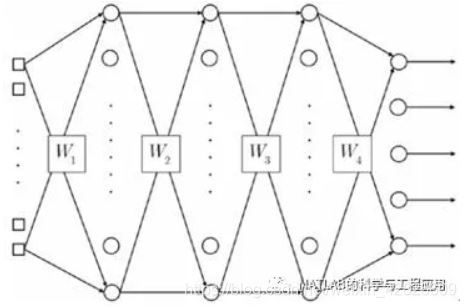

Consider the deep neural network with three hidden layers shown in Figure 5-6.

Figure 5-6. The deep neural network with three hidden layers

Each hidden layer contains 20 nodes. The network has 25 input nodes for the matrix input and five output nodes for the five classes. The output nodes employ the softmax activation function.
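Putting the pieces together, the sketch below runs one forward pass through the 25-20-20-20-5 network of Figure 5-6: the 5 x 5 image is flattened into 25 inputs, each hidden layer applies ReLU (and, during training, dropout), and the output layer applies softmax. It is a plain-Python illustration under stated assumptions, not the book's MATLAB implementation: biases are omitted, weights are drawn uniformly from [-1, 1], the dropout ratio of 0.2 is arbitrary, and the input image is a hypothetical pattern.

```python
import math
import random

def relu(v):
    """Element-wise ReLU."""
    return [max(0.0, x) for x in v]

def softmax(v):
    """Softmax; subtracting the max keeps exp() from overflowing."""
    m = max(v)
    exps = [math.exp(x - m) for x in v]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(W, x):
    """Multiply weight matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def init_weights(n_out, n_in, rng):
    """Uniform weights in [-1, 1] (an illustrative assumption)."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]

def dropout(v, ratio, rng):
    """Inverted dropout: zero each node with probability `ratio`, rescale survivors."""
    scale = 1.0 / (1.0 - ratio)
    return [0.0 if rng.random() < ratio else x * scale for x in v]

def forward(weights, image, ratio=0.0, rng=random):
    """ReLU hidden layers (plus dropout if ratio > 0), softmax output layer."""
    x = [float(p) for row in image for p in row]  # flatten 5x5 -> 25 inputs
    *hidden, output = weights
    for W in hidden:
        x = relu(matvec(W, x))
        if ratio > 0.0:
            x = dropout(x, ratio, rng)
    return softmax(matvec(output, x))

rng = random.Random(1)
sizes = [25, 20, 20, 20, 5]  # input, three hidden layers, output
weights = [init_weights(n_out, n_in, rng) for n_in, n_out in zip(sizes, sizes[1:])]

# Hypothetical 5x5 pattern resembling the digit 1 (1 = filled pixel).
image = [[0, 1, 1, 0, 0],
         [0, 0, 1, 0, 0],
         [0, 0, 1, 0, 0],
         [0, 0, 1, 0, 0],
         [0, 1, 1, 1, 0]]

probs = forward(weights, image, ratio=0.2, rng=rng)  # training-style pass
print([round(p, 3) for p in probs])  # five class probabilities
```

Calling `forward` with `ratio=0.0` gives the inference-time pass. Training would additionally back-propagate the output error through the same layers, which is not shown here.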

Source: Phil Kim, MATLAB Deep Learning.
