Therefore, we have 8,000 MNIST images for training and 2,000 images for validating the performance of the neural network.
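
The split above can be sketched as follows. This is a minimal illustration, assuming a 10,000-image subset addressed by index; the function name `split_indices`, the shuffling step, and the seed are assumptions for illustration, not something the source specifies.

```python
import random


def split_indices(n_total=10_000, n_train=8_000, seed=0):
    """Return (train_idx, val_idx) as two disjoint lists of image indices."""
    idx = list(range(n_total))
    # Shuffling is an assumption here; a fixed seed keeps the split reproducible.
    random.Random(seed).shuffle(idx)
    return idx[:n_train], idx[n_train:]


train_idx, val_idx = split_indices()
print(len(train_idx), len(val_idx))  # 8000 2000
```

Keeping the validation indices disjoint from the training indices is what makes the validation accuracy a fair estimate of performance on unseen images.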

As you may know well by now, the MNIST problem is a multiclass classification task: each 28×28 pixel image must be assigned to one of the ten digit classes, 0 to 9.

Let's consider a ConvNet that recognizes the MNIST images.

As the input is a 28×28 pixel black-and-white image, we need 784 (=28×28) input nodes.

The feature extraction network contains a single convolution layer with 20 convolution filters, each 9×9.
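
To see what one of these filters produces, here is a rough sketch in plain Python. It assumes no padding and a stride of 1, which the text does not state, and the helper name `conv2d_valid` and the dummy image and filter values are hypothetical.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` with no padding and stride 1.

    This computes cross-correlation (the kernel is not flipped).
    """
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = ih - kh + 1, iw - kw + 1  # output size under the no-padding assumption
    out = [[0.0] * ow for _ in range(oh)]
    for r in range(oh):
        for c in range(ow):
            out[r][c] = sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
    return out


image = [[1.0] * 28 for _ in range(28)]      # dummy all-ones 28x28 image
kernel = [[1.0 / 81] * 9 for _ in range(9)]  # dummy 9x9 averaging filter
feature_map = conv2d_valid(image, kernel)
print(len(feature_map), len(feature_map[0]))  # 20 20
```

Under these assumptions each filter yields a 20×20 feature map (28 - 9 + 1 = 20), so the 20 filters together yield 20 such maps.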

The output from the convolution layer passes through the ReLU function and then through the pooling layer.
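
As a small illustration, ReLU simply replaces every negative value in a feature map with zero. The function name `relu` and the nested-list representation are choices made for this sketch.

```python
def relu(feature_map):
    """Apply max(0, x) to every element of a 2-D feature map."""
    return [[max(0.0, v) for v in row] for row in feature_map]


print(relu([[-1.5, 0.0], [2.0, -0.1]]))  # [[0.0, 0.0], [2.0, 0.0]]
```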

The pooling layer applies mean pooling over 2×2 submatrices.
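
Mean pooling can be sketched as below, assuming the 2×2 windows do not overlap (a stride of 2), which is the usual reading but is not spelled out in the text. The name `mean_pool_2x2` is hypothetical.

```python
def mean_pool_2x2(feature_map):
    """Average each non-overlapping 2x2 block, halving height and width."""
    h, w = len(feature_map), len(feature_map[0])
    return [
        [
            (feature_map[r][c] + feature_map[r][c + 1]
             + feature_map[r + 1][c] + feature_map[r + 1][c + 1]) / 4.0
            for c in range(0, w - 1, 2)
        ]
        for r in range(0, h - 1, 2)
    ]


pooled = mean_pool_2x2([
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
])
print(pooled)  # [[3.5, 5.5], [11.5, 13.5]]
```

Applied to a 20×20 feature map, this would produce a 10×10 map.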

The classification neural network consists of a single hidden layer and an output layer.

This hidden layer has 100 nodes that use the ReLU activation function.

Since we classify the input images into 10 classes, the output layer is constructed with 10 nodes.

We use the softmax activation function for the output nodes.
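
For reference, softmax turns the 10 output scores into probabilities that sum to 1. The sketch below subtracts the maximum score before exponentiating, a common numerical-stability step that is an addition here rather than something the text describes; the example scores are made up.

```python
import math


def softmax(scores):
    """Convert a list of raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for the 10 digit classes; class 9 has the largest score.
probs = softmax([0.0] * 9 + [2.0])
predicted_digit = probs.index(max(probs))
print(predicted_digit)  # 9
```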

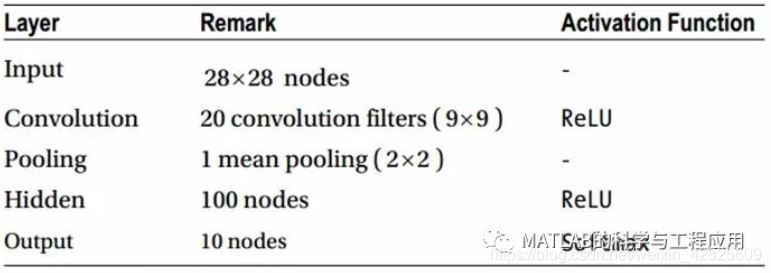

The following table summarizes the example neural network.

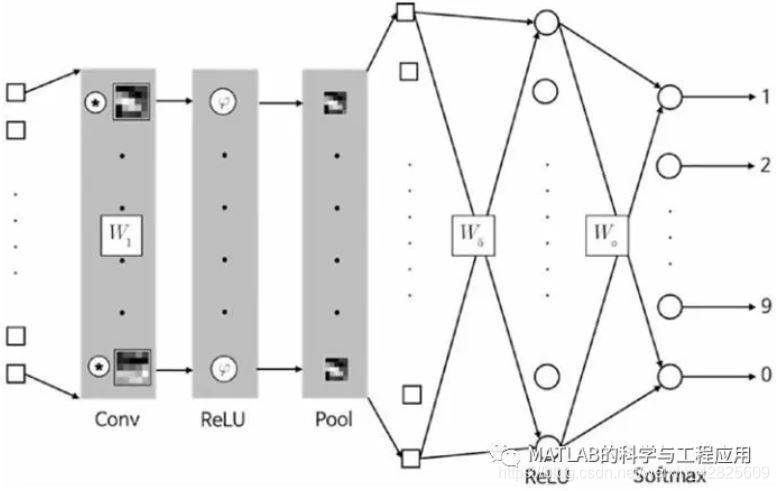

Figure 6-18 shows the architecture of this neural network.

Figure 6-18. The architecture of this neural network

Although it has many layers, only three of them contain weight matrices that require training: W1, W5, and Wo, shown in the square blocks.

W5 and Wo contain the connection weights of the classification neural network, while W1 holds the weights of the convolution layer, which the convolution filters use for image processing.
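
The sizes of these three weight arrays follow from the layer sizes given earlier. The sketch below derives them under three assumptions that are not stated in this passage: the convolution uses no padding, the 2×2 pooling windows do not overlap, and there are no bias terms.

```python
import math

n_filters = 20
conv_side = 28 - 9 + 1                         # 20: side of each feature map (no padding)
pool_side = conv_side // 2                     # 10: side after 2x2 mean pooling
n_flat = pool_side * pool_side * n_filters     # 2000: inputs to the hidden layer

# Shapes written as (rows, cols) for the dense layers, (height, width, count) for filters.
shapes = {
    "W1": (9, 9, n_filters),  # 20 convolution filters of 9x9
    "W5": (100, n_flat),      # pooled features -> 100 hidden nodes
    "Wo": (10, 100),          # 100 hidden nodes -> 10 output nodes
}

for name, shape in shapes.items():
    print(name, shape, math.prod(shape))

total = sum(math.prod(s) for s in shapes.values())
print(total)  # 202620
```

Almost all of the trainable weights sit in W5, which connects the 2,000 pooled features to the 100 hidden nodes.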

The input nodes between the pooling layer and the hidden layer, drawn as the square nodes to the left of the W5 block, transform the two-dimensional image into a one-dimensional vector.

As this layer is purely a rearrangement of the matrix and does not involve any arithmetic operations, these nodes are denoted as squares.
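
This rearrangement can be sketched as a simple flattening step. The example assumes 20 pooled feature maps of 10×10 each, which follows from the layer sizes only if the convolution is unpadded. The element ordering used here is also an assumption; any ordering works as long as it is applied consistently during training and inference.

```python
def flatten(feature_maps):
    """Concatenate a list of 2-D feature maps into one flat vector."""
    return [v for fmap in feature_maps for row in fmap for v in row]


# Dummy data: 20 pooled feature maps, each 10x10.
maps = [[[0.0] * 10 for _ in range(10)] for _ in range(20)]
vector = flatten(maps)
print(len(vector))  # 2000
```

No values are changed and nothing is learned in this step, which matches the text's point that the layer performs no operation.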

Translated from Matlab Deep Learning by Phil Kim.
