During the training of a deep neural network, the backpropagation algorithm runs into three primary difficulties:

Vanishing gradient

Overfitting

Computational load

Vanishing Gradient

The gradient in this context can be thought of as a concept similar to the delta of the backpropagation algorithm.

The vanishing gradient occurs during training with the backpropagation algorithm when the output error is likely to fade before it reaches the nodes farthest from the output layer.

The backpropagation algorithm trains the neural network by propagating the output error backward to the hidden layers.

However, when the error hardly reaches the first hidden layer, the weights of that layer can hardly be adjusted.

Therefore, the hidden layers close to the input layer are not properly trained.

There is no point in adding hidden layers if they cannot be trained (see Figure 5-2).

Figure 5-2. The vanishing gradient
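The shrinking of the delta can be illustrated with a minimal sketch. It assumes a chain of single-node layers with sigmoid activations, unit weights, and weighted sums of zero; the function names and these values are hypothetical and chosen only for illustration. Because the derivative of the sigmoid never exceeds 0.25, each layer the error passes through scales the delta down by at least a factor of four under these assumptions.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_derivative(x):
    s = sigmoid(x)
    return s * (1.0 - s)  # never larger than 0.25


def backpropagated_deltas(output_error, weighted_sums, weights):
    """Return the delta of every layer in a chain of single-node layers.

    weighted_sums[i] is the weighted sum (pre-activation) of the node in layer i.
    weights[i] is the weight that connects layer i to layer i + 1.
    The last entry of weighted_sums belongs to the output layer.
    """
    deltas = [0.0] * len(weighted_sums)
    # Output layer: delta = activation derivative * output error.
    deltas[-1] = sigmoid_derivative(weighted_sums[-1]) * output_error
    # Hidden layers: propagate the delta backward, one layer at a time.
    for i in range(len(weighted_sums) - 2, -1, -1):
        deltas[i] = sigmoid_derivative(weighted_sums[i]) * weights[i] * deltas[i + 1]
    return deltas


# Illustrative values (assumptions): six layers, unit weights, zero weighted sums.
depth = 6
deltas = backpropagated_deltas(1.0, [0.0] * depth, [1.0] * (depth - 1))
for layer, delta in enumerate(deltas, start=1):
    print(f"layer {layer}: delta = {delta:.6f}")
```

In this setup the delta at the first layer is 0.25 raised to the sixth power, so the weight updates near the input become negligible compared with those near the output.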

The representative solution to the vanishing gradient is to use the Rectified Linear Unit (ReLU) function as the activation function.

ReLU is known to transmit the error better than the sigmoid function.

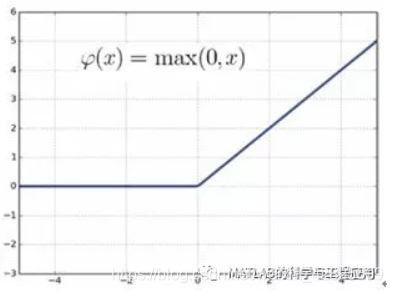

The ReLU function is defined as follows:
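In its standard form, which matches the description in this section (the symbols $\varphi$ for the activation function and $x$ for its input are assumed notation):

$$
\varphi(x) = \max(0, x) =
\begin{cases}
x, & x > 0 \\
0, & x \le 0
\end{cases}
$$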

Figure 5-3 depicts the ReLU function.

Figure 5-3. The ReLU function

It produces zero for negative inputs and passes positive inputs through unchanged.
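This behavior can be sketched in a few lines of Python. The sketch also compares how much of the delta survives a chain of layers with ReLU versus sigmoid activations. The function names, the depth of six layers, the unit weights, and the weighted sum of 2.0 at every node are assumptions made only for illustration. Since the slope of ReLU is 1 for positive inputs, an active ReLU node passes the delta backward without scaling it, while the sigmoid scales it down at every layer.

```python
import math


def relu(x):
    # Zero for negative inputs, the input itself for positive inputs.
    return max(0.0, x)


def relu_derivative(x):
    # Slope is 1 for positive inputs and 0 otherwise.
    # The value at exactly 0 is a convention; 0 is used here.
    return 1.0 if x > 0 else 0.0


def sigmoid_derivative(x):
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


def surviving_delta(derivative, weighted_sum, depth):
    """Fraction of a unit delta left after `depth` layers with unit weights."""
    delta = 1.0
    for _ in range(depth):
        delta *= derivative(weighted_sum)
    return delta


# Illustrative values (assumptions): six layers, weighted sum of 2.0 everywhere.
relu_delta = surviving_delta(relu_derivative, 2.0, 6)
sigmoid_delta = surviving_delta(sigmoid_derivative, 2.0, 6)
print(f"ReLU:    {relu_delta:.6f}")
print(f"sigmoid: {sigmoid_delta:.6f}")
```

Note that a ReLU node whose weighted sum is negative has a slope of zero and passes no error at all, so the comparison above only holds for nodes that are active.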

It earned its name because it behaves like a rectifier, an electrical element that converts alternating current into direct current by cutting out the negative voltage.

This article is translated from Matlab Deep Learning by Phil Kim.
