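I've been studying machine learning for a while and ran into some tasks that required scraping data, so I took a brief look at Python web crawlers and am recording a few notes here. I haven't studied crawlers in depth; most of this is based on resources I found online.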

First, two Python crawler tutorials I recommend: http://cuiqingcai.com/1052.html and http://ddswhu.com/2015/03/25/python-downloadhelper-premium/ . These two served as my basic introduction.

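Interest is what keeps you motivated, so since I'm learning to crawl, let's start by scraping photos of pretty girls. I won't scrape friends' photos from sites like QQ Zone or Renren, though, because that also involves simulating a login and similar steps. For convenience, I'll scrape images from Baidu Tieba instead. I mainly followed this GitHub project: https://github.com/Yixiaohan/show-me-the-code (a Python exercise book with one small project per day), which I found quite good.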

Let's start with problem 13 from that project, which asks you to scrape the images on this page: http://tieba.baidu.com/p/2166231880. I adapted code by user renzongxian (written for Python 3.4):

```python
# Scrape images from a Tieba thread: http://tieba.baidu.com/p/2166231880
import os
import re
import urllib.request


def fetch_pictures(url):
    folder = 'pictures'
    # Download the raw page HTML
    with urllib.request.urlopen(url) as response:
        html_content = response.read()
    # Capture the src attribute of each post image
    r = re.compile(r'<img pic_type="0" class="BDE_Image" src="(.*?)"')
    picture_url_list = r.findall(html_content.decode('utf-8'))
    # Create the output folder (no error if it already exists, unlike os.mkdir)
    os.makedirs(folder, exist_ok=True)
    for i, picture_url in enumerate(picture_url_list):
        picture_name = os.path.join(folder, str(i) + '.jpg')
        try:
            urllib.request.urlretrieve(picture_url, picture_name)
            print("Downloaded " + picture_url)
        except Exception:
            print("Failed to download " + picture_url)


if __name__ == '__main__':
    fetch_pictures("http://tieba.baidu.com/p/2166231880")
```

Running it creates a folder named pictures and automatically downloads the images into it (I won't include a screenshot of the images).

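To test the program, I looked at another Python crawler's code and decided to swap in a different URL, that is, to scrape the images on http://tieba.baidu.com/p/2460150866 . Only small changes to the code above are needed (a different URL and a different folder name):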

```python
# Scrape images from a Tieba thread: http://tieba.baidu.com/p/2460150866
import os
import re
import urllib.request


def fetch_pictures(url):
    folder = 'photos'
    # Download the raw page HTML
    with urllib.request.urlopen(url) as response:
        html_content = response.read()
    # Capture the src attribute of each post image
    r = re.compile(r'<img pic_type="0" class="BDE_Image" src="(.*?)"')
    picture_url_list = r.findall(html_content.decode('utf-8'))
    # Create the output folder (no error if it already exists)
    os.makedirs(folder, exist_ok=True)
    for i, picture_url in enumerate(picture_url_list):
        picture_name = os.path.join(folder, str(i) + '.jpg')
        try:
            urllib.request.urlretrieve(picture_url, picture_name)
            print("Downloaded " + picture_url)
        except Exception:
            print("Failed to download " + picture_url)


if __name__ == '__main__':
    fetch_pictures("http://tieba.baidu.com/p/2460150866")
```

The downloaded images are saved in the photos folder (see screenshot).
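The two scripts above differ only in the URL and the folder name, so they can share one function. A minimal Python 3 sketch, assuming the page is UTF-8; the `pattern` parameter is my own addition (not part of renzongxian's code), since the regex is the part most likely to change from one thread to the next:

```python
import os
import re
import urllib.request

# Pattern used by the two scripts above; other threads may need a different one.
DEFAULT_PATTERN = r'<img pic_type="0" class="BDE_Image" src="(.*?)"'


def fetch_pictures(url, folder, pattern=DEFAULT_PATTERN):
    """Download every image URL captured by `pattern` into `folder`.

    Files are named 0.jpg, 1.jpg, ... in the order they appear on the page.
    Returns the list of image URLs that were found.
    """
    with urllib.request.urlopen(url) as response:
        html = response.read().decode('utf-8')
    picture_urls = re.findall(pattern, html)
    os.makedirs(folder, exist_ok=True)  # no error if the folder already exists
    for i, picture_url in enumerate(picture_urls):
        path = os.path.join(folder, '%d.jpg' % i)
        try:
            urllib.request.urlretrieve(picture_url, path)
            print('Downloaded', picture_url)
        except (OSError, ValueError) as e:  # URLError is a subclass of OSError
            print('Failed to download', picture_url, e)
    return picture_urls
```

Usage: `fetch_pictures('http://tieba.baidu.com/p/2460150866', 'photos')`. Writing into `os.path.join(folder, ...)` instead of calling `os.chdir` keeps the working directory unchanged, so the function can be called several times in a row.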

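Now let's scrape a set of photos of a celebrity, say Liu Shishi, from http://tieba.baidu.com/p/2854146750 . If you again just change the URL and folder name in the code, you'll find that nothing gets downloaded. So when programming you really do have to read the code carefully; just swapping the URL is too lazy (the idea itself is fine, of course, since that's how you end up writing smarter, better programs).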

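After fetching the page, the code uses a regular expression to find the image URLs, so the key is this line:

```python
r = re.compile(r'<img pic_type="0" class="BDE_Image" src="(.*?)"')
```

The first two examples worked because the image tags in the page source (which you can inspect in Chrome) really do have this form, so the pattern matched. For the Liu Shishi photos, however, the source looks like this (see screenshot).

That is, the attributes inside the img tag come in a different order. Instead of

```
<img pic_type="0" class="BDE_Image" src="(.*?)"
```

they read

```
<img class="BDE_Image" src="(.*?)" pic_ext="jpeg" pic_type="0"
```

so the regular expression has to be changed accordingly. The complete code: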

```python
# Scrape Liu Shishi images from a Tieba thread: http://tieba.baidu.com/p/2854146750
import os
import re
import urllib.request


def fetch_pictures(url):
    folder = 'liushishi'
    # Download the raw page HTML
    with urllib.request.urlopen(url) as response:
        html_content = response.read()
    # In this thread, src comes before pic_ext and pic_type
    r = re.compile(r'<img class="BDE_Image" src="(.*?)" pic_ext="jpeg" pic_type="0"')
    picture_url_list = r.findall(html_content.decode('utf-8'))
    # Create the output folder (no error if it already exists)
    os.makedirs(folder, exist_ok=True)
    for i, picture_url in enumerate(picture_url_list):
        picture_name = os.path.join(folder, str(i) + '.jpg')
        try:
            urllib.request.urlretrieve(picture_url, picture_name)
            print("Downloaded " + picture_url)
        except Exception:
            print("Failed to download " + picture_url)


if __name__ == '__main__':
    fetch_pictures("http://tieba.baidu.com/p/2854146750")
```

After running it, the download succeeds (see screenshot).

Having code download everything automatically feels great! (Ha, I'll see myself out.)
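The Liu Shishi thread failed only because the same attributes appeared in a different order. One way to avoid rewriting the pattern for every thread is to match each `<img ...>` tag as a whole and then look at its attributes. A sketch, assuming attribute values are double-quoted and never contain a `>` character; `find_bde_images` and the sample HTML are made up for illustration and have not been checked against live Tieba pages:

```python
import re

# Match every <img ...> tag, whatever order its attributes appear in.
IMG_TAG = re.compile(r'<img\b[^>]*>', re.IGNORECASE)
# Pull name="value" pairs out of a single tag.
ATTR = re.compile(r'([\w-]+)="([^"]*)"')


def find_bde_images(html):
    """Return the src of every <img> whose class list contains BDE_Image."""
    urls = []
    for tag in IMG_TAG.findall(html):
        attrs = dict(ATTR.findall(tag))
        if 'BDE_Image' in attrs.get('class', '').split() and 'src' in attrs:
            urls.append(attrs['src'])
    return urls


sample = (
    '<img pic_type="0" class="BDE_Image" src="http://example.com/a.jpg">'
    '<img class="BDE_Image" src="http://example.com/b.jpg" pic_ext="jpeg" pic_type="0">'
    '<img class="avatar" src="http://example.com/face.jpg">'
)
print(find_bde_images(sample))
```

Both attribute orders from the two threads are matched, while the avatar image without the `BDE_Image` class is skipped.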

So the key to downloading the images is getting the regular expression to match. Here are two more examples.

First, a simple one. The URL is http://tieba.baidu.com/p/3884688092 , and the code is:

```python
# Scrape images from a Tieba thread: http://tieba.baidu.com/p/3884688092
import os
import re
import urllib.request


def fetch_pictures(url):
    folder = 'test'
    # Download the raw page HTML
    with urllib.request.urlopen(url) as response:
        html_content = response.read()
    # Raw string so that \d reaches the regex engine unchanged
    r = re.compile(r'<img class="BDE_Image" pic_type="\d" width="\d\d\d" height="\d\d\d" src="(.*?)"')
    picture_url_list = r.findall(html_content.decode('utf-8'))
    # Create the output folder (no error if it already exists)
    os.makedirs(folder, exist_ok=True)
    for i, picture_url in enumerate(picture_url_list):
        picture_name = os.path.join(folder, str(i) + '.jpg')
        try:
            urllib.request.urlretrieve(picture_url, picture_name)
            print("Downloaded " + picture_url)
        except Exception:
            print("Failed to download " + picture_url)


if __name__ == '__main__':
    fetch_pictures("http://tieba.baidu.com/p/3884688092")
```

Again, the key is the regex line:

```python
r = re.compile(r'<img class="BDE_Image" pic_type="\d" width="\d\d\d" height="\d\d\d" src="(.*?)"')
```

My pattern is rather clumsy, though: it only scraped the first 4 images, so it still needs work.

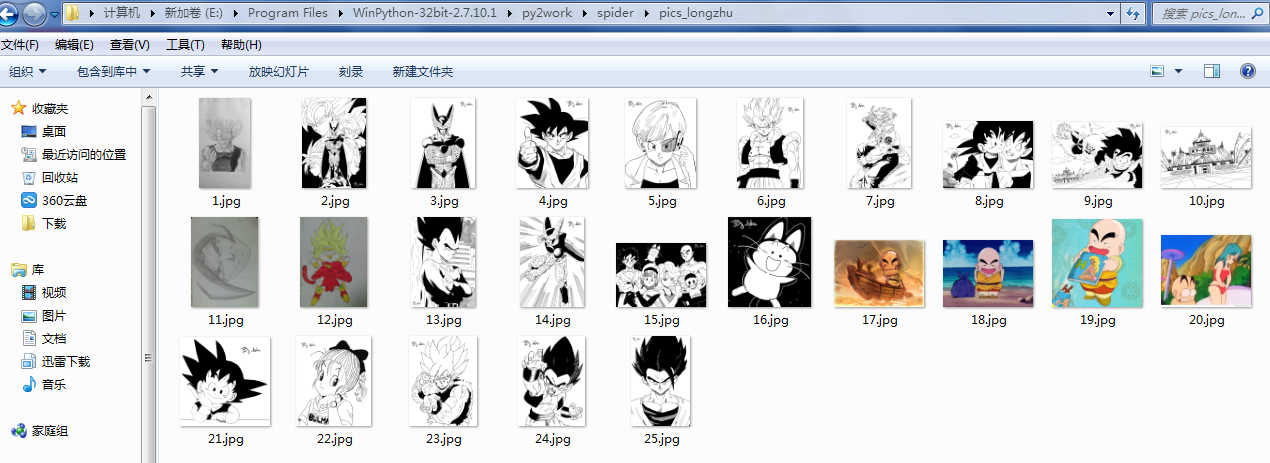
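I haven't pinned down why only 4 images matched, but one possible cause is that `\d\d\d` requires exactly three digits, so any image whose width or height is, say, 1024 or 90 pixels gets skipped. A sketch comparing the original pattern with a relaxed `\d+` version on made-up HTML (the sample tags are hypothetical, not copied from the thread):

```python
import re

# The original pattern: width and height must be exactly three digits.
strict = re.compile(r'<img class="BDE_Image" pic_type="\d" width="\d\d\d" height="\d\d\d" src="(.*?)"')
# Relaxed pattern: \d+ accepts any number of digits.
relaxed = re.compile(r'<img class="BDE_Image" pic_type="\d" width="\d+" height="\d+" src="(.*?)"')

sample = (
    '<img class="BDE_Image" pic_type="0" width="560" height="420" src="http://example.com/1.jpg">'
    '<img class="BDE_Image" pic_type="0" width="1024" height="768" src="http://example.com/2.jpg">'
    '<img class="BDE_Image" pic_type="0" width="90" height="60" src="http://example.com/3.jpg">'
)
print(strict.findall(sample))   # only the 560x420 image
print(relaxed.findall(sample))  # all three images
```

Note that this only addresses images on the page that was fetched; images on later pages of the thread need separate requests.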

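Next, one I actually like. I once came across someone's hand-drawn Dragon Ball pictures and wanted to download them. The URL is http://tieba.baidu.com/p/3720487356 , and the code is: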

```python
# Scrape Dragon Ball images from a Tieba thread: http://tieba.baidu.com/p/3720487356
import os
import re
import urllib.request


def fetch_pictures(url):
    folder = 'longzhu'
    # Download the raw page HTML
    with urllib.request.urlopen(url) as response:
        html_content = response.read()
    # Raw string so that \d reaches the regex engine unchanged
    r = re.compile(r'<img class="BDE_Image" pic_type="0" width="\d\d\d" height="\d\d\d" src="(.*?)"')
    picture_url_list = r.findall(html_content.decode('utf-8'))
    # Create the output folder (no error if it already exists)
    os.makedirs(folder, exist_ok=True)
    for i, picture_url in enumerate(picture_url_list):
        picture_name = os.path.join(folder, str(i) + '.jpg')
        try:
            urllib.request.urlretrieve(picture_url, picture_name)
            print("Downloaded " + picture_url)
        except Exception:
            print("Failed to download " + picture_url)


if __name__ == '__main__':
    fetch_pictures("http://tieba.baidu.com/p/3720487356")
```

The result isn't great either: it only got the 5 images on the first page (see screenshot).

All the code above was written for Python 3.4. Crawling with Python 2 is also convenient, though, so I also looked at some Python 2 crawler code from the web.
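The Python 2 code that follows relies on `urllib2`. In Python 3 that module was merged into `urllib.request`, so `urllib2.Request(url)` followed by `req.add_header(...)` becomes `urllib.request.Request(url, headers={...})`. A minimal sketch; `build_request` is a hypothetical helper name, and the User-Agent string is the one used in the Python 2 scripts:

```python
import urllib.request

# Browser-like User-Agent string, as in the Python 2 scripts.
UA = ('Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 '
      '(KHTML, like Gecko) Chrome/40.0.2214.111 Safari/537.36')


def build_request(url):
    """Python 3 counterpart of urllib2.Request(url) plus req.add_header(...)."""
    return urllib.request.Request(url, headers={'User-Agent': UA})


def get_page(url):
    """Fetch a page with the User-Agent header and decode it as UTF-8."""
    with urllib.request.urlopen(build_request(url)) as response:
        return response.read().decode('utf-8')


req = build_request('http://jandan.net/ooxx')
print(req.full_url)
print(req.get_header('User-agent'))
```

`get_header` is called with `'User-agent'` because `Request` normalizes header names with `str.capitalize()`. The important part is passing the `Request` object, not the bare URL, to `urlopen`; otherwise the header is never sent.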

First, an example from the web (sorry, it's pretty-girl photos again; there are just too many such programs online), written in Python 2.7:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
# Scrape images from the web, URL: http://jandan.net/ooxx

# Import modules
import urllib2
import re
import os
import glob

# Number of pages to crawl
page_amount = 2


# Fetch the HTML of a page
def get_page(url):
    req = urllib2.Request(url)
    req.add_header('User-Agent', 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/40.0.2214.111 Safari/537.36')  # browser User-Agent string
    response = urllib2.urlopen(req)
    html = response.read().decode('utf-8')
    return html


# Fetch the raw bytes of an image
def read_image(url):
    req = urllib2.Request(url)
    req.add_header('User-Agent', 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/40.0.2214.111 Safari/537.36')  # browser User-Agent string
    response = urllib2.urlopen(req)  # pass req, not url, so the header is actually sent
    html = response.read()
    return html


# Get the current (newest) page number and crawl backwards from it,
# since older images may already have been seen on earlier runs
def get_current_page_number(html):
    match = re.search(r'<span class="current-comment-page">\[(.*)\]</span>', html)
    return match.group(1)


# Get the list of image URLs on one page
def get_pictures_url_list(url):
    html = get_page(url)
    l = re.findall(r'<p><img src="http://.*?\.sinaimg\.cn/mw600/.*?jpg" /></p>', html)
    result = []
    for string in l:
        src = re.search(r'"(.*)"', string)
        result.append(str(src.group(1)))  # str() drops the u'' Unicode prefix; I should read up on encodings properly
    return result


# Download one image and save it in the current folder
def image_save(url, number):
    number = str(number)
    print 'Downloading image', number
    filename = number + '.jpg'
    with open(filename, 'wb') as fp:
        img = read_image(url)
        fp.write(img)


# Create the folder for the images if needed and change into it
def folder_prepare(folder):
    a = glob.glob('*')
    if folder not in a:
        os.mkdir(folder)
    os.chdir(folder)


# Main function
def main():
    html = get_page('http://jandan.net/ooxx')
    number = int(get_current_page_number(html))
    l = []
    amount = 0
    for n in range(0, page_amount):
        url = 'http://jandan.net/ooxx/page-' + str(number - n) + '#comments'
        l += get_pictures_url_list(url)
    folder_prepare('picture')
    for url in l:
        amount += 1
        image_save(url, amount)


if __name__ == '__main__':
    main()
    print 'All done!'
```

This program also downloads the images automatically and creates a folder called picture to store them (no screenshot here). So I adapted it to re-scrape the Dragon Ball images from above:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
# Scrape images from the web, URL: http://tieba.baidu.com/p/3720487356

# Import modules
import urllib2
import re
import os
import glob

# Number of pages to crawl
page_amount = 5


# Fetch the HTML of a page
def get_page(url):
    req = urllib2.Request(url)
    req.add_header('User-Agent', 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/40.0.2214.111 Safari/537.36')  # browser User-Agent string
    response = urllib2.urlopen(req)
    html = response.read().decode('utf-8')
    return html


# Fetch the raw bytes of an image
def read_image(url):
    req = urllib2.Request(url)
    req.add_header('User-Agent', 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/40.0.2214.111 Safari/537.36')  # browser User-Agent string
    response = urllib2.urlopen(req)  # pass req, not url, so the header is actually sent
    html = response.read()
    return html


# Get the list of image URLs on one page
def get_pictures_url_list(url):
    html = get_page(url)
    l = re.findall(r'<img class="BDE_Image" pic_type="0" width="\d\d\d" height="\d\d\d" src="(.*?)"', html)
    return l


# Download one image and save it in the current folder
def image_save(url, number):
    number = str(number)
    print 'Downloading image', number
    filename = number + '.jpg'
    with open(filename, 'wb') as fp:
        img = read_image(url)
        fp.write(img)


# Create the folder for the images if needed and change into it
def folder_prepare(folder):
    a = glob.glob('*')
    if folder not in a:
        os.mkdir(folder)
    os.chdir(folder)


# Main function
def main():
    number = 5  # the thread currently has 5 pages; start from the last one
    l = []
    amount = 0
    for n in range(0, page_amount):
        url = 'http://tieba.baidu.com/p/3720487356?pn=' + str(number - n)
        l += get_pictures_url_list(url)
    folder_prepare('pics_longzhu')
    for url in l:
        amount += 1
        image_save(url, amount)


if __name__ == '__main__':
    main()
    print 'All done!'
```

Since the thread currently has 5 pages in total, I set the number of pages to crawl and downloaded every page, starting from the last one and working backwards (see screenshot).
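The script hardcodes `number = 5` and walks from `?pn=5` down to `?pn=1`. The same URLs can be built by a small helper that makes the page count and the crawl direction explicit. A sketch; `tieba_page_urls` is a hypothetical name, and it assumes, as the script does, that Tieba selects a page of a thread through the `pn` query parameter:

```python
def tieba_page_urls(thread_url, page_count, newest_first=True):
    """Return the ?pn= URL of every page of a Tieba thread.

    With newest_first=True the list runs from the last page back to page 1,
    matching the backwards crawl in the script above.
    """
    if newest_first:
        pages = range(page_count, 0, -1)
    else:
        pages = range(1, page_count + 1)
    return ['%s?pn=%d' % (thread_url, n) for n in pages]


for url in tieba_page_urls('http://tieba.baidu.com/p/3720487356', 5):
    print(url)
```

The page count still has to be known in advance here; reading it from the thread's pager would need a pattern for that part of the page, which I have not worked out.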

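Well, that worked much better. Of course, there is plenty of other Python 2 image-scraping code out there. Taking problem 13 from that GitHub project as the example, here are two more pieces of code for reference.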

Code (1)

```python
# -*- coding: utf-8 -*-
# Scrape images from a Tieba thread: http://tieba.baidu.com/p/2166231880
import urllib2
from HTMLParser import HTMLParser
from traceback import print_exc
from sys import stderr


class _DeHTMLParser(HTMLParser):
    '''
    Use HTMLParser to walk the page elements and collect image links
    '''

    def __init__(self):
        HTMLParser.__init__(self)
        self.img_links = []

    def handle_starttag(self, tag, attrs):
        if tag == 'img':
            # print(attrs)
            try:
                # Post images carry pic_type="0"; keep their src
                if ('pic_type', '0') in attrs:
                    for name, value in attrs:
                        if name == 'src':
                            self.img_links.append(value)
            except Exception as e:
                print(e)


def dehtml(text):
    try:
        parser = _DeHTMLParser()
        parser.feed(text)
        parser.close()
        return parser.img_links
    except:
        print_exc(file=stderr)
        return []  # an empty list, so the caller's loop does not iterate over characters


def main():
    html = urllib2.urlopen('http://tieba.baidu.com/p/2166231880')
    content = html.read()
    img_links = dehtml(content)  # parse once and reuse the result
    print(img_links)
    for i, img_url in enumerate(img_links):
        img_content = urllib2.urlopen(img_url).read()
        path_name = str(i) + '.jpg'
        with open(path_name, 'wb') as f:
            f.write(img_content)


if __name__ == '__main__':
    main()
```

Code (2)

```python
# coding:utf-8
# Scrape images from a Tieba thread: http://tieba.baidu.com/p/2166231880
import re
import requests

url = 'http://tieba.baidu.com/p/2166231880'
header = {
    'Accept': '*/*',
    'Accept-Encoding': 'gzip,deflate',  # requests can only decode gzip/deflate, so sdch is not advertised
    'Accept-Language': 'zh-CN,zh;q=0.8',
    'Connection': 'keep-alive'
}

html = requests.get(url, headers=header)
data = html.content.decode('utf-8')
# Capture each image URL up to, but not including, its ".jpg" suffix
find = re.compile(r'<img pic_type="0" class="BDE_Image" src="(.*?)\.jpg" bdwater')
result = find.findall(data)
for img_url in result:
    # Use the last path segment as the file name
    name = img_url.split('/')[-1]
    img_url = img_url + '.jpg'
    html = requests.get(img_url, headers=header)
    im = html.content
    with open(name + '.jpg', 'wb') as f:
        f.write(im)
```
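Of the two, Code (1) is the sturdier approach: `HTMLParser` hands over each tag's attributes as a list of `(name, value)` pairs, so their order in the source does not matter, and attribute order is exactly what broke the regex for the Liu Shishi thread. In Python 3 the class lives in `html.parser`. A minimal port of Code (1)'s parsing step, keeping its rule of accepting `<img>` tags with `pic_type="0"`; the class name `ImgLinkParser` and the sample HTML are illustrative:

```python
from html.parser import HTMLParser


class ImgLinkParser(HTMLParser):
    """Collect the src of <img> tags that carry pic_type="0", as Code (1) does."""

    def __init__(self):
        super().__init__()
        self.img_links = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) tuples; names arrive lowercased.
        if tag == 'img' and ('pic_type', '0') in attrs:
            src = dict(attrs).get('src')
            if src:
                self.img_links.append(src)


def extract_img_links(html):
    parser = ImgLinkParser()
    parser.feed(html)
    parser.close()
    return parser.img_links


sample = (
    '<img class="BDE_Image" src="http://example.com/b.jpg" pic_ext="jpeg" pic_type="0">'
    '<img pic_type="0" class="BDE_Image" src="http://example.com/a.jpg">'
    '<img src="http://example.com/emoticon.png">'
)
print(extract_img_links(sample))
```

Both attribute orders are handled by the same code, and the emoticon without `pic_type="0"` is ignored.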