python爬取张国荣吧张国荣图片

一直喜欢哥哥的歌,也一直听哥哥的歌,突然想着收集一些哥哥的照片,所以写了一个爬虫爬取哥哥的图片,也给大家参考一下;

这里我用的request-html这个包

from requests_html import HTMLSession from requests_html import HTML

构造请求

class CrawlSpider(object): def __init__(self): self.sess = HTMLSession() self.headers = { "Host": "tieba.baidu.com", "Referer": "https://www.baidu.com/", "User-Agent":"Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.99 Safari/537.36" }

分析请求的api,发现它是get请求,带有参数

self.params = { "kw": "张国荣", "tab": "album", "subtab": "album_good", "cat_id": "" }

发出请求,找到分类的id;

resp = self.sess.get(url="http://tieba.baidu.com/f?", params=self.params, headers=self.headers) category_list = re.findall('<li cat-id="(.*)"><span>', resp.text)

对每个分类构造api请求,获取每个图册的id;

all_tid_list = [] for cat_id in category_list: self.params["cat_id"] = cat_id self.params["pagelets"] = 'album/pagelet/album_good' self.params["pagelets_stamp"] = "%013d"%(1000 * time.time()) try: resp = self.sess.get(url="http://tieba.baidu.com/f?", params=self.params, headers=self.headers) resp.html.render() html = HTML(html=resp.html.text) tid_list = re.findall(r"/p/\d+", re.sub(r"\\", '', str(html.links))) all_tid_list.extend(tid_list) except Exception as err: print("获取tid失败{}".format(err)) return all_tid_list

拿到图册的id后我们就可以去请求图册的数据了;tid就是图册的id,“_”是当前的时间戳,“pe”是每页返回40条数据,”pn“是当前页数;

params = { "kw": "张国荣", "alt": "jview", "rn": "200", "tid": tid, "pn": "1", "ps": "1", "pe": "40", "info": "1", "_": "%013d" % (1000 * time.time()) }

构造请求获取图册的数据;这里我们可以拿到每个图册的标题和图册中图片的信息;

base_url = "http://tieba.baidu.com/photo/g/bw/picture/list?" resp = self.sess.get(url=base_url, params=params, headers=self.headers).text time.sleep(random.random() + 1) resp = json.loads(resp)

title = resp["data"]["title"]

pic_list = resp["data"]["pic_list"]

保存我们需要的图册标题和图片链接;

try: image_list = [] for item in self.total_images: image_dict = {} image_url = [] image_dict["title"] = item["title"] for each in item["images_info"]: image_url.append(each["purl"]) image_dict["image_url"] = image_url image_list.append(image_dict) with open("image_urls.json", "w", encoding='GBK') as f: f.write(json.dumps(image_list)) except Exception as err: print("写入数据失败{}".format(err))

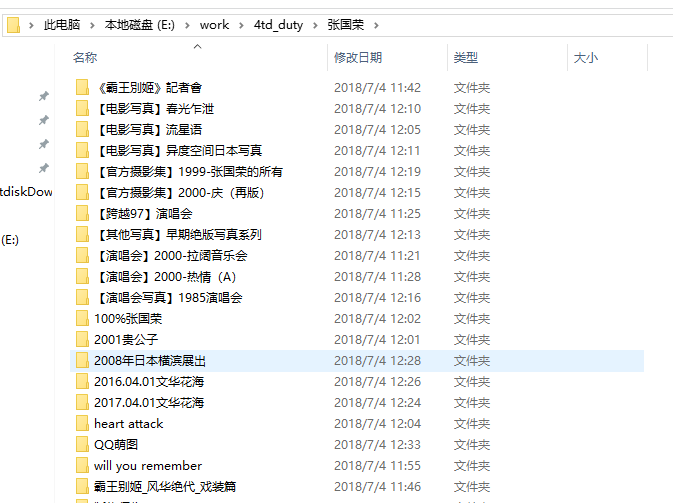

这样我们就拿到了哥哥的图片链接了,然后我们请求图片链接,下载图片即可;

完整代码可以看我的gitub链接:

https://github.com/gongjiaqiang/my_spider