From: https://zhuanlan.zhihu.com/p/33112359

Analyzing Maoyan Movies font files with JS (@font-face): https://www.cnblogs.com/my8100/p/js_maoyandianying.html

Parsing the custom web fonts of a movie site and a recruitment site: https://www.jianshu.com/p/5400bbc8b634

A concise guide to installing and using FontTools: https://darknode.in/font/font-tools-guide

The fonttools library on GitHub: https://github.com/fonttools/fonttools

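1. Overview

After writing up how to crack the font-based anti-scraping on Autohome (汽车之家), I found that this technique is widely used. In the past couple of days a Zhihu user asked how to scrape Maoyan box-office data. The core idea is the same as in the earlier article, but the procedure is more involved, so this article walks through it in more detail.

If font-based anti-scraping is still unfamiliar to you, please read the earlier article first.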

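2. Locating the font source

Maoyan Movies (猫眼电影) is a one-stop movie platform under Meituan that combines media content, online ticketing, user interaction and social features, and sales of movie merchandise. As of June 2015, Maoyan covered more than 4,000 cinemas, which together contributed over 90% of box-office revenue. Maoyan currently holds a 70% share of the online ticketing market, and one in every three movie tickets is sold through it; it is among the more widely downloaded and more frequently used movie apps. Maoyan also offers partner cinemas and film producers and distributors targeted marketing that reaches a large audience of movie consumers, helping to boost box-office results.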

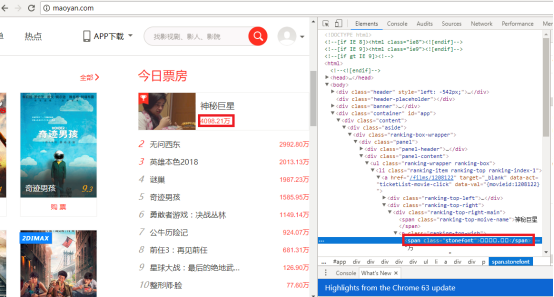

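Browsing the page in Chrome and viewing the source, we find that the box-office figures display normally on the page, but in the source each number is a span wrapping text that does not render as readable characters.

This is the work of a custom font. In the page source,

<span class="stonefont">.</span>

uses a custom font named stonefont. Searching the page for stonefont quickly turns up a standard @font-face definition. Note that the URL of the font file changes randomly on every visit.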
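As a minimal sketch of this step, the WOFF URL can be pulled out of the @font-face rule with a regular expression. The CSS sample, the host example.invalid, and the helper name find_woff_url are assumptions made for illustration; the real page's markup may differ.

```python
import re

# Hypothetical @font-face rule, shaped like the one described above.
SAMPLE_CSS = """
@font-face {
  font-family: stonefont;
  src: url('//example.invalid/fonts/abc123.eot');
  src: url('//example.invalid/fonts/abc123.eot?#iefix') format('embedded-opentype'),
       url('//example.invalid/fonts/abc123.woff') format('woff');
}
"""

# Capture a protocol-relative URL ending in .woff that is declared as format('woff').
WOFF_URL_RE = re.compile(r"url\('(//[^']+?\.woff)'\)\s*format\('woff'\)")


def find_woff_url(page_source, scheme="https:"):
    """Return the absolute URL of the first WOFF font in page_source, or None."""
    match = WOFF_URL_RE.search(page_source)
    if match is None:
        return None
    return scheme + match.group(1)


print(find_woff_url(SAMPLE_CSS))
```

Because the font URL changes on every visit, the URL has to be extracted from the same response whose text is being decoded.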

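Visiting the woff URL downloads the WOFF font file to local disk. The approach in the earlier article could not parse WOFF with fontTools directly, so the WOFF font is first converted to OTF. Fortunately, a Python conversion tool, woff2otf, is available on GitHub, and it converts the font successfully. (Methods 2 to 4 below open the .woff file directly with fontTools' TTFont, which current fontTools releases support.)

(Font Creator appears to be able to open both OTF and WOFF files.)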
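Before converting or parsing a downloaded file, it can help to confirm that it really is a WOFF file and which outline flavor it wraps. The sketch below reads the start of the WOFF 1.0 header with the standard library only. The test data is the first 32 base64 characters of the font string embedded in Method 4 of this article; the function name read_woff_header is an assumption for illustration.

```python
import base64
import struct

# First 32 base64 characters of the Method 4 font string; they decode to
# the first 24 bytes of the WOFF header.
HEADER_B64 = "d09GRgABAAAAAAgcAAsAAAAAC7gAAQAA"

# WOFF 1.0 header prefix (big-endian): signature, flavor, length, numTables,
# reserved, totalSfntSize, majorVersion, minorVersion.
WOFF_HEADER_PREFIX = struct.Struct(">4sIIHHIHH")


def read_woff_header(data):
    """Parse the first 24 bytes of a WOFF file into a dict."""
    (signature, flavor, length, num_tables, _reserved,
     total_sfnt_size, _major, _minor) = WOFF_HEADER_PREFIX.unpack_from(data)
    if signature != b"wOFF":
        raise ValueError("not a WOFF 1.0 file")
    return {
        # 0x00010000 marks TrueType outlines, i.e. a font with a 'glyf' table.
        "flavor": "TrueType" if flavor == 0x00010000 else "CFF/other",
        "length": length,
        "num_tables": num_tables,
        "total_sfnt_size": total_sfnt_size,
    }


header = read_woff_header(base64.b64decode(HEADER_B64))
print(header)
```

A TrueType flavor matters here because the comparison methods below read glyph outlines from the glyf table.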

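Using urlretrieve in Python 3

The urllib.request module provides the urlretrieve() function, which downloads remote data directly to a local file.

urlretrieve(url, filename=None, reporthook=None, data=None)

- filename specifies the local path to save to (if omitted, urllib generates a temporary file to hold the data).

- reporthook is a callback that fires once when the connection to the server is established and again after each data block is transferred; it can be used to display download progress.

- data is the data to POST to the server. The function returns a two-element tuple (filename, headers), where filename is the local path the data was saved to and headers is the server's response headers.

The example below fetches Baidu's HTML into the local file "./baidu.html" while printing the download progress (test_2 does the same for a larger file).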

#!/usr/bin/python3
# -*- coding: utf-8 -*-

import os
from urllib import request


def cbk(a, b, c):
    """
    Progress callback (reporthook).
    :param a: number of data blocks transferred so far
    :param b: size of one data block in bytes
    :param c: total size of the remote file (non-positive if unknown)
    :return:
    """
    if c <= 0:
        # Total size unknown: report bytes transferred instead of a percentage
        print('%d bytes' % (a * b))
        return
    per = 100.0 * a * b / c
    if per > 100:
        per = 100
    print('%.2f%%' % per)


def test_1():
    url = 'https://www.baidu.com'
    current_dir = os.path.abspath('.')
    work_path = os.path.join(current_dir, 'baidu.html')
    request.urlretrieve(url, work_path, cbk)


def test_2():
    url = 'http://www.python.org/ftp/python/2.7.5/Python-2.7.5.tar.bz2'
    current_dir = os.path.abspath('.')
    work_path = os.path.join(current_dir, 'Python-2.7.5.tar.bz2')
    request.urlretrieve(url, work_path, cbk)


if __name__ == "__main__":
    test_1()
    test_2()

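urlopen() makes it easy to fetch a remote HTML page. You can then use Python regular expressions to pick out the data you need and use urlretrieve() to download it locally. For remote URLs with access restrictions or connection limits, you can connect through proxies. If the remote data is large and a single-threaded download is too slow, you can download with multiple threads. That, in essence, is a web crawler.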

3. Parsing the font

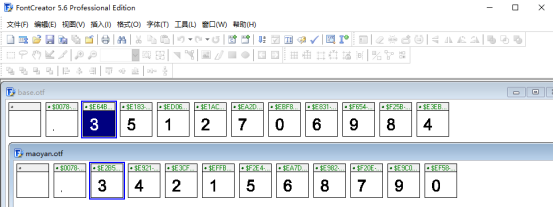

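An OTF file is an ordinary font file and can be opened with the operating system's built-in font viewer, but that reveals little useful detail, so we inspect it with a dedicated tool, Font Creator. (The original post links a Chinese-localized cracked build of Font Creator: https://download.csdn.net/download/freeking101/10676006)

The font contains 12 glyphs (including one blank glyph), and the tool shows each glyph's shape together with its code. What makes this harder than the earlier case is that not only do the glyph codes change on every request, the glyph order changes too, so the real digits cannot be recovered from the codes or the order alone.

We therefore download one font file in advance and manually identify which digit each glyph represents. Then, for every newly fetched font file, we compare its glyph outline data against this baseline to recover the real digit values.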
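The comparison idea can be sketched without any font library by treating each glyph as a list of outline points. In the sketch below, the function name match_glyphs, the glyph names in the "new" font, and most point values are made up for illustration; only the pairing of uniE183 with 5 and uniED06 with 1 is taken from the baseline lists used later in this article.

```python
def match_glyphs(base_outlines, base_values, new_outlines):
    """Map each glyph name in new_outlines to the value of the baseline
    glyph with an identical outline. Glyphs without a match are omitted."""
    # Index the baseline by outline so each lookup is a single dict access.
    value_by_outline = {
        tuple(outline): base_values[name]
        for name, outline in base_outlines.items()
    }
    return {
        name: value_by_outline[tuple(outline)]
        for name, outline in new_outlines.items()
        if tuple(outline) in value_by_outline
    }


# Toy outlines: each glyph is a list of (x, y, on_curve) points.
base_outlines = {
    "uniE183": [(134, 195, 1), (144, 126, 0), (217, 60, 0)],
    "uniED06": [(10, 0, 1), (10, 700, 1), (90, 700, 1)],
}
base_values = {"uniE183": "5", "uniED06": "1"}

# A "new" font: same outlines, but different names and a different order.
new_outlines = {
    "uniF0A1": [(10, 0, 1), (10, 700, 1), (90, 700, 1)],
    "uniE9C2": [(134, 195, 1), (144, 126, 0), (217, 60, 0)],
}

print(match_glyphs(base_outlines, base_values, new_outlines))
```

Exact equality only works while the site serves byte-identical outlines under new names, which is the situation this article describes.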

Below is the outline data of a single glyph, as obtained with fontTools.ttLib.

<TTGlyph name="uniE183" xMin="0" yMin="-12" xMax="516" yMax="706">
  <contour>
    <pt x="134" y="195" on="1"/>
    <pt x="144" y="126" on="0"/>
    <pt x="217" y="60" on="0"/>
    <pt x="271" y="60" on="1"/>
    <pt x="335" y="60" on="0"/>
    <pt x="423" y="158" on="0"/>
    <pt x="423" y="311" on="0"/>
    <pt x="337" y="397" on="0"/>
    <pt x="270" y="397" on="1"/>
    <pt x="227" y="397" on="0"/>
    <pt x="160" y="359" on="0"/>
    <pt x="140" y="328" on="1"/>
    <pt x="57" y="338" on="1"/>
    <pt x="126" y="706" on="1"/>
    <pt x="482" y="706" on="1"/>
    <pt x="482" y="622" on="1"/>
    <pt x="197" y="622" on="1"/>
    <pt x="158" y="430" on="1"/>
    <pt x="190" y="452" on="0"/>
    <pt x="258" y="475" on="0"/>
    <pt x="293" y="475" on="1"/>
    <pt x="387" y="475" on="0"/>
    <pt x="516" y="346" on="0"/>
    <pt x="516" y="243" on="1"/>
    <pt x="516" y="147" on="0"/>
    <pt x="459" y="75" on="1"/>
    <pt x="390" y="-12" on="0"/>
    <pt x="271" y="-12" on="1"/>
    <pt x="173" y="-12" on="0"/>
    <pt x="112" y="42" on="1"/>
    <pt x="50" y="98" on="0"/>
    <pt x="42" y="188" on="1"/>
  </contour>
  <instructions/>
</TTGlyph>

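Locate the TTGlyph elements. The child elements under each TTGlyph are the coordinates used to draw the character (Chinese characters, Latin letters, and digits alike), and the same character always has the same coordinates.

Method 1: use the fontTools library to dump the font file to XML, collect the attribute dictionaries of these coordinate points into a list in order, and serialize the list to a JSON string (with sort_keys=True). Use that string as the key and the actual character as the value, and store the pairs in a constant_dict. For each new page, compute the string for each glyph and look up the actual character in constant_dict.

Method 2: parse the font directly with the font library.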
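The Method 1 idea can be tried on a small XML fragment using only the standard library. This is a sketch under assumptions: the fragment keeps just the first three points of the glyph shown above, glyph_signatures is a hypothetical helper name, and the pairing of uniE183 with the digit 5 is taken from the baseline lists in Method 2.

```python
import json
import xml.etree.ElementTree as ET

# Shortened TTX fragment: only the first three points of the glyph shown above.
TTX_SNIPPET = """
<glyf>
  <TTGlyph name="uniE183" xMin="0" yMin="-12" xMax="516" yMax="706">
    <contour>
      <pt x="134" y="195" on="1"/>
      <pt x="144" y="126" on="0"/>
      <pt x="217" y="60" on="0"/>
    </contour>
    <instructions/>
  </TTGlyph>
</glyf>
"""


def glyph_signatures(ttx_text):
    """Return {lowercase hex code: JSON string of the glyph's points}."""
    root = ET.fromstring(ttx_text)
    signatures = {}
    for glyph in root.iter("TTGlyph"):
        points = [dict(pt.attrib) for pt in glyph.iter("pt")]
        key = glyph.get("name")[3:].lower()  # 'uniE183' -> 'e183'
        # sort_keys=True makes the string independent of attribute order.
        signatures[key] = json.dumps(points, sort_keys=True)
    return signatures


signatures = glyph_signatures(TTX_SNIPPET)
# constant_dict is built once by hand from a reference font:
# signature string -> real character.
constant_dict = {signatures["e183"]: "5"}
print(constant_dict[signatures["e183"]])
```

Using the serialized coordinates as the dictionary key means a new font only needs its signatures computed; no manual labelling is repeated.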

Method 1:

Code that extracts the coordinate-list string for each glyph in the font:

# font_decryption.py
import base64
import json
import os
from io import BytesIO

from fontTools.ttLib import TTFont
from lxml import etree

import config  # project-specific module; must define temp_file_path
import pub.common.error as error  # project-specific error codes

_xml_file_path = os.path.join(config.temp_file_path, "tongcheng58.xml")


def make_font_file(base64_string: str):
    # Decode the base64 font payload embedded in the page into raw bytes
    bin_data = base64.decodebytes(base64_string.encode())
    return bin_data


def convert_font_to_xml(bin_data):
    # Dump the font to an XML (TTX) file
    font = TTFont(BytesIO(bin_data))
    font.saveXML(_xml_file_path)


def parse_xml():
    xml = etree.parse(_xml_file_path)
    root = xml.getroot()
    font_dict = {}
    all_data = root.xpath('//glyf/TTGlyph')
    for index, data in enumerate(all_data):
        # Skip the first glyph
        if index == 0:
            continue
        # 'uniE183' -> 'e183'
        font_key = data.attrib.get('name')[3:].lower()
        contour_list = []
        for contour in data:
            for pt in contour:
                contour_list.append(dict(pt.attrib))
        font_dict[font_key] = json.dumps(contour_list, sort_keys=True)
    return font_dict


def make_path():
    if not os.path.isdir(config.temp_file_path):
        os.makedirs(config.temp_file_path)


def get_font_dict(base64_string):
    try:
        make_path()
        bin_data = make_font_file(base64_string)
        convert_font_to_xml(bin_data)
        font_dict = parse_xml()
    except Exception as e:
        return (error.ERROR_UNKNOWN_RESUME_CONTENT, 'cannot_get_font, err=[{}]'.format(str(e))), None
    return None, font_dict

Calling code:

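# decryption_font_dict (coordinate JSON string -> real character) and _logger
# are defined elsewhere in the project; they are not shown in the original.
def decrypt_font(text, font_dict):
    decryption_text = ""
    for alpha in text:
        # A private-use character such as '\ue183' becomes the key 'e183'
        hex_alpha = alpha.encode('unicode_escape').decode()[2:]
        if hex_alpha in font_dict:
            item_text = decryption_font_dict.get(font_dict[hex_alpha])
            if item_text is None:
                _logger.error("op=[DecryptFont], err={}".format("decryption_font_dict_have_no_this_font"))
                # Keep the original character instead of failing on None
                item_text = alpha
        else:
            item_text = alpha
        decryption_text += item_text
    return decryption_text


# ParseRequest is a project-specific type that is not shown in the original
def parse(html: str, request: "ParseRequest" = None):
    user_info_dict = {}
    # print(html)
    base64_string = html.split("base64,")[1].split(')')[0].strip()
    err, font_dict = get_font_dict(base64_string)
    if err is not None:
        return err, None
    html = decrypt_font(html, font_dict)
    # The original snippet stops here; return the decoded page
    return None, html


if __name__ == "__main__":
    # file_name: path to a saved copy of the page (not given in the original)
    with open(file_name, "r", encoding="utf-8") as f:
        html = f.read()
    parse(html)

Method 2:

The following statements retrieve the glyph names in order: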

from fontTools.ttLib import TTFont

##############################################################################
# Visit the font URL, download the font file and save it; here it is saved as base.woff
base_font = TTFont('base.woff')  # parse the font file

# Inspect the glyph-to-digit correspondence in Font Creator, then record it here
base_num_list = ['.', '3', '5', '1', '2', '7', '0', '6', '9', '8', '4']
base_unicode_list = [
    'x', 'uniE64B', 'uniE183', 'uniED06', 'uniE1AC', 'uniEA2D',
    'uniEBF8', 'uniE831', 'uniF654', 'uniF25B', 'uniE3EB'
]

"""
1. The glyph order stays the same and only the mapped Unicode code points change.
   The correspondence only has to be worked out once.
2. Both the glyph order and the mapped Unicode code points change.
   The correspondence has to be worked out twice:
   the first pass is the baseline, recording which glyph shape belongs to which Unicode name;
   on the second pass the outline data of each shape is unchanged, so each glyph of the
   new font is compared with the baseline glyphs to find the baseline glyph (and thus
   the digit) that the new Unicode name corresponds to.
"""

##############################################################################
# Maoyan: both the glyph order and the Unicode code points change
mao_yan_font = TTFont('maoyan.woff')
mao_yan_unicode_list = mao_yan_font.getGlyphOrder()
mao_yan_num_list = []
for i in range(1, 12):
    mao_yan_glyph = mao_yan_font['glyf'][mao_yan_unicode_list[i]]
    for j in range(11):
        base_glyph = base_font['glyf'][base_unicode_list[j]]
        if mao_yan_glyph == base_glyph:
            mao_yan_num_list.append(base_num_list[j])
            break

Method 3:

#!/usr/bin/python3
# -*- coding: utf-8 -*-

import base64
import re

import requests
from fake_useragent import UserAgent
from fontTools.ttLib import TTFont
from lxml import etree


def get_response():
    """
    Fetch the page content.
    :return:
    """
    url = 'https://www.shixiseng.com/interns?k=python&p=1'
    headers = {
        'User-Agent': UserAgent().random
    }
    response = requests.get(url, headers=headers)
    return response


def get_font_file():
    """
    Fetch the Shixiseng font file.
    :return:
    """
    response = get_response()
    font_url_data = re.findall(r'myFont; src: url\("(.*?)"\)}', response.text, re.S)[0]
    # font_encrypt = requests.get(font_url_data)
    font_data = base64.b64decode(re.findall('base64,(.*)', font_url_data)[0])
    with open('./shixiseng_font.woff', 'wb') as file:
        file.write(font_data)


def parse_font():
    """
    Parse the font file and build the character mapping.
    :return:
    """
    font1 = TTFont('./shixiseng_font.woff')
    # Dump the font to an XML file
    # font1.saveXML('./shixiseng_font.xml')
    keys, values = [], []
    for k, v in font1.getBestCmap().items():
        if v.startswith('uni'):
            # k is the code point used in the page; the glyph name
            # 'uniXXXX' carries the real character's code point
            keys.append(chr(k))
            values.append(chr(int(v[3:], 16)))
        else:
            keys.append("&#x{:x}".format(k))
            values.append(v)
    return keys, values


# Fetch the data and decode the custom-font characters
def get_data():
    response = get_response()
    data = etree.HTML(response.text)
    ul_data = data.xpath('//ul[@class="position-list"]/li')
    # The mapping is the same for every entry, so build it once
    keys, values = parse_font()
    for info in ul_data:
        title = info.xpath('.//div[@class="info1"]/div[@class="name-box clearfix"]/a/text()')[0]
        salary = ' | '.join(info.xpath('.//div[@class="info2"]/div[@class="more"]/span/text()'))
        print(title, salary)
        print('----------separator----------')
        for k, v in zip(keys, values):
            title = title.replace(k, v)
            salary = salary.replace(k, v)
        print(title, salary)


if __name__ == "__main__":
    get_font_file()
    get_data()
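The mapping step in parse_font can be illustrated without a font file by starting from a plain dictionary shaped like the result of getBestCmap() (code point to glyph name). The cmap contents, the sample text, and the helper name build_translation are hypothetical.

```python
def build_translation(cmap):
    """Build a str.translate table from a cmap-like dict (code point -> glyph name)."""
    table = {}
    for code_point, glyph_name in cmap.items():
        if glyph_name.startswith("uni"):
            # The glyph name 'uniXXXX' carries the real character's code point in hex.
            table[code_point] = chr(int(glyph_name[3:], 16))
    return table


# Hypothetical cmap: two private-use code points that draw '0' and '5'.
sample_cmap = {0xE6A1: "uni0030", 0xF0B2: "uni0035", 0x78: "x"}
table = build_translation(sample_cmap)

obfuscated = "\uf0b2\ue6a1-1\uf0b2\ue6a1/day"
decoded = obfuscated.translate(table)
print(decoded)
```

Compared with calling replace() once per key, str.translate decodes the whole string in a single pass.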

Method 4:

# coding: utf-8
import base64
import re

from fontTools.ttLib import TTFont
from scrapy import Spider
from scrapy.http import Request


# Build the baseline mapping: raw glyph data -> digit
def get_dict():
    # Base64-encoded font data taken from Maoyan
    font = (
        "d09GRgABAAAAAAgcAAsAAAAAC7gAAQAAAAAAAAAAAAAAAAAAAAAAAAAAAABHU1VCAAABCAAAADMAAABCsP6z7U9TLzIAAAE8AAAARAAAAFZW7ld+Y21hcAAAAYAAAAC6AAACTDNal69nbHlmAAACPAAAA5AAAAQ0l9+jTWhlYWQAAAXMAAAALwAAADYSf7X+aGhlYQAABfwAAAAcAAAAJAeKAzlobXR4AAAGGAAAABIAAAAwGhwAAGxvY2EAAAYsAAAAGgAAABoGLgUubWF4cAAABkgAAAAfAAAAIAEZADxuYW1lAAAGaAAAAVcAAAKFkAhoC3Bvc3QAAAfAAAAAWgAAAI/mSOW8eJxjYGRgYOBikGPQYWB0cfMJYeBgYGGAAJAMY05meiJQDMoDyrGAaQ4gZoOIAgCKIwNPAHicY2Bk0mWcwMDKwMHUyXSGgYGhH0IzvmYwYuRgYGBiYGVmwAoC0lxTGBwYKn6wM+v812GIYdZhuAIUZgTJAQDX7QsReJzFkbENgzAQRb8DgQRSuPQAlFmFfZggDW0mSZUlGMISokBILpAlGkS+OZpI0CZnPUv3bd2d7gM4A4jIncSAekMhxIuqWvUI2arHeDA30FQuqKyxvvVd05fD7LQrxnpKl4U/jl/2QrHi3gkvV06XsVuMFAl7npBTTg4q/SDU/1p/x229n1vGraDa4IjWCNwfrBeCz60Xgp9dIwTv+1II/g+zwI3DaYG7hysEuoCxFugHplRA/gGlP0OcAAB4nD1Tz2/aVhx/z1R26lBCho0LaQEDsQ0kwfEvAjhAcaDNT0YChJCWhqilNFvbLGq6tI22lv2Q2ml/QHuptMMu1Q69d9K0nrZOWw77Aybtutsq9RLBnoHFt/ee/P38/AIIQPcfIAEKYADEZJryUAJAHzp138Fj7A/04gXAocRSUJYYJ+OkKZywwYCf52KUU9LsPOcn8LDL3VrZS56z2622seuFG3q+VnywFhYeBidho72wUtoMZ/Rb6Sa/srZQffvq7j7cSibkLADQBIPvEU4QgHGaRTgWBBXTFC7gxwk+BaUBImGzEPB9hx8mx4Q4lyjQoUU9vQRrpw9+P2AjlCEKEvPBUKnk9biiUdUnLpyfuT6/kCebN/fKk8sSkxbYybPMGfA/5j7CtALABkbRbFUzQWW4X/W1hPmZMWE4joke3V72Sy6R6fuB/jnGfgMkQDNYlVWhPCrTAZoftUCj8yvMX2o0qn+9LMKjjlh8eYzufjzxsYOwfGACTeB4pIsw9dCmoUib6SWnKjGtZy+kPOhaUxXOj8PnVjqohH1hxnrGtymvHyauZW8/XTI+KWuqtfOMz3FasXCvhDkVZpzxxs+vadNT7aZxd/bF66P6qjhV6rydKEdqy/PrlT4PDCAeARBFSZsoSHEKzkKFxwm8xwFR8MA+I57jYS8CmmJQyt8M62I4ydtwArqiE7GNB59vz+3ryXuFsqKRsLU6k6yEwvcLP+jqeEp1a2NDp/Cw2/1o59ZXi9+2n35XnoqWYXJpo76SD0XWQT+D7r+wi/hEBmw0pWdNjOmp74Wv9UzxQJS/ycskybdHLmqpMh/S3UHSFt9Ia/IcWbXHE6WENK1K0+mLT1pXD0//spitHPICuQyTs2I6lR2pRafdZ6tbi86Ry/krX+zWwEkPutgb4EANV1kaNQwnAmb7zDZE4VHAmJMdrqFNOGr3Jj0ZFrtdzgUb9x9mah+Fm/rBnfhlDo2wnHhr7sokmmV6aWbbp43MRGe0LbJk9tqPWyi0R0hx//Tq493XezvZXPvPC5m8mFXEAGs0L5zzj/tDPpkOlT4rwi+FnQ9v3llqCc6r2SuHKb2Rr3+vpH3eupHpPOFzlIOm+EerxYGv77BT2M/m1g587ZvpYGmWGHTOzBsl/DU5r2WqFSNiUGs5eK3zN++bC9Qfx3Ofbs+mht7kstvPKpyXhLuln5zM4xtbl9a1mRr4D3C64MJ4nGNgZGBgAOKQ"
        "yuTT8fw2Xxm4WRhA4PoGS2UE/f8NCwPTeSCXg4EJJAoAIT0KPAB4nGNgZGBg1vmvwxDDwgACQJKRARXwAAAzYgHNeJxjYQCCFAYGJh3iMAA3jAI1AAAAAAAAAAwAQAB6AJQAsAD0ATwBfgGiAegCGgAAeJxjYGRgYOBhMGBgZgABJiDmAkIGhv9gPgMADoMBVgB4nGWRu27CQBRExzzyAClCiZQmirRN0hDMQ6lQOiQoI1HQG7MGI7+0XpBIlw/Id+UT0qXLJ6TPYK4bxyvvnjszd30lA7jGNxycnnu+J3ZwwerENZzjQbhO/Um4QX4WbqKNF+Ez6jPhFrp4FW7jBm+8wWlcshrjQ9hBB5/CNVzhS7hO/Ue4Qf4VbuLWaQqfoePcCbewcLrCbTw67y2lJkZ7Vq/U8qCCNLE93zMm1IZO6KfJUZrr9S7yTFmW50KbPEwTNXQHpTTTiTblbfl+PbI2UIFJYzWlq6MoVZlJt9q37sbabNzvB6K7fhpzPMU1gYGGB8t9xXqJA/cAKRJqPfj0DFdI30hPSPXol6k5vTV2iIps1a3Wi+KmnPqxVhjCxeBfasZUUiSrs+XY82sjqpbp46yGPTFpKr2ak0RkhazwtlR86i42RVfGn93nCip5t5gh/gPYnXLBAHicbcpLEkAwEATQ6fiEiLskBNkS5i42dqocX8ls9eZVdTcpkhj6j4VCgRIVamg0aGHQwaInPPq+Th7j9nnMac+uQfQ8Zdm7bGLpeQiy+5gN8uPoFqIXKTcXwQAA"
    )
    # Decode the base64 font data
    fontdata = base64.b64decode(font)
    with open('./1.woff', 'wb') as file:
        file.write(fontdata)
    online_fonts = TTFont('./1.woff')
    # Dump to an XML file
    # online_fonts.saveXML("text.xml")
    font_dict = dict()
    base_num = {
        "uniE6CD": "2",
        "uniE1F5": "4",
        "uniEF24": "3",
        "uniEA4D": "1",
        "uniF807": "5",
        "uniEF10": "6",
        "uniE118": "7",
        "uniE4F5": "8",
        "uniECFD": "9",
        "uniF38B": "0"
    }
    # Note: _glyphs is a private attribute of the glyph set, so this line
    # may need adjusting for a different fontTools version
    _data = online_fonts.getGlyphSet()._glyphs.glyphs
    for k, v in base_num.items():
        font_dict[_data[k].data] = v
    return font_dict

class MaoYan(Spider):
    name = 'mao_yan_spider'

    def __init__(self):
        self.font_dict = get_dict()
        super(MaoYan, self).__init__()

    def start_requests(self):
        url = 'https://piaofang.maoyan.com/?ver=normal'
        yield Request(url, dont_filter=True, encoding='utf-8')

    def parse(self, response):
        body = response.text
        movie_names = re.findall(r"li class='c1'>\s+<b>([\s\S]+?)</b>", body)
        days = re.findall(r' <li class="solid">[\s\S]+?<br>[\s\S]+?上映(\S{1,5})<[\s\S]+?</li>\s+</ul>', body)
        movie_ids = re.findall(r'<ul class="canTouch" data-com="hrefTo,href:\'/movie/(\d+)\?_v_=yes\'">', body)
        mongy = re.findall(r'em style="margin-left: \.1rem"><i class="cs">([\s\S]+?)</i></em>', body)
        mongy_1 = re.findall(r'<li class="c2 ">\s+?<b><i class="cs">([\s\S]+?)</i></b><br/>\s+?</li>', body)
        bai = re.findall(r'<li class="c3 "><i class="cs">([\s\S]+?)</i></li>', body)
        bai_1 = re.findall(r'<li class="c4 ">[\s]+?<i class="cs">([\s\S]+?%)</i>', body)
        bai_2 = re.findall(r'li class="c5 ">[\s]+?<span style="margin-right:\-\.1rem">[\s]+?<i class="cs">([\s\S]+?%)</i>[\s]+?</span>', body)
        data_woff = get_woff(body)

        # Decode every obfuscated number in a list of matches
        def decode(items):
            return [format_num(i, self.font_dict, data_woff) for i in items]

        mo = decode(mongy)
        bais = decode(bai)
        mo_1 = decode(mongy_1)
        bais_2 = decode(bai_1)
        bais_3 = decode(bai_2)
        cont = zip(movie_ids, movie_names, mo, days, bais, mo_1, bais_2, bais_3)
        for i in cont:
            print(i)

def get_woff(body):
    file_name = '2.woff'
    font = re.findall(r'charset=utf-8;base64,([\s\S]+)\) format\("woff"\)', body)
    if font:
        font = font[0]
        font_data = base64.b64decode(font)
        with open(file_name, 'wb') as file:
            file.write(font_data)
    online_fonts = TTFont(file_name)
    # Same private attribute as in get_dict()
    data = online_fonts.getGlyphSet()._glyphs.glyphs
    return data


def format_num(string, font_dict, data_woff):
    string = str(string)
    # Strip a trailing unit (万, 亿 or %) and append it again after decoding
    unit = ""
    if string.endswith(("万", "%", "亿")):
        unit = string[-1]
        string = string.replace("万", '').replace("%", "").replace("亿", "")
    num = list()
    # The text looks like '&#xe183;&#xf25b;.&#xe1ac;'
    for i in string.split(";"):
        if not i:
            continue
        if i.startswith("."):
            num.append(".")
            code = i[4:].upper()  # drop '.&#x'
        else:
            code = i[3:].upper()  # drop '&#x'
        # Raw glyph data of the current font -> digit from the baseline font
        num.append(font_dict[data_woff["uni%s" % code].data])
    num.append(unit)
    return "".join(num)

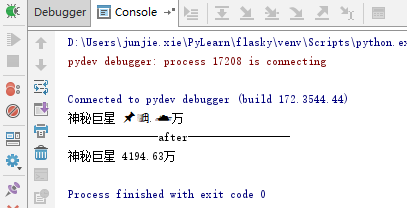

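4. Content replacement

With the key obstacle solved, the rest is straightforward. First request the page to be scraped, extract the dynamic font URL and download the font. Then read the text and replace the custom-font code points with the real characters, which restores the page source to what is actually displayed.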
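The replacement step itself can be sketched on its own. In the raw page source the obfuscated digits appear as numeric entities such as &#xe183; (which is why format_num in Method 4 splits on ";"). The mapping below reuses three pairs from the Method 2 baseline lists; the sample markup and the helper name replace_entities are assumptions for illustration.

```python
import re

ENTITY_RE = re.compile(r"&#x([0-9a-fA-F]+);")


def replace_entities(text, digit_by_code):
    """Replace '&#xXXXX;' entities listed in digit_by_code; leave others untouched."""
    def substitute(match):
        code = match.group(1).lower()
        return digit_by_code.get(code, match.group(0))
    return ENTITY_RE.sub(substitute, text)


# Mapping for one font download: hex code -> real digit.
digit_by_code = {"e183": "5", "ed06": "1", "ebf8": "0"}
raw = '<span class="stonefont">&#xed06;&#xe183;.&#xebf8;</span>万'
print(replace_entities(raw, digit_by_code))
```

The mapping is only valid for the font served with the same response, so it has to be rebuilt for every page fetch.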

The complete code is as follows:

# -*- coding:utf-8 -*-
import re

import requests
from fontTools.ttLib import TTFont
from lxml import html

import woff2otf  # conversion script from GitHub, placed next to this file


# Scrape Maoyan box-office data
class MaoyanSpider:

    # Initialise the request headers
    def __init__(self):
        self.headers = {
            "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8",
            "Accept-Encoding": "gzip, deflate, br",
            "Accept-Language": "zh-CN,zh;q=0.8",
            "Cache-Control": "max-age=0",
            "Connection": "keep-alive",
            "Upgrade-Insecure-Requests": "1",
            "Content-Type": "application/x-www-form-urlencoded; charset=UTF-8",
            "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/59.0.3071.86 Safari/537.36"
        }

    # Fetch the box-office data
    def getNote(self):
        url = "http://maoyan.com"
        host = {'Host': 'maoyan.com',
                'Referer': 'http://maoyan.com/news'}
        # Merge the two dicts (dict.items() cannot be added together in Python 3)
        headers = {**self.headers, **host}

        # Fetch the page content
        r = requests.get(url, headers=headers)
        # print(r.text)
        response = html.fromstring(r.text)

        # Match the woff font URL
        cmp = re.compile(r",\n url\('(//.*.woff)'\) format\('woff'\)")
        rst = cmp.findall(r.text)
        ttf = requests.get("http:" + rst[0], stream=True)
        with open("maoyan.woff", "wb") as f:
            for chunk in ttf.iter_content(chunk_size=1024):
                if chunk:
                    f.write(chunk)

        # Convert the woff font to otf
        woff2otf.convert('maoyan.woff', 'maoyan.otf')

        # Parse the font files; base.otf is the manually labelled baseline font
        baseFont = TTFont('base.otf')
        maoyanFont = TTFont('maoyan.otf')
        uniList = maoyanFont.getGlyphOrder()
        numList = []
        baseNumList = ['.', '3', '5', '1', '2', '7', '0', '6', '9', '8', '4']
        baseUniCode = ['x', 'uniE64B', 'uniE183', 'uniED06', 'uniE1AC', 'uniEA2D', 'uniEBF8',
                       'uniE831', 'uniF654', 'uniF25B', 'uniE3EB']
        for i in range(1, 12):
            maoyanGlyph = maoyanFont['glyf'][uniList[i]]
            for j in range(11):
                baseGlyph = baseFont['glyf'][baseUniCode[j]]
                if maoyanGlyph == baseGlyph:
                    numList.append(baseNumList[j])
                    break
        uniList[1] = 'uni0078'
        # 'uniE183' -> the character U+E183 as it appears in the page text
        charList = [chr(int(uni[3:], 16)) for uni in uniList[1:]]

        # Extract the text to decode
        movie_name = response.cssselect(".ranking-box-wrapper li .ranking-top-moive-name")[0].text_content().replace(' ', '').replace('\n', '')
        movie_wish = response.cssselect(".ranking-box-wrapper li .ranking-top-wish")[0].text_content().replace(' ', '').replace('\n', '')
        print(movie_name, movie_wish)
        print('---------------after-----------------')
        for i in range(len(charList)):
            movie_wish = movie_wish.replace(charList[i], numList[i])
        print(movie_name, movie_wish)


spider = MaoyanSpider()
spider.getNote()

Run the script to parse the page and obtain the box-office data.

5. References

1. Cracking Autohome's font-based anti-scraping in practice (https://zhuanlan.zhihu.com/p/32087297)

2. fontTools.ttLib usage (http://pyopengl.sourceforge.net/pydoc/fontTools.ttLib.html)

3. fonttools source code (https://github.com/fonttools/fonttools)