Data preparation: scraping movie reviews with Python 3

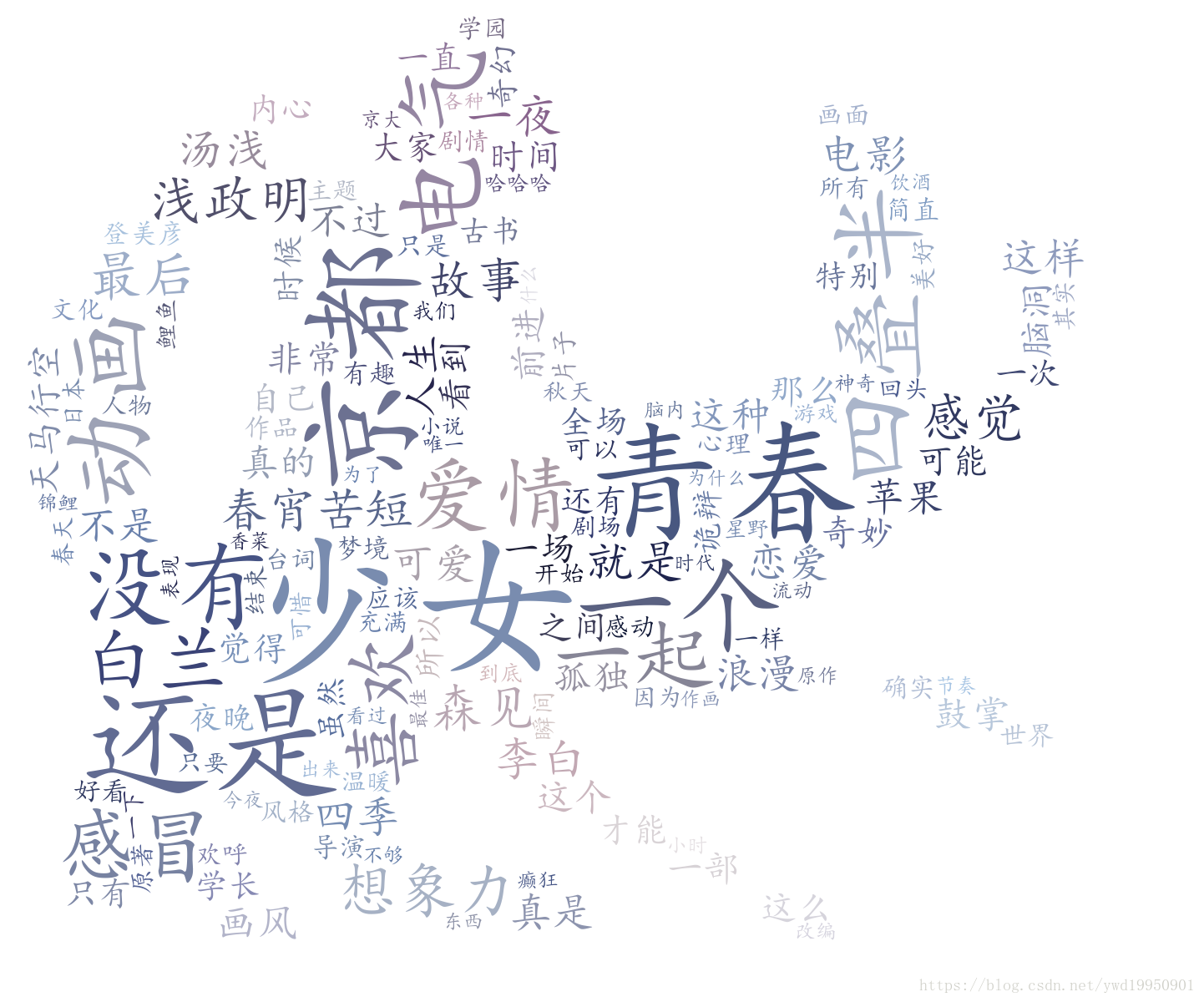

We use the film 春宵苦短,少女前进吧! (夜は短し歩けよ乙女, Night Is Short, Walk On Girl) as an example.

URL:https://movie.douban.com/subject/26935251/
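The crawler requests the film's comments page (`/comments` under the URL above) one page at a time, passing `start`, `limit`, `sort` and `status` as query parameters. A minimal sketch of the page URLs this produces, assuming `requests` encodes the `params` dict the same way as `urllib.parse.urlencode` (which holds for these plain ASCII values); `page_url` is a hypothetical helper, not part of the original code:

```python
from urllib.parse import urlencode

BASE_URL = 'https://movie.douban.com/subject/26935251'


def page_url(start, limit=20):
    """Hypothetical helper: build the comments-page URL for a given offset."""
    params = {'start': start, 'limit': limit, 'sort': 'new_score', 'status': 'P'}
    return BASE_URL + '/comments?' + urlencode(params)


# Offsets of the first three pages: 0, 20, 40
for start in range(0, 60, 20):
    print(page_url(start))
```

With the 5000-comment cap and 20 comments per page, the crawler makes at most `len(range(0, 5000, 20)) == 250` page requests, not counting retries.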

Code:

Create a working folder for the scraped data and the generated images, then crawl the comments:

```python
import json
import os
import time

import requests
from lxml import etree

# Working folder for the scraped comments and the generated images
# (raw string so the backslashes are not treated as escape sequences)
dirs = r'F:\爬虫\词云'
if not os.path.exists(dirs):
    os.makedirs(dirs)
os.chdir(dirs)


def get_comments(url, headers, start, max_restart_num, movie_name, collection):
    """Crawl 20 comments per page from `start` on and append each one to test1.txt as JSON.

    `collection` is unused here; results are written to a file instead of a database.
    """
    if start >= 5000:
        print("Crawled 5000 comments, stopping")
        return

    # Query parameters of the comments page: 20 comments per page, sorted by popularity
    data = {'start': start, 'limit': 20, 'sort': 'new_score', 'status': 'P'}
    response = requests.get(url=url, headers=headers, params=data)
    tree = etree.HTML(response.text)
    comment_item = tree.xpath('//div[@id ="comments"]/div[@class="comment-item"]')
    len_comments = len(comment_item)

    if len_comments > 0:
        for i in range(1, len_comments + 1):
            # XPath positions are 1-based: [i] selects the i-th comment item
            prefix = '//div[@id ="comments"]/div[@class="comment-item"][{}]'.format(i)
            votes = tree.xpath(prefix + '//span[@class="votes"]')
            commenters = tree.xpath(prefix + '//span[@class="comment-info"]/a')
            ratings = tree.xpath(prefix + '//span[@class="comment-info"]/span[contains(@class,"rating")]/@title')
            comments_time = tree.xpath(prefix + '//span[@class="comment-info"]/span[@class="comment-time "]')
            comments = tree.xpath(prefix + '/div[@class="comment"]/p/span')

            comment_dict = {
                'vote': votes[0].text.strip(),
                'commenter': commenters[0].text.strip(),
                # Comments without a star rating have no rating span
                'rating': str(ratings[0]) if ratings else 'null',
                'comments_time': comments_time[0].text.strip(),
                'comments': comments[0].text.strip(),
                'movie_name': movie_name,
            }

            # Append to test1.txt, one JSON object per line
            print("Saving comment #{}".format(start + i))
            print(comment_dict)
            with open('test1.txt', 'a+', encoding='utf-8') as file:
                file.write(json.dumps(comment_dict, ensure_ascii=False) + '\n')

        # Next page: send the current page as Referer and pause 5 s between requests
        headers['Referer'] = response.url
        time.sleep(5)
        return get_comments(url, headers, start + 20, max_restart_num, movie_name, collection)

    if max_restart_num > 0:
        if response.status_code != 200:
            print("fail to crawl, waiting 10s to restart continuing crawl...")
            time.sleep(10)
            return get_comments(url, headers, start, max_restart_num - 1, movie_name, collection)
        # HTTP 200 but no comment items: there are no more pages
        print("finished crawling")
        return

    print("max_restart_num has run out")
    # Record the offset where crawling stopped so it can be resumed later
    with open('log.txt', "a") as fp:
        fp.write('\n{}--latest start:{}'.format(time.strftime('%Y-%m-%d %H:%M:%S', time.localtime()), start))


if __name__ == '__main__':
    base_url = 'https://movie.douban.com/subject/26935251'
    headers = {
        'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/61.0.3163.100 Safari/537.36',
        'Upgrade-Insecure-Requests': '1',
        'Connection': 'keep-alive',
        'Host': 'movie.douban.com',
    }
    start = 0
    # headers must be passed by keyword: the second positional argument of requests.get is params
    response = requests.get(base_url, headers=headers)
    tree = etree.HTML(response.text)
    # The film title is the first <span> inside the page's <h1>
    movie_name = tree.xpath('//div[@id="content"]/h1/span')[0].text.strip()
    url = base_url + '/comments'
    get_comments(url, headers, start, 5, movie_name, None)
```
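Re-running an indexed XPath such as `...div[@class="comment-item"][{}]...` for every field works, but it is simpler to iterate over the `comment_item` elements and query each one with relative paths (with lxml, for example `item.xpath('.//span[@class="votes"]')`). A minimal sketch of that idea using the standard library's `xml.etree.ElementTree`, whose path syntax is only a limited XPath subset (no `contains()`, no `/@title` attribute steps), on a hypothetical, simplified fragment of the comments markup; `parse_items` is an illustrative helper, not part of the original code:

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified markup modeled on the XPath expressions above
SAMPLE = """
<div id="comments">
  <div class="comment-item">
    <span class="votes">12</span>
    <span class="comment-info"><a>user_a</a><span class="rating" title="力荐"></span></span>
    <div class="comment"><p><span>很好看</span></p></div>
  </div>
  <div class="comment-item">
    <span class="votes">3</span>
    <span class="comment-info"><a>user_b</a></span>
    <div class="comment"><p><span>还行</span></p></div>
  </div>
</div>
"""


def parse_items(markup):
    root = ET.fromstring(markup.strip())
    records = []
    # Iterate over the item elements and query each one with relative paths
    for item in root.findall("div[@class='comment-item']"):
        rating = item.find(".//span[@class='rating']")
        records.append({
            'vote': item.find(".//span[@class='votes']").text.strip(),
            'commenter': item.find(".//span[@class='comment-info']/a").text.strip(),
            # Comments without a rating get 'null', as in the crawler
            'rating': rating.get('title') if rating is not None else 'null',
            'comments': item.find("div[@class='comment']/p/span").text.strip(),
        })
    return records


for record in parse_items(SAMPLE):
    print(record)
```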

The scraped comments are appended to test1.txt, one JSON object per line.
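Because each line is written with `json.dumps(..., ensure_ascii=False)`, it can be read back with `json.loads` rather than `eval` (which would execute whatever text is in the file). A minimal round-trip sketch, using an in-memory buffer instead of the real test1.txt; the record values are made up:

```python
import io
import json

# Made-up record with the same keys the crawler writes
record = {'vote': '12', 'commenter': 'user_a', 'rating': '力荐',
          'comments_time': '2018-01-01', 'comments': '很好看', 'movie_name': 'example'}

buffer = io.StringIO()
# Writing: one JSON object per line; ensure_ascii=False keeps Chinese characters readable
buffer.write(json.dumps(record, ensure_ascii=False) + '\n')

# Reading: parse each non-empty line with json.loads
buffer.seek(0)
records = [json.loads(line) for line in buffer if line.strip()]
print(records[0]['rating'])
```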

Data processing

```python
import json

# Read the scraped comments back (one JSON object per line)
with open('test1.txt', 'r', encoding='utf-8') as f:
    data = f.readlines()

# Keep only the comments rated 力荐 ("highly recommended") or 推荐 ("recommended")
array = []
for line in data:
    temp = json.loads(line)
    if temp['rating'] in ("力荐", "推荐"):
        array.append(temp)
```
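The rating test has to compare against both values explicitly. Written as `temp['rating']=="力荐"or"推荐"`, Python parses it as `(temp['rating'] == "力荐") or "推荐"`, and the non-empty string `"推荐"` is always truthy, so every comment would pass. A short demonstration on a made-up list of Douban rating labels:

```python
ratings = ['力荐', '推荐', '还行', 'null']

# Buggy form: parsed as (r == '力荐') or '推荐', which is always truthy
buggy = [r for r in ratings if r == '力荐' or '推荐']

# Correct form: membership test against both values
correct = [r for r in ratings if r in ('力荐', '推荐')]

print(buggy)    # every rating passes
print(correct)  # only the two recommended ratings
```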

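Split the selected comments into words with jieba, keep words of 2 to 4 characters, and keep only the words that occur at least 5 times:

```python
import jieba
from collections import Counter

words_list = []
for doc in array:
    comment = doc['comments']
    # Split the comment into words (precise mode)
    t_list = jieba.lcut(str(comment), cut_all=False)
    # No stopword filtering: keep words longer than 1 and shorter than 5 characters
    for word in t_list:
        if 1 < len(word) < 5:
            words_list.append(word)

words_dict = dict(Counter(words_list))
# Keep words whose count is greater than 4, i.e. at least 5
num = 5 - 1
dict1 = {k: v for k, v in words_dict.items() if v > num}
```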
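The two filters above (word length, then frequency) can be checked without jieba on a pre-tokenized list. A minimal sketch with made-up tokens; `frequent_words` and `min_count` are hypothetical names, and `c >= min_count` is the same condition as `v > num` with `num = min_count - 1`:

```python
from collections import Counter

# Made-up tokens standing in for jieba.lcut output
tokens = ['电影', '的', '画面', '电影', '好看', '电影', '画面', '一', '非常非常好看']


def frequent_words(tokens, min_count):
    # Keep words of 2-4 characters, as in the length filter above
    words = [w for w in tokens if 1 < len(w) < 5]
    counts = Counter(words)
    # c >= min_count is equivalent to c > min_count - 1
    return {w: c for w, c in counts.items() if c >= min_count}


print(frequent_words(tokens, 2))
```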

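## Generating the word cloud

```python
import os

import matplotlib.pyplot as plt
import numpy as np
from matplotlib.font_manager import FontProperties
from PIL import Image
from wordcloud import WordCloud, ImageColorGenerator

# Word-cloud settings
mask_color_path = "bg_1.png"  # background (mask) image path
font_path = r'C:\Windows\Fonts\simkai.ttf'  # Chinese font, used by both WordCloud and matplotlib
imgname1 = "color_by_default.png"  # output name 1 (shape from the background image only)
imgname2 = "color_by_img.png"  # output name 2 (colors also taken from the background image)
width = 1000
height = 860
margin = 2

# Load the background image; scipy.misc.imread no longer exists in current SciPy, so use Pillow
mask_coloring = np.array(Image.open(mask_color_path))

wc = WordCloud(font_path=font_path,
               background_color="white",  # background color
               max_words=150,  # maximum number of words shown
               mask=mask_coloring,  # shape the cloud with the background image
               max_font_size=200,  # maximum font size
               # random_state=42,
               # Default image size; with a mask, the output takes the mask's size instead.
               # margin is the spacing around each word
               width=width, height=height, margin=margin,
               )

# Lay out the words from the frequency dictionary
wc.generate_from_frequencies(dict1)

# Recolor the words with the colors of the background image
bg_color = ImageColorGenerator(mask_coloring)
wc.recolor(color_func=bg_color)

# Chinese font for the matplotlib title (same system font file as above)
myfont = FontProperties(fname=font_path)
plt.figure()
title = '词云'  # "word cloud"
plt.title(title, fontproperties=myfont)
plt.imshow(wc)
plt.axis("off")
plt.show()

save = True
if save:
    # dirs is the working folder created in the crawling step
    os.chdir(dirs)
    wc.to_file(imgname2)
```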

This produces the word cloud.

## Full code

```python
import json
import os
import time
from collections import Counter

import jieba
import matplotlib.pyplot as plt
import numpy as np
import requests
from lxml import etree
from matplotlib.font_manager import FontProperties
from PIL import Image
from wordcloud import WordCloud, ImageColorGenerator

# Working folder for the scraped comments and the generated images
dirs = r'F:\爬虫\词云'
if not os.path.exists(dirs):
    os.makedirs(dirs)
os.chdir(dirs)


def get_comments(url, headers, start, max_restart_num, movie_name, collection):
    """Crawl 20 comments per page from `start` on and append each one to test1.txt as JSON.

    `collection` is unused here; results are written to a file instead of a database.
    """
    if start >= 5000:
        print("Crawled 5000 comments, stopping")
        return

    data = {'start': start, 'limit': 20, 'sort': 'new_score', 'status': 'P'}
    response = requests.get(url=url, headers=headers, params=data)
    tree = etree.HTML(response.text)
    comment_item = tree.xpath('//div[@id ="comments"]/div[@class="comment-item"]')
    len_comments = len(comment_item)

    if len_comments > 0:
        for i in range(1, len_comments + 1):
            # XPath positions are 1-based: [i] selects the i-th comment item
            prefix = '//div[@id ="comments"]/div[@class="comment-item"][{}]'.format(i)
            votes = tree.xpath(prefix + '//span[@class="votes"]')
            commenters = tree.xpath(prefix + '//span[@class="comment-info"]/a')
            ratings = tree.xpath(prefix + '//span[@class="comment-info"]/span[contains(@class,"rating")]/@title')
            comments_time = tree.xpath(prefix + '//span[@class="comment-info"]/span[@class="comment-time "]')
            comments = tree.xpath(prefix + '/div[@class="comment"]/p/span')

            comment_dict = {
                'vote': votes[0].text.strip(),
                'commenter': commenters[0].text.strip(),
                # Comments without a star rating have no rating span
                'rating': str(ratings[0]) if ratings else 'null',
                'comments_time': comments_time[0].text.strip(),
                'comments': comments[0].text.strip(),
                'movie_name': movie_name,
            }

            # Append to test1.txt, one JSON object per line
            print("Saving comment #{}".format(start + i))
            print(comment_dict)
            with open('test1.txt', 'a+', encoding='utf-8') as file:
                file.write(json.dumps(comment_dict, ensure_ascii=False) + '\n')

        # Next page: send the current page as Referer and pause 5 s between requests
        headers['Referer'] = response.url
        time.sleep(5)
        return get_comments(url, headers, start + 20, max_restart_num, movie_name, collection)

    if max_restart_num > 0:
        if response.status_code != 200:
            print("fail to crawl, waiting 10s to restart continuing crawl...")
            time.sleep(10)
            return get_comments(url, headers, start, max_restart_num - 1, movie_name, collection)
        # HTTP 200 but no comment items: there are no more pages
        print("finished crawling")
        return

    print("max_restart_num has run out")
    # Record the offset where crawling stopped so it can be resumed later
    with open('log.txt', "a") as fp:
        fp.write('\n{}--latest start:{}'.format(time.strftime('%Y-%m-%d %H:%M:%S', time.localtime()), start))


def get_dict(filename, number):
    """Return {word: count} for 2-4 character words that occur at least `number` times
    in the comments rated 力荐 or 推荐."""
    with open(filename, 'r', encoding='utf-8') as f:
        data = f.readlines()

    array = []
    for line in data:
        temp = json.loads(line)
        if temp['rating'] in ("力荐", "推荐"):
            array.append(temp)

    words_list = []
    for doc in array:
        t_list = jieba.lcut(str(doc['comments']), cut_all=False)
        # No stopword filtering: keep words longer than 1 and shorter than 5 characters
        for word in t_list:
            if 1 < len(word) < 5:
                words_list.append(word)

    words_dict = dict(Counter(words_list))
    num = number - 1
    return {k: v for k, v in words_dict.items() if v > num}


def get_wordcloud(dict1, save=False):
    """Draw a word cloud shaped and colored by bg_1.png; optionally save it as color_by_img.png."""
    mask_color_path = "bg_1.png"  # background (mask) image path
    font_path = r'C:\Windows\Fonts\simkai.ttf'  # Chinese font for WordCloud and matplotlib
    imgname1 = "color_by_default.png"  # output name 1 (shape from the background image only; unused)
    imgname2 = "color_by_img.png"  # output name 2 (colors taken from the background image)
    width, height, margin = 1000, 860, 2

    # scipy.misc.imread no longer exists in current SciPy; load the image with Pillow instead
    mask_coloring = np.array(Image.open(mask_color_path))

    wc = WordCloud(font_path=font_path,
                   background_color="white",  # background color
                   max_words=150,  # maximum number of words shown
                   mask=mask_coloring,  # shape taken from the background image
                   max_font_size=200,  # maximum font size
                   # random_state=42,
                   # Default size; with a mask the output takes the mask's size. margin = spacing around words
                   width=width, height=height, margin=margin)

    wc.generate_from_frequencies(dict1)
    # Recolor the words with the background image's colors
    wc.recolor(color_func=ImageColorGenerator(mask_coloring))

    myfont = FontProperties(fname=font_path)
    plt.figure()
    plt.title('词云', fontproperties=myfont)  # title: "word cloud"
    plt.imshow(wc)
    plt.axis("off")
    plt.show()

    if save:
        os.chdir(dirs)
        wc.to_file(imgname2)


if __name__ == '__main__':
    base_url = 'https://movie.douban.com/subject/26935251'
    headers = {
        'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/61.0.3163.100 Safari/537.36',
        'Upgrade-Insecure-Requests': '1',
        'Connection': 'keep-alive',
        'Host': 'movie.douban.com',
    }
    start = 0
    # headers must be passed by keyword: the second positional argument of requests.get is params
    response = requests.get(base_url, headers=headers)
    tree = etree.HTML(response.text)
    movie_name = tree.xpath('//div[@id="content"]/h1/span')[0].text.strip()
    url = base_url + '/comments'
    filename = 'test1.txt'

    get_comments(url, headers, start, 5, movie_name, None)
    dict1 = get_dict(filename, 5)
    get_wordcloud(dict1, save=True)
```
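`get_comments` calls itself once per page, so 5000 comments means 250 nested calls; that stays under Python's default recursion limit of 1000, but a loop makes the paging and retry rules easier to follow. A minimal sketch of the same logic as a loop, assuming a hypothetical `fetch_page(start)` callable that stands in for the request-and-parse step and returns `(status_code, comments)`; the sleeps and file writes are left out, and `crawl` is an illustrative name:

```python
def crawl(fetch_page, max_comments=5000, page_size=20, max_restart_num=5):
    """Loop version of get_comments' paging and retry rules (sketch)."""
    start = 0
    collected = []
    while start < max_comments:
        status, comments = fetch_page(start)
        if comments:
            collected.extend(comments)
            start += page_size          # move on to the next page
        elif status != 200 and max_restart_num > 0:
            max_restart_num -= 1        # failed request: retry the same page
        else:
            break                       # empty 200 page (no more comments) or retries used up
    return collected, start


# Hypothetical stub: three full pages, one failed request in between, then an empty page
responses = iter([(200, ['c'] * 20), (503, []), (200, ['c'] * 20), (200, ['c'] * 20), (200, [])])


def fake_fetch(start):
    return next(responses)


comments, last_start = crawl(fake_fetch)
print(len(comments), last_start)
```

As in the recursive version, the retry budget is shared across the whole crawl rather than reset after each successful page.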