FaceNet: A Unified Embedding for Face Recognition and Clustering

Paper download link: https://arxiv.org/abs/1503.03832?context=cs

https://blog.csdn.net/chzylucky/article/details/79716272

Abstract

Despite significant recent advances in the field of face recognition [10, 14, 15, 17], implementing face verification and recognition efficiently at scale presents serious challenges to current approaches. In this paper we present a system, called FaceNet, that directly learns a mapping from face images to a compact Euclidean space where distances directly correspond to a measure of face similarity. Once this space has been produced, tasks such as face recognition, verification and clustering can be easily implemented using standard techniques with FaceNet embeddings as feature vectors.

Our method uses a deep convolutional network trained to directly optimize the embedding itself, rather than an intermediate bottleneck layer as in previous deep learning approaches. To train, we use triplets of roughly aligned matching / non-matching face patches generated using a novel online triplet mining method. The benefit of our approach is much greater representational efficiency: we achieve state-of-the-art face recognition performance using only 128-bytes per face.

On the widely used Labeled Faces in the Wild (LFW) dataset, our system achieves a new record accuracy of 99.63%. On YouTube Faces DB it achieves 95.12%. Our system cuts the error rate in comparison to the best published result [15] by 30% on both datasets.

We also introduce the concept of harmonic embeddings and a harmonic triplet loss, which describe different versions of face embeddings (produced by different networks) that are compatible with each other and allow direct comparison between them.

1. Introduction

In this paper we present a unified system for face verification (is this the same person), recognition (who is this person) and clustering (find common people among these faces). Our method is based on learning a Euclidean embedding per image using a deep convolutional network. The network is trained such that the squared L2 distances in the embedding space directly correspond to face similarity: faces of the same person have small distances and faces of distinct people have large distances.

Once this embedding has been produced, then the aforementioned tasks become straight-forward: face verification simply involves thresholding the distance between the two embeddings; recognition becomes a k-NN classification problem; and clustering can be achieved using off-the-shelf techniques such as k-means or agglomerative clustering.
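The three tasks described above can be sketched in a few lines once embeddings are available. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, the default threshold of 1.1 is borrowed from the Figure 1 caption purely as an example value, and the embeddings are assumed to be plain NumPy vectors.

```python
import numpy as np

def squared_l2(a, b):
    """Squared Euclidean distance between two embedding vectors."""
    diff = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    return float(np.dot(diff, diff))

def same_person(emb_a, emb_b, threshold=1.1):
    """Verification: threshold the squared distance (threshold is illustrative)."""
    return squared_l2(emb_a, emb_b) < threshold

def knn_identify(query, gallery, labels, k=3):
    """Recognition: majority vote among the k nearest gallery embeddings."""
    gallery = np.asarray(gallery, dtype=float)
    dists = np.sum((gallery - np.asarray(query, dtype=float)) ** 2, axis=1)
    nearest = np.argsort(dists)[:k]
    votes = {}
    for idx in nearest:
        votes[labels[idx]] = votes.get(labels[idx], 0) + 1
    return max(votes, key=votes.get)

# Toy 2-D "embeddings" for two identities (made-up data).
gallery = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
labels = ["a", "a", "b", "b"]
predicted = knn_identify([1.0, 0.05], gallery, labels, k=3)
```

Clustering would follow the same pattern, passing the embedding matrix to any off-the-shelf k-means or agglomerative clustering routine.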

Previous face recognition approaches based on deep networks use a classification layer [15, 17] trained over a set of known face identities and then take an intermediate bottleneck layer as a representation used to generalize recognition beyond the set of identities used in training. The downsides of this approach are its indirectness and its inefficiency: one has to hope that the bottleneck representation generalizes well to new faces; and by using a bottleneck layer the representation size per face is usually very large (1000s of dimensions). Some recent work [15] has reduced this dimensionality using PCA, but this is a linear transformation that can be easily learnt in one layer of the network.
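To make the last point concrete, the sketch below shows that a PCA projection is an affine map and can therefore be written as a single fully connected layer with a weight matrix and a bias. The feature matrix is random toy data and the helper names (`fit_pca`, `pca_as_linear_layer`) are hypothetical; it only illustrates the equivalence and is not taken from the paper.

```python
import numpy as np

def fit_pca(features, n_components):
    """Return the feature mean and the top principal directions (as rows)."""
    mean = features.mean(axis=0)
    # Rows of vt are principal directions, sorted by decreasing variance.
    _, _, vt = np.linalg.svd(features - mean, full_matrices=False)
    return mean, vt[:n_components]

def pca_as_linear_layer(mean, components):
    """Express the PCA projection as y = x @ weight + bias."""
    weight = components.T      # shape (input_dim, n_components)
    bias = -mean @ weight      # shape (n_components,)
    return weight, bias

rng = np.random.default_rng(0)
features = rng.normal(size=(200, 16))   # toy "bottleneck" features

mean, components = fit_pca(features, n_components=4)
weight, bias = pca_as_linear_layer(mean, components)

projected_pca = (features - mean) @ components.T
projected_layer = features @ weight + bias   # same result, one linear layer
```

Because the two projections agree, a network with one extra linear layer can represent the PCA step and, in principle, learn a better projection end to end.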

In contrast to these approaches, FaceNet directly trains its output to be a compact 128-D embedding using a triplet-based loss function based on LMNN [19]. Our triplets consist of two matching face thumbnails and a non-matching face thumbnail, and the loss aims to separate the positive pair from the negative by a distance margin. The thumbnails are tight crops of the face area; no 2D or 3D alignment, other than scale and translation, is performed.

Choosing which triplets to use turns out to be very important for achieving good performance and, inspired by curriculum learning [1], we present a novel online negative exemplar mining strategy which ensures consistently increasing difficulty of triplets as the network trains. To improve clustering accuracy, we also explore hard-positive mining techniques which encourage spherical clusters for the embeddings of a single person.

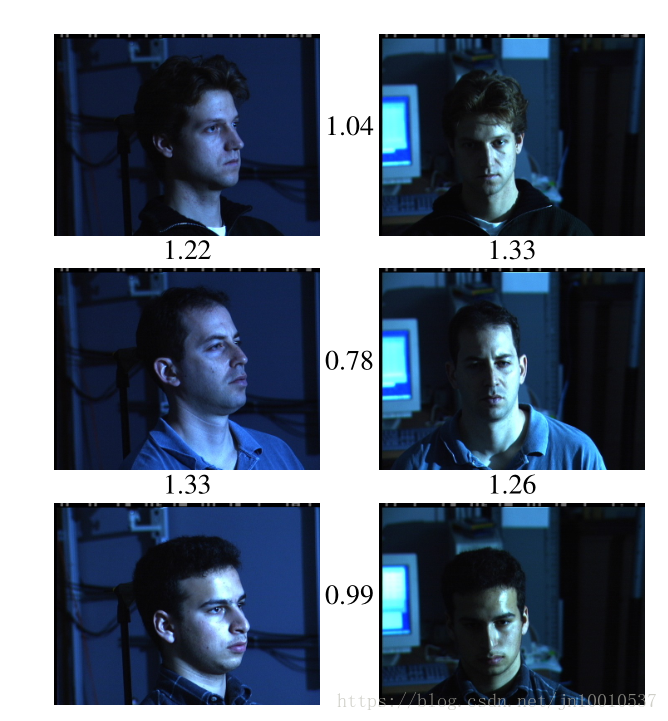

As an illustration of the incredible variability that our method can handle, see Figure 1. Shown are image pairs from PIE [13] that previously were considered to be very difficult for face verification systems.

Figure 1. Illumination and Pose invariance. Pose and illumination have been a long standing problem in face recognition. This figure shows the output distances of FaceNet between pairs of faces of the same and a different person in different pose and illumination combinations. A distance of 0.0 means the faces are identical, 4.0 corresponds to the opposite spectrum, two different identities. You can see that a threshold of 1.1 would classify every pair correctly.

An overview of the rest of the paper is as follows: in section 2 we review the literature in this area; section 3.1 defines the triplet loss and section 3.2 describes our novel triplet selection and training procedure; in section 3.3 we describe the model architecture used. Finally, in sections 4 and 5 we present some quantitative results of our embeddings and also qualitatively explore some clustering results.

2. Related Work

Similarly to other recent works which employ deep networks [15, 17], our approach is a purely data driven method which learns its representation directly from the pixels of the face. Rather than using engineered features, we use a large dataset of labelled faces to attain the appropriate invariances to pose, illumination, and other variational conditions.

In this paper we explore two different deep network architectures that have been recently used to great success in the computer vision community. Both are deep convolutional networks [8, 11]. The first architecture is based on the Zeiler&Fergus [22] model which consists of multiple interleaved layers of convolutions, non-linear activations, local response normalizations, and max pooling layers. We additionally add several 1×1×d convolution layers inspired by the work of [9]. The second architecture is based on the Inception model of Szegedy et al. which was recently used as the winning approach for ImageNet 2014 [16]. These networks use mixed layers that run several different convolutional and pooling layers in parallel and concatenate their responses. We have found that these models can reduce the number of parameters by up to 20 times and have the potential to reduce the number of FLOPS required for comparable performance.
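As a small illustration of what a 1×1 convolution does, the sketch below applies one to a toy feature map. It assumes a channels-last layout and reads the d in 1×1×d as the channel depth of the input; the function name `conv1x1` is hypothetical. The point is that a 1×1 convolution is the same linear map applied independently at every spatial position, which is how it can change the number of channels cheaply.

```python
import numpy as np

def conv1x1(feature_map, weight, bias):
    """Apply a 1x1 convolution.

    feature_map: (H, W, C_in), weight: (C_in, C_out), bias: (C_out,)
    """
    # Contract the channel axis at every spatial location.
    return np.einsum("hwc,cd->hwd", feature_map, weight) + bias

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 5, 8))   # toy feature map with 8 channels
w = rng.normal(size=(8, 3))      # reduce 8 channels to 3
b = np.zeros(3)

y = conv1x1(x, w, b)             # shape (5, 5, 3)
```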

There is a vast corpus of face verification and recognition works. Reviewing it is out of the scope of this paper so we will only briefly discuss the most relevant recent work.

The works of [15, 17, 23] all employ a complex system of multiple stages, that combines the output of a deep convolutional network with PCA for dimensionality reduction and an SVM for classification.

Zhenyao et al. [23] employ a deep network to “warp” faces into a canonical frontal view and then learn a CNN that classifies each face as belonging to a known identity. For face verification, PCA on the network output in conjunction with an ensemble of SVMs is used.

Taigman et al. [17] propose a multi-stage approach that aligns faces to a general 3D shape model. A multi-class network is trained to perform the face recognition task on over four thousand identities. The authors also experimented with a so-called Siamese network where they directly optimize the L1-distance between two face features. Their best performance on LFW (97.35%) stems from an ensemble of three networks using different alignments and color channels. The predicted distances (non-linear SVM predictions based on the χ² kernel) of those networks are combined using a non-linear SVM.

Sun et al. [14, 15] propose a compact and therefore relatively cheap to compute network. They use an ensemble of 25 of these networks, each operating on a different face patch. For their final performance on LFW (99.47% [15]) the authors combine 50 responses (regular and flipped). Both PCA and a Joint Bayesian model [2] that effectively correspond to a linear transform in the embedding space are employed. Their method does not require explicit 2D/3D alignment. The networks are trained by using a combination of classification and verification loss. The verification loss is similar to the triplet loss we employ [12, 19], in that it minimizes the L2-distance between faces of the same identity and enforces a margin between the distance of faces of different identities. The main difference is that only pairs of images are compared, whereas the triplet loss encourages a relative distance constraint.

A similar loss to the one used here was explored in Wang et al. [18] for ranking images by semantic and visual similarity.

3. Method

FaceNet uses a deep convolutional network. We discuss two different core architectures: The Zeiler&Fergus [22] style networks and the recent Inception [16] type networks. The details of these networks are described in section 3.3.

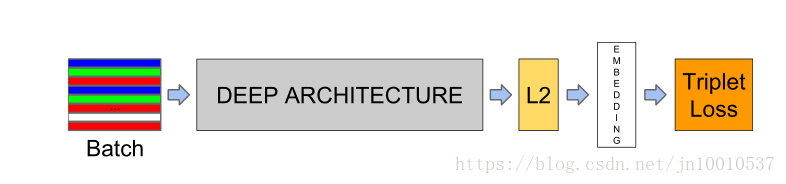

Given the model details, and treating it as a black box (see Figure 2), the most important part of our approach lies in the end-to-end learning of the whole system. To this end we employ the triplet loss that directly reflects what we want to achieve in face verification, recognition and clustering. Namely, we strive for an embedding $f(x)$, from an image $x$ into a feature space $\mathbb{R}^d$, such that the squared distance between all faces of the same identity, independent of imaging conditions, is small, whereas the squared distance between a pair of face images from different identities is large.

Figure 2. Model structure. Our network consists of a batch input layer and a deep CNN followed by L2 normalization, which results in the face embedding. This is followed by the triplet loss during training.
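The L2 normalization step in Figure 2 can be sketched as follows. This is an illustrative snippet with made-up 2-D vectors and a hypothetical helper `l2_normalize`, not the network's actual layer. For unit-length embeddings a and b, the squared distance equals 2 − 2(a·b), so it always lies between 0 and 4, which matches the distance range quoted in the Figure 1 caption.

```python
import numpy as np

def l2_normalize(x, eps=1e-12):
    """Scale each row of x to unit Euclidean length."""
    x = np.asarray(x, dtype=float)
    norm = np.linalg.norm(x, axis=-1, keepdims=True)
    return x / np.maximum(norm, eps)   # eps guards against division by zero

# Made-up raw network outputs for three images.
raw = np.array([[3.0, 4.0], [-6.0, -8.0], [4.0, -3.0]])
emb = l2_normalize(raw)

d_identical = np.sum((emb[0] - emb[0]) ** 2)    # same vector
d_opposite = np.sum((emb[0] - emb[1]) ** 2)     # opposite directions
d_orthogonal = np.sum((emb[0] - emb[2]) ** 2)   # perpendicular directions
```

Here the three distances come out as 0, 4 and 2 respectively, the minimum, maximum and midpoint of the possible range.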

Although we did not directly compare to other losses, e.g. the one using pairs of positives and negatives, as used in [14] Eq. (2), we believe that the triplet loss is more suitable for face verification. The motivation is that the loss from [14] encourages all faces of one identity to be projected onto a single point in the embedding space. The triplet loss, however, tries to enforce a margin between each pair of faces from one person to all other faces. This allows the faces for one identity to live on a manifold, while still enforcing the distance and thus discriminability to other identities.

The following section describes this triplet loss and how it can be learned efficiently at scale.

3.1. Triplet Loss

The embedding is represented by $f(x) \in \mathbb{R}^d$. It embeds an image $x$ into a $d$-dimensional Euclidean space. Additionally, we constrain this embedding to live on the $d$-dimensional hypersphere, i.e. $\|f(x)\|_2 = 1$. This loss is motivated in [19] in the context of nearest-neighbor classification. Here we want to ensure that an image $x_i^a$ (anchor) of a specific person is closer to all other images $x_i^p$ (positive) of the same person than it is to any image $x_i^n$ (negative) of any other person. This is visualized in Figure 3.
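The formulas for this section did not survive in this copy. As given in the published FaceNet paper, with $\alpha$ the margin enforced between positive and negative pairs, $\mathcal{T}$ the set of all possible triplets in the training set and $N$ its cardinality, the constraint referred to as Eq. (1) is:

$$
\|f(x_i^a) - f(x_i^p)\|_2^2 + \alpha < \|f(x_i^a) - f(x_i^n)\|_2^2, \quad \forall \left(f(x_i^a), f(x_i^p), f(x_i^n)\right) \in \mathcal{T} \tag{1}
$$

The loss being minimized is then:

$$
L = \sum_{i}^{N} \left[ \|f(x_i^a) - f(x_i^p)\|_2^2 - \|f(x_i^a) - f(x_i^n)\|_2^2 + \alpha \right]_+
$$

where $[z]_+ = \max(z, 0)$, so a triplet that already satisfies the constraint contributes zero to the loss.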

Generating all possible triplets would result in many triplets that are easily satisfied (i.e. fulfill the constraint in Eq. (1)). These triplets would not contribute to the training and result in slower convergence, as they would still be passed through the network. It is crucial to select hard triplets, that are active and can therefore contribute to improving the model. The following section talks about the different approaches we use for the triplet selection.
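The distinction between easily satisfied and active triplets can be illustrated with a short sketch. It assumes the usual hinge form of a margin constraint (positive distance plus a margin should stay below the negative distance); the margin of 0.2, the function name and the toy unit-length vectors are assumptions chosen for illustration only.

```python
import numpy as np

def triplet_terms(anchor, positive, negative, margin=0.2):
    """Per-triplet hinge terms; zero means the constraint is already met."""
    d_pos = np.sum((anchor - positive) ** 2, axis=1)
    d_neg = np.sum((anchor - negative) ** 2, axis=1)
    return np.maximum(d_pos - d_neg + margin, 0.0)

# Two toy triplets sharing the same anchor and positive.
anchor = np.array([[1.0, 0.0], [1.0, 0.0]])
positive = np.array([[0.8, 0.6], [0.8, 0.6]])
negative = np.array([[-1.0, 0.0],     # far away: easily satisfied
                     [0.96, 0.28]])   # closer than the positive: violated

terms = triplet_terms(anchor, positive, negative)
active = terms > 0          # only these triplets produce a gradient
loss = terms.sum()
```

In this example only the second triplet is active. The first contributes nothing to the loss yet would still cost a forward pass, which is why selecting active triplets matters for convergence speed.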

3.2. Triplet Selection

Acknowledgments

We would like to thank Johannes Steffens for his discussions and great insights on face recognition and Christian Szegedy for providing new network architectures like [16] and discussing network design choices. Also we are indebted to the DistBelief [4] team for their support especially to Rajat Monga for help in setting up efficient training schemes.

Also our work would not have been possible without the support of Chuck Rosenberg, Hartwig Adam, and Simon Han.

References

[1] Y. Bengio, J. Louradour, R. Collobert, and J. Weston. Curriculum learning. In Proc. of ICML, New York, NY, USA, 2009.

[2] D. Chen, X. Cao, L. Wang, F. Wen, and J. Sun. Bayesian face revisited: A joint formulation. In Proc. ECCV, 2012.

[3] D. Chen, S. Ren, Y. Wei, X. Cao, and J. Sun. Joint cascade face detection and alignment. In Proc. ECCV, 2014.

[4] J. Dean, G. Corrado, R. Monga, K. Chen, M. Devin, M. Mao, M. Ranzato, A. Senior, P. Tucker, K. Yang, Q. V. Le, and A. Y. Ng. Large scale distributed deep networks. In P. Bartlett, F. Pereira, C. Burges, L. Bottou, and K. Weinberger, editors, NIPS, pages 1232–1240. 2012.

[5] J. Duchi, E. Hazan, and Y. Singer. Adaptive subgradient methods for online learning and stochastic optimization. J. Mach. Learn. Res., 12:2121–2159, July 2011.

[6] I. J. Goodfellow, D. Warde-Farley, M. Mirza, A. Courville, and Y. Bengio. Maxout networks. In ICML, 2013.

[7] G. B. Huang, M. Ramesh, T. Berg, and E. Learned-Miller. Labeled faces in the wild: A database for studying face recognition in unconstrained environments. Technical Report 07-49, University of Massachusetts, Amherst, October 2007.

[8] Y. LeCun, B. Boser, J. S. Denker, D. Henderson, R. E. Howard, W. Hubbard, and L. D. Jackel. Backpropagation applied to handwritten zip code recognition. Neural Computation, 1(4):541–551, Dec. 1989.

[9] M. Lin, Q. Chen, and S. Yan. Network in network. CoRR, abs/1312.4400, 2013.

[10] C. Lu and X. Tang. Surpassing human-level face verification performance on LFW with GaussianFace. CoRR, abs/1404.3840, 2014.

[11] D. E. Rumelhart, G. E. Hinton, and R. J. Williams. Learning representations by back-propagating errors. Nature, 1986.

[12] M. Schultz and T. Joachims. Learning a distance metric from relative comparisons. In S. Thrun, L. Saul, and B. Schölkopf, editors, NIPS, pages 41–48. MIT Press, 2004.

[13] T. Sim, S. Baker, and M. Bsat. The CMU pose, illumination, and expression (PIE) database. In Proc. FG, 2002.

[14] Y. Sun, X. Wang, and X. Tang. Deep learning face representation by joint identification-verification. CoRR, abs/1406.4773, 2014.

[15] Y. Sun, X. Wang, and X. Tang. Deeply learned face representations are sparse, selective, and robust. CoRR, abs/1412.1265, 2014.

[16] C. Szegedy, W. Liu, Y. Jia, P. Sermanet, S. Reed, D. Anguelov, D. Erhan, V. Vanhoucke, and A. Rabinovich. Going deeper with convolutions. CoRR, abs/1409.4842, 2014.

[17] Y. Taigman, M. Yang, M. Ranzato, and L. Wolf. DeepFace: Closing the gap to human-level performance in face verification. In IEEE Conf. on CVPR, 2014.

[18] J. Wang, Y. Song, T. Leung, C. Rosenberg, J. Wang, J. Philbin, B. Chen, and Y. Wu. Learning fine-grained image similarity with deep ranking. CoRR, abs/1404.4661, 2014.

[19] K. Q. Weinberger, J. Blitzer, and L. K. Saul. Distance metric learning for large margin nearest neighbor classification. In NIPS. MIT Press, 2006.

[20] D. R. Wilson and T. R. Martinez. The general inefficiency of batch training for gradient descent learning. Neural Networks, 16(10):1429–1451, 2003.

[21] L. Wolf, T. Hassner, and I. Maoz. Face recognition in unconstrained videos with matched background similarity. In IEEE Conf. on CVPR, 2011.

[22] M. D. Zeiler and R. Fergus. Visualizing and understanding convolutional networks. CoRR, abs/1311.2901, 2013.

[23] Z. Zhu, P. Luo, X. Wang, and X. Tang. Recover canonical-view faces in the wild with deep neural networks. CoRR, abs/1404.3543, 2014.