Copyright notice: please notify the author before reposting. https://blog.csdn.net/weixin_42404145/article/details/81584846

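This exercise follows Cui Qingcai's tutorial. Before starting, make sure the pyquery library and the browser driver that matches your browser are installed. Some parts of the tutorial did not apply to my setup, so I changed a few things. I did not set up a project, so the data is only scraped and printed, not stored in a database. I will add database storage in a later exercise.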
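A quick way to confirm the prerequisites before running the scraper is sketched below. It is only an illustration: the helper name `check_environment` is hypothetical, and the driver name `geckodriver` is assumed because the script uses Firefox. Recent Selenium releases may be able to fetch a driver on their own, so a missing driver on `PATH` is a hint rather than a definite failure.

```python
import importlib.util
import shutil


def check_environment(packages=("selenium", "pyquery"), driver="geckodriver"):
    """Report which packages are importable and whether the driver is on PATH.

    `check_environment` is a hypothetical helper, not part of Selenium or pyquery.
    """
    # find_spec returns None when a top-level package cannot be found
    report = {name: importlib.util.find_spec(name) is not None for name in packages}
    # shutil.which returns None when the executable is not on PATH
    report[driver] = shutil.which(driver) is not None
    return report


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'found' if ok else 'MISSING'}")
```

If a package shows as missing, installing it with `pip install selenium pyquery` is the usual fix.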

The full code follows:

from selenium import webdriver
from selenium.webdriver.support.wait import WebDriverWait
from selenium.common.exceptions import TimeoutException
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.common.by import By
from pyquery import PyQuery as pq
import re

browser = webdriver.Firefox()  # start the browser driver
wait = WebDriverWait(browser, 10)  # explicit waits give up after 10 seconds


def search():
    try:
        browser.get('https://www.taobao.com')  # open the home page
        # Pick the EC method carefully: I first used
        # presence_of_all_elements_located, which returns a list and
        # therefore failed on send_keys() below.
        input_box = wait.until(
            EC.presence_of_element_located((By.CSS_SELECTOR, '#q'))
        )  # locate the search box
        # Explicit wait until the search button is clickable
        submit = wait.until(EC.element_to_be_clickable(
            (By.CSS_SELECTOR, '#J_TSearchForm > div.search-button > button')))
        input_box.send_keys('裙子')  # search keyword ("dress")
        submit.click()
        total = wait.until(EC.presence_of_element_located(
            (By.CSS_SELECTOR, '#mainsrp-pager > div > div > div > div.total')))
        get_products()  # scrape the first page of results
        return total.text
    except TimeoutException:
        return search()  # on timeout, start the search over


def next_page(page_number):
    # There are several ways to change pages; this one types the page
    # number into the pager's jump box and clicks the confirm button.
    input_box = wait.until(
        EC.presence_of_element_located(
            (By.CSS_SELECTOR, '#mainsrp-pager > div > div > div > div.form > input'))
    )
    submit = wait.until(EC.element_to_be_clickable(
        (By.CSS_SELECTOR, '#mainsrp-pager > div > div > div > div.form > span.btn.J_Submit')))
    input_box.clear()
    input_box.send_keys(str(page_number))
    submit.click()
    # Wait until the highlighted pager item shows the requested page number
    wait.until(EC.text_to_be_present_in_element(
        (By.CSS_SELECTOR, '#mainsrp-pager > div > div > div > ul > li.item.active > span'),
        str(page_number)))
    get_products()


def get_products():
    wait.until(EC.presence_of_element_located(
        (By.CSS_SELECTOR, '#mainsrp-itemlist .items .item')))
    html = browser.page_source
    doc = pq(html)  # parse the rendered page with pyquery
    items = doc('#mainsrp-itemlist .items .item').items()
    for item in items:
        product = {
            'price': item.find('.price').text(),
            # drop the three-character suffix after the number
            'deal': item.find('.deal-cnt').text()[:-3],
            'title': item.find('.title').text(),
            'shop': item.find('.shop').text(),
            'location': item.find('.location').text()
        }
        print(product)


def main():
    try:
        total = search()
        # extract the page count from the pager text (raw string avoids
        # the invalid-escape warning for \d)
        total = int(re.compile(r'(\d+)').search(total).group(1))
        for i in range(2, total + 1):
            next_page(i)
    finally:
        browser.quit()  # close the browser even if a step fails


if __name__ == '__main__':
    main()
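In the listing above, `search()` calls itself whenever a `TimeoutException` is raised, so a page that never loads keeps retrying with no upper bound. One possible alternative is a bounded retry loop. The sketch below is a generic helper, not something from the tutorial or from Selenium; the name `retry` and its parameters are hypothetical.

```python
import time


def retry(action, attempts=3, delay=0.0, exceptions=(Exception,)):
    """Call action() until it succeeds or the attempts run out.

    Hypothetical helper: re-raises the last exception after the final attempt.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except exceptions:
            if attempt == attempts:
                raise  # give up and let the caller see the error
            time.sleep(delay)  # pause before the next attempt


# Possible use with the scraper, assuming search() no longer calls itself:
#     total_text = retry(search, attempts=3, delay=2, exceptions=(TimeoutException,))
```

With this shape the script stops with a clear error after a few failed attempts instead of looping indefinitely.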

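The listing cleans two strings by hand: `main()` pulls the page count out of the pager text with a regular expression, and `get_products()` drops the last three characters of the deal text. A slightly more defensive version of both steps could look like the sketch below. The function names are hypothetical, and the sample strings are assumptions about what the page returns, not captured output.

```python
import re


def parse_total_pages(text):
    """Return the first integer found in the pager text, or None if there is none."""
    match = re.search(r"\d+", text)
    return int(match.group()) if match else None


def parse_deal_count(text):
    """Return the leading integer of a deal string, or None if it has none."""
    match = re.match(r"\s*(\d+)", text)
    return int(match.group(1)) if match else None


if __name__ == "__main__":
    # Assumed sample inputs, for illustration only
    print(parse_total_pages("共 100 页,"))
    print(parse_deal_count("1234人付款"))
```

Returning `None` for unexpected text lets the caller decide what to do, whereas slicing with `[:-3]` silently produces a wrong value if the suffix ever changes.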
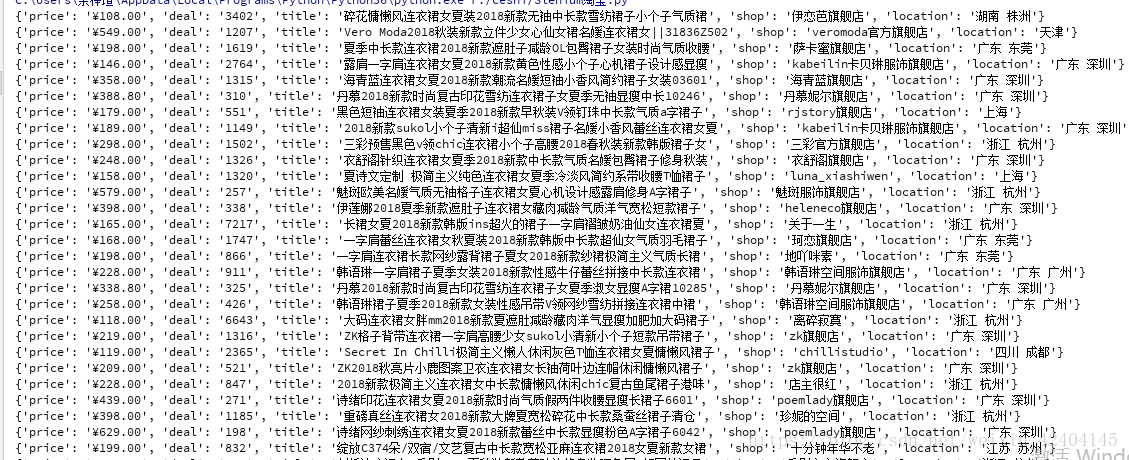

Result: