Clearing the Skies: A deep network architecture for single-image rain removal解读

Abstract

索引术语 - 除雨,深度学习,卷积神经网络,图像增强

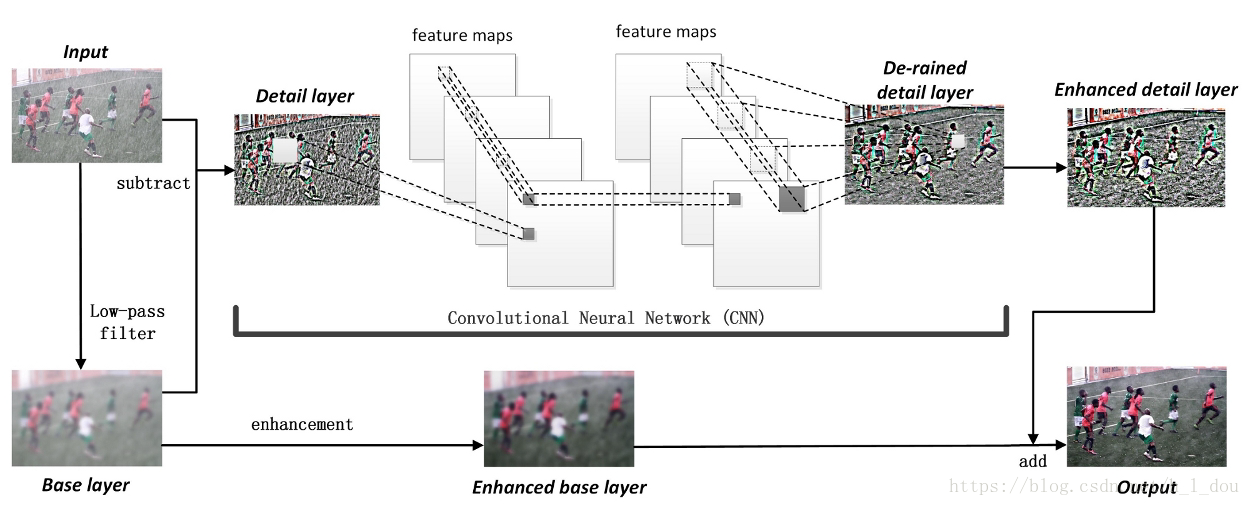

我们介绍了一种名为DerainNet的深度网络架构,用于消除图像中的雨条纹。基于深度卷积神经网络(CNN),我们直接从数据中学习雨天和干净图像细节层之间的映射关系。因为我们没有与现实世界的雨季图像相对应的地面真相,所以我们合成了带雨的图像进行训练。与增加网络深度或广度的其他常见策略相比,我们使用图像处理领域知识来修改目标函数并改善适度大小的CNN的延迟。具体来说,我们在细节(高通)层而不是在图像域中训练我们的DerainNet。尽管DerainNet接受了合成数据的培训,但我们发现学到的网络可以非常有效地转换为真实世界的图像进行测试。此外,我们通过图像增强来增强CNN框架,以改善视觉效果。与现有技术的单图像去除方法相比,我们的方法在网络训练后具有更好的除雨效果和更快的计算时间。

1.Introduction

rain streaks create not only a blurring effect in images, but also haziness due to light scattering.

Effective

methods for removing rain streaks are required for a wide range of practical applications, such as image enhancement and object tracking.

We present the first deep convolutional neural network (CNN) tailored to this task and show how the CNN framework can obtain state-of-the-art(最先进的) results

These methods can be categorized into two groups: video-based methods and single-image based methods.

A:Related work:Video v.s. single-image based rain removal

Due to the redundant temporal information that exists in video, rain streaks can be more easily identified and removed in this domain[1-4]

in [1] the authors first propose a rain streak detection algorithm based on a correlation (相关)model. After detecting the location of rain streaks, the method uses the average pixel value taken from the neighboring frames to remove streaks.

In [2], the authors analyze the properties of rain and establish a model of visual effect of rain in frequency space.

In [3], the histogram(直方图) of streak orientation(方向) is used to detect rain and a Gaussian mixture model is used to extract the rain layer.

In [4], based on the minimization of registration error between frames(帧之间配准误差的最小化), phase congruency(相的一致性) is used to detect and remove the rain streaks.

1.K. Garg and S. K. Nayar, “Detection and removal of rain from videos,” in International Conference on Computer Vision and Pattern Recognition (CVPR), 2004.

2.P. C. Barnum, S. Narasimhan, and T. Kanade, “Analysis of rain and snow in frequency space,” International Journal on Computer Vision, vol. 86, no. 2-3, pp. 256–274, 2010.

3.J. Bossu, N. Hautiere, and J.P. Tarel, “Rain or snow detection in image sequences through use of a histogram of orientation of streaks,” International Journal on Computer Vision, vol. 93, no. 3, pp. 348–367, 2011.

4.V. Santhaseelan and V. K. Asari, “Utilizing local phase information to remove rain from video,” International Journal on Computer Vision, vol. 112, no. 1, pp. 71–89, 2015.

Many of these methods work well, but are significantly aided by the temporal content of video.(很多效果都很好,因为视频的时间内容得到了显著的帮助)(难道视频的处理起来还更简单???)

Compared with video-based methods, removing rain from individual images is much more challenging since much less information is available for detecting and removing rain streaks.(作者的意思是视频更简单)

success is less noticeable than in video-based algorithms, and there is still much room for improvement. To give three examples:

in [5] rain streak detection and removal is achieved using kernel regression and a non-local mean filtering.

In [6], a related work based on deep learning was introduced to remove static raindrops and dirt spots from pictures taken through windows(这篇文章用的物理模型和作者用的不是一个,限制了模型去除雨条的能力)

In [7], a generalized lowrank model(一般的低级别的模型); both single-image and video rain removal can be achieved through this the spatial and temporal correlations (空间和时间相关性)learned by this method.

[5] J. H. Kim, C. Lee, J. Y. Sim, and C. S. Kim, “Single-image deraining using an adaptive nonlocal means filter,” in IEEE International Conference on Image Processing (ICIP), 2013.

[6] D. Eigen, D. Krishnan, and R. Fergus, “Restoring an image taken through a window covered with dirt or rain,” in International Conference on Computer Vision (ICCV), 2013.

[7] Y. L. Chen and C. T. Hsu, “A generalized low-rank appearance model for spatio-temporally correlated rain streaks,” in International Conference on Computer Vision (ICCV), 2013.

Recently, several methods based on dictionary learning have been proposed[8]–[12]

In [9], the input rainy image is first decomposed into its base layer and detail layer.Rain streaks and object details are isolated in the detail layer while the structure remains in the base layer. Then sparse coding dictionary learning is used to detect and remove rain streaks from the detail layer.(从细节层进行学习)The output is obtained by combining the de-rained detail layer and base layer.The output is obtained by combining the de-rained detail layer and base layer.(结果再把细节层与基础层叠加)

In [10], a selflearning based image decomposition method is introduced to automatically distinguish rain streaks from the detail layer(基于自学习的图像分解方法自动识别雨线从细节层)

In

[11], the authors use discriminative sparse coding to recover a clean image from a rainy image.(判别稀疏编码)

[9], [10] is that they tend to generate over-smoothed results when dealing with images containing complex structures that are similar to rain streaks,(在处理包含与雨条相似的复杂结构的图像时,它们往往想要产生过度平滑的结果)

all four dictionary learning based frameworks [9]–[12] require significant computation time.(计算时间长)

[8] D. A. Huang, L. W. Kang, M. C. Yang, C. W. Lin, and Y. C. F. Wang, “Context-aware single image rain removal,” in International Conference on Multimedia and Expo (ICME), 2012.

[9] L. W. Kang, C. W. Lin, and Y. H. Fu, “Automatic single image-based rain streaks removal via image decomposition,” IEEE Transactions on Image Processing, vol. 21, no. 4, pp. 1742–1755, 2012.

[10] D. A. Huang, L. W. Kang, Y. C. F. Wang, and C. W. Lin, “Self-learning based image decomposition with applications to single image denoising,” IEEE Transactions on Multimedia, vol. 16, no. 1, pp. 83–93, 2014.

[11] Y. Luo, Y. Xu, and H. Ji, “Removing rain from a single image via discriminative sparse coding,” in International Conference on Computer Vision (ICCV), 2015.

[12] D. Y. Chen, C. C. Chen, and L. W. Kang, “Visual depth guided color image rain streaks removal using sparse coding,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 24, no. 8, pp. 1430–

1455, 2014.

More recently, patch-based priors(基于补丁先验) for both the clean and rain layers have been explored to remove rain streaks [13]. In this method, the multiple orientations and cales of rain streaks are addressed by pre-trained Gaussian mixture models.

B Contributions of our DerainNet approach

[9]–[11], [13] only separate rain streaks from object details by using low level features.

When an object’s structure and orientation are similar with that of rain streaks, these methods have difficulty simultaneously removing rain streaks and preserving structural information.(当物体的机构和方位与雨线相似的时候,这些方法很难同时去除雨线和提供物体信息)(显然没懂。。。)(应该是和雨线相似的物体很难被区分开)

Humans on the other hand can easily distinguish rain streaks within a single image using high-level features(雨线还是有高级特性的)

We are therefore motivated to design a rain detection and removal algorithm based on the deep convolutional neural network (CNN)[14], [15].

[14] A. Krizhevsky, I. Sutskever, and G.E. Hinton, “Imagenet classification with deep convolutional neural networks,” in Advances in Neural Information Processing Systems (NIPS), 2012.

[15] Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner, “Gradient-based learning applied to document recognition(基于梯度的文档识别学习识别),” Proceedings of the IEEE, vol. 86, no. 11, pp. 2278–2324, 1998.

Our main contributions are threefold:

1.significant improvement over three recent state-of-the-art methods.making it more suitable for realtime applications.

2. We show how better results can be obtained without introducing more complex network architecture or more computing resources.(我们使用图像处理领域知识来修改目标函数并提高降雨质量,而不是使用增加神经元或堆叠隐藏层等常用策略来有效地近似所需的映射函数。 我们展示了如何在不引入更复杂的网络架构或更多计算资源的情况下获得更好的结果。)

3. We show that, though we train on synthesized rainy images, the resulting network is very effective when testing on real-world rainy images

2.DerainNet:Deep Learning for rain removal

we decompose each image into a low-frequency base layer and a high-frequency detail layer

.To further improve visual quality, we introduce an image enhancement step to sharpen the results of both layers since the effects of heavy rain naturally leads to a hazy effect.

A Traing on high-pass detail layers(在高通细节层训练)

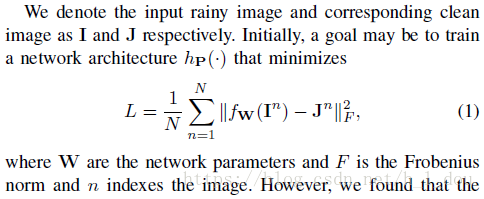

公式1:

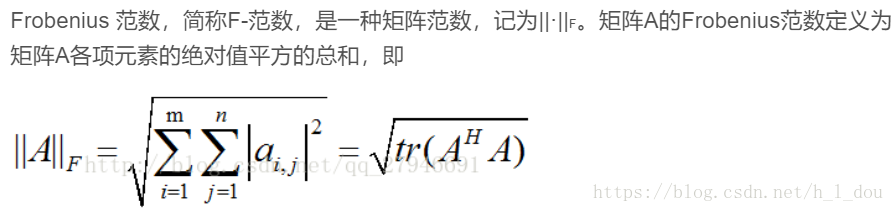

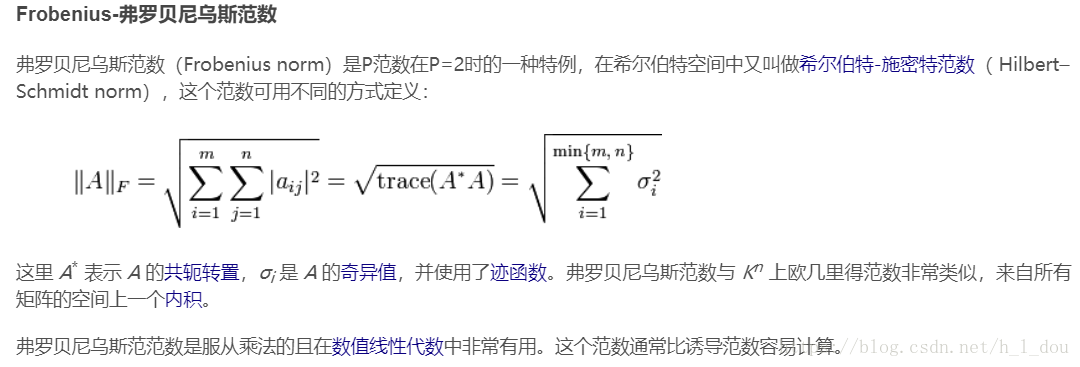

Frobenius norm(F范数的定义):

we found that the result obtained by directly training in the image domain is not satisfactory.(直接在图像域训练,不行!)

Figure 3(b) implies that the desired mapping function was not learned well when training on the image domain,(在图像域上进行训练时,没有很好地学习所需的映射函数)

there are two ways to improve a network’s capacity in the deep learning domain. One way is to increase the depth of network [22] by stacking more hidden layers.Usually, more hidden layers can help to obtain high-level features. However, the de-rain problem is a low-level image task and the deeper structure is not necessarily better for this image processing problems.Furthermore, training a feed- forward network with more layers suffers from gradient vanishing unless other training strategies or more complex network structures are introduced(两个解决办法:通过增加隐藏层来增加网络深度可以获得高级特征:去雨问题是一个低级图像处理任务,更深的结构不需要???增加模型深度可以增强模型能力?另外更多层可能导致梯度小时除非另外的训练策略或者风负责的网络结构。)(有自己的例子,增加了网络层数反而效果更差,导致了模糊)

The other approach is to increase the breadth of network [23] by using more neurons in each hidden layer.

However, to avoid over-fitting, this strategy requires more training data and computation time that may be intolerable under normal computing condition.(第二种方法把网络拓宽,为了避免过拟合(怎样避免过拟合?)这个方法需要更多数据和时间,正常计算机不行。)(越宽的网络需要的数据越多?一个网络训练起来需要多少数据?)

To effectively and efficiently tackle the de-rain problem, we instead use a priori image processing knowledge to modify the objective function rather than increase the complexity of the problem.

Conventional end-to-end procedures directly uses image patches to train the model by finding a mapping function f that transforms the input to output [6], [17].

[6] D. Eigen, D. Krishnan, and R. Fergus, “Restoring an image taken through a window covered with dirt or rain,” in International Conference on Computer Vision (ICCV), 2013.

[17] C. Dong, C. L. Chen, K. He, and X. Tang, “Image super-resolution using deep convolutional networks,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 38, no. 2, pp. 295–307, 2016.

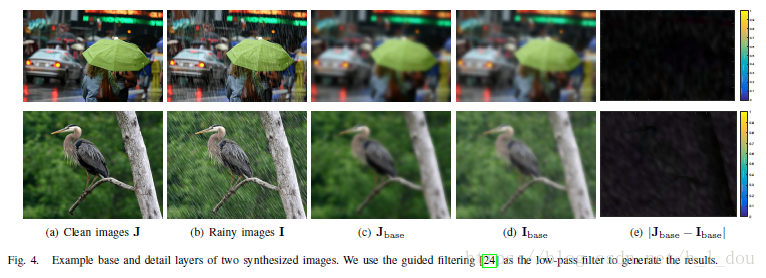

Motivated by Figure 3, rather than directly train on the image, we first decompose(分解) the image into the sum of a “base” layer and a “detail” layer by using a low-pass filter(通过低通滤波器将图像分解成两层)

Using on image processing techniques(图像处理技术), we found that after applying an appropriate low-pass filters such as [24]–[26], low-pass versions of both the rainy image Ibase and the clean image Jbase are smooth and are approximately equal, as shown below.(意思是rainy的Ibase和clean的Jbase是大约相等的,即Ibase ≈ Jbase。)

[24] K. He, J. Sun, and X. Tang, “Guided image filtering,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 35, no. 6, pp. 1397–1409, 2013.

[26] Q. Zhang, X. Shen, L. Xu, and J. Jia, “Rolling guidance filter,” in European Conference on Computer Vision (ECCV), 2014.

so, we rewrite the objective function in (1) as:

This directly lead us to train the CNN network on the detail layer instead of the image domain(提供了一个训练思路,只训练细节层)

Advantages:

- 1.First, after subtracting the base layer, the detail layer is sparser than the image since most regions in the detail layer are close to zero.(细节层更稀疏)Taking advantage of the sparsity of the detail layer is a widely used technique in existing deraining methods [9]–[11].(稀疏细节层常被用到)

[9] L. W. Kang, C. W. Lin, and Y. H. Fu, “Automatic single image-based rain streaks removal via image decomposition,” IEEE Transactions on Image Processing, vol. 21, no. 4, pp. 1742–1755, 2012.

[10] D. A. Huang, L. W. Kang, Y. C. F. Wang, and C. W. Lin, “Self-learning based image decomposition with applications to single image denoising,” IEEE Transactions on Multimedia, vol. 16, no. 1, pp. 83–93, 2014.

[11] Y. Luo, Y. Xu, and H. Ji, “Removing rain from a single image via discriminative sparse coding(判别稀疏编码),” in International Conference on Computer Vision (ICCV), 2015.

In the context of(在上下文中) a neural network, training a CNN on the detail layer also follows the procedure of mapping an input patch to an output patch(遵循将输入补丁映射到输出补丁的过程), but since the mapping range(映射范围减小) has been significantly decreased, the regression problem is significantly easier to handle for a deep learning model(对于深度学习网络来说,回归问题更容易处理)(这里并没懂。。。????). Thus, training on the detail layer instead of the image domain can improve learning the network weights and thus the de-raining result without a large increase in training data or computational resources.

- 2.it can improve the convergence of the CNN.(第二点是可以提高CNN的收敛性)As we show in our experiments (Figure 17), training on the detail layer converges much faster than training on the image domain.(实验表明在细节层训练收敛的更快)

- 3.decomposing an image into base and

detail layers is widely used by the wider image enhancement community [27], [28]. These enhancement procedures are tailored to this decomposition and can be easily embedded into our architecture to further improve image quality, which we describe in Section II-D.(这些增强程序适用于此分解,可以轻松嵌入到我们的架构中,以进一步提高图像质量,我们在第II-D节中对此进行了描述。)

[27] B. Gu, W. Li, M. Zhu, and M. Wang, “Local edge-preserving multiscale decomposition for high dynamic range image tone mapping,” IEEE Transactions on Image Processing, vol. 22, no. 1, pp. 70–79, 2013.

[28] T. Qiu, A. Wang, N. Yu, and A. Song, “LLSURE: local linear surebased edge-preserving image filtering,” IEEE Transactions on Image Processing, vol. 22, no. 1, pp. 80–90, 2013.

The detail layer is equal to the difference between the image and the base layer.

We use the guided filtering method of [24] as the low-pass filter because it is simple and fast to implement.(采用24的低通滤波器)(这些低通滤波器有什么区别吗)

[24] K. He, J. Sun, and X. Tang, “Guided image filtering,” IEEE Transactions

on Pattern Analysis and Machine Intelligence, vol. 35, no. 6, pp. 1397–

1409, 2013.

In this paper, the guidance image is the input image itself. However, the choice of low-pass filter is not limited to guided filtering; other filtering approaches were also effective in our experiments, such as bilateral filtering [25] and rolling guidance filtering [26].Results with these filters were nearly identical, so we choose [24] for its low computational complexity.

(本文中的指导图像是它本身)(没懂?)(低通滤波器不限于24,25.26也很好,每个滤波器效果基本相同,所以选24这个低计算复杂度的)

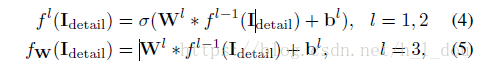

B.Our convolutional neural network

our network structure can be expressed as three operations:(这个公式是没懂的)