版权声明:转载请注明出处 https://blog.csdn.net/cotyyang/article/details/81844174

这是商品的原url(爬这个的时候刚好七夕左右,所以就想着看看毛绒玩具熊,但是没有gf,连送的人都没有。嘤嘤嘤)

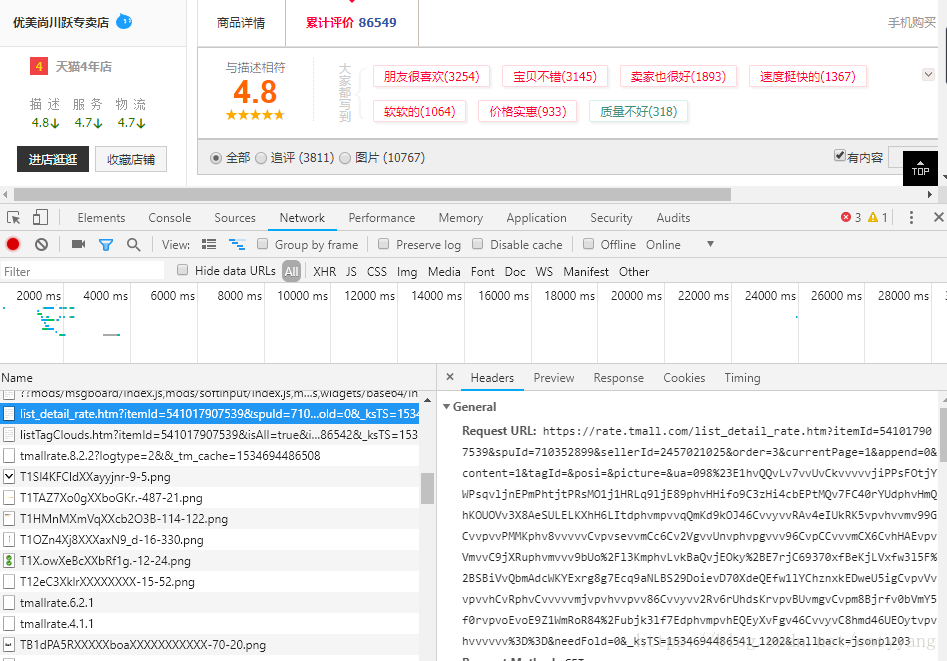

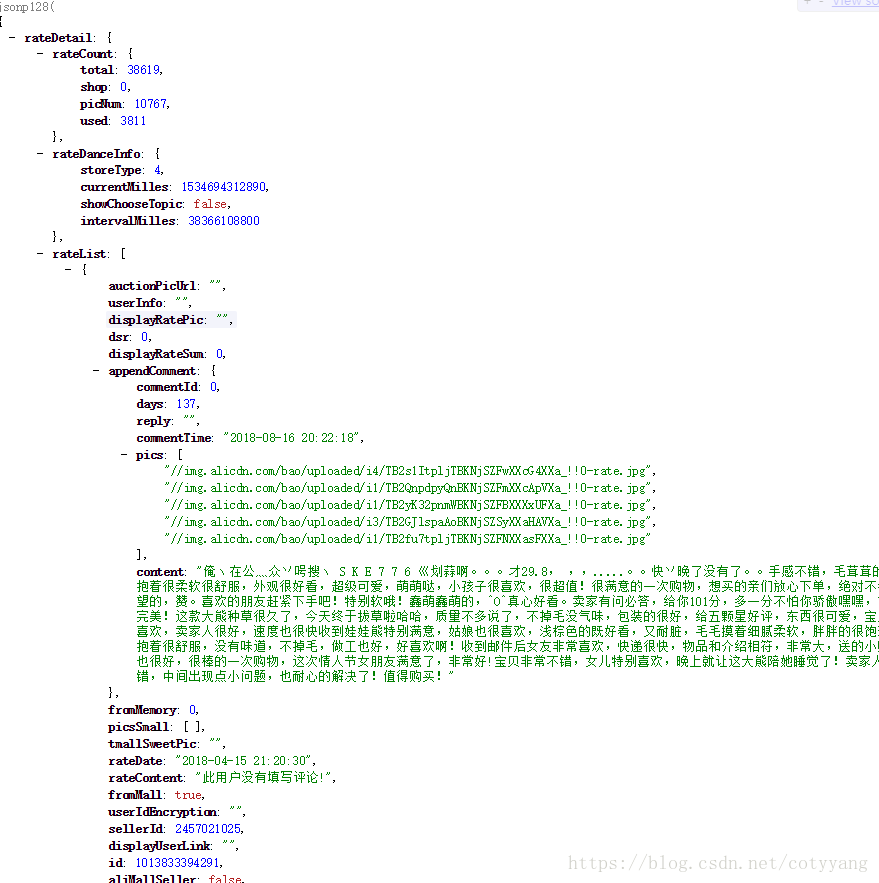

用chrome打开url,然后clrar一下,然后点击累计评论,下面就会出现一大堆加载的东西,一个一个找(偶目前只知道一个一个点开看,但那些img什么的就别试了),然后你就看到了我图中蓝色的那段,那个就是评论的json数据,点开url是这样的,(推荐一个插件JSONView,可以方便看json数据),然后可以根据需要,爬取数据了

话不多说,先贴代码

tianmaopinlun.py

import scrapy

import json

from tianmaopinlun.items import TianmaopinlunItem

class tianmaopinlun(scrapy.Spider):

name = 'tianmaopinlun'

def start_requests(self):

for i in range(1,11):

yield scrapy.Request('https://rate.tmall.com/list_detail_rate.htm?itemId=541017907539&spuId=710352899&sellerId=2457021025&order=3¤tPage='+str(i)+'&append=0&content=1',callback=self.parse)

def parse(self, response):

res=str(response.body,"utf-8")

res1=res[:-1]

res2=res1.split("(",1)[1]

#这里我对str的截取还不是很熟悉,所以采取了这种笨办法,有好方法的可以留言一下

res3=json.loads(res2)

for i in res3['rateDetail']['rateList']:

items=TianmaopinlunItem()

items['auctionSku']=i['auctionSku']

items['rateContent']=i['rateContent']

items['rateDate']=i['rateDate']

if i['appendComment']:

items['appendcontent']=i['appendComment']['content']

items['appendcommentTime']=i['appendComment']['commentTime']

else:

#本来一开始没有把空值设为0的,但后面考虑到要把数据写到mysql里去,还是这样写了

items['appendcontent']='0'

items['appendcommentTime']='0'

yield itemsitem.py

import scrapy

class TianmaopinlunItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

#下面的命名就是json数据里的命名(每次写代码最讨厌的就是命名了,emmm)

auctionSku=scrapy.Field()

rateContent=scrapy.Field()

rateDate=scrapy.Field()

appendcontent=scrapy.Field()

appendcommentTime=scrapy.Field()settings.py部分代码

ROBOTSTXT_OBEY = False

COOKIES_ENABLED=False

REDIRECT_ENABLED = True

MYSQL_HOST = 'localhost'

MYSQL_DBNAME = 'tianmaopinlun' #数据库名字

MYSQL_USER = 'root' #数据库账号

MYSQL_PASSWD = '111000' #数据库密码

MYSQL_PORT = 3306

ITEM_PIPELINES = {

'tianmaopinlun.pipelines.TianmaopinlunPipeline': 300,

}

# Configure maximum concurrent requests performed by Scrapy (default: 16)

CONCURRENT_REQUESTS = 50pipelines.py

import pymysql

class TianmaopinlunPipeline(object):

maorong_name = 'pinlun'

#这里要先到mysql里去创建表,推荐Navicat for mysql 。当然你也可以在代码里创建

maorong_insert="""

insert into pinlun(auctionSku,rateContent,rateDate,appendcontent,appendcommentTime)

values('{auctionSku}','{rateContent}','{rateDate}','{appendcontent}','{appendcommentTime}')

"""

def __init__(self,settings):

self.settings=settings

def process_item(self, item, spider):

sqltext = self.maorong_insert.format(

auctionSku=pymysql.escape_string(item['auctionSku']),

rateContent=pymysql.escape_string(item['rateContent']),

rateDate=pymysql.escape_string(item['rateDate']),

appendcontent=pymysql.escape_string(item['appendcontent']),

appendcommentTime=pymysql.escape_string(item['appendcommentTime']),

)

self.cursor.execute(sqltext)

return item

@classmethod

def from_crawler(cls, crawler):

return cls(crawler.settings)

def open_spider(self, spider):

# 连接数据库

self.connect = pymysql.connect(

host=self.settings.get('MYSQL_HOST'),

port=self.settings.get('MYSQL_PORT'),

db=self.settings.get('MYSQL_DBNAME'),

user=self.settings.get('MYSQL_USER'),

passwd=self.settings.get('MYSQL_PASSWD'),

charset='utf8mb4',

use_unicode=True)

# 通过cursor执行增删查改

self.cursor = self.connect.cursor();

self.connect.autocommit(True)

def close_spider(self, spider):

self.cursor.close()

self.connect.close()