Intro

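k-NN is one of the simplest ML algorithms. Lay out the training data in feature space, then take a test sample $x$ and find the $k$ training samples closest to it. Whichever label $y$ occurs most often among those $k$ samples becomes the label of $x$. In one sentence, it is the Chinese proverb "what stays near vermilion turns red, what stays near ink turns black": a sample takes on the label of its neighbors.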
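The procedure above fits in a few lines of Python. This is a minimal brute-force sketch with assumed choices: Euclidean distance, a plain majority vote, and hypothetical names (`euclidean`, `knn_predict`) and toy data that do not come from the original.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def knn_predict(train_X, train_y, x, k):
    """Label x by majority vote among its k nearest training samples."""
    # Sort training indices by their distance to the test sample x.
    order = sorted(range(len(train_X)), key=lambda i: euclidean(train_X[i], x))
    # Count the labels of the k closest samples, nearest first.
    votes = Counter(train_y[i] for i in order[:k])
    # Counter keeps insertion order for equal counts, so a tie goes to the
    # tied label whose nearest member is closest to x.
    return votes.most_common(1)[0][0]


# Hypothetical toy data: two well-separated groups.
train_X = [(1.0, 1.0), (1.5, 2.0), (5.0, 5.0), (6.0, 5.5), (5.5, 6.0)]
train_y = ["A", "A", "B", "B", "B"]
print(knn_predict(train_X, train_y, (1.2, 1.4), k=3))  # A
```

Here the three nearest neighbors of `(1.2, 1.4)` are two "A" samples and one "B" sample, so the vote returns "A".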

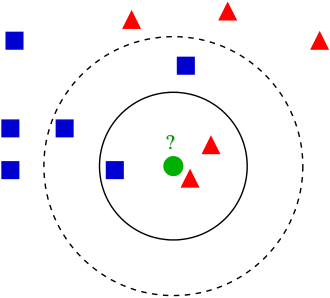

Example of k-NN classification. The test sample (green circle) should be classified either to the first class of blue squares or to the second class of red triangles. If k = 3 (solid line circle) it is assigned to the second class because there are 2 triangles and only 1 square inside the inner circle. If k = 5 (dashed line circle) it is assigned to the first class (3 squares vs. 2 triangles inside the outer circle). – Wikipedia
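To make the caption concrete, the snippet below places hypothetical points around a test point so that k = 3 and k = 5 disagree in the same way as in the figure. The coordinates and the helper name `knn_label` are invented for illustration; the figure itself gives no numbers.

```python
import math
from collections import Counter

# Hypothetical coordinates chosen to mimic the figure (not taken from it).
test_point = (0.0, 0.0)  # the green circle
train = [
    ((1.0, 0.0), "red triangle"),   # distance 1.0
    ((0.0, 1.2), "red triangle"),   # distance 1.2
    ((-1.5, 0.0), "blue square"),   # distance 1.5
    ((0.0, -2.5), "blue square"),   # distance 2.5
    ((2.7, 0.0), "blue square"),    # distance 2.7
    ((4.0, 0.0), "red triangle"),   # distance 4.0
    ((0.0, 4.5), "blue square"),    # distance 4.5
]


def knn_label(k):
    """Majority label among the k training points closest to test_point."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], test_point))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


for k in (3, 5):
    print(k, knn_label(k))
# 3 red triangle
# 5 blue square
```

With k = 3 the vote is 2 triangles vs 1 square; widening to k = 5 pulls in two more squares and flips the result to 3 squares vs 2 triangles.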

Tags

- non-parametric method

- instance-based learning / lazy learning
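Both tags describe the same behavior: "training" learns no parameters and only memorizes the data, and each prediction scans the stored instances. A minimal sketch, assuming a hypothetical `LazyKNN` class with a fit/predict interface loosely modeled on common ML libraries (not any specific library's API):

```python
import math
from collections import Counter


class LazyKNN:
    """Minimal k-NN classifier illustrating lazy, instance-based learning.

    fit() learns no parameters; it only stores the training set.
    All of the work happens in predict(), at query time.
    """

    def __init__(self, k=3):
        self.k = k  # the number of neighbors that vote

    def fit(self, X, y):
        # "Training" is just memorizing the instances.
        self.X = list(X)
        self.y = list(y)
        return self

    def predict(self, x):
        # Distances to every stored instance are computed per query,
        # so prediction cost grows with the size of the training set.
        dists = [math.dist(xi, x) for xi in self.X]
        nearest = sorted(range(len(self.X)), key=dists.__getitem__)[: self.k]
        return Counter(self.y[i] for i in nearest).most_common(1)[0][0]


model = LazyKNN(k=1).fit([(0, 0), (10, 10)], ["low", "high"])
print(model.predict((9, 8)))  # high
```

The model's state is the training set itself rather than a fixed-size parameter vector, which is what "non-parametric" refers to here: more data means a bigger model.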

Hyperparameter: k

There is only one hyperparameter, $k$: the number of nearest neighbors that vote on the label.

Distance

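The other major factor is how distance is defined. The most common choice is Euclidean distance; other frequently used options include Manhattan (city-block) distance and Mahalanobis distance. For more distance metrics, see: Common Distance Metrics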
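The three named distances can be written directly from their definitions. This is a minimal NumPy sketch; the function names are hypothetical, and the covariance matrix passed to `mahalanobis` is assumed to be given (in practice it is usually estimated from the training data, e.g. with `np.cov(X, rowvar=False)`).

```python
import numpy as np


def euclidean(a, b):
    """L2 distance: sqrt(sum((a_i - b_i)^2))."""
    return float(np.sqrt(np.sum((a - b) ** 2)))


def manhattan(a, b):
    """City-block (L1) distance: sum(|a_i - b_i|)."""
    return float(np.sum(np.abs(a - b)))


def mahalanobis(a, b, cov):
    """sqrt((a - b)^T S^{-1} (a - b)), where S is the feature covariance matrix."""
    diff = a - b
    return float(np.sqrt(diff @ np.linalg.inv(cov) @ diff))


a = np.array([0.0, 0.0])
b = np.array([3.0, 4.0])
print(euclidean(a, b))  # 5.0
print(manhattan(a, b))  # 7.0
# A diagonal covariance rescales each axis by its standard deviation (3 and 4 here).
cov = np.diag([9.0, 16.0])
print(mahalanobis(a, b, cov))  # sqrt(1 + 1), about 1.414
```

With the identity matrix as covariance, Mahalanobis distance reduces to Euclidean distance. Because k-NN only uses the ranking of distances, switching metrics can change which samples count as the $k$ nearest neighbors and therefore the predicted label.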