Music Crawler

Goal: scrape each song's title, artist, lyrics, and URL.

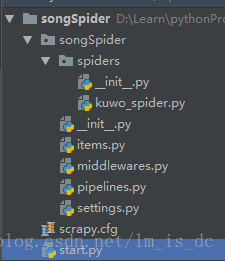

I. Create the crawler project

Create a folder, enter it, open a cmd window, and run:

scrapy startproject songSpider

Then run, in order:

cd songSpider

scrapy genspider kuwo_spider kuwo.cn

A new file, kuwo_spider.py, now appears under songSpider\songSpider\spiders\.

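II. Define the fields in items.py

import scrapy


class SongspiderItem(scrapy.Item):
    # define the fields for your item here like:
    name = scrapy.Field()      # song title
    singer = scrapy.Field()    # artist
    lyric = scrapy.Field()     # lyrics
    url = scrapy.Field()       # URL from which the music file can be obtained
    originId = scrapy.Field()  # source site

Create a table in the MySQL database with the same fields as above, so that the scraped data can be saved to the database (persisted) in the following steps.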
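As a rough illustration of such a table, the sketch below creates a Song table whose columns mirror the item fields and stores a row only if it is not already present. It uses Python's built-in sqlite3 in place of MySQL so that it runs without a server. The column types, the definition of the sid key, and the sample item are assumptions made for illustration, not the author's actual schema.

```python
import sqlite3

# Assumed schema: column names follow the item fields; the types are guesses.
SCHEMA = """
CREATE TABLE IF NOT EXISTS Song (
    sid      INTEGER PRIMARY KEY AUTOINCREMENT,
    name     TEXT NOT NULL,
    singer   TEXT NOT NULL,
    lyric    TEXT,
    url      TEXT,
    originId INTEGER
)
"""


def save_song(conn, item):
    """Insert item unless a row with the same name, singer and originId exists."""
    cur = conn.cursor()
    cur.execute(
        "SELECT sid FROM Song WHERE name=? AND singer=? AND originId=?",
        (item["name"], item["singer"], item["originId"]),
    )
    if cur.fetchone() is not None:
        return False  # already stored
    cols, values = zip(*item.items())
    sql = "INSERT INTO Song (%s) VALUES (%s)" % (
        ",".join(cols),
        ",".join(["?"] * len(values)),
    )
    cur.execute(sql, values)
    conn.commit()
    return True


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)

# Hypothetical sample item; the apostrophe shows why placeholders matter.
song = {
    "name": "Don't Stop",
    "singer": "Example Singer",
    "lyric": "Don't Stop\nline one",
    "url": "http://www.kuwo.cn/yinyue/123",
    "originId": 2,
}
first = save_song(conn, song)
second = save_song(conn, song)
row_count = conn.execute("SELECT COUNT(*) FROM Song").fetchone()[0]
```

With pymysql the placeholder is %s rather than ?, but the idea is the same: values are passed separately from the SQL text, so quotes in a title cannot break the statement.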

III. Modify settings.py

ROBOTSTXT_OBEY = False

# Default request headers, including a browser User-Agent
DEFAULT_REQUEST_HEADERS = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    'Accept-Language': 'en',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36',
}

# Use a pipeline to save items to the database
ITEM_PIPELINES = {
    'songSpider.pipelines.SongspiderPipeline': 300,
}

IV. Modify pipelines.py

# Save items to the MySQL database
import pymysql


class SongspiderPipeline(object):
    def __init__(self):
        # Database connection
        self.conn = None
        # Cursor
        self.cur = None

    # Called once, when the spider is opened
    def open_spider(self, spider):
        self.conn = pymysql.connect(host='127.0.0.1',
                                    user='root',
                                    password="123456",
                                    database='songSearch',
                                    port=3306,
                                    charset='utf8')
        self.cur = self.conn.cursor()

    def process_item(self, item, spider):
        cols, values = zip(*item.items())
        # Parameterized query: quotes in a title or artist name cannot break the SQL
        query_sql = ("SELECT sid FROM Song "
                     "WHERE name=%s AND singer=%s AND originId=%s")
        params = (item['name'], item['singer'], item['originId'])
        # Skip the insert if the song already exists
        if not self.cur.execute(query_sql, params):
            sql = "INSERT INTO `%s`(%s) VALUES (%s)" % ('Song',
                                                        ','.join(cols),
                                                        ','.join(['%s'] * len(values)))
            self.cur.execute(sql, values)
            self.conn.commit()
        return item

    def close_spider(self, spider):
        self.cur.close()
        self.conn.close()

V. Modify kuwo_spider.py

from scrapy.spiders import CrawlSpider, Rule
from scrapy.linkextractors import LinkExtractor
from songSpider.items import SongspiderItem


# Crawl across multiple pages
class KuwoSpider(CrawlSpider):
    name = 'kuwo_spider'
    allowed_domains = ['kuwo.cn']
    start_urls = ['http://yinyue.kuwo.cn/yy/category.htm',
                  'http://www.kuwo.cn/mingxing',
                  ]

    # Crawl rules: an iterable, so several rules can be defined
    rules = [
        # Raw string, so that \d is not treated as a string escape
        Rule(LinkExtractor(allow=(r"http://www.kuwo.cn/yinyue/(\d+)")),
             callback='get_song', follow=True),
        Rule(LinkExtractor(allow=(".*"))),
    ]

    # Extract the song information
    def get_song(self, response):
        item = SongspiderItem()
        item['name'] = response.xpath('//*[@id="lrcName"]/text()').extract()[0]
        item['singer'] = response.xpath('//*[@id="musiclrc"]/div[1]/p[2]/span/a/text()').extract()[0]
        # Lyrics: the title followed by one line per <p>
        lyrics = response.xpath('//*[@id="llrcId"]/p')
        lyricstr = item['name']
        for lyric in lyrics:
            lyricstr += '\n'
            # extract_first avoids an IndexError when a <p> has no text
            lyricstr += lyric.xpath('text()').extract_first(default='')
        item['lyric'] = lyricstr
        item['url'] = response.url
        item['originId'] = 2
        # with open(r'D:\Learn\pythonPro\qianfeng\MusicSearch\songSpider\songs.csv', 'a', newline='', encoding='utf-8') as f:
        #     writer = csv.writer(f)
        #     # write one row
        #     writer.writerow([item['name'], item['singer'], item['lyric'], item['url'], ])
        return item
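To see which links the two rules would send to get_song, the pattern can be tried on a few URLs with the standard re module. This is only a sketch: it assumes the allow pattern is applied as a regular-expression search on the absolute URL, and the sample URLs and the classify helper are made up for illustration. When several rules match the same link, Scrapy uses the first one in the order they are defined, which is why the song rule is listed before the catch-all rule.

```python
import re

# Same pattern as the first Rule, written as a raw string.
SONG_PAGE = re.compile(r"http://www.kuwo.cn/yinyue/(\d+)")


def classify(url):
    """Return ('song', id) for song pages, else ('follow', None)."""
    match = SONG_PAGE.search(url)
    if match:
        return "song", match.group(1)
    return "follow", None


# Hypothetical URLs for illustration only.
samples = [
    "http://www.kuwo.cn/yinyue/7149583",
    "http://www.kuwo.cn/mingxing",
    "http://yinyue.kuwo.cn/yy/category.htm",
]
results = [classify(u) for u in samples]
```

Only the first sample is treated as a song page; the other two are merely followed to discover more links.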

VI. Add start.py to the project directory

This script is used to start the crawler.

import scrapy.cmdline


def main():
    scrapy.cmdline.execute(['scrapy', 'crawl', 'kuwo_spider'])


if __name__ == '__main__':
    main()

VII. Distributed crawling with Redis

1. Modify kuwo_spider.py

from scrapy.spiders import Rule
from scrapy.linkextractors import LinkExtractor
from songSpider.items import SongspiderItem
from scrapy_redis.spiders import RedisCrawlSpider


# Crawl using Redis
class KuwoSpider(RedisCrawlSpider):
    name = 'kuwo_spider'
    allowed_domains = ['kuwo.cn']

    # Add a redis_key: start URLs are read from this Redis list
    redis_key = "kuwo:start_url"

    # Crawl rules: an iterable, so several rules can be defined
    rules = [
        # Raw string, so that \d is not treated as a string escape
        Rule(LinkExtractor(allow=(r"http://www.kuwo.cn/yinyue/(\d+)")),
             callback='get_song', follow=True),
        Rule(LinkExtractor(allow=(".*"))),
    ]

    # Extract the song information
    def get_song(self, response):
        item = SongspiderItem()
        item['name'] = response.xpath('//*[@id="lrcName"]/text()').extract()[0]
        item['singer'] = response.xpath('//*[@id="musiclrc"]/div[1]/p[2]/span/a/text()').extract()[0]
        # Lyrics: the title followed by one line per <p>
        lyrics = response.xpath('//*[@id="llrcId"]/p')
        lyricstr = item['name']
        for lyric in lyrics:
            lyricstr += '\n'
            # extract_first avoids an IndexError when a <p> has no text
            lyricstr += lyric.xpath('text()').extract_first(default='')
        item['lyric'] = lyricstr
        item['url'] = response.url
        item['originId'] = 2
        return item
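The lyric string built in get_song is the title followed by one line per <p> element. A minimal plain-Python sketch of that assembly is shown here; build_lyric is a hypothetical helper, and None stands for a <p> with no text, which is what extract_first() returns when nothing matches.

```python
def build_lyric(title, line_texts):
    """Join the title and the lyric lines with newlines.

    line_texts holds one entry per <p>; None stands for a <p> with no text.
    """
    parts = [title]
    for text in line_texts:
        parts.append(text if text is not None else "")
    return "\n".join(parts)


# Hypothetical input for illustration.
lyric = build_lyric("Example Song", ["first line", None, "last line"])
```

An empty <p> then contributes a blank line instead of raising an error.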

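2. Modify settings.py

ITEM_PIPELINES = {
    'songSpider.pipelines.SongspiderPipeline': 300,
    'scrapy_redis.pipelines.RedisPipeline': 400,  # also push items to Redis
}

# Distributed crawling configuration
DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"
SCHEDULER = "scrapy_redis.scheduler.Scheduler"
SCHEDULER_PERSIST = True
# Replace <redis-host> with the address of the Redis server
# (the master's IP when crawling from several machines); the password is 123456
REDIS_URL = "redis://:123456@<redis-host>:6379"

3. In a CMD terminal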

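Start the Redis service.

Then, in another cmd window, run:

redis-cli

auth 123456

lpush kuwo:start_url http://yinyue.kuwo.cn/yy/category.htm

4. In the PyCharm Terminal, run (from the spiders directory, where kuwo_spider.py lives):

scrapy runspider kuwo_spider.py

5. Distributed crawling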

Run the same program on another computer, point its Redis connection at the master's Redis server, and start the crawler.
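The idea behind this setup can be sketched without Redis: every node pulls URLs from one shared queue and checks one shared "seen" set, so no page is crawled twice no matter which node discovers it. The simulation below is an in-memory stand-in under simplifying assumptions. SharedState, LINKS, and run are hypothetical names, the nodes take turns rather than running concurrently, and scrapy-redis stores request fingerprints rather than raw URLs.

```python
from collections import deque


class SharedState:
    """In-memory stand-in for the Redis server shared by all crawler nodes."""

    def __init__(self):
        self.queue = deque()  # pending URLs (role of the shared scheduler queue)
        self.seen = set()     # URLs already scheduled (role of the dupefilter)

    def push(self, url):
        """Enqueue url unless some node has already scheduled it."""
        if url in self.seen:
            return False
        self.seen.add(url)
        self.queue.append(url)
        return True

    def pop(self):
        """Return the next pending URL, or None when the queue is empty."""
        return self.queue.popleft() if self.queue else None


# Hypothetical link graph standing in for the real site.
LINKS = {
    "start": ["a", "b"],
    "a": ["b", "c"],
    "b": ["c"],
    "c": [],
}


def run(nodes, state):
    """Let the nodes take turns pulling URLs until the queue is empty."""
    crawled = {name: [] for name in nodes}
    turn = 0
    while True:
        url = state.pop()
        if url is None:
            break
        node = nodes[turn % len(nodes)]
        crawled[node].append(url)
        for link in LINKS[url]:
            state.push(link)  # duplicates are dropped here
        turn += 1
    return crawled


state = SharedState()
state.push("start")  # analogous to: lpush kuwo:start_url <url>
crawled = run(["master", "slave-1"], state)
all_pages = sorted(u for urls in crawled.values() for u in urls)
```

Each page ends up in exactly one node's list, even though "b" and "c" are linked from more than one page.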

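The detailed steps are:

5.1 Master setup

Start the Redis service on the master:

redis-server redis.conf

Open a CMD window and push the redis_key:

redis-cli

auth 123456

lpush kuwo:start_url http://yinyue.kuwo.cn/yy/category.htm

Start the crawler on the master:

scrapy runspider kuwo_spider.py

5.2 Slave setup (there can be several slaves)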

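On the slave, connect to the master's Redis server:

# Run in a CMD window

# Connect to the master's Redis server

# My master's IP is currently 10.3.141.228, port 6379, password 123456

redis-cli -h 10.3.141.228 -p 6379 -a 123456

In that session (already connected to the master and authenticated through -h and -a), push the redis_key:

lpush kuwo:start_url http://www.kuwo.cn/mingxing

Different slaves can push different links to kuwo:start_url.

Start the crawler on the slave:

scrapy runspider kuwo_spider.py

VIII. Use an IP proxy pool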

1. Modify settings.py, adding:

DOWNLOADER_MIDDLEWARES = {
    'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware': None,
    'songSpider.middlewares.ProxyMiddleWare': 125,
    'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware': None,
}

2. Modify middlewares.py, adding:

import random

import pymysql


# IP proxy pool
class ProxyMiddleWare(object):
    """Downloader middleware that routes requests through random proxies."""

    def process_request(self, request, spider):
        '''Attach a proxy to the request object.'''
        proxy = self.get_random_proxy()
        if proxy:
            print("this is request ip:" + proxy)
            request.meta['proxy'] = proxy

    def process_response(self, request, response, spider):
        '''Handle the returned response.'''
        # If the response status is not 200, retry the request through another proxy
        if response.status != 200:
            proxy = self.get_random_proxy()
            if proxy:
                print("this is response ip:" + proxy)
                # Copy the request and set dont_filter so the dupefilter does not drop the retry
                retry_request = request.copy()
                retry_request.dont_filter = True
                retry_request.meta['proxy'] = proxy
                return retry_request
        return response

    def get_random_proxy(self):
        '''Read a random proxy from the IP database; return None if the pool is empty.'''
        # Connect to the database
        con = pymysql.connect(
            host='localhost',      # database host
            user='root',           # user name
            password='123456',     # password
            database='proxypool',  # database name
            port=3306,             # port
            charset='utf8'
        )
        try:
            # Get a query cursor
            cursor = con.cursor()
            # Fetch the IPs
            cursor.execute('select ip,ip_port from proxypool')
            # Take the query results from the cursor
            ip_ports = cursor.fetchall()
            cursor.close()
        finally:
            # Close the connection
            con.close()
        # %s formatting works whether ip_port is stored as text or as a number
        proxies = ["http://%s:%s" % (ip, port) for ip, port in ip_ports]
        return random.choice(proxies) if proxies else None
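The selection step can be tried on its own, without a database. The sketch below turns (ip, port) rows into proxy URLs and picks one at random. The helper names are hypothetical, and the rows are made-up documentation-range addresses standing in for what cursor.fetchall() might return.

```python
import random


def build_proxies(rows):
    """Turn (ip, port) rows into proxy URLs; port may be a str or an int."""
    return ["http://%s:%s" % (ip, port) for ip, port in rows]


def pick_proxy(rows, rng=random):
    """Return a random proxy URL, or None when the pool is empty."""
    proxies = build_proxies(rows)
    return rng.choice(proxies) if proxies else None


# Hypothetical rows for illustration only.
rows = (("192.0.2.10", "8080"), ("192.0.2.11", 3128))
proxies = build_proxies(rows)
chosen = pick_proxy(rows, random.Random(0))
empty = pick_proxy(())
```

Returning None for an empty pool lets the caller decide whether to send the request without a proxy or to skip it.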