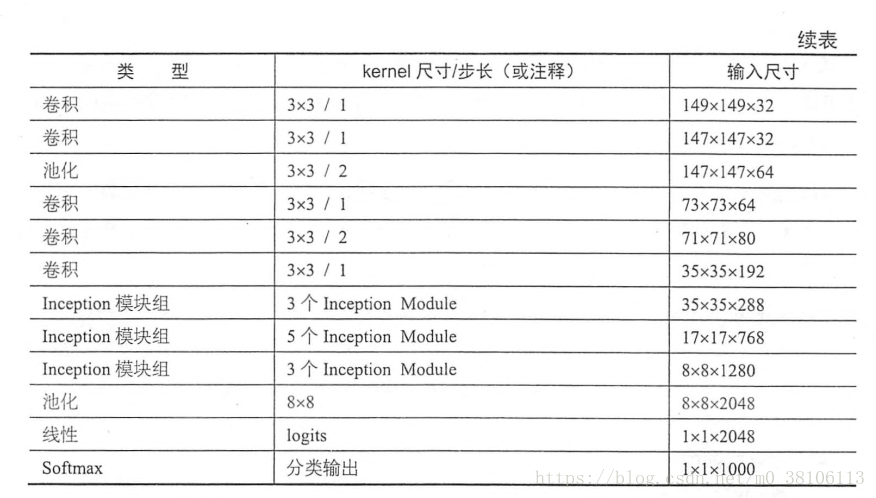

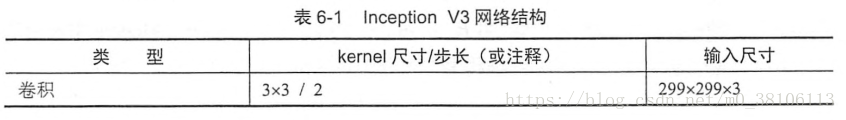

Network structure analysis

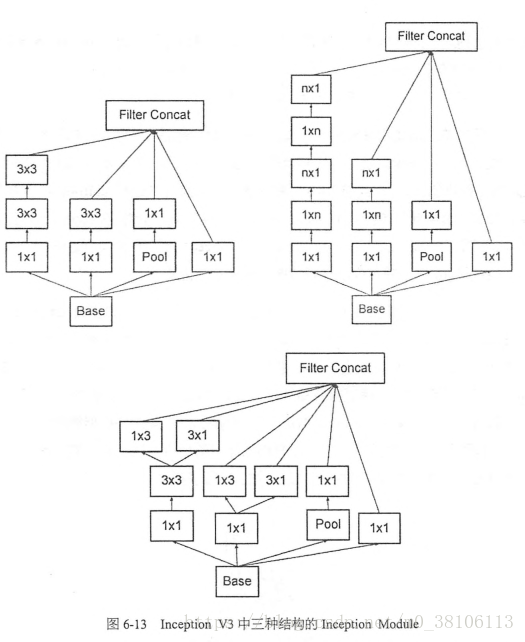

The Inception module groups are structured as follows:

The complete model is as follows:

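Code implementation

1. Import modules

# Requires TensorFlow 1.x: tf.contrib was removed in TensorFlow 2.x.
import tensorflow as tf
import tensorflow.contrib.slim as slim
from datetime import datetime
import math
import time

2. Implement a simple function, trunc_normal, that produces a truncated normal initializer

trunc_normal = lambda stddev: tf.truncated_normal_initializer(0.0, stddev)

3. Define inception_v3_arg_scope, which generates default arguments for functions used throughout the network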

def inception_v3_arg_scope(weight_decay=0.00004, stddev=0.1,
                           batch_norm_var_collection='moving_vars'):
    """Generate default arguments for functions used throughout the network."""
    batch_norm_params = {
        'decay': 0.9997,
        'epsilon': 0.001,
        'updates_collections': tf.GraphKeys.UPDATE_OPS,
        'variables_collections': {
            'beta': None,
            'gamma': None,
            'moving_mean': [batch_norm_var_collection],
            'moving_variance': [batch_norm_var_collection]
        }
    }
    # slim.arg_scope assigns default values to the arguments of the listed functions.
    # Here it sets weights_regularizer=slim.l2_regularizer(weight_decay) for
    # slim.conv2d and slim.fully_connected, so the argument only has to be passed
    # explicitly where it should differ from the default.
    with slim.arg_scope([slim.conv2d, slim.fully_connected],
                        weights_regularizer=slim.l2_regularizer(weight_decay)):
        # Then configure further defaults for the convolution layers only.
        with slim.arg_scope([slim.conv2d],
                            weights_initializer=tf.truncated_normal_initializer(stddev=stddev),
                            activation_fn=tf.nn.relu,
                            normalizer_fn=slim.batch_norm,
                            normalizer_params=batch_norm_params) as sc:
            return sc

4. Define inception_v3_base, which builds the convolutional part of the Inception V3 network

def inception_v3_base(inputs, scope=None):
    """Build the convolutional base of Inception V3."""
    end_points = {}  # stores frequently used key nodes
    # Q1: what do the three arguments of variable_scope mean? (see section 9)
    # Build the non-Inception layers (the stem).
    with tf.variable_scope(scope, 'InceptionV3', [inputs]):
        with slim.arg_scope([slim.conv2d, slim.max_pool2d, slim.avg_pool2d],
                            stride=1, padding='VALID'):
            # 299 x 299 x 3
            net = slim.conv2d(inputs, 32, [3, 3], stride=2, scope='Conv2d_1a_3x3')
            # 149 x 149 x 32
            net = slim.conv2d(net, 32, [3, 3], scope='Conv2d_2a_3x3')
            # 147 x 147 x 32
            net = slim.conv2d(net, 64, [3, 3], padding='SAME', scope='Conv2d_2b_3x3')
            # 147 x 147 x 64
            net = slim.max_pool2d(net, [3, 3], stride=2, scope='MaxPool_3a_3x3')
            # 73 x 73 x 64
            # The last three layers are a 1x1 conv, a stride-1 3x3 conv and a stride-2
            # pool, which is what the shape comments describe. With VALID padding, a
            # 3x3 conv, a stride-2 3x3 conv and a stride-1 pool would give 71, 35 and 33
            # instead, and the final 8x8 average pooling would no longer fit.
            net = slim.conv2d(net, 80, [1, 1], scope='Conv2d_3b_1x1')
            # 73 x 73 x 80
            net = slim.conv2d(net, 192, [3, 3], scope='Conv2d_4a_3x3')
            # 71 x 71 x 192
            net = slim.max_pool2d(net, [3, 3], stride=2, scope='MaxPool_5a_3x3')
            # 35 x 35 x 192

        with slim.arg_scope([slim.conv2d, slim.max_pool2d, slim.avg_pool2d],
                            stride=1, padding="SAME"):
            # First Inception module of the first module group: Mixed_5b
            # 35 x 35 x 256
            with tf.variable_scope('Mixed_5b'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 48, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 64, [5, 5], scope='Conv2d_0b_5x5')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope='Conv2d_0b_3x3')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope='Conv2d_0c_3x3')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 32, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

            # Second Inception module of the first module group: Mixed_5c
            # 35 x 35 x 288
            with tf.variable_scope('Mixed_5c'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 48, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 64, [5, 5], scope='Conv_1_0b_5x5')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope='Conv2d_0b_3x3')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope='Conv2d_0c_3x3')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope='Conv2d_0b_1x1')
                # Q: what does the 3 here mean?
                # A: it is the axis along which the branches are concatenated.
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

            # Third module of the first module group: Mixed_5d, identical to the previous module
            # 35 x 35 x 288
            with tf.variable_scope('Mixed_5d'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 48, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 64, [5, 5], scope='Conv_1_0b_5x5')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope='Conv2d_0b_3x3')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope='Conv2d_0c_3x3')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

            # First module of the second module group: Mixed_6a
            # 17 x 17 x 768
            with tf.variable_scope('Mixed_6a'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 384, [3, 3], stride=2,
                                           padding='VALID', scope='Conv2d_1a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 96, [3, 3], scope='Conv2d_0b_3x3')
                    branch_1 = slim.conv2d(branch_1, 96, [3, 3], stride=2,
                                           padding='VALID', scope='Conv2d_1a_1x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.max_pool2d(net, [3, 3], stride=2, padding='VALID',
                                               scope='MaxPool_1a_3x3')
                net = tf.concat([branch_0, branch_1, branch_2], 3)

            # Second Inception module of the second module group: Mixed_6b
            # mixed4: 17 x 17 x 768.
            with tf.variable_scope('Mixed_6b'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 128, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 128, [1, 7], scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope='Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 128, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 128, [7, 1], scope='Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2, 128, [1, 7], scope='Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2, 128, [7, 1], scope='Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope='Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

            # Third Inception module of the second module group: Mixed_6c
            # mixed_5: 17 x 17 x 768.
            with tf.variable_scope('Mixed_6c'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 160, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 160, [1, 7], scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope='Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 160, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope='Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2, 160, [1, 7], scope='Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope='Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope='Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

            # mixed_6: 17 x 17 x 768.
            with tf.variable_scope('Mixed_6d'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 160, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 160, [1, 7], scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope='Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 160, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope='Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2, 160, [1, 7], scope='Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope='Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope='Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

            # mixed_7: 17 x 17 x 768.
            with tf.variable_scope('Mixed_6e'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 192, [1, 7], scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope='Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 192, [7, 1], scope='Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope='Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2, 192, [7, 1], scope='Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope='Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)
            end_points['Mixed_6e'] = net

            # Start of the third module group
            # mixed_8: 8 x 8 x 1280.
            with tf.variable_scope('Mixed_7a'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                    branch_0 = slim.conv2d(branch_0, 320, [3, 3], stride=2,
                                           padding='VALID', scope='Conv2d_1a_3x3')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 192, [1, 7], scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope='Conv2d_0c_7x1')
                    branch_1 = slim.conv2d(branch_1, 192, [3, 3], stride=2,
                                           padding='VALID', scope='Conv2d_1a_3x3')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.max_pool2d(net, [3, 3], stride=2, padding='VALID',
                                               scope='MaxPool_1a_3x3')
                net = tf.concat([branch_0, branch_1, branch_2], 3)

            # mixed_9: 8 x 8 x 2048.
            with tf.variable_scope('Mixed_7b'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 320, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 384, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = tf.concat([
                        slim.conv2d(branch_1, 384, [1, 3], scope='Conv2d_0b_1x3'),
                        slim.conv2d(branch_1, 384, [3, 1], scope='Conv2d_0b_3x1')], 3)
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 448, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(
                        branch_2, 384, [3, 3], scope='Conv2d_0b_3x3')
                    branch_2 = tf.concat([
                        slim.conv2d(branch_2, 384, [1, 3], scope='Conv2d_0c_1x3'),
                        slim.conv2d(branch_2, 384, [3, 1], scope='Conv2d_0d_3x1')], 3)
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(
                        branch_3, 192, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

            # mixed_10: 8 x 8 x 2048.
            with tf.variable_scope('Mixed_7c'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 320, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 384, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = tf.concat([
                        slim.conv2d(branch_1, 384, [1, 3], scope='Conv2d_0b_1x3'),
                        slim.conv2d(branch_1, 384, [3, 1], scope='Conv2d_0c_3x1')], 3)
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 448, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(
                        branch_2, 384, [3, 3], scope='Conv2d_0b_3x3')
                    branch_2 = tf.concat([
                        slim.conv2d(branch_2, 384, [1, 3], scope='Conv2d_0c_1x3'),
                        slim.conv2d(branch_2, 384, [3, 1], scope='Conv2d_0d_3x1')], 3)
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(
                        branch_3, 192, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)
    return net, end_points

5. The function that builds the full Inception V3 network, adding the auxiliary classifier and the final pooling, linear and softmax layers

def inception_v3(inputs,
                 num_classes=1000,
                 is_training=True,
                 dropout_keep_prob=0.8,
                 prediction_fn=slim.softmax,
                 spatial_squeeze=True,
                 reuse=None,
                 scope='InceptionV3'):
    with tf.variable_scope(scope, 'InceptionV3',
                           [inputs, num_classes], reuse=reuse) as scope:
        with slim.arg_scope([slim.batch_norm, slim.dropout],
                            is_training=is_training):
            net, end_points = inception_v3_base(inputs, scope=scope)
            # Auxiliary classifier (Auxiliary Logits)
            with slim.arg_scope([slim.conv2d, slim.max_pool2d, slim.avg_pool2d],
                                stride=1, padding='SAME'):
                aux_logits = end_points['Mixed_6e']
                with tf.variable_scope('AuxLogits'):
                    aux_logits = slim.avg_pool2d(aux_logits, [5, 5], stride=3,
                                                 padding='VALID', scope='AvgPool_1a_5x5')
                    aux_logits = slim.conv2d(aux_logits, 128, [1, 1], scope='Conv2d_1b_1x1')
                    aux_logits = slim.conv2d(aux_logits, 768, [5, 5],
                                             weights_initializer=trunc_normal(0.01),
                                             padding='VALID', scope='Conv2d_2a_5x5')
                    aux_logits = slim.conv2d(aux_logits, num_classes, [1, 1],
                                             activation_fn=None, normalizer_fn=None,
                                             weights_initializer=trunc_normal(0.001),
                                             scope='Conv2d_2b_1x1')
                    if spatial_squeeze:
                        # Drop the 1 x 1 height and width axes.
                        aux_logits = tf.squeeze(aux_logits, [1, 2], name='SpatialSqueeze')
                    end_points['AuxLogits'] = aux_logits
            # Main classification head
            with tf.variable_scope('Logits'):
                net = slim.avg_pool2d(net, [8, 8], padding='VALID', scope='AvgPool_1a_8x8')
                net = slim.dropout(net, keep_prob=dropout_keep_prob, scope='Dropout_1b')
                end_points['PreLogits'] = net
                logits = slim.conv2d(net, num_classes, [1, 1], activation_fn=None,
                                     normalizer_fn=None, scope='Conv2d_1c_1x1')
                if spatial_squeeze:
                    logits = tf.squeeze(logits, [1, 2], name='SpatialSqueeze')
            end_points['Logits'] = logits
            end_points['Predictions'] = prediction_fn(logits, scope='Predictions')
    return logits, end_points

6. Define a benchmarking helper that measures the time taken per step

def time_tensorflow_run(session, target, info_string):
    """
    Measure the time Inception V3 takes per step.
    :param session: the TensorFlow Session
    :param target: the op to evaluate
    :param info_string: name of the test
    :return: None; the timings are printed
    """
    num_steps_burn_in = 10  # warm-up steps; the first iterations are skewed by memory loading and cache misses, so they are not counted
    total_duration = 0.0  # sum of durations
    total_duration_squared = 0.0  # sum of squared durations
    # num_batches is read from the module-level variable set in step 7.
    for i in range(num_batches + num_steps_burn_in):
        start_time = time.time()
        _ = session.run(target)
        duration = time.time() - start_time
        if i >= num_steps_burn_in:  # only report and accumulate after the warm-up
            if not i % 10:
                print('%s: step %d, duration = %.3f' %
                      (datetime.now(), i - num_steps_burn_in, duration))
            total_duration += duration  # accumulated for the mean time per step
            total_duration_squared += duration * duration  # accumulated for the standard deviation
    mn = total_duration / num_batches  # mean time per step
    vr = total_duration_squared / num_batches - mn * mn  # variance: E[x^2] - E[x]^2
    sd = math.sqrt(vr)  # standard deviation
    print('%s: %s across %d steps, %.3f +/- %.3f sec / batch' %
          (datetime.now(), info_string, num_batches, mn, sd))

7. Run the forward-pass benchmark

batch_size = 32
height, width = 299, 299
inputs = tf.random_uniform((batch_size, height, width, 3))
with slim.arg_scope(inception_v3_arg_scope()):
    logits, end_points = inception_v3(inputs, is_training=False)
init = tf.global_variables_initializer()
sess = tf.Session()
sess.run(init)
num_batches = 100
time_tensorflow_run(sess, logits, "Forward")

P.S. I ran this on a laptop CPU, so I only did the forward pass, and the resulting speed is not impressive. To get a complete training setup, add a loss function and an optimizer yourself, then feed in data and it will run.

2018-08-13 12:08:11.923101: step 0, duration = 12.203

2018-08-13 12:10:13.557725: step 10, duration = 12.145

2018-08-13 12:12:15.377852: step 20, duration = 12.168

2018-08-13 12:14:17.043393: step 30, duration = 12.158

2018-08-13 12:16:18.598230: step 40, duration = 12.145

2018-08-13 12:18:20.088241: step 50, duration = 12.148

2018-08-13 12:20:21.695936: step 60, duration = 12.139

2018-08-13 12:22:23.915995: step 70, duration = 12.159

2018-08-13 12:24:25.987449: step 80, duration = 12.178

2018-08-13 12:26:28.586507: step 90, duration = 12.221

2018-08-13 12:28:18.476547: Forward across 100 steps, 12.188 +/- 0.067 sec / batch

8. InceptionV3 summary

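(1) Factorization into small convolutions is very effective: it reduces the number of parameters, mitigates overfitting, and increases the network's nonlinear expressive power.

(2) From input to output, a convolutional network should gradually shrink the spatial size and increase the number of output channels, that is, simplify the spatial structure and convert spatial information into higher-order abstract features.

(3) The Inception Module's approach of using several branches to extract high-order features at different levels of abstraction is very effective and enriches the network's expressive power.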
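The parameter saving in point (1) can be checked with a quick weight count. The sketch below is plain Python rather than TensorFlow. The helper names and the channel count of 64 are illustrative assumptions, biases are ignored, and every layer is assumed to keep the same number of channels.

```python
def conv_weights(kernel_h, kernel_w, in_channels, out_channels):
    """Number of weights in one convolution layer (biases ignored)."""
    return kernel_h * kernel_w * in_channels * out_channels


def stack_weights(kernels, channels):
    """Total weights of consecutive conv layers that all keep `channels` channels."""
    return sum(conv_weights(h, w, channels, channels) for h, w in kernels)


channels = 64  # illustrative value, not taken from the network above

single_5x5 = stack_weights([(5, 5)], channels)
two_3x3 = stack_weights([(3, 3), (3, 3)], channels)   # same 5x5 receptive field
single_7x7 = stack_weights([(7, 7)], channels)
asym_7 = stack_weights([(1, 7), (7, 1)], channels)    # same 7x7 receptive field

print('5x5:', single_5x5, 'vs two 3x3:', two_3x3)
print('7x7:', single_7x7, 'vs 1x7 + 7x1:', asym_7)
```

Under these assumptions two stacked 3x3 layers need 18/25 of the weights of one 5x5 layer, and a 1x7 followed by a 7x1 needs 14/49 of the weights of one 7x7 layer, while each stack also gains an extra nonlinearity between its layers.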

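9. Questions and answers

1. Understanding slim.arg_scope

slim.arg_scope assigns default values to the arguments of the listed functions. For example, with slim.arg_scope([slim.conv2d, slim.fully_connected], weights_regularizer=slim.l2_regularizer(weight_decay)) sets weights_regularizer to slim.l2_regularizer(weight_decay) by default for both slim.conv2d and slim.fully_connected. After that, the argument no longer has to be passed on every call, only where it should differ from the default. This simplifies the code of complex networks considerably.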

2. What do the three arguments of variable_scope mean here?

with tf.variable_scope(scope, 'InceptionV3', [inputs]):

See the TensorFlow documentation:

__init__(
    name_or_scope,
    default_name=None,
    values=None,
    .....
)

name_or_scope: string or VariableScope: the scope to open.

default_name: The default name to use if the name_or_scope argument is None, this name will be uniquified. If name_or_scope is provided it won't be used and therefore it is not required and can be None.

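values: The list of Tensor arguments that are passed to the op function.

In short, the first argument specifies the variable scope to open;

the second is the default name for the scope, and it is ignored unless the first argument is None;

the third lists the tensors used by the functions inside the scope, which must be passed in explicitly.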

3. Why does the code not match the structure in the book?
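The shape comments in the two stem listings of this question can be checked by hand with TensorFlow's padding rules: with 'SAME' the output size is ceil(in / stride), and with 'VALID' it is ceil((in - kernel + 1) / stride). The sketch below is plain Python. The function names and the (kernel, stride, padding) tuples are an illustrative encoding of the two listings, not part of the original code.

```python
import math


def output_size(size, kernel, stride=1, padding='VALID'):
    """Spatial output size of a conv or pool layer under TensorFlow's padding rules."""
    if padding == 'SAME':
        return math.ceil(size / stride)
    if padding == 'VALID':
        return math.ceil((size - kernel + 1) / stride)
    raise ValueError('unknown padding: %r' % padding)


def run_stem(layers, size=299):
    """Return the spatial size after each (kernel, stride, padding) layer."""
    sizes = []
    for kernel, stride, padding in layers:
        size = output_size(size, kernel, stride, padding)
        sizes.append(size)
    return sizes


# The first four layers are the same in both listings.
shared = [(3, 2, 'VALID'), (3, 1, 'VALID'), (3, 1, 'SAME'), (3, 2, 'VALID')]

# First listing: 1x1 conv, 3x3 conv with stride 1, 3x3 pool with stride 2.
first_listing = shared + [(1, 1, 'VALID'), (3, 1, 'VALID'), (3, 2, 'VALID')]

# Second listing: 3x3 conv, 3x3 conv with stride 2, 3x3 pool with stride 1.
second_listing = shared + [(3, 1, 'VALID'), (3, 2, 'VALID'), (3, 1, 'VALID')]

print(run_stem(first_listing))
print(run_stem(second_listing))
```

Under these rules the first listing ends at 35 x 35 and the second at 33 x 33, which matters because the later 8 x 8 average pooling assumes the sizes that follow from a 35 x 35 input.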

# 299 x 299 x 3
net = slim.conv2d(inputs, 32, [3, 3], stride=2, scope='Conv2d_1a_3x3')
# 149 x 149 x 32
net = slim.conv2d(net, 32, [3, 3], scope='Conv2d_2a_3x3')
# 147 x 147 x 32
net = slim.conv2d(net, 64, [3, 3], padding='SAME', scope='Conv2d_2b_3x3')
# 147 x 147 x 64
net = slim.max_pool2d(net, [3, 3], stride=2, scope='MaxPool_3a_3x3')
# 73 x 73 x 64
net = slim.conv2d(net, 80, [1, 1], scope='Conv2d_3b_1x1')
# 73 x 73 x 80
net = slim.conv2d(net, 192, [3, 3], scope='Conv2d_4a_3x3')
# 71 x 71 x 192
net = slim.max_pool2d(net, [3, 3], stride=2, scope='MaxPool_5a_3x3')
# 35 x 35 x 192

The listing above is the code as printed in the book, and it does not match the structure described there. The version below changes the last three layers to a 3x3 convolution, a stride-2 3x3 convolution and a stride-1 pool. Note, however, that with the VALID padding set for these layers the sizes then become 71, 35 and 33 rather than 73, 71 and 35, so the 35 x 35 x 192 input assumed by the Inception modules is reached only by the book's listing:

# 299 x 299 x 3
net = slim.conv2d(inputs, 32, [3, 3], stride=2, scope='Conv2d_1a_3x3')
# 149 x 149 x 32
net = slim.conv2d(net, 32, [3, 3], scope='Conv2d_2a_3x3')
# 147 x 147 x 32
net = slim.conv2d(net, 64, [3, 3], padding='SAME', scope='Conv2d_2b_3x3')
# 147 x 147 x 64
net = slim.max_pool2d(net, [3, 3], stride=2, scope='MaxPool_3a_3x3')
# 73 x 73 x 64
net = slim.conv2d(net, 80, [3, 3], scope='Conv2d_3b_1x1')
# 71 x 71 x 80
net = slim.conv2d(net, 192, [3, 3], stride=2, scope='Conv2d_4a_3x3')
# 35 x 35 x 192
net = slim.max_pool2d(net, [3, 3], scope='MaxPool_5a_3x3')
# 33 x 33 x 192

4. What does the 3 here mean, and how is it defined?

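net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

The 3 is the axis argument. The tensors here are laid out as [batch, height, width, channels], so axis 3 is the channel dimension: the branches of an Inception module share the same batch size and spatial size and are joined along their channels, which is why the channel counts add up. The value does not depend on the number of branches. The size-1 height and width axes left after the final pooling are removed at the end with tf.squeeze.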
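As a small illustration of concatenating along the channel axis, the sketch below computes the resulting shape in plain Python. The function concat_shape is a hypothetical helper written for this example; the branch shapes are those of Mixed_5b for a batch of 32.

```python
def concat_shape(shapes, axis):
    """Shape obtained by concatenating tensors of the given shapes along `axis`."""
    first = shapes[0]
    for shape in shapes[1:]:
        if len(shape) != len(first):
            raise ValueError('all inputs must have the same rank')
        for dim, (a, b) in enumerate(zip(first, shape)):
            # Every dimension except the concatenation axis has to match.
            if dim != axis and a != b:
                raise ValueError('dimension %d differs: %d vs %d' % (dim, a, b))
    result = list(first)
    result[axis] = sum(shape[axis] for shape in shapes)
    return tuple(result)


# [batch, height, width, channels] for the four branches of Mixed_5b.
branches = [
    (32, 35, 35, 64),   # Branch_0: 1x1 conv
    (32, 35, 35, 64),   # Branch_1: 1x1 conv, then 5x5 conv
    (32, 35, 35, 96),   # Branch_2: 1x1 conv, then two 3x3 convs
    (32, 35, 35, 32),   # Branch_3: average pool, then 1x1 conv
]

mixed_5b = concat_shape(branches, axis=3)
print(mixed_5b)
```

The channel counts 64 + 64 + 96 + 32 add up to the 256 channels noted in the comment for Mixed_5b, while batch, height and width stay unchanged.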