前言

前些日子在忙其他的事情,一直没有更新自己学习神经网络的博客,就在端午这天更吧!也祝大家端午节愉快,身体安康。

这篇博客主要讲的是Google Inception Net,Google Net从Inception V1到Inception V4,可以说都是干货满满,充满了各种知识点,我自己也学习和消化了很长一段时。今天就向大家简单地介绍一下我认为比较有意思的地方。

Google Net的发展

Inception V1

Inception V1首次出现是在ILSVRC 2014的比赛中,它主要的创新两点。第一,去除了以往神经网络最后的全连接层,而改用平均池化层。因为全连接层往往占据了神经网络的大部分的参数量,它耗费了大量的计算和存储资源,而且还容易引起过拟合,这一改进让网络训练的更快并有效地减轻了过拟合。第二就是精心设计了一种Inception Module 。

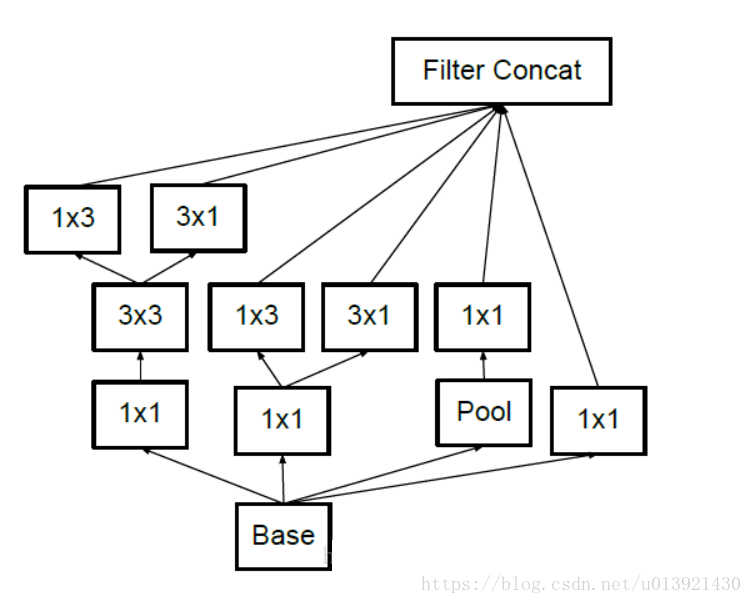

Inception Module如同一个大网络中的小网络。它使用1x1的卷积核可以有效的跨通道整合信息,又包含了不同大小的卷积核和池化层,然后将这些输出进行信息组合,这样可以提取图像中不同尺度的信息,使模型更具泛化能力,提高了参数的利用率。而且,它符合Hebbian原理的稀疏结构。

Inception V2

Inception V2学习了VGGNet,用两个3*3的卷积代替5*5的大卷积核(降低参数量的同时减轻了过拟合),同时还提出了非常著名的Batch Normalization(简称BN)方法。BN是一个非常有效的正则化方法,可以让大型卷积网络的训练速度加快很多倍,同时收敛后的分类准确率可以的到大幅度提高。

Batch Normalization

BN方法在15年被提出,此后一直被使用在各种网络中,可以说是非常nice的。众所周知,CNN网络在训练的过程中,前一层的参数变化影响着后面层的变化,并且这种影响会随着网络深度的增加而不断被传递和放大。目前在CNN训练时,绝大多数都采用mini-batch使用随机梯度下降算法进行训练,那么如果输入数据的不断变化,导致网络中参数不断调整,网络的各层输入数据的分布则会不断变化,那么在训练的过程每一层的参数就会不断跳变以适应这种新的数据分布,难以收敛。

BN方法通过让对每一层的输入进行归一化处理,减小了数据的变化,加快了梯度下降的速率。(最开始听吴恩达老师讲数据归一化,我以为只是对输入数据做归一化,BN是对每一层的输入都做了归一化)

想要简单地理解BN的过程其实也好说,你可以简单的认为它对将输入数据减去均值,然后除以了标准差,但是实际上要比这个复杂。在方差项添加了一个偏差epislon,防止方差为0;还添加了两个可训练的参数gamma和beta,对其进行缩放和平移,以保证每一次输入数据的分布比较统一。

BN在用于神经网络某层时,会对每一个mini-batch数据的内部进行标准化处理,使输出规范化到(0,1)的正态分布,减少了Internal Covariate Shift(内部神经元分布的改变)。BN论文指出,传统的深度神经网络在训练时,每一层的输入的分布都在变化,导致训练变得困难,我们只能使用一个很小的学习速率解决这个问题。而对每一层使用BN之后,我们可以有效的解决这个问题,学习速率可以增大很多倍,达到之间的准确率需要的迭代次数有需要1/14,训练时间大大缩短,并且在达到之间准确率后,可以继续训练。以为BN某种意义上还起到了正则化的作用,所有可以减少或取消Dropout,简化网络结构。

此外,在使用BN时,需要一些调整,包括增大学习率并加快学习衰减速度以适应BN规范化后的数据;去除Dropout并减轻L2正则(BN已起到正则化的作用);去除LRN;更彻底地对训练样本进行shuffle(这是个啥!!);减少数据增广过程中对数据的光学畸变(BN训练更快,每个样本被训练的次数更少,因此真实的样本对训练更有帮助)

Inception V3

Inception V3中对网络的改造主要有两个方面,第一是进一步拆卸了卷积核,比如将一个7X7的卷积核分解成为一个1x7的卷积核与一个7X1的卷积核,这样可以节约参数,加速运算并减轻过拟合(与VGGNet中的思想相同)。论文中指出,这样非对称的卷积结构拆分,结果比对称地拆分为几个相同的小卷积核效果更明显,可以处理更多、更丰富的空间特征、增加特征多样性。

另一方面,Inception V3优化了Inception Module的结构,这些Inception Module只在网络的后部出现,前部还是普通的卷积层。并且还在一些Inception Module的分支中又使用了分支,如下图所示。

Inception V4

Inception V4相比V3主要是结合了ResNet。

代码实现

按照书上的代码,我实现了InceptionV3网络,但是还是要简单地说一下一下代码。在书上的代码中大量使用了slim库中的函数。slim是一种轻量级的tensorflow库,可以使模型的构建,训练,测试都变得更加简单。

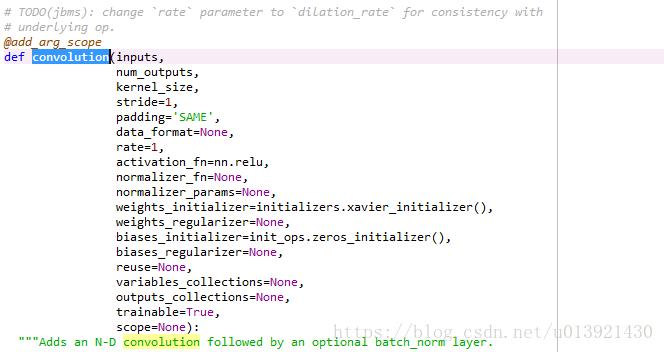

例如slim.arg_scope()函数,这个函数可以给在其范围内使用的函数定义好默认参数,这样可以节约代码编写的时间。

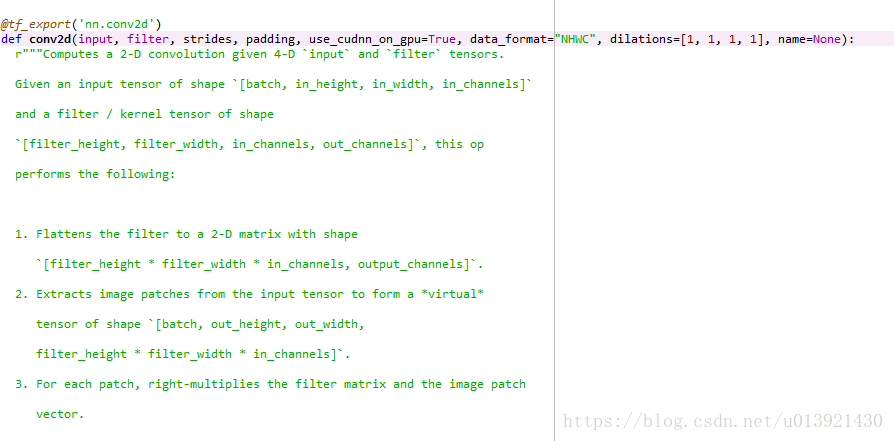

又比如slim.conv2d(),其实这个函数也就是一个卷积层,但是它与以前使用到的tf.nn.conv2d()有所不同;它提供了更多的默认参数,可以直接用它进行许多操作,比如添加偏差、进行非线性激活、L2正则化等。当只输入几个 input,filter, rides,padding四个参数时,二者是等同的。

tf.nn.conv2d()的定义

slim.conv2d()的定义

代码

# -*- coding: utf-8 -*-

"""

Created on Thu Jun 14 17:12:51 2018

@author: most_pan

"""

#定义函数,导入tensorflow等相关依赖库,产生截断的正态分布函数

import tensorflow as tf

import time

from datetime import datetime

import math

slim = tf.contrib.slim

trunc_normal = lambda stddev: tf.truncated_normal_initializer(0, 0, stddev)

#定义函数inception_v3_arg_scope,生成网络中常用到的函数的默认参数

#weight_decay是l2正则化的权重,stddev是卷积核权重初始化的标准差

def inception_v3_arg_scope(weight_decay = 0.00004, stddev = 0.1, batch_norm_var_collection = 'moving_vars'):

#BN的参数

batch_norm_params = {

'decay': 0.9997,

'epsilon': 0.001,

'updates_collections': tf.GraphKeys.UPDATE_OPS,

'variables_collections': {

'beta': None,

'gamma': None,

'moving_mean': [batch_norm_var_collection],

'moving_variance': [batch_norm_var_collection],

}

}

#为卷积层和全连接层设置默认的l2正则化参数

with slim.arg_scope([slim.conv2d, slim.fully_connected], weights_regularizer = slim.l2_regularizer(weight_decay)):

#为卷积层设置默认的初始化方式为截断的正态分布

#卷积层的激活函数使用relu,在激活函数后面添加BN层,为下一层的输入进行归一化,归一化的参数为normalizer_params中的参数

with slim.arg_scope([slim.conv2d],

weights_initializer = tf.truncated_normal_initializer(stddev = stddev),

activation_fn = tf.nn.relu,

normalizer_fn = slim.batch_norm,

normalizer_params = batch_norm_params) as sc:

return sc

#定义inception_v3_base,生成Inception V3网络结构中Inception modules 及其之前的部分

def inception_v3_base(inputs, scope = None):

end_points = {}

with tf.variable_scope(scope, 'InceptionV3', [inputs]):

with slim.arg_scope(

[slim.conv2d, slim.max_pool2d, slim.avg_pool2d],stride = 1, padding = 'VALID'):

net = slim.conv2d(inputs, 32, [3, 3], stride = 2, scope = 'Conv2d_1a_3x3')

net = slim.conv2d(net, 32, [3, 3], scope = 'Conv2d_2a_3x3')

net = slim.conv2d(net, 64, [3, 3], padding = 'SAME', scope = 'Conv2d_2b_3x3')

net = slim.max_pool2d(net, [3, 3], stride = 2, scope = 'MaxPool_3a_3x3')

net = slim.conv2d(net, 80, [1, 1], scope = 'Conv2d_3b_1x1')

net = slim.conv2d(net, 192, [3, 3], scope = 'Conv2d_4a_3x3')

net = slim.max_pool2d(net, [3, 3], stride = 2, scope = 'MaxPool_5a_3x3')

#以上定义的是inception v3中inception modules 之前的卷积和池化部分

#第1个Inception模块组的第一个module

with slim.arg_scope([slim.conv2d, slim.max_pool2d, slim.avg_pool2d], stride = 1, padding = 'SAME'):

with tf.variable_scope('Mixed_5b'):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 64, [1, 1], scope = 'Conv2d_0a_1x1')

with tf.variable_scope('Branch_1'):

branch_1 = slim.conv2d(net, 48, [1, 1], scope = 'Conv2d_0a_1x1')

branch_1 = slim.conv2d(branch_1, 64, [5, 5], scope = 'Conv2d_0b_5x5')

with tf.variable_scope('Branch_2'):

branch_2 = slim.conv2d(net, 64, [1, 1], scope = 'Conv2d_0a_1x1')

branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope = 'Conv2d_0b_3x3')

branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope = 'Conv2d_0c_3x3')

with tf.variable_scope('Branch_3'):

branch_3 = slim.avg_pool2d(net, [3, 3], scope = 'AvgPool_0a_3x3')

branch_3 = slim.conv2d(branch_3, 32, [1, 1], scope = 'Conv2d_0b_1x1')

#tf.concat()函数将第四个维度拼接起来,前三个维度分别是图像的尺寸,第四个为输出通道数

net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

#第二个module

with tf.variable_scope('Mixed_5c'):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 64, [1, 1], scope = 'Conv2d_0a_1x1')

with tf.variable_scope('Branch_1'):

branch_1 = slim.conv2d(net, 48, [1, 1], scope = 'Conv2d_0b_1x1')

branch_1 = slim.conv2d(branch_1, 64, [5, 5], scope = 'Conv2d_0c_5x5')

with tf.variable_scope('Branch_2'):

branch_2 = slim.conv2d(net, 64, [1, 1], scope = 'Conv2d_0a_1x1')

branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope = 'Conv2d_0b_3x3')

branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope = 'Conv2d_0c_3x3')

with tf.variable_scope('Branch_3'):

branch_3 = slim.avg_pool2d(net, [3, 3], scope = 'AvgPool_0a_3x3')

branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope = 'Conv2d_0b_1x1')

net = tf.concat([branch_0, branch_1, branch_2,branch_3], 3)

#第三个module

with tf.variable_scope('Mixed_5d'):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 64, [1, 1], scope = 'Conv2d_0a_1x1')

with tf.variable_scope('Branch_1'):

branch_1 = slim.conv2d(net, 48, [1, 1], scope = 'Conv2d_0a_1x1')

branch_1 = slim.conv2d(branch_1, 64, [5, 5], scope = 'Conv2d_0b_5x5')

with tf.variable_scope('Branch_2'):

branch_2 = slim.conv2d(net, 64, [1, 1], scope = 'Conv2d_0a_1x1')

branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope = 'Conv2d_0b_3x3')

branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope = 'Conv2d_0c_3x3')

with tf.variable_scope('Branch_3'):

branch_3 = slim.avg_pool2d(net, [3, 3], scope = 'AvgPool_0a_3x3')

branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope = 'Conv2d_0b_1x1')

net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)#把每个分支的输出以第三个维度合并,即通道数合并

#第2个Inception的第一个module

with tf.variable_scope('Mixed_6a'):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 384, [3, 3], stride = 2, padding = 'VALID', scope = 'Conv2d_1a_1x1')

with tf.variable_scope('Branch_1'):

branch_1 = slim.conv2d(net, 64, [1, 1], scope = 'Conv2d_0a_1x1')

branch_1 = slim.conv2d(branch_1, 96, [3, 3], scope = 'Conv2d_0b_3x3')

branch_1 = slim.conv2d(branch_1, 96, [3, 3], stride = 2, padding = 'VALID', scope = 'Conv2d_1a_1x1')

with tf.variable_scope('Branch_2'):

branch_2 = slim.max_pool2d(net, [3, 3], stride = 2, padding = 'VALID', scope = 'Conv2d_1a_3x3')

net = tf.concat([branch_0, branch_1, branch_2], 3)

#第二个module

with tf.variable_scope('Mixed_6b'):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 192, [1, 1], scope = 'Conv2d_0a_1x1')

with tf.variable_scope('Branch_1'):

branch_1 = slim.conv2d(net, 128, [1, 1], scope = 'Conv2d_0a_1x1')

branch_1 = slim.conv2d(branch_1, 128, [1, 7], scope = 'Conv2d_0b_1x7')

branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope = 'Conv2d_0c_7x1')

with tf.variable_scope('Branch_2'):

branch_2 = slim.conv2d(net, 128, [1, 1], scope = 'Conv2d_0a_1x1')

branch_2 = slim.conv2d(branch_2, 128, [7, 1], scope = 'Conv2d_0b_7x1')

branch_2 = slim.conv2d(branch_2, 128, [1, 7], scope = 'Conv2d_0c_1x7')

branch_2 = slim.conv2d(branch_2, 128, [7, 1], scope = 'Conv2d_0d_7x1')

branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope = 'Conv2d_0e_1x7')

with tf.variable_scope('Branch_3'):

branch_3 = slim.max_pool2d(net, [3, 3], scope = 'AvgPool_0a_3x3')

branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope = 'Conv2d_0b_1x1')

net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

#第三个module

with tf.variable_scope('Mixed_6c'):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 192, [1, 1], scope = 'Conv2d_0a_1x1')

with tf.variable_scope('Branch_1'):

branch_1 = slim.conv2d(net, 160, [1, 1], scope = 'Conv2d_0a_1x1')

branch_1 = slim.conv2d(branch_1, 160, [1, 7], scope = 'Conv2d_0b_1x7')

branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope = 'Conv2d_0c_7x1')

with tf.variable_scope('Branch_2'):

branch_2 = slim.conv2d(net, 160, [1, 1], scope = 'Conv2d_0a_1x1')

branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope = 'Conv2d_0b_7x1')

branch_2 = slim.conv2d(branch_2, 160, [1, 7], scope = 'Conv2d_0c_1x7')

branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope = 'Conv2d_0d_7x1')

branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope = 'Conv2d_0e_1x7')

with tf.variable_scope('Branch_3'):

branch_3 = slim.max_pool2d(net, [3, 3], scope = 'AvgPool_0a_3x3')

branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope = 'Conv2d_0b_1x1')

net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

#第四个module

with tf.variable_scope('Mixed_6d'):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 192, [1, 1], scope = 'Conv2d_0a_1x1')

with tf.variable_scope('Branch_1'):

branch_1 = slim.conv2d(net, 160, [1, 1], scope = 'Conv2d_0a_1x1')

branch_1 = slim.conv2d(branch_1, 160, [1, 7], scope = 'Conv2d_0b_1x7')

branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope = 'Conv2d_0c_7x1')

with tf.variable_scope('Branch_2'):

branch_2 = slim.conv2d(net, 160, [1, 1], scope = 'Conv2d_0a_1x1')

branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope = 'Conv2d_0b_7x1')

branch_2 = slim.conv2d(branch_2, 160, [1, 7], scope = 'Conv2d_0c_1x7')

branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope = 'Conv2d_0d_7x1')

branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope = 'Conv2d_0e_1x7')

with tf.variable_scope('Branch_3'):

branch_3 = slim.max_pool2d(net, [3, 3], scope = 'AvgPool_0a_3x3')

branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope = 'Conv2d_0b_1x1')

net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

#第五个module

with tf.variable_scope('Mixed_6e'):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 192, [1, 1], scope = 'Conv2d_0a_1x1')

with tf.variable_scope('Branch_1'):

branch_1 = slim.conv2d(net, 160, [1, 1], scope = 'Conv2d_0a_1x1')

branch_1 = slim.conv2d(branch_1, 160, [1, 7], scope = 'Conv2d_0b_1x7')

branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope = 'Conv2d_0c_7x1')

with tf.variable_scope('Branch_2'):

branch_2 = slim.conv2d(net, 160, [1, 1], scope = 'Conv2d_0a_1x1')

branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope = 'Conv2d_0b_7x1')

branch_2 = slim.conv2d(branch_2, 160, [1, 7], scope = 'Conv2d_0c_1x7')

branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope = 'Conv2d_0d_7x1')

branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope = 'Conv2d_0e_1x7')

with tf.variable_scope('Branch_3'):

branch_3 = slim.max_pool2d(net, [3, 3], scope = 'AvgPool_0a_3x3')

branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope = 'Conv2d_0b_1x1')

net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

#把Mixed_6e作为单独输出,作为辅助分类节点

end_points['Mixed_6e'] = net

#第3个Inception的第一个module

with tf.variable_scope('Mixed_7a'):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 192, [1, 1], scope = 'Conv2d_0a_1x1')

branch_0 = slim.conv2d(branch_0, 320, [3, 3], stride = 2, padding = 'VALID', scope = 'Conv2d_0b_3x3')

with tf.variable_scope('Branch_1'):

branch_1 = slim.conv2d(net, 192, [1, 1], scope = 'Conv2d_0a_1x1')

branch_1 = slim.conv2d(branch_1, 192, [1, 7], scope = 'Conv2d_0b_1x7')

branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope = 'Conv2d_0c_7x1')

branch_1 = slim.conv2d(branch_1, 192, [3, 3], stride = 2, padding = 'VALID', scope = 'Conv2d_0e_3x3')

with tf.variable_scope('Branch_2'):

branch_2 = slim.max_pool2d(net, [3, 3], stride = 2, padding = 'VALID', scope = 'MaxPool_1a_3x3')

net = tf.concat([branch_0, branch_1, branch_2], 3)

#第二个module

with tf.variable_scope('Mixed_7b'):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 320, [1, 1], scope = 'Conv2d_0a_1x1')

with tf.variable_scope('Branch_1'):

branch_1 = slim.conv2d(net, 384, [1, 1], scope = 'Conv2d_0a_1x1')

branch_1 = tf.concat([

slim.conv2d(branch_1, 384, [1, 3], scope = 'Conv2d_0b_1x3'),

slim.conv2d(branch_1, 384, [3, 1], scope = 'Conv2d_0c_3x1')], 3)

with tf.variable_scope('Branch_2'):

branch_2 = slim.conv2d(net, 448, [1, 1], scope = 'Conv2d_0a_1x1')

branch_2 = slim.conv2d(branch_2, 384, [3, 3], scope = 'Conv2d_0a_3x3')

branch_2 = tf.concat([

slim.conv2d(branch_2, 384, [1, 3], scope = 'Conv2d_0c_1x3'),

slim.conv2d(branch_2, 384, [3, 1], scope = 'Conv2d_0e_3x1')], 3)

with tf.variable_scope('Branch_3'):

branch_3 = slim.max_pool2d(net, [3, 3], scope = 'MaxPool_0a_3x3')

branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope = 'Conv2d_0b_1x1')

net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

#第三个module

with tf.variable_scope('Mixed_7c'):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 320, [1, 1], scope = 'Conv2d_0a_1x1')

with tf.variable_scope('Branch_1'):

branch_1 = slim.conv2d(net, 384, [1, 1], scope = 'Conv2d_0a_1x1')

branch_1 = tf.concat([

slim.conv2d(branch_1, 384, [1, 3], scope = 'Conv2d_0b_1x3'),

slim.conv2d(branch_1, 384, [3, 1], scope = 'Conv2d_0c_3x1')], 3)

with tf.variable_scope('Branch_2'):

branch_2 = slim.conv2d(net, 448, [1, 1], scope = 'Conv2d_0a_1x1')

branch_2 = slim.conv2d(branch_2, 384, [3, 3], scope = 'Conv2d_0a_3x3')

branch_2 = tf.concat([

slim.conv2d(branch_2, 384, [1, 3], scope = 'Conv2d_0c_1x3'),

slim.conv2d(branch_2, 384, [3, 1], scope = 'Conv2d_0e_3x1')], 3)

with tf.variable_scope('Branch_3'):

branch_3 = slim.max_pool2d(net, [3, 3], scope = 'MaxPool_0a_3x3')

branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope = 'Conv2d_0b_1x1')

net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

return net, end_points#返回计算出的结果和作为辅助分类的节点的结果

#定义Inception V3网络的全局平均池化,Softmax和Auxiliary Logits

def inception_v3(inputs, num_classes = 1000, is_training = True, dropout_keep_prob = 0.8,

prediction_fn = slim.softmax, spatial_squeeze = True, reuse = None, scope = 'InceptionV3'):

with tf.variable_scope(scope, 'InceptionV3', [inputs, num_classes], reuse = reuse) as scope:

with slim.arg_scope([slim.batch_norm, slim.dropout], is_training = is_training):

net, end_points = inception_v3_base(inputs, scope = scope)

#对辅助作用的节点进行卷积和池化操作,最后将变量存储到end_points中

with slim.arg_scope([slim.conv2d, slim.max_pool2d, slim.avg_pool2d], stride = 1, padding = 'SAME'):

aux_logits = end_points['Mixed_6e']

with tf.variable_scope('AuxLogits'):

aux_logits = slim.avg_pool2d(

aux_logits, [5, 5], stride = 3, padding = 'VALID', scope = 'AvgPool_1a_5x5')

aux_logits = slim.conv2d(

aux_logits, 128, [1, 1], scope = 'Conv2d_1b_1x1')

aux_logits = slim.conv2d(

aux_logits, 768, [5, 5], weights_initializer = trunc_normal(0.01),padding = 'VALID', scope = 'Conv2d_2a_5x5')

aux_logits = slim.conv2d(

aux_logits, num_classes, [1, 1], activation_fn = None,normalizer_fn = None, weights_initializer = trunc_normal(0.001), scope = 'Conv2d_2b_1x1')

if spatial_squeeze:

aux_logits = tf.squeeze(aux_logits, [1, 2], name = 'SpatialSqueeze')

end_points['AuxLogits'] = aux_logits

#对inception modules输出的net进行池化和dropout操作,最后存储到end_points中

with tf.variable_scope('Logits'):

net = slim.avg_pool2d(net, [8, 8], padding = 'VALID', scope = 'AvgPool_1a_8x8')

net = slim.dropout(net, keep_prob = dropout_keep_prob, scope = 'Dropout_1b')

end_points['PreLogits'] = net

logits = slim.conv2d(net, num_classes, [1, 1], activation_fn = None, normalizer_fn = None, scope = 'Conv2d_1c_1x1')

if spatial_squeeze:

logits = tf.squeeze(logits, [1, 2], name = 'SpatialSqueeze')

end_points['Predictions'] = prediction_fn(logits, scope = 'Predictions')

return logits, end_points

#测试性能定义的函数

def time_tensorflow_run(session, target, info_string):

num_steps_burn_in = 10

total_duration = 0.0

total_duration_squared = 0.0

for i in range(num_batches + num_steps_burn_in):

start_time = time.time()

_ = session.run(target)

duration = time.time() - start_time

if i >= num_steps_burn_in:

if not i % 10:

print('%s: step %d, duration = %.3f' %(datetime.now(), i - num_steps_burn_in, duration))

total_duration += duration

total_duration_squared += duration * duration

mn = total_duration / num_batches

vr = total_duration_squared / num_batches - mn * mn

sd = math.sqrt(vr)

print('%s: %s across %d steps, %.3f +/- %.3f sec / batch' %(datetime.now(), info_string, num_batches, mn, sd))

#对Inception V3进行运算性能测试

batch_size = 32

height, width = 299, 299

inputs = tf.random_uniform((batch_size, height, width, 3))

with slim.arg_scope(inception_v3_arg_scope()):

logits, end_points = inception_v3(inputs, is_training = False)

init = tf.global_variables_initializer()

sess = tf.Session()

sess.run(init)

num_batches = 100

time_tensorflow_run(sess, logits, "Forward")

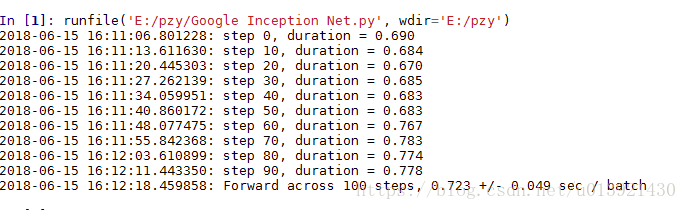

运行结果

总结

不难看出,Inception V3的结构真的很复杂,但是也确实是很优秀,是很值得学习的网络模型,其中有许多地方都值得借鉴。我们平时自己动手可能更多地是类似于VGGNet那样的模型,但是Inception V3这样的模型可以应用于更加复杂的场景。

参考书籍

《Tensorflow 实战》黄文坚等著;