这是依赖

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>1.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_2.11</artifactId>

<version>1.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_2.11</artifactId>

<version>1.5.0</version>

</dependency>

这个是1.5版本的依赖..

然后是我们的代码

public class FlinkWordCount {

publicstatic void main(String[] args) throws Exception {

final ExecutionEnvironment env =ExecutionEnvironment.getExecutionEnvironment();

DataSet<String> text = env.fromElements(

"hadoop hive?",

"think hadoop hive sqoop hbase spark flink?");

DataSet<Tuple2<String,Integer>> wordCounts = text

.flatMap(new LineSplitter())

.groupBy(0)

.sum(1);

wordCounts.print();

}

public static class LineSplitter implements FlatMapFunction<String,Tuple2<String, Integer>> {

@Override

public void flatMap(String line, Collector<Tuple2<String,Integer>> out) {

for (String word : line.split("\\W+")) {

out.collect(newTuple2<String, Integer>(word, 1));

}

}

}

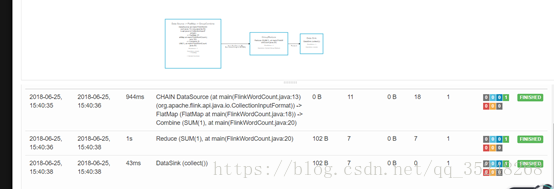

执行结果,,可以在节点根据命令提交,

也可以在UI界面提交jar.的包,,在submit的需要指定程序的主类