MapReduce Practice: A Hands-On WordCount Example

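The previous post gave a brief introduction to the ideas behind MapReduce, its pros and cons, its programming conventions, and so on. So, while it's still fresh, let's work through a hands-on MapReduce example to get a deeper feel for it!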

1. Requirement

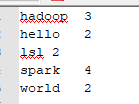

Count the total number of occurrences of each word in a given file and output the result.

Diagram:

2. Data preparation

```text
hello world
hadoop
spark
hello world
lsl
spark
hadoop
spark
hadoop
spark
lsl
```
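Before running the job, it helps to know the correct answer. The plain-Java sketch below (no Hadoop needed; the class name `ExpectedCounts` is only for illustration) counts the words of the sample data above with a `TreeMap`, so the job's output can later be checked against it.

```java
import java.util.Map;
import java.util.TreeMap;

public class ExpectedCounts {

    // The sample data from above, one array element per line
    static final String[] LINES = {
        "hello world", "hadoop", "spark", "hello world", "lsl",
        "spark", "hadoop", "spark", "hadoop", "spark", "lsl"
    };

    // Count each word; the TreeMap keeps the words sorted
    static Map<String, Integer> count(String[] lines) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String line : lines) {
            for (String word : line.split(" ")) {
                if (!word.isEmpty()) {
                    counts.merge(word, 1, Integer::sum);
                }
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        // Prints {hadoop=3, hello=2, lsl=2, spark=4, world=2}
        System.out.println(count(LINES));
    }
}
```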

3. Write the Mapper, Reducer, and Driver

3.1 Write the Mapper

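```java
package com.lsl.sumword;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

public class WordCountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

    // Reused output key, so a new Text is not allocated for every word
    private final Text keyOut = new Text();

    // Don't forget to pass 1 to the constructor! Every occurrence of a word counts as 1
    private final IntWritable valueOut = new IntWritable(1);

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // Debug output: key is the byte offset of the line, value is the line itself
        System.out.println("key====>" + key + ";value====>" + value);

        // 1. Get one line
        String line = value.toString();

        // 2. Split it into words
        String[] words = line.split(" ");

        // 3. Emit (word, 1) for every word
        for (String word : words) {
            // Skip empty tokens, e.g. from blank lines or repeated spaces
            if (word.isEmpty()) {
                continue;
            }
            keyOut.set(word);
            context.write(keyOut, valueOut);
        }
    }
}
```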
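To see what the Mapper emits without starting Hadoop, here is a plain-Java sketch of the same logic. The class `MapStepSketch` and method `mapLine` are hypothetical names for illustration: each line is split on spaces and every non-empty word becomes a `(word, 1)` pair, which is what `context.write(keyOut, valueOut)` produces in the real Mapper.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

public class MapStepSketch {

    // Mirrors WordCountMapper.map(): one (word, 1) pair per non-empty word
    static List<Map.Entry<String, Integer>> mapLine(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String word : line.split(" ")) {
            if (!word.isEmpty()) {
                out.add(new SimpleEntry<>(word, 1));
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // Prints [hello=1, world=1]
        System.out.println(mapLine("hello world"));
        // A blank line produces no pairs: prints []
        System.out.println(mapLine(""));
    }
}
```

The real Mapper reuses a single `Text` and a single `IntWritable` instead of creating new objects per word; this is safe because `context.write` serializes the pair immediately.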

3.2 Write the Reducer

```java
package com.lsl.sumword;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

public class WordCountReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

    // Reused output value
    private final IntWritable intWritable = new IntWritable();

    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
        // 1. Sum up all the 1s emitted for this word
        int sum = 0;
        for (IntWritable value : values) {
            sum += value.get();
        }

        // 2. Emit (word, total count)
        intWritable.set(sum);
        context.write(key, intWritable);
    }
}
```
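Between the Mapper and the Reducer, the framework sorts the map output by key and groups all values of the same key together (the shuffle), so `reduce()` is called once per word with all of that word's 1s. The plain-Java sketch below imitates this with a `TreeMap`; the names `ShuffleReduceSketch`, `shuffle`, and `reduce` are hypothetical and only illustrate the data flow, not how Hadoop implements it internally.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class ShuffleReduceSketch {

    // Group the values of each key; the TreeMap imitates the sort-by-key of the shuffle
    static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return grouped;
    }

    // Mirrors WordCountReducer.reduce(): add up all values of one key
    static int reduce(Iterable<Integer> values) {
        int sum = 0;
        for (int value : values) {
            sum += value;
        }
        return sum;
    }

    public static void main(String[] args) {
        // Map output for the lines "hello world", "hadoop", "hello world"
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String word : new String[] {"hello", "world", "hadoop", "hello", "world"}) {
            pairs.add(new SimpleEntry<>(word, 1));
        }
        Map<String, List<Integer>> grouped = shuffle(pairs);
        // Prints {hadoop=[1], hello=[1, 1], world=[1, 1]}
        System.out.println(grouped);
        for (Map.Entry<String, List<Integer>> e : grouped.entrySet()) {
            // One "word<TAB>total" line per word: hadoop 1, hello 2, world 2
            System.out.println(e.getKey() + "\t" + reduce(e.getValue()));
        }
    }
}
```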

3.3 Write the Driver

```java
package com.lsl.sumword;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

public class WordCountDriver {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        // 1. Get the Job instance
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf);

        FileSystem fs = FileSystem.get(conf);
        Path input = new Path("d:/input/dea.txt");
        Path output = new Path("d:/output");

        // Delete the output directory if it already exists; otherwise the job fails
        if (fs.exists(output)) {
            fs.delete(output, true);
        }

        // 2. Tell Hadoop which jar to use by locating the jar that contains this class
        job.setJarByClass(WordCountDriver.class);

        // 3. Set the Mapper and Reducer classes
        job.setMapperClass(WordCountMapper.class);
        job.setReducerClass(WordCountReducer.class);

        // 4. Set the Map output key/value types
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);

        // 5. Set the final (Reduce) output key/value types
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        // 6. Set the input and output paths
        FileInputFormat.setInputPaths(job, input);
        FileOutputFormat.setOutputPath(job, output);

        // 7. Submit the job and wait for it; true prints progress to the console
        boolean result = job.waitForCompletion(true);
        System.exit(result ? 0 : 1);
    }
}
```
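The `fs.exists(output)` / `fs.delete(output, true)` step is there because `FileOutputFormat` refuses to start a job whose output directory already exists (it throws a `FileAlreadyExistsException`), so re-running the job from the IDE would otherwise fail. For a purely local directory, the same recursive delete could be written with plain `java.nio.file`. This is only an illustrative sketch with a hypothetical `deleteRecursively` helper; the Driver itself does not need it.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

public class DeleteOutputSketch {

    // Local-file-system analogue of fs.delete(output, true): remove a directory and all its contents
    static void deleteRecursively(Path dir) throws IOException {
        if (!Files.exists(dir)) {
            return; // nothing to delete, like the fs.exists(output) check
        }
        List<Path> paths;
        try (Stream<Path> walk = Files.walk(dir)) {
            // Reverse order puts children before their parent directory
            paths = walk.sorted(Comparator.reverseOrder()).collect(Collectors.toList());
        }
        for (Path p : paths) {
            Files.delete(p);
        }
    }

    public static void main(String[] args) throws IOException {
        // Demo on a throwaway temp directory that looks like a finished job's output
        Path output = Files.createTempDirectory("wc-output");
        Files.write(output.resolve("part-r-00000"), "hello\t2\n".getBytes(StandardCharsets.UTF_8));
        deleteRecursively(output);
        // Prints "exists after delete: false"
        System.out.println("exists after delete: " + Files.exists(output));
    }
}
```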

4. Testing

4.1 Running locally

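1. First compile the Hadoop source code on your Windows machine, extract it to a path that contains no Chinese characters, and set the HADOOP_HOME environment variable on Windows (a compiled copy is available in the author's discussion group!).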

2. Run the program in IDEA.

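3. A common problem: no log output is printed.

Solution: create a new file named "log4j.properties" in the project's src directory (in a Maven project, src/main/resources, so that it ends up on the classpath) and add the following: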

```properties
log4j.rootLogger=INFO, stdout
log4j.appender.stdout=org.apache.log4j.ConsoleAppender
log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n
log4j.appender.logfile=org.apache.log4j.FileAppender
log4j.appender.logfile.File=target/spring.log
log4j.appender.logfile.layout=org.apache.log4j.PatternLayout
log4j.appender.logfile.layout.ConversionPattern=%d %p [%c] - %m%n
```

4. Results

4.2 Running on the cluster

1. Add the following build configuration to pom.xml

```xml
<build>
    <plugins>
        <plugin>
            <artifactId>maven-compiler-plugin</artifactId>
            <version>2.3.2</version>
            <configuration>
                <source>1.8</source>
                <target>1.8</target>
            </configuration>
        </plugin>
        <plugin>
            <artifactId>maven-assembly-plugin</artifactId>
            <configuration>
                <descriptorRefs>
                    <descriptorRef>jar-with-dependencies</descriptorRef>
                </descriptorRefs>
                <archive>
                    <manifest>
                        <!-- The Driver class of this project -->
                        <mainClass>com.lsl.sumword.WordCountDriver</mainClass>
                    </manifest>
                </archive>
            </configuration>
            <executions>
                <execution>
                    <id>make-assembly</id>
                    <phase>package</phase>
                    <goals>
                        <goal>single</goal>
                    </goals>
                </execution>
            </executions>
        </plugin>
    </plugins>
</build>
```

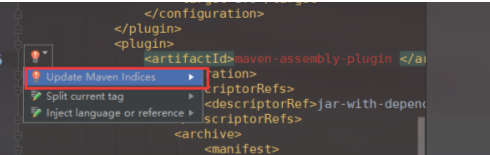

Then, in IDEA, click:

Or in Eclipse: right-click the project -> Maven -> Update Project.

2. Modify the code in WordCountDriver so that the input and output paths are taken from args[0] and args[1].

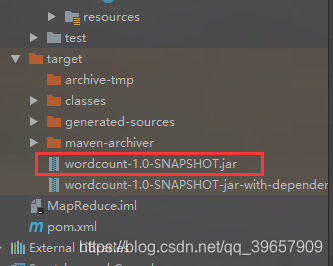
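Once the paths come from `args`, running the jar without both arguments would fail with an `ArrayIndexOutOfBoundsException`. A small guard at the top of `main` gives a clearer message. The sketch below is plain Java with a hypothetical `checkArgs` helper; in the real Driver the two strings would then be wrapped as `new Path(args[0])` and `new Path(args[1])`.

```java
public class DriverArgsSketch {

    // Hypothetical guard: returns {inputPath, outputPath} or throws with a usage message
    static String[] checkArgs(String[] args) {
        if (args == null || args.length < 2) {
            throw new IllegalArgumentException(
                "Usage: hadoop jar <jar> com.lsl.sumword.WordCountDriver <input path> <output path>");
        }
        return new String[] {args[0], args[1]};
    }

    public static void main(String[] args) {
        String[] paths = checkArgs(args);
        System.out.println("input=" + paths[0] + ", output=" + paths[1]);
    }
}
```

With the cluster command used in step 5, args[0] is /wordcount and args[1] is /output.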

3. Package the program as a jar and copy it to the Hadoop cluster.

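IDEA:

Eclipse:

Right-click -> Run As -> Maven install. When the build finishes, the jar is generated in the project's target folder. If you can't see it, right-click the project -> Refresh. Rename the jar without dependencies to wc.jar (or keep its default name, as in the command below) and copy it to the Hadoop cluster.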

4. Start the Hadoop cluster

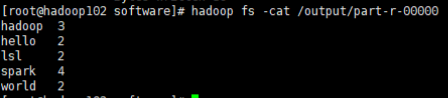

5. Run the WordCount program on the cluster (don't forget to upload the data to HDFS first! wordcount is my data file):

```shell
[lsl@hadoop102 software]$ hadoop jar wordcount-1.0-SNAPSHOT.jar com.lsl.sumword.WordCountDriver /wordcount /output
```

6. Results

Copyright notice: this blog records my own self-study notes; please credit the source when reposting!

https://me.csdn.net/qq_39657909