目录标题

Hadoop自带案例WordCount运行

MapReduce可以很好地应用于各种计算问题

关系代数运算(选择、投影、并、交、差、连接)

分组与聚合运算

矩阵-向量乘法

矩阵乘法

网页查看

浏览器默认端口

Resource Manager: http://hadoop1:8088

Web UI of the NameNode daemon: http://hadoop1:50070

HDFS NameNode web interface: http://hadoop1:8042

管理界面:http://localhost:8088

NameNode界面:http://localhost:50070

HDFS NameNode界面:

http://localhost:8042

输入:http://192.168.100.11:9000/cluster

但出现:It looks like you are making an HTTP request to a Hadoop IPC port. This is not the correct port for the web interface on this daemon.

未解决????

集群上jar包的位置

启动集群服务:./sbin/start-all.sh

进入到hadoop的文件下:[hadoop@master hadoop-2.7.7]$ ls

然后进入到 share/hadoop/mapreduce 下:(可以看到相应的jar包)

之后写的生成jar包我一般也是放在这里,比较集中好找,当然放在其他地方也可以

[hadoop@master hadoop-2.7.7]$ cd share/

[hadoop@master share]$ ls

doc hadoop

[hadoop@master share]$ cd hadoop/

[hadoop@master hadoop]$ ls

common hdfs httpfs kms mapreduce tools yarn

[hadoop@master hadoop]$ cd mapreduce/

[hadoop@master mapreduce]$ ls

hadoop-mapreduce-client-app-2.7.7.jar

hadoop-mapreduce-client-common-2.7.7.jar

hadoop-mapreduce-client-core-2.7.7.jar

hadoop-mapreduce-client-hs-2.7.7.jar

hadoop-mapreduce-client-hs-plugins-2.7.7.jar

hadoop-mapreduce-client-jobclient-2.7.7.jar

hadoop-mapreduce-client-jobclient-2.7.7-tests.jar

hadoop-mapreduce-client-shuffle-2.7.7.jar

hadoop-mapreduce-examples-2.7.7.jar

lib

lib-examples

sources

hadoop-mapreduce-examples-2.7.7.jar这个是我们例子的jar包,Wordcount,用于计算每个单词出现的次数。

程序的介绍

| 程序 | Wordcount |

|---|---|

| 输入 | 一个包含大量单词的文本文件 |

| 输出 | 文件中每个单词及其出现次数(频数),并按照单词字母顺序排序,每个单词和其频数占一行,单词和频数之间有间隔 |

我们先来试一下:

先创建两个txt文件,并在里面随意编写一些内容

创建:touch 1.txt 2.txt

然后编写:vi 1.txt vi 2.txt

[hadoop@master mapreduce]$ vi 1.txt

How are you

I am fine

What is your name

I am LinLi

[hadoop@master mapreduce]$ vi 2.txt

How are you

I am fine

What is your name

I am LiuTing

编写完之后放到hadoop中

先再hadoop中创建一个/data 文件夹(我一般一个项目创建一个目录放)

[hadoop@master mapreduce]$ hadoop fs -mkdir /data //创建一个data目录

[hadoop@master mapreduce]$ hadoop fs -lsr /

lsr: DEPRECATED: Please use ‘ls -R’ instead.

drwxr-xr-x - hadoop supergroup 0 2020-04-19 21:37 /data

drwxrwxrwx - hadoop supergroup 0 2020-04-18 21:52 /dt

drwxr-xr-x - hadoop supergroup 0 2020-04-19 00:34 /dt/tmp2

-rw-r–r-- 3 hadoop supergroup 0 2020-04-18 23:43 /dt/tmp2/2.txt

-rw-r–r-- 3 hadoop supergroup 0 2020-04-19 00:34 /dt/tmp2/test1.txt

drwxrwxrwx - hadoop supergroup 0 2020-03-22 05:39 /tmp

drwxrwxrwx - hadoop supergroup 0 2020-03-22 05:39 /tmp/hadoop-yarn

drwxrwxrwx - hadoop supergroup 0 2020-03-22 05:39 /tmp/hadoop-yarn/staging

drwxrwxrwx - hadoop supergroup 0 2020-03-22 05:39 /tmp/hadoop-yarn/staging/history

drwxrwxrwx - hadoop supergroup 0 2020-03-22 05:39 /tmp/hadoop-yarn/staging/history/done

drwxrwxrwt - hadoop supergroup 0 2020-03-22 05:39 /tmp/hadoop-yarn/staging/history/done_intermediate

[hadoop@master mapreduce]$ hadoop fs -put 1.txt 2.txt /data/

[hadoop@master mapreduce]$ hadoop fs -lsr /

lsr: DEPRECATED: Please use ‘ls -R’ instead.

drwxr-xr-x - hadoop supergroup 0 2020-04-19 21:38 /data

-rw-r–r-- 3 hadoop supergroup 51 2020-04-19 21:38 /data/1.txt

-rw-r–r-- 3 hadoop supergroup 53 2020-04-19 21:38 /data/2.txt

drwxrwxrwx - hadoop supergroup 0 2020-04-18 21:52 /dt

drwxr-xr-x - hadoop supergroup 0 2020-04-19 00:34 /dt/tmp2

-rw-r–r-- 3 hadoop supergroup 0 2020-04-18 23:43 /dt/tmp2/2.txt

-rw-r–r-- 3 hadoop supergroup 0 2020-04-19 00:34 /dt/tmp2/test1.txt

drwxrwxrwx - hadoop supergroup 0 2020-03-22 05:39 /tmp

drwxrwxrwx - hadoop supergroup 0 2020-03-22 05:39 /tmp/hadoop-yarn

drwxrwxrwx - hadoop supergroup 0 2020-03-22 05:39 /tmp/hadoop-yarn/staging

drwxrwxrwx - hadoop supergroup 0 2020-03-22 05:39 /tmp/hadoop-yarn/staging/history

drwxrwxrwx - hadoop supergroup 0 2020-03-22 05:39 /tmp/hadoop-yarn/staging/history/done

drwxrwxrwt - hadoop supergroup 0 2020-03-22 05:39 /tmp/hadoop-yarn/staging/history/done_intermediate

[hadoop@master mapreduce]$

然后敲命令执行

wordcount:主程序名 /data/:输入文件(内容),就是要处理的数据

/out-word:必须是一个不存在的文件

下面是执行的内容,你可忽略不看

[hadoop@master mapreduce]$ hadoop jar hadoop-mapreduce-examples-2.7.7.jar wordcount /data/ /out-word

20/04/19 21:40:59 INFO Configuration.deprecation: session.id is deprecated. Instead, use dfs.metrics.session-id

20/04/19 21:40:59 INFO jvm.JvmMetrics: Initializing JVM Metrics with processName=JobTracker, sessionId=

20/04/19 21:41:00 INFO input.FileInputFormat: Total input paths to process : 2 //文件

20/04/19 21:41:00 INFO mapreduce.JobSubmitter: number of splits:2 //分片

20/04/19 21:41:01 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_local453888137_0001

20/04/19 21:41:01 INFO mapreduce.Job: The url to track the job: http://localhost:8080/

20/04/19 21:41:01 INFO mapreduce.Job: Running job: job_local453888137_0001

20/04/19 21:41:01 INFO mapred.LocalJobRunner: OutputCommitter set in config null

20/04/19 21:41:01 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 1

20/04/19 21:41:01 INFO mapred.LocalJobRunner: OutputCommitter is org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter

20/04/19 21:41:02 INFO mapred.LocalJobRunner: Waiting for map tasks

20/04/19 21:41:02 INFO mapred.LocalJobRunner: Starting task: attempt_local453888137_0001_m_000000_0

20/04/19 21:41:02 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 1

20/04/19 21:41:02 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

20/04/19 21:41:02 INFO mapred.MapTask: Processing split: hdfs://192.168.100.11:9000/data/2.txt:0+53

20/04/19 21:41:02 INFO mapreduce.Job: Job job_local453888137_0001 running in uber mode : false

20/04/19 21:41:02 INFO mapreduce.Job: map 0% reduce 0%

20/04/19 21:41:03 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

20/04/19 21:41:03 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

20/04/19 21:41:03 INFO mapred.MapTask: soft limit at 83886080

20/04/19 21:41:03 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

20/04/19 21:41:03 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

20/04/19 21:41:03 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTaskKaTeX parse error: Double subscript at position 523: …53888137_0001_m_̲000000_0 is don…MapOutputBuffer

20/04/19 21:41:03 INFO mapred.LocalJobRunner:

20/04/19 21:41:03 INFO mapred.MapTask: Starting flush of map output

20/04/19 21:41:03 INFO mapred.MapTask: Spilling map output

20/04/19 21:41:03 INFO mapred.MapTask: bufstart = 0; bufend = 103; bufvoid = 104857600

20/04/19 21:41:03 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 26214348(104857392); length = 49/6553600

20/04/19 21:41:03 INFO mapred.MapTask: Finished spill 0

20/04/19 21:41:03 INFO mapred.Task: Task:attempt_local453888137_0001_m_000001_0 is done. And is in the process of committing

20/04/19 21:41:03 INFO mapred.LocalJobRunner: map

20/04/19 21:41:03 INFO mapred.Task: Task ‘attempt_local453888137_0001_m_000001_0’ done.

20/04/19 21:41:03 INFO mapred.Task: Final Counters for attempt_local453888137_0001_m_000001_0: Counters: 23

File System Counters

FILE: Number of bytes read=296546

FILE: Number of bytes written=599408

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=104

HDFS: Number of bytes written=0

HDFS: Number of read operations=7

HDFS: Number of large read operations=0

HDFS: Number of write operations=1

Map-Reduce Framework

Map input records=4

Map output records=13

Map output bytes=103

Map output materialized bytes=118

Input split bytes=102

Combine input records=13

Combine output records=11

Spilled Records=11

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=43

Total committed heap usage (bytes)=168366080

File Input Format Counters

Bytes Read=51

20/04/19 21:41:03 INFO mapred.LocalJobRunner: Finishing task: attempt_local453888137_0001_m_000001_0

20/04/19 21:41:03 INFO mapred.LocalJobRunner: map task executor complete.

20/04/19 21:41:03 INFO mapred.LocalJobRunner: Waiting for reduce tasks

20/04/19 21:41:03 INFO mapred.LocalJobRunner: Starting task: attempt_local453888137_0001_r_000000_0

20/04/19 21:41:03 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 1

20/04/19 21:41:03 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

20/04/19 21:41:03 INFO mapred.ReduceTask: Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@6b6f012d

20/04/19 21:41:03 INFO reduce.MergeManagerImpl: MergerManager: memoryLimit=363285696, maxSingleShuffleLimit=90821424, mergeThreshold=239768576, ioSortFactor=10, memToMemMergeOutputsThreshold=10

20/04/19 21:41:03 INFO reduce.EventFetcher: attempt_local453888137_0001_r_000000_0 Thread started: EventFetcher for fetching Map Completion Events

20/04/19 21:41:04 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local453888137_0001_m_000000_0 decomp: 116 len: 120 to MEMORY

20/04/19 21:41:04 INFO reduce.InMemoryMapOutput: Read 116 bytes from map-output for attempt_local453888137_0001_m_000000_0

20/04/19 21:41:04 WARN io.ReadaheadPool: Failed readahead on ifile

EBADF: Bad file descriptor

at org.apache.hadoop.io.nativeio.NativeIO

POSIX.posixFadviseIfPossible(NativeIO.java:267)

at org.apache.hadoop.io.nativeio.NativeIO

CacheManipulator.posixFadviseIfPossible(NativeIO.java:146)

at org.apache.hadoop.io.ReadaheadPool

Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

20/04/19 21:41:04 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 116, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->116

20/04/19 21:41:04 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local453888137_0001_m_000001_0 decomp: 114 len: 118 to MEMORY

20/04/19 21:41:04 INFO reduce.InMemoryMapOutput: Read 114 bytes from map-output for attempt_local453888137_0001_m_000001_0

20/04/19 21:41:04 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 114, inMemoryMapOutputs.size() -> 2, commitMemory -> 116, usedMemory ->230

20/04/19 21:41:04 INFO reduce.EventFetcher: EventFetcher is interrupted… Returning

20/04/19 21:41:04 WARN io.ReadaheadPool: Failed readahead on ifile

EBADF: Bad file descriptor

at org.apache.hadoop.io.nativeio.NativeIO

POSIX.posixFadviseIfPossible(NativeIO.java:267)

at org.apache.hadoop.io.nativeio.NativeIO

CacheManipulator.posixFadviseIfPossible(NativeIO.java:146)

at org.apache.hadoop.io.ReadaheadPool

Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

20/04/19 21:41:04 INFO mapred.LocalJobRunner: 2 / 2 copied.

20/04/19 21:41:04 INFO reduce.MergeManagerImpl: finalMerge called with 2 in-memory map-outputs and 0 on-disk map-outputs

20/04/19 21:41:04 INFO mapred.Merger: Merging 2 sorted segments

20/04/19 21:41:04 INFO mapred.Merger: Down to the last merge-pass, with 2 segments left of total size: 218 bytes

20/04/19 21:41:04 INFO reduce.MergeManagerImpl: Merged 2 segments, 230 bytes to disk to satisfy reduce memory limit

20/04/19 21:41:04 INFO reduce.MergeManagerImpl: Merging 1 files, 232 bytes from disk

20/04/19 21:41:04 INFO reduce.MergeManagerImpl: Merging 0 segments, 0 bytes from memory into reduce

20/04/19 21:41:04 INFO mapred.Merger: Merging 1 sorted segments

20/04/19 21:41:04 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 222 bytes

20/04/19 21:41:04 INFO mapred.LocalJobRunner: 2 / 2 copied.

20/04/19 21:41:04 INFO Configuration.deprecation: mapred.skip.on is deprecated. Instead, use mapreduce.job.skiprecords

20/04/19 21:41:05 INFO mapred.Task: Task:attempt_local453888137_0001_r_000000_0 is done. And is in the process of committing

20/04/19 21:41:05 INFO mapred.LocalJobRunner: 2 / 2 copied.

20/04/19 21:41:05 INFO mapred.Task: Task attempt_local453888137_0001_r_000000_0 is allowed to commit now

20/04/19 21:41:05 INFO output.FileOutputCommitter: Saved output of task ‘attempt_local453888137_0001_r_000000_0’ to hdfs://192.168.100.11:9000/out-word/_temporary/0/task_local453888137_0001_r_000000

20/04/19 21:41:05 INFO mapred.LocalJobRunner: reduce > reduce

20/04/19 21:41:05 INFO mapred.Task: Task ‘attempt_local453888137_0001_r_000000_0’ done.

20/04/19 21:41:05 INFO mapred.Task: Final Counters for attempt_local453888137_0001_r_000000_0: Counters: 29

File System Counters

FILE: Number of bytes read=297080

FILE: Number of bytes written=599640

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=104

HDFS: Number of bytes written=78

HDFS: Number of read operations=10

HDFS: Number of large read operations=0

HDFS: Number of write operations=3

Map-Reduce Framework

Combine input records=0

Combine output records=0

Reduce input groups=12

Reduce shuffle bytes=238

Reduce input records=22

Reduce output records=12

Spilled Records=22

Shuffled Maps =2

Failed Shuffles=0

Merged Map outputs=2

GC time elapsed (ms)=0

Total committed heap usage (bytes)=168366080

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Output Format Counters

Bytes Written=78

20/04/19 21:41:05 INFO mapred.LocalJobRunner: Finishing task: attempt_local453888137_0001_r_000000_0

20/04/19 21:41:05 INFO mapred.LocalJobRunner: reduce task executor complete.

20/04/19 21:41:05 INFO mapreduce.Job: map 100% reduce 100%

20/04/19 21:41:05 INFO mapreduce.Job: Job job_local453888137_0001 completed successfully

20/04/19 21:41:05 INFO mapreduce.Job: Counters: 35

File System Counters

FILE: Number of bytes read=889949

FILE: Number of bytes written=1798306

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=261

HDFS: Number of bytes written=78

HDFS: Number of read operations=22

HDFS: Number of large read operations=0

HDFS: Number of write operations=5

Map-Reduce Framework

Map input records=8

Map output records=26

Map output bytes=208

Map output materialized bytes=238

Input split bytes=204

Combine input records=26

Combine output records=22

Reduce input groups=12

Reduce shuffle bytes=238

Reduce input records=22

Reduce output records=12

Spilled Records=44

Shuffled Maps =2

Failed Shuffles=0

Merged Map outputs=2

GC time elapsed (ms)=98

Total committed heap usage (bytes)=457912320

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=104

File Output Format Counters

Bytes Written=78

[hadoop@master mapreduce]$

编译完之后可以在网页中查看

然后可以在Hadoop中查看

输入文件夹/out-word中有两个文件,在 /out-word/part-r-00000中可以看到结果

查看结果

[hadoop@master mapreduce]$ hadoop fs -lsr /out-word

lsr: DEPRECATED: Please use 'ls -R' instead.

-rw-r--r-- 3 hadoop supergroup 0 2020-04-19 21:41 /out-word/_SUCCESS

-rw-r--r-- 3 hadoop supergroup 78 2020-04-19 21:41 /out-word/part-r-00000

[hadoop@master mapreduce]$ hadoop fs -cat /out-word/part-r-00000

How 2

I 4

LinLi 1

LiuTing 1

What 2

am 4

are 2

fine 2

is 2

name 2

you 2

your 2

[hadoop@master mapreduce]$

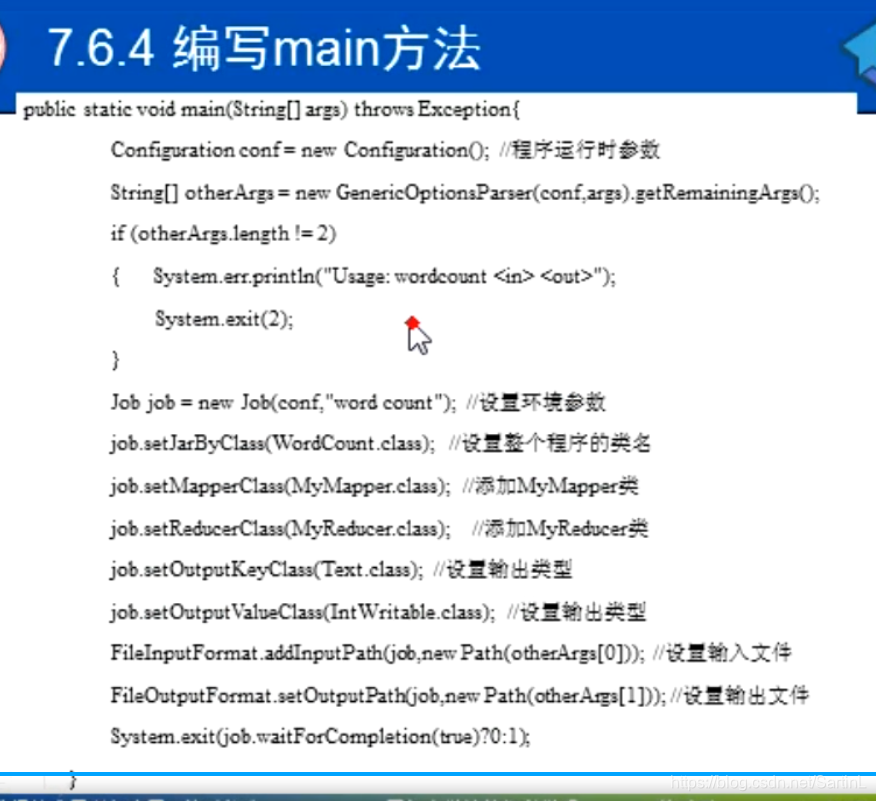

自己编写WordCount的project(MapReduce)

我还是按照上述例子的程序去编写

Map输入类型为<key,value>

期望的Map输出类型为<单词,出现次数>

Map输入类型最终确定为<Object ,Text>

Map输出类型最终确定为<Text, IntWritable>

编写好的文件WordCount.java,然后整个项目导出成一个jar包

package com.hadoop.MapReduce;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.util.GenericOptionsParser;

public class WordCount {

//Map String ---- Text

//泛型 在Map里面重写它的方法

//KEYIN,VALUEIN,KEYOUT,VALUEOUT

//keyin

//valuein "hello world"

//keyout "hello 1"

//valueout 1

public static class MyMapper extends Mapper<Object, Text, Text, IntWritable>

{

@Override

protected void map(Object key, Text value, Mapper<Object, Text, Text, IntWritable>.Context context)

throws IOException, InterruptedException {

// TODO Auto-generated method stub

String vals = value.toString(); //获取一行

String keys [] = vals.split(" "); //然后分割

for(String ks : keys)

{

context.write(new Text(ks), new IntWritable(1));

}

}

}

//Reduce

public static class MyReducer extends Reducer<Text, IntWritable, Text, IntWritable>

{

@Override

protected void reduce(Text key, Iterable<IntWritable> values,

Reducer<Text, IntWritable, Text, IntWritable>.Context context) throws IOException, InterruptedException {

// TODO Auto-generated method stub

int sum = 0;

for(IntWritable val : values)

{

sum += val.get();

}

context.write(key, new IntWritable(sum));

}

}

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException

{

// TODO Auto-generated method stub

if(args.length<2)

{

System.out.println("the arguments are adfadf");

System.exit(0);

}

Configuration conf = new Configuration();

String []arg = new GenericOptionsParser(conf, args).getRemainingArgs();

@SuppressWarnings("deprecation")

Job job = new Job(conf, "hadoop");

job.setJarByClass(WordCount.class); //设置整个程序的类名

job.setMapperClass(MyMapper.class); //添加 Mapper类

job.setReducerClass(MyReducer.class); //添加Reducer类

job.setOutputKeyClass(Text.class); //设置输出类型

job.setOutputValueClass(IntWritable.class);

FileInputFormat.addInputPath(job, new Path(args[0])); //设置输入文件

FileOutputFormat.setOutputPath(job, new Path(arg[1])); //设置输出文件

System.exit(job.waitForCompletion(true)?0:1);

}

}

把jar包放进集群的MapReduce里,用 rz 这个命令(这个jar包的位置可以随意放)

[hadoop@master mapreduce]$ rz

rz waiting to receive.

¿ªÊ¼ zmodem ´«Êä¡£ °´ Ctrl+C È¡Ïû¡£

100% 7 KB 7 KB/s 00:00:01 0 Errors

[hadoop@master mapreduce]$ ls

1.txt

2.txt

hadoop-mapreduce-client-app-2.7.7.jar

hadoop-mapreduce-client-common-2.7.7.jar

hadoop-mapreduce-client-core-2.7.7.jar

hadoop-mapreduce-client-hs-2.7.7.jar

hadoop-mapreduce-client-hs-plugins-2.7.7.jar

hadoop-mapreduce-client-jobclient-2.7.7.jar

hadoop-mapreduce-client-jobclient-2.7.7-tests.jar

hadoop-mapreduce-client-shuffle-2.7.7.jar

hadoop-mapreduce-examples-2.7.7.jar

lib

lib-examples

sources

WordCount.jar

[hadoop@master mapreduce]$

然后就可运行试试

然后就可运行试试

[hadoop@master mapreduce]$ hadoop jar WordCount.jar /data/ /out-jar

查看结果

[hadoop@master mapreduce]$ hadoop fs -lsr /out-jar

lsr: DEPRECATED: Please use 'ls -R' instead.

-rw-r--r-- 3 hadoop supergroup 0 2020-04-19 23:45 /out-jar/_SUCCESS

-rw-r--r-- 3 hadoop supergroup 78 2020-04-19 23:45 /out-jar/part-r-00000

[hadoop@master mapreduce]$ hadoop fs -cat /out-jar/part-r-00000

How 2

I 4

LinLi 1

LiuTing 1

What 2

am 4

are 2

fine 2

is 2

name 2

you 2

your 2

[hadoop@master mapreduce]$

编译成功!!