数据来源有三张表:

user_visit_action,描述用户点击信息,存储在hive中

模拟数据如下:有效信息date,点击时间,city_id城市,order_product_id用户点击商品的id

date,user_id,session_id,page_id,action,city_id,search_keywords,order_product_id

2018/5/1,,,,,010,,1011

2018/5/1,,,,,010,,1011

2018/5/1,,,,,010,,1011

2018/5/1,,,,,010,,1011

2018/5/1,,,,,011,,1011

2018/5/1,,,,,011,,1011

2018/5/2,,,,,011,,

2018/5/2,,,,,011,,null

2018/5/2,,,,,011,,1022

2018/5/2,,,,,011,,

2018/5/2,,,,,010,,1033

2018/5/2,,,,,010,,1034

2018/5/2,,,,,012,,1035city_info,描述城市信息,存储在MySQL中

数据描述:城市id,城市名称,所属区域

city_id,city_name,area

010,beijing,huazhong

011,tianjin,huazhong

012,hebei,huazhong

020,shanghai,huanan

021,nanjing,huanan

022,shandong,huanan

030,haerbin,dongbei

031,jilin,dongbei

032,shenyang,dongbeiroducet_info,描述商品信息,存储在hive中

数据描述,商品id,商品名称,扩展信息

product_id,product_name,extends_info

1011,aa,{"product_status":0,"price":100}

1022,bb,{"product_status":1,"price":101}

1033,cc,{"product_status":0,"price":102}

1034,dd,{"product_status":1,"price":103}

1035,ee,{"product_status":0,"price":104}

1036,ff,{"product_status":1,"price":105}

1037,gg,{"product_status":0,"price":106}

1038,hh,{"product_status":0,"price":107}

1039,ii,{"product_status":1,"price":108}

1040,jj,{"product_status":0,"price":109}

1041,kk,{"product_status":1,"price":110}

1042,ll,{"product_status":0,"price":111}案例需求:求出不同区域的热点商品,求top3

额外要求:增加字段描述商品对应所在区域的城市名称,增加商品来源(product_status)

主体代码实现

package day6.test1.day9

import java.util.Properties

import org.apache.spark.rdd.RDD

import org.apache.spark.sql.{Row, SaveMode}

import org.apache.spark.sql.hive.HiveContext

import org.apache.spark.{SparkConf, SparkContext}

object HotGoodsMT{

def main(args: Array[String]): Unit = {

/**

* 第一步 创建程序入口

*/

val conf = new SparkConf().setMaster("local")

.setAppName(s"${this.getClass.getSimpleName}")

val sc = new SparkContext(conf)

val sqlContext = new HiveContext(sc)

//自定义的聚合函数

// sqlContext.udf.register("group_dinstict_func",GroupDinstictUDAF)

sqlContext.udf.register("get_product_status",(str:String) =>{//json

var status=0;

for( s <- str.split(",")){

if(s.contains("product_status")){

status=s.split(":")(1).toInt

}

}

if (status == 0)"自营" else "第三方"

})

/**

* 第二步 接收外面传进来的参数

* 起始时间 startTime

* 结束时间 endTime

*/

val Array(startTime,endTime)=Array("2018/5/1","2018/5/4")//args

/**

* 第三步:

* 根据时间去Hive表里面获取用户行为的数据

* 注册成为临时信息表cityid2Action

*/

val cityid2Actiontable = "cityid2Action"

val cityid2Action

= getActionDataByRange(startTime,endTime,sqlContext,cityid2Actiontable)

/**

* 【接下来我做的这些事,不一定是最好的选择】,大家可以按照自己思路做。

* 第四步:

* 获取城市信息表(mysql)

* 注册为临时信息表cityid2City

*/

val cityid2Citytable = "cityid2City"

val mysql_tablename = "city_info"

val cityid2City = getCityInfo(sqlContext,cityid2Citytable,mysql_tablename)

/**

* 第五步:

* 第三步和第四步的表进行 join (cityid) -> table

* cityid2Action

* cityid2City

*/

val area2ActionTable = "areaaction"

generateTempTable(sqlContext,cityid2Actiontable,cityid2Citytable,area2ActionTable)

/**

* 第六步:

* 统计商品点击的次数

* 增加需求,将商品在不同区域的城市合并,添加为新字段

*/

//注册自定义的函数

sqlContext.udf.register("combaincity",MyUDAT_cityCombain)

val area2citynameactiontable = "areacombaincityname"

productClickCountByArea(sqlContext,area2citynameactiontable)

/**

* 第七步:

* 分组求TopN

* 新增分级字段

*/

val topNtable = "topN"

topN(sqlContext,topNtable)

val leveltable = "topNlevel"

levelTopN(sqlContext,leveltable)

/**

* 第八步:

* 上一步的结果 跟 商品信息表进行join

*/

genarateFullTable(sqlContext)

/**

* 第九步:

* 把最后的结果写入mysql数据库

*/

// writeresult(sqlContext)

}

def getActionDataByRange(startTime: String, endTime: String

, sqlContext: HiveContext,table_name:String) = {

val sql =

s"""

select

city_id,order_product_id

from

myhive.user_visit_action

where

order_product_id !=''

and

order_product_id != 'null'

and

order_product_id != 'NULL'

and

datee >= '${startTime}'

and

datee <= '${endTime}'

"""

val df = sqlContext.sql(sql)

df.createOrReplaceTempView(table_name)

}

//---------------------

def getCityInfo(sqlContext: HiveContext,tablename:String,mysqltable:String) = {

val url = "jdbc:mysql://hadoop02:3306/test"

val df = sqlContext.read.format("jdbc")

.option("url", url)

.option("dbtable", mysqltable)

.option("user", "root")

.option("password", "root")

.load()

df.createOrReplaceTempView(tablename)

}

def generateTempTable(sqlContext: HiveContext, cityid2Actiontable: String

, cityid2Citytable: String,area2action:String): Unit = {

val sql =

s"""

select

b.city_id,b.order_product_id,a.area,a.city_name

from cityid2City a

left join

cityid2Action b

on

a.city_id = b.city_id

"""

val df = sqlContext.sql(sql)

df.createOrReplaceTempView(area2action)

}

def productClickCountByArea(sqlContext: HiveContext

, area2citynameactiontable: String): Unit = {

val sql=

"""

select area,order_product_id,count(*)as clicknum,combaincity(city_name)as city_name

from areaaction

group by area,order_product_id

"""

val df = sqlContext.sql(sql)

df.createOrReplaceTempView(area2citynameactiontable)

}

def topN(sqlContext: HiveContext,topNtable:String): Unit = {

val sql =

"""

select area,order_product_id,city_name,clicknum

from

(select

area,order_product_id,city_name,clicknum,

row_number() over (partition by area sort by clicknum desc) as rank

from

areacombaincityname)a

where a.rank <= 3

"""

val df = sqlContext.sql(sql)

df.createOrReplaceTempView(topNtable)

}

def levelTopN(sqlContext: HiveContext, leveltable: String): Unit = {

val sql=

"""

select area,

case when area="huazhong" then "A"

when area="huanan"then "B"

when area="dongbei"then "C"

else "D"

end as level,order_product_id,city_name,clicknum

from topN

"""

val df = sqlContext.sql(sql)

df.createOrReplaceTempView(leveltable)

}

def genarateFullTable(sqlContext: HiveContext): Unit = {

val sql =

"""

select area,level,order_product_id,city_name

,clicknum,product_name,get_product_status(extends_info)

from topNlevel a,myhive.product_info b

where a.order_product_id = b.product_id

"""

val df = sqlContext.sql(sql)

df.createOrReplaceTempView("result")

val url = "jdbc:mysql://hadoop02:3306/test"

val table = "resultFull"

val properties = new Properties()

properties.put("user","root")

properties.put("password","root")

df.write.mode(SaveMode.Overwrite).jdbc(url,table,properties)

}

}求区域商品对应的城市信息需要使用自定义udaf 函数

package day6.test1.day9

import org.apache.spark.sql.Row

import org.apache.spark.sql.expressions.{MutableAggregationBuffer, UserDefinedAggregateFunction}

import org.apache.spark.sql.types.{DataType, StringType, StructField, StructType}

object MyUDAT_cityCombain extends UserDefinedAggregateFunction{

override def inputSchema: StructType = StructType(

StructField("city_info",StringType,true)::Nil

)

override def bufferSchema: StructType = StructType(

StructField("city_info",StringType,true)::Nil

)

override def dataType: DataType = StringType

override def deterministic: Boolean = true

override def initialize(buffer: MutableAggregationBuffer): Unit = {

buffer.update(0,"")

}

override def update(buffer: MutableAggregationBuffer, input: Row): Unit = {

var last_str = buffer.getString(0)

val current_str = input.getString(0)

if(!last_str.contains(current_str)){

if (last_str.equals("")){

last_str = current_str

}else{

last_str += "," + current_str

}

}

buffer.update(0,last_str)

}

override def merge(buffer1: MutableAggregationBuffer, buffer2: Row): Unit = {

var b1 = buffer1.getString(0)

val b2 = buffer2.getString(0)

for(s <- b2.split(",")){

if (!b1.contains(s)){

if (b1.equals("")){

b1 = s

}else{

b1 += "," + s

}

}

}

buffer1.update(0,b1)

}

override def evaluate(buffer: Row): Any = {

buffer.getString(0)

}

}

-----------------------------------------------------------------------------------------------------------

程序运行,提前将表存储到对应的数据库中

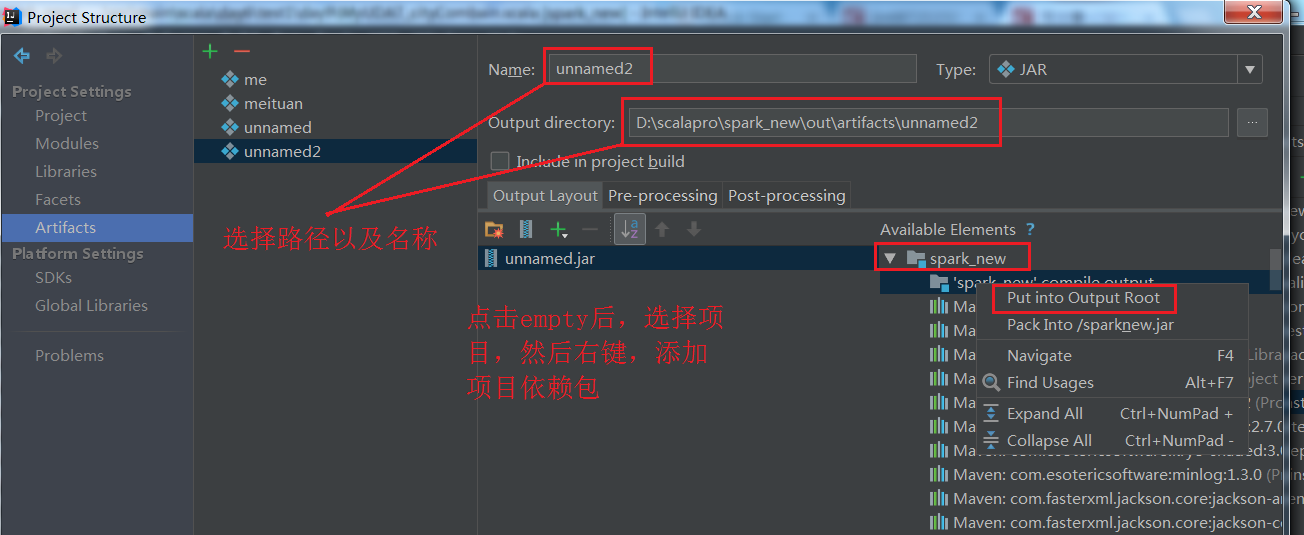

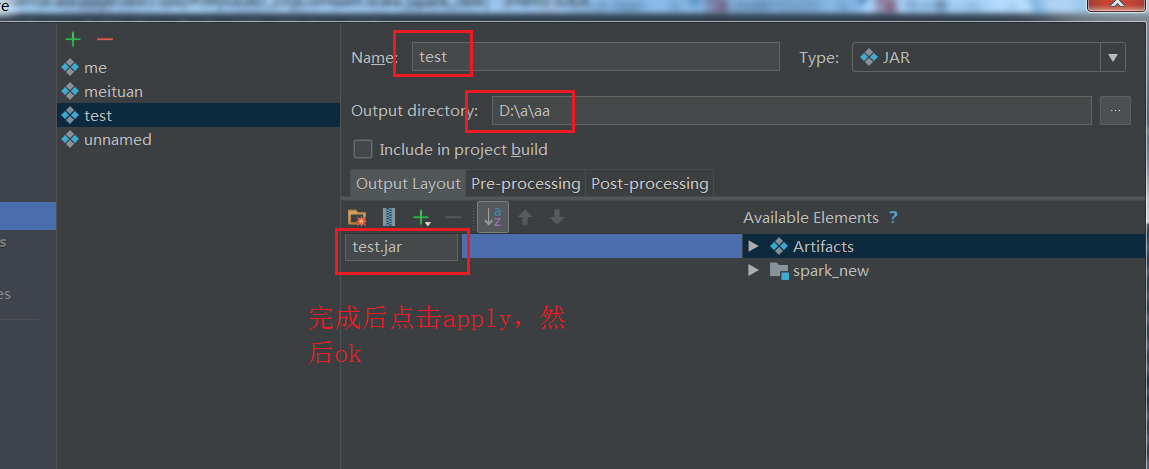

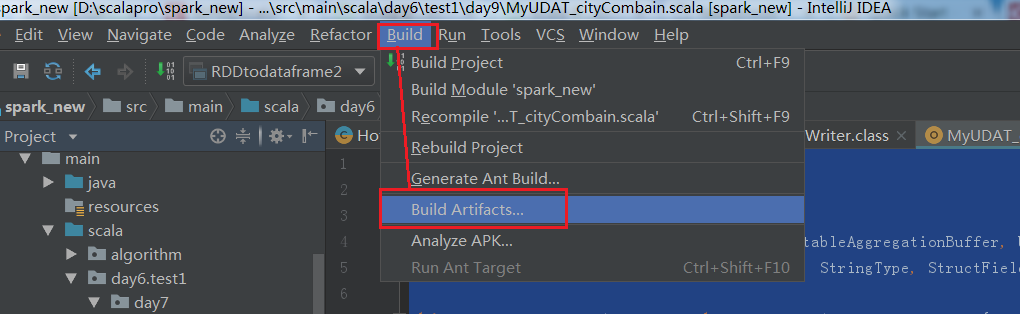

将程序打包,详细图解过程如下:

-------------------------------------------------------

将打包好的jar包上传到spark集群,使用命令运行

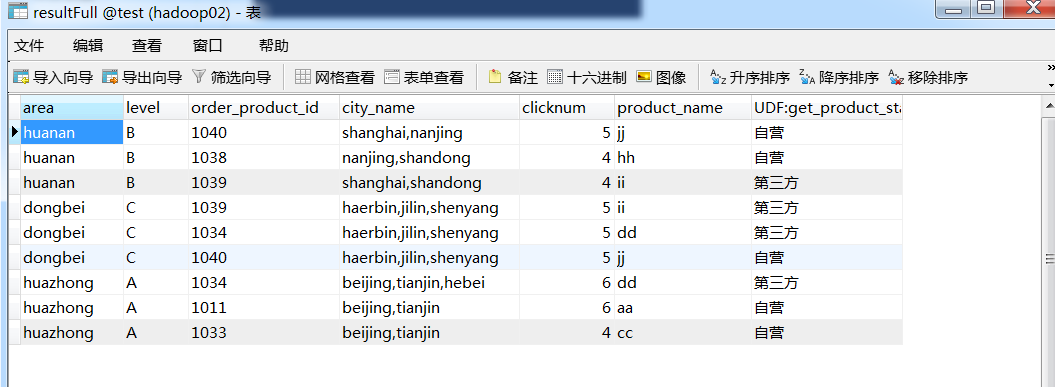

spark-submit --class day6.test1.day9.HotGoodsMT --master local[4] meituan.jar 最终结果保存在MySQL中,结果如下: