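d:

Switch to drive D.

scrapy startproject douban

Create the douban project.

cd douban

Enter the project directory.

scrapy genspider douban_spider movie.douban.com

Create the spider (named douban_spider, restricted to the movie.douban.com domain).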

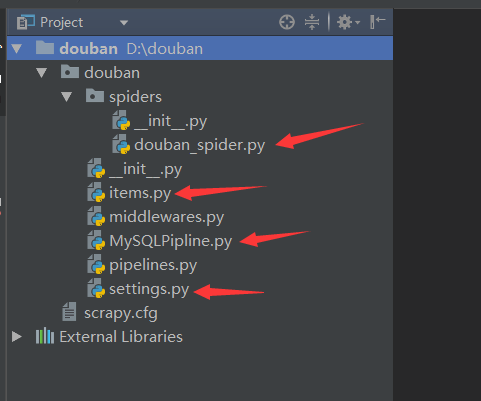

Edit items.py:

# -*- coding: utf-8 -*-
# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class DoubanItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    serial_number = scrapy.Field()  # rank (serial number)
    movie_name = scrapy.Field()  # movie title
    introduce = scrapy.Field()  # movie introduction (director, cast, year, genre)
    star = scrapy.Field()  # rating
    evaluate = scrapy.Field()  # number of reviews
    depict = scrapy.Field()  # one-line description (quote) of the movie
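A Scrapy `Item` behaves like a dict that only accepts the fields declared on the class. Assigning a field that is not declared raises `KeyError`, and so does reading a declared field that was never set. The sketch below illustrates this with plain Python, so it can be tried without Scrapy. `FieldDict` and `DoubanRecord` are hypothetical names, and this is not Scrapy's implementation.

```python
class FieldDict(dict):
    """Hypothetical stand-in for scrapy.Item: a dict restricted to declared field names.

    Only item[key] = value assignments are checked; dict.update() and the
    constructor bypass the check in this simplified sketch.
    """

    fields = ()  # subclasses list their allowed field names here

    def __setitem__(self, key, value):
        if key not in self.fields:
            raise KeyError(f"{type(self).__name__} does not support field: {key}")
        super().__setitem__(key, value)


class DoubanRecord(FieldDict):
    # same field names as DoubanItem in items.py
    fields = ("serial_number", "movie_name", "introduce", "star", "evaluate", "depict")


record = DoubanRecord()
record["movie_name"] = "example title"  # declared field: accepted
try:
    record["director"] = "someone"  # undeclared field: rejected with KeyError
except KeyError as exc:
    print("rejected:", exc)
try:
    record["depict"]  # declared but never set: reading it also raises KeyError
except KeyError:
    print("depict is not set yet")
```

This is why the spider should set every field it declares, even to `None`. The pipeline below reads all six fields with `item[...]`.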

编辑douban_spider.py:

# -*- coding: utf-8 -*-

import scrapy

from douban.items import DoubanItem

class DoubanSpiderSpider(scrapy.Spider):

name = 'douban_spider'

# 爬虫的名字

allowed_domains = ['movie.douban.com']

# 允许的域名

start_urls = ['https://movie.douban.com/top250']

# 引擎入口url,扔到调度器里面去

def parse(self, response):

# 默认的解析方法

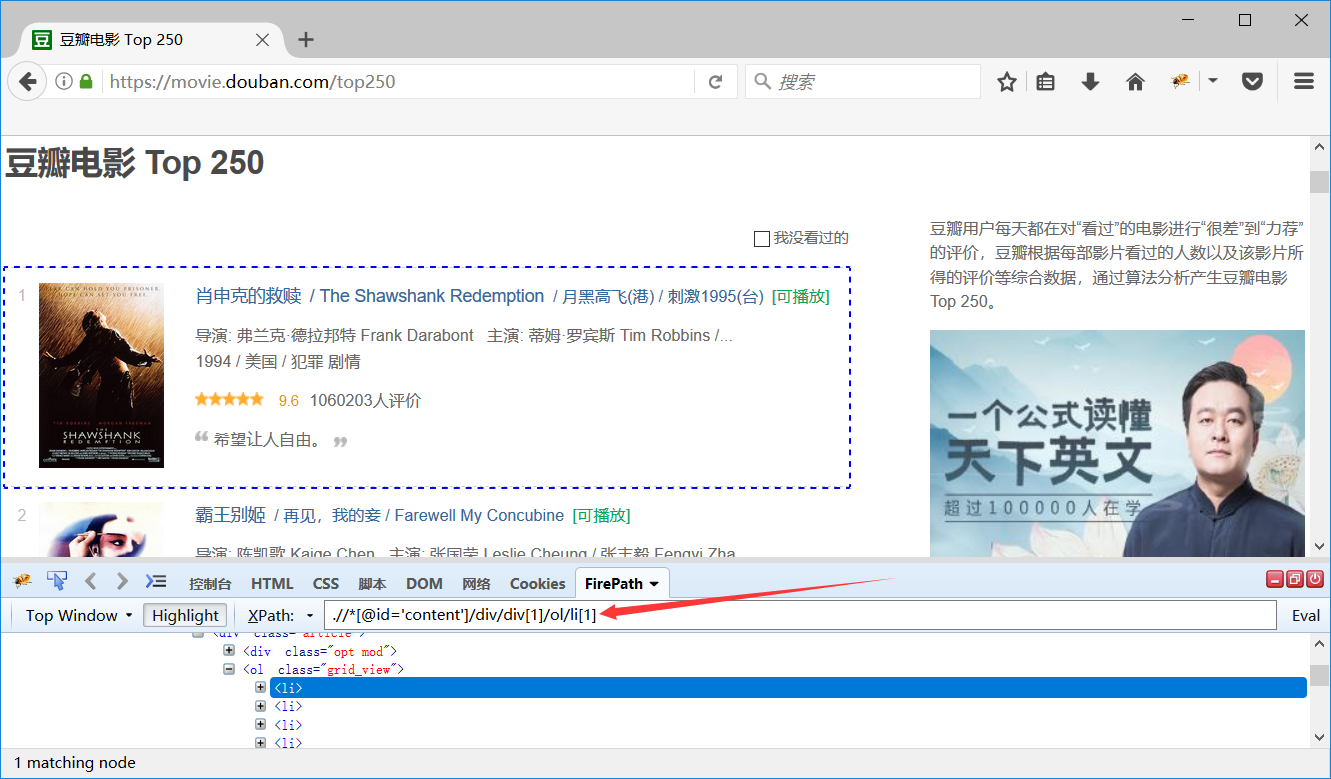

movie_list = response.xpath(".//*[@id='content']/div/div[1]/ol/li")

# 第一页展示的电影的列表

for i_item in movie_list:

# 循环电影的条目

douban_item = DoubanItem()

# 把items.py文件导进来

douban_item["serial_number"] = i_item.xpath(".//div/div[1]/em/text()").extract_first()

douban_item["movie_name"] = i_item.xpath(".//div/div[2]/div[1]/a/span[1]/text()").extract_first()

content = i_item.xpath(".//div/div[2]/div[2]/p[1]/text()").extract()

for i_content in content:

# 处理电影的介绍中的换行的数据

content_s = "".join(i_content.split())

douban_item["introduce"] = content_s

douban_item["star"] = i_item.xpath(".//div/div[2]/div[2]/div/span[2]/text()").extract_first()

douban_item["evaluate"] = i_item.xpath(".//div/div[2]/div[2]/div/span[4]/text()").extract_first()

douban_item["depict"] = i_item.xpath(".//div/div[2]/div[2]/p[2]/span/text()").extract_first()

yield douban_item

# 需要把数据yield到pipelines里面去

next_link = response.xpath(".//*[@id='content']/div/div[1]/div[2]/span[3]/a/@href").extract()

# 解析下一页,取后页的xpath

if next_link:

next_link = next_link[0]

yield scrapy.Request("https://movie.douban.com/top250" + next_link, callback=self.parse)
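Two steps in `parse()` can be tried without Scrapy. The first is cleaning the introduction's text nodes: remove whitespace inside each node and keep the non-empty ones. The second is turning the relative "next page" href into an absolute URL. The sketch uses made-up sample strings shaped like those text nodes (not real Douban data) and `urllib.parse.urljoin`, the standard-library function that Scrapy's `response.urljoin` builds on. `clean_introduce` is a hypothetical helper name.

```python
from urllib.parse import urljoin


def clean_introduce(text_nodes):
    """Strip all whitespace inside each text node and join the non-empty ones with a space."""
    lines = ["".join(node.split()) for node in text_nodes]
    return " ".join(line for line in lines if line)


# Hypothetical text nodes with the kind of line breaks and padding found in the page
sample_nodes = [
    "\n                            Director: A   Starring: B\n",
    "\n                            1994 / USA / Drama\n                        ",
]
print(clean_introduce(sample_nodes))  # -> Director:AStarring:B 1994/USA/Drama

# A query-only href keeps the current path and replaces only the query string
current_url = "https://movie.douban.com/top250?start=0&filter="
print(urljoin(current_url, "?start=25&filter="))
# -> https://movie.douban.com/top250?start=25&filter=
```

Removing every whitespace character also glues neighbouring words together (`Director:AStarring:B`). Using `" ".join(node.split())` per node would keep single spaces instead, if that reads better in the database.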

Create MySQLPipline.py in the douban package (the same directory as settings.py):

from pymysql import connect

from douban import settings


class MySQLPipeline(object):
    def __init__(self):
        # connect to the database with the values defined at the end of settings.py
        self.connect = connect(
            host=settings.host,
            port=settings.port,
            database=settings.db,
            user=settings.user,
            password=settings.passwd,
            charset='utf8',
            use_unicode=True)
        # get a cursor for executing statements
        self.cursor = self.connect.cursor()

    def process_item(self, item, spider):
        # execute the SQL; the item fields map one-to-one to the table columns,
        # and the %s placeholders let PyMySQL escape the values
        self.cursor.execute(
            """insert into douban(serial_number, movie_name, introduce, star, evaluate, depict)
            values (%s, %s, %s, %s, %s, %s)""",
            (item['serial_number'],
             item['movie_name'],
             item['introduce'],
             item['star'],
             item['evaluate'],
             item['depict']))
        self.connect.commit()  # commit the insert
        return item  # return the item so any later pipeline receives it

    def close_spider(self, spider):
        self.cursor.close()  # close the cursor
        self.connect.close()  # close the database connection
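MySQLPipeline follows a fixed cycle: connect, get a cursor, run a parameterized insert per item, commit, and close. To try that cycle without a MySQL server, the sketch below swaps in Python's built-in `sqlite3` with an in-memory database. This is a substitution for illustration only: sqlite3 uses `?` placeholders where PyMySQL uses `%s`, and `SqlitePipeline` and `FIELDS` are hypothetical names, not part of the project. Building the column list and placeholders from one tuple of field names is one way to keep the item fields and table columns in step.

```python
import sqlite3

# Column names matching the DoubanItem fields
FIELDS = ("serial_number", "movie_name", "introduce", "star", "evaluate", "depict")


class SqlitePipeline:
    """Hypothetical sqlite3 stand-in for MySQLPipeline, for local experiments only."""

    def __init__(self, path=":memory:"):
        self.connect = sqlite3.connect(path)
        self.cursor = self.connect.cursor()
        columns = ", ".join(f"{name} TEXT" for name in FIELDS)
        self.cursor.execute(
            f"CREATE TABLE IF NOT EXISTS douban (id INTEGER PRIMARY KEY AUTOINCREMENT, {columns})"
        )

    def process_item(self, item, spider=None):
        columns = ", ".join(FIELDS)
        placeholders = ", ".join("?" for _ in FIELDS)  # sqlite3 placeholder style
        sql = f"INSERT INTO douban ({columns}) VALUES ({placeholders})"
        # values are passed separately from the SQL text, so the driver escapes them
        self.cursor.execute(sql, tuple(item[name] for name in FIELDS))
        self.connect.commit()
        return item

    def close_spider(self, spider=None):
        self.cursor.close()
        self.connect.close()


pipeline = SqlitePipeline()
pipeline.process_item({
    "serial_number": "1", "movie_name": "example", "introduce": "intro",
    "star": "9.0", "evaluate": "100 ratings", "depict": None,
})
print(pipeline.cursor.execute("SELECT COUNT(*) FROM douban").fetchone())
pipeline.close_spider()
```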

Modify the settings.py configuration file:

Change line 19 to:

# Crawl responsibly by identifying yourself (and your website) on the user-agent
USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:52.0) Gecko/20100101 Firefox/52.0'
# send a browser User-Agent instead of Scrapy's default one

Change line 69 to:

ITEM_PIPELINES = {
    'douban.MySQLPipline.MySQLPipeline': 300,
}
# enable the MySQL pipeline
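The number 300 is the pipeline's order value. According to the Scrapy documentation, items pass through the enabled pipelines from lower to higher values, which are customarily chosen in the 0-1000 range. The sketch below only illustrates that ordering with a plain dict. The two extra pipeline paths are hypothetical, and `pipeline_order` is an illustrative helper, not a Scrapy function.

```python
# Hypothetical ITEM_PIPELINES-style mapping: dotted path -> order value
ITEM_PIPELINES = {
    "douban.MySQLPipline.MySQLPipeline": 300,
    "douban.pipelines.CleanupPipeline": 100,  # hypothetical
    "douban.pipelines.ExportPipeline": 800,  # hypothetical
}


def pipeline_order(config):
    """Return pipeline paths in the order items would pass through them (lowest value first)."""
    return [path for path, _ in sorted(config.items(), key=lambda kv: kv[1])]


for path in pipeline_order(ITEM_PIPELINES):
    print(path)
```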

Append the MySQL connection settings to the end of the file:

host = '192.168.1.23'  # database host
port = 3306  # database port
db = 'scrapy'  # database name
user = 'root'  # database user
passwd = 'Abcdef@123456'  # database password

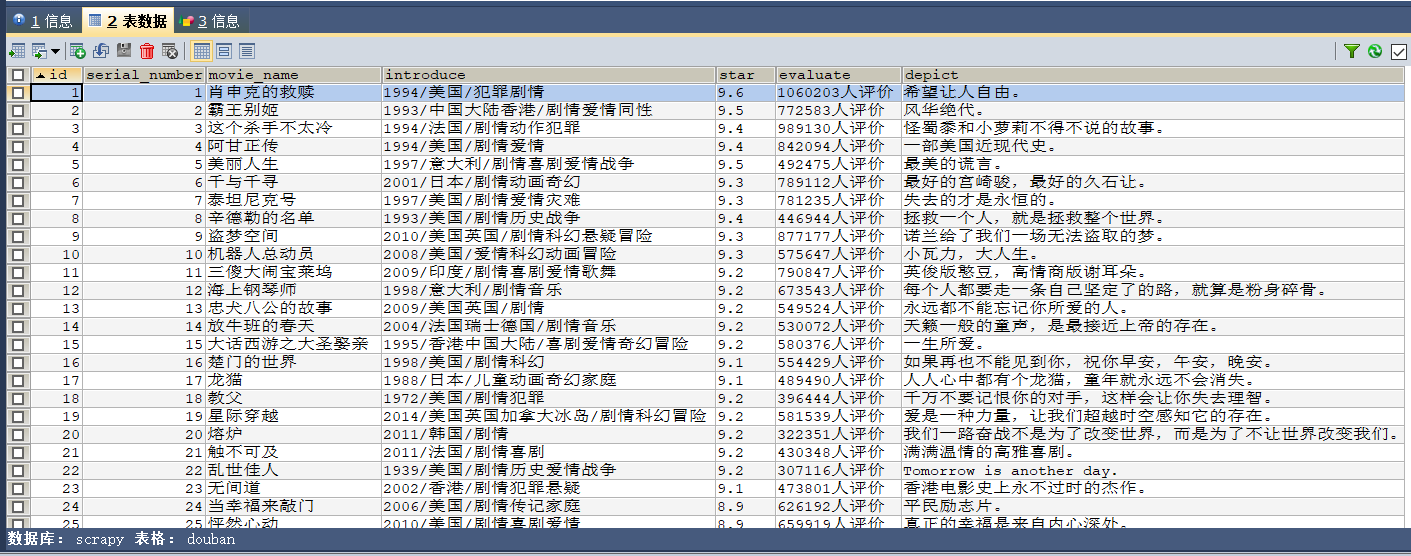

CREATE DATABASE scrapy;

Create the database.

USE scrapy;
CREATE TABLE `douban` (
  `id` INT(11) NOT NULL AUTO_INCREMENT,
  `serial_number` INT(11) DEFAULT NULL COMMENT 'rank (serial number)',
  `movie_name` VARCHAR(256) DEFAULT NULL COMMENT 'movie title',
  `introduce` VARCHAR(256) DEFAULT NULL COMMENT 'movie introduction',
  `star` VARCHAR(256) DEFAULT NULL COMMENT 'rating',
  `evaluate` VARCHAR(256) DEFAULT NULL COMMENT 'number of reviews',
  `depict` VARCHAR(256) DEFAULT NULL COMMENT 'movie description',
  PRIMARY KEY (`id`)
) ENGINE=INNODB DEFAULT CHARSET=utf8 COMMENT 'Douban table';

Create the table in the scrapy database.

scrapy crawl douban_spider --nolog

Run the spider (without printing the log).